Author: Denis Avetisyan

This review explores how deep learning techniques are being deployed to forecast electricity prices across diverse market timescales, from day-ahead to real-time balancing.

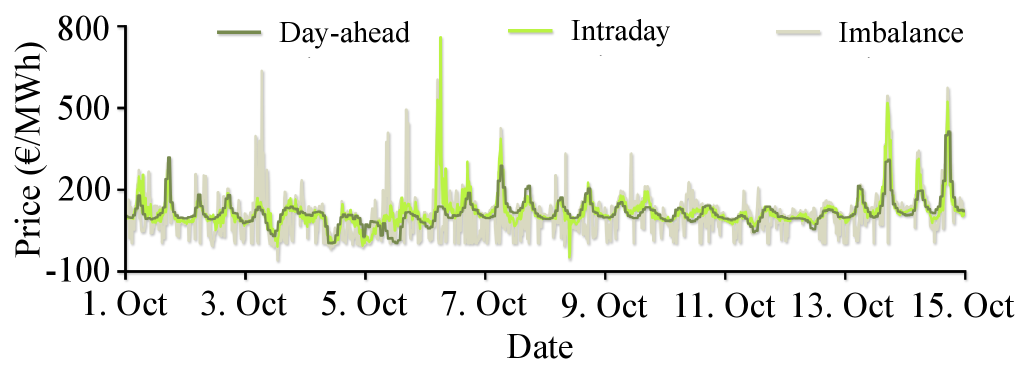

A comprehensive analysis of deep learning architectures and loss functions for short-term electricity price forecasting in day-ahead, intraday, and balancing markets.

Accurate electricity price forecasting is crucial for efficient power system operation, yet existing reviews lag behind the rapidly evolving landscape of deep learning techniques. This paper, ‘Deep Learning for Electricity Price Forecasting: A Review of Day-Ahead, Intraday, and Balancing Electricity Markets’, presents a structured analysis of deep learning applications across day-ahead, intraday, and balancing markets, introducing a novel taxonomy based on model backbone, head, and loss components. Our review reveals a clear trend toward probabilistic, microstructure-centric, and market-aware designs, while also highlighting a relative scarcity of research focused on intraday and balancing markets. Will future research prioritize market-specific modeling strategies to unlock further improvements in forecasting accuracy and grid efficiency?

The Inevitable Failure of Price Prediction (and Why We Keep Trying)

Efficient operation of modern energy markets fundamentally relies on the ability to accurately predict electricity prices. These forecasts aren’t merely financial tools; they are integral to maintaining grid stability by allowing system operators to anticipate demand and schedule generation resources effectively. Poor price forecasts lead market participants to bid and schedule suboptimally. This can create imbalances between supply and demand that must be corrected with costly balancing actions and, in extreme cases, can threaten security of supply. Furthermore, accurate price signals empower consumers to make informed decisions about their energy consumption, incentivizing efficiency and reducing overall costs. From large-scale power plant investments to individual household energy choices, reliable electricity price forecasting is thus a cornerstone of a sustainable and economically viable energy future, impacting every level of the power system.

Electricity price forecasting has long presented a substantial challenge due to the dynamic and often unpredictable nature of energy markets. Traditional statistical methods, like autoregressive models and simple regression analyses, frequently fall short when attempting to capture the non-linear relationships and rapid fluctuations characteristic of electricity pricing. These models often assume stable conditions and normal distributions, assumptions routinely violated by factors such as intermittent renewable energy sources, sudden shifts in demand due to weather events, and unforeseen disruptions in supply chains. Consequently, forecasts generated by these conventional techniques exhibit significant errors, particularly during periods of high volatility or extreme events, impacting the accuracy of grid operations and increasing financial risks for energy providers and consumers alike. The complexity arises not only from the sheer number of influencing variables but also from their intricate interactions and the constantly evolving market landscape.

Forecasting inaccuracies within electricity markets don’t remain isolated incidents; they cascade throughout the energy system, creating substantial inefficiencies and vulnerabilities. When price predictions deviate from reality, power generators, transmission operators, and retailers struggle to make informed decisions regarding energy procurement and distribution. This misallocation of resources leads to increased operational costs, potentially requiring the activation of expensive reserve capacity to compensate for unexpected demand or supply fluctuations. Furthermore, amplified forecasting errors heighten the financial risk for market participants, as incorrect predictions can result in substantial losses when procuring or selling electricity. Ultimately, these propagated errors undermine the stability and efficiency of the entire energy ecosystem, impacting both economic outcomes and the reliability of power delivery.

Deep Learning: A Temporary Stay of Execution

Deep learning techniques show promise for Electricity Price Forecasting (EPF) because they can model non-linear relationships within time-series data. Traditional statistical and machine learning models, such as ARIMA or linear regression, often struggle with complex patterns and high-dimensional datasets. Deep learning architectures, including recurrent neural networks (RNNs) and transformers, can automatically learn hierarchical representations from historical data and capture intricate dependencies that simpler models may miss. This capability is particularly valuable in EPF, where even small improvements in forecast accuracy can translate into significant economic benefits and where datasets frequently contain many interacting variables that influence future prices.

A deep learning model for EPF can be decomposed into three core components. The Backbone extracts relevant features and builds a meaningful representation of the input data, typically historical prices together with exogenous drivers such as load and renewable generation forecasts. The Head maps this representation to the output structure of the forecast, for example a single price per delivery period or a probability distribution that represents forecast uncertainty. Finally, the Loss Function quantifies the difference between the model’s predictions and the observed prices, providing the signal used during optimization, typically gradient descent, to adjust model parameters and improve forecasting accuracy.
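To make the decomposition concrete, here is a minimal sketch in standard-library Python. The names `make_backbone`, `make_point_head`, `mae_loss` and `forward` are hypothetical. A single tanh layer stands in for a real backbone such as an LSTM or Transformer, and the weights are arbitrary rather than trained. The sketch only shows that the three components plug together and can be swapped independently.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def make_backbone(weights: List[Vector]) -> Callable[[Sequence[float]], Vector]:
    """Backbone (hypothetical): one tanh layer mapping input features to a hidden representation."""
    def backbone(x: Sequence[float]) -> Vector:
        return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in weights]
    return backbone


def make_point_head(weights: Vector, bias: float) -> Callable[[Vector], float]:
    """Head (hypothetical): linear map from the hidden representation to one price forecast."""
    def head(h: Vector) -> float:
        return bias + sum(w * hi for w, hi in zip(weights, h))
    return head


def mae_loss(y_true: float, y_pred: float) -> float:
    """Loss: absolute error, the signal gradient descent would minimise during training."""
    return abs(y_true - y_pred)


def forward(x: Sequence[float], backbone, head) -> float:
    """Compose backbone and head into a complete forecasting model."""
    return head(backbone(x))


# Made-up, already standardised features (e.g. lagged price, load forecast, wind forecast).
backbone = make_backbone([[0.1, -0.2, 0.05], [0.0, 0.3, -0.1]])
head = make_point_head([10.0, -5.0], bias=50.0)
x = [1.2, 0.8, -0.5]
y_hat = forward(x, backbone, head)  # point forecast, illustrative EUR/MWh
loss = mae_loss(55.0, y_hat)        # compared against an invented observed price
```

Replacing the point head with one that emits several quantiles, and the absolute-error loss with a quantile loss, would turn the same backbone into a probabilistic forecaster without touching the feature extractor.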

Prior to 2022, EPF models were largely trained with pointwise loss functions such as Mean Absolute Error (MAE) and Mean Squared Error (MSE). Current practice shows a growing trend toward quantile-based forecasting, using losses such as the Pinball Loss to predict specific quantiles of the future price distribution. This shift allows models not only to predict a single expected value but also to provide probabilistic forecasts that quantify the uncertainty associated with each prediction. The Pinball Loss weights under-predictions by the quantile level τ and over-predictions by 1 − τ, so its minimizer is the τ-quantile rather than the mean (MSE) or the median (MAE). This asymmetry makes it well suited to the non-normal, heteroscedastic error distributions typical of electricity prices. Quantile-based forecasts in turn enable better risk management and decision-making in energy systems.
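The following standard-library Python sketch implements the Pinball Loss alongside MAE and MSE for comparison. The function names are hypothetical and not taken from any particular library. The price series, with one spike, and the flat forecast are invented for illustration.

```python
from typing import Sequence


def _check(y_true: Sequence[float], y_pred: Sequence[float]) -> None:
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    _check(y_true, y_pred)
    return sum(abs(y - q) for y, q in zip(y_true, y_pred)) / len(y_true)


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    _check(y_true, y_pred)
    return sum((y - q) ** 2 for y, q in zip(y_true, y_pred)) / len(y_true)


def pinball_loss(y_true: Sequence[float], y_pred: Sequence[float], tau: float) -> float:
    """Mean pinball (quantile) loss for quantile level tau in (0, 1)."""
    _check(y_true, y_pred)
    if not 0.0 < tau < 1.0:
        raise ValueError("tau must lie strictly between 0 and 1")
    total = 0.0
    for y, q in zip(y_true, y_pred):
        diff = y - q
        # Under-prediction (diff > 0) costs tau per unit; over-prediction costs (1 - tau) per unit.
        total += max(tau * diff, (tau - 1.0) * diff)
    return total / len(y_true)


prices = [50.0, 60.0, 120.0]   # invented hourly prices with one spike, EUR/MWh
forecast = [55.0, 55.0, 55.0]  # a flat forecast, scored as if it were each quantile
for tau in (0.1, 0.5, 0.9):
    print(tau, round(pinball_loss(prices, forecast, tau), 3))
```

The flat forecast scores far worse as a 90% quantile (about 21.2) than as a 10% quantile (about 3.8), because it sits well below the 120 EUR/MWh spike. At τ = 0.5 the loss is exactly half the MAE.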

Attention and Transformers: A Slightly More Sophisticated Illusion

Attention mechanisms address limitations of recurrent neural networks (RNNs) in processing long sequences of electricity price data by assigning weights to different parts of the input sequence. This allows the model to prioritize relevant historical data points when making predictions, capturing long-range dependencies that influence current prices. Unlike RNNs, which process data sequentially and may suffer from vanishing gradients, attention mechanisms let the model access any part of the input sequence directly, which improves performance in forecasting tasks where relationships between distant time steps matter. The attention weights represent the importance of each input element, enabling the model to focus dynamically on the most informative parts of the time series.
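A minimal sketch of scaled dot-product attention, the form used in Transformers, in standard-library Python. The keys are hypothetical hour-of-day encodings (sine and cosine of the hour angle) for four past hours. The values are invented prices observed at those hours, and the query encodes the hour being forecast. In a real model, queries, keys and values are learned projections of the inputs, and several attention heads run in parallel.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def attention(query: Sequence[float], keys: Sequence[Sequence[float]],
              values: Sequence[float]) -> Tuple[List[float], float]:
    """Return attention weights over past steps and the weighted context value."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    context = sum(w * v for w, v in zip(weights, values))
    return weights, context


def hour_encoding(hour: int) -> List[float]:
    """Hypothetical cyclical encoding of the hour of day."""
    angle = 2 * math.pi * hour / 24
    return [math.sin(angle), math.cos(angle)]


past_hours = [0, 6, 12, 18]
past_prices = [40.0, 55.0, 70.0, 90.0]  # invented prices, EUR/MWh
keys = [hour_encoding(h) for h in past_hours]
weights, context = attention(hour_encoding(12), keys, past_prices)
# The largest weight falls on past hour 12, whose encoding matches the query.
```

Every past step is scored against the query in one pass, so a distant but relevant hour can receive a high weight without information having to survive a long chain of recurrent updates.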

The Transformer architecture has achieved state-of-the-art results across numerous sequence modeling tasks, including machine translation, text summarization, and time series forecasting. Its core mechanism, self-attention, lets the model weigh the importance of different parts of the input sequence when processing information, mitigating the vanishing gradient problem inherent in recurrent neural networks. In Electricity Price Forecasting (EPF), Transformers are increasingly used because they capture complex temporal dependencies and handle long sequences of historical price data. They are frequently reported to outperform traditional methods such as ARIMA, as well as earlier neural network architectures, across both short- and long-term forecasting horizons, which has driven their growing adoption in energy trading and grid management.

In intraday markets, Transformer architectures are applied to trajectory forecasting, predicting how prices evolve as continuous trading progresses, a capability that supports real-time electricity trading and grid management decisions. In day-ahead forecasting, current research indicates a shift from the simpler Transformer backbones prevalent between 2018 and 2020 toward larger-scale architectures. This evolution includes Mixture-of-Experts (MoE) layers, which increase model capacity, and graph neural network structures, which better represent relationships within the electricity network. Both aim to improve forecast accuracy and capture complex dependencies in electricity price data.
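A Mixture-of-Experts layer routes each input to a small number of expert sub-networks chosen by a learned gate. The sketch below, in standard-library Python, shows generic top-k softmax gating. The two linear "experts", the gate weights and the input features are hypothetical and are not taken from any model covered by the review.

```python
import math
from typing import Callable, List, Sequence

Expert = Callable[[Sequence[float]], float]


def moe_forward(x: Sequence[float], experts: List[Expert],
                gate_weights: List[List[float]], top_k: int = 1) -> float:
    """Top-k gated mixture of experts producing a scalar output."""
    # One gate logit per expert, from a linear scoring of the input.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Sparse routing: keep only the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # A softmax over the kept logits gives the mixing weights.
    m = max(logits[i] for i in chosen)
    exps = {i: math.exp(logits[i] - m) for i in chosen}
    z = sum(exps.values())
    # Only the chosen experts are evaluated.
    return sum(exps[i] / z * experts[i](x) for i in chosen)


# Hypothetical features: x[0] = scarcity indicator, x[1] = renewable share, both in [0, 1].
experts: List[Expert] = [
    lambda x: 40.0 + 30.0 * x[0],  # "tight system" regime
    lambda x: 10.0 + 5.0 * x[0],   # "high renewables" regime
]
gate = [[2.0, -2.0], [-2.0, 2.0]]

print(moe_forward([0.8, 0.2], experts, gate, top_k=1))  # routed to the tight-system expert
print(moe_forward([0.5, 0.5], experts, gate, top_k=2))  # equal logits, so a 50/50 blend
```

Because only the top_k experts are evaluated for each input, total capacity can grow with the number of experts while per-input computation stays roughly constant.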

The Futility of Ignoring the Rules

Predictive modeling in energy markets often falls short when it relies solely on standard deep learning techniques; a frequently overlooked element is the logic that governs these systems. Effective forecasting demands an understanding of the underlying market mechanisms, the rules dictating how supply and demand interact to determine prices. Simply feeding historical data into a complex algorithm ignores the contextual information that drives price fluctuations, limiting the model’s ability to generalize and predict future behavior. By explicitly incorporating knowledge of these mechanisms, such as the protocols of a balancing market or the dynamics of order books, models can move beyond pattern recognition toward representing the forces that actually set prices, which can improve both forecasting accuracy and reliability.

Mechanism-aware modeling represents a significant advancement in forecasting by directly embedding the rules and dynamics of energy markets into predictive algorithms. Specifically, within the balancing market – where electricity supply and demand are continuously reconciled – these models move beyond simply analyzing historical price data. Instead, they simulate how market participants react to imbalances, considering factors like generation costs, reserve capacities, and network constraints. This approach allows the system to anticipate price fluctuations not just from overall supply and demand, but from the specific mechanisms governing how those forces interact. By understanding these inherent market rules, the models achieve a more nuanced and accurate prediction of price movements, ultimately improving the reliability and efficiency of energy trading and grid operation.
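As a deliberately simplified illustration of such a mechanism, the sketch below clears a balancing energy market in merit order under a pay-as-clear rule. Bids are activated from cheapest to most expensive until the system imbalance is covered, and the last activated bid sets the price. The `Bid` type, the sign convention (a positive imbalance means the system is short) and all numbers are assumptions. Actual balancing markets add activation rules, network constraints and imbalance-settlement schemes that vary by country.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Bid:
    price: float   # EUR/MWh
    volume: float  # MW offered


def marginal_balancing_price(imbalance_mw: float, up_bids: List[Bid],
                             down_bids: List[Bid]) -> Optional[float]:
    """Simplified pay-as-clear balancing price (hypothetical model).

    imbalance_mw > 0: system short, activate upward bids from the cheapest up.
    imbalance_mw < 0: system long, activate downward bids from the highest price down.
    Returns None when no activation is needed.
    """
    if imbalance_mw == 0:
        return None
    if imbalance_mw > 0:
        merit_order = sorted(up_bids, key=lambda b: b.price)
    else:
        merit_order = sorted(down_bids, key=lambda b: b.price, reverse=True)
    needed = abs(imbalance_mw)
    activated = 0.0
    for bid in merit_order:
        activated += bid.volume
        if activated >= needed:
            return bid.price  # the marginal (last activated) bid sets the price
    raise ValueError("insufficient balancing capacity for this imbalance")


up = [Bid(80.0, 50.0), Bid(60.0, 100.0), Bid(120.0, 50.0)]
down = [Bid(20.0, 80.0), Bid(35.0, 60.0)]
print(marginal_balancing_price(120.0, up, down))   # 80.0
print(marginal_balancing_price(-100.0, up, down))  # 20.0
```

Even this toy version shows why balancing prices can jump abruptly: a short position of 150 MW clears at 80 EUR/MWh, while 151 MW reaches the next step of the merit order at 120 EUR/MWh. A mechanism-aware model can exploit this step structure instead of treating such jumps as noise.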

Recent work in short-term price forecasting points to clear benefits from combining granular order book data with established market rules. Unlike traditional methods that rely on aggregated price indices or manually engineered features, this approach draws on the detailed information in the order book, with up to 384 distinct features capturing the depth and dynamics of buy and sell orders. Models that directly represent order book dynamics and their interplay with market mechanisms are reported to achieve higher accuracy in forecasting short-term price fluctuations. This detailed modeling gives a more nuanced picture of supply and demand imbalances, translating into more reliable predictions and better decision-making in dynamic market environments.
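The following standard-library Python sketch computes a few common order book features from a snapshot of an intraday order book: mid-price, bid-ask spread, depth imbalance and volume-weighted prices over the top levels. These are illustrative choices, not the 384-feature set described above. The function name, the `depth` parameter and the snapshot values are hypothetical, and volumes are assumed to be positive.

```python
from typing import Dict, List, Tuple

Level = Tuple[float, float]  # (price in EUR/MWh, volume in MW)


def orderbook_features(bids: List[Level], asks: List[Level], depth: int = 3) -> Dict[str, float]:
    """Summarise the top `depth` levels on each side of an order book snapshot."""
    top_bids = sorted(bids, key=lambda lv: lv[0], reverse=True)[:depth]  # best bid first
    top_asks = sorted(asks, key=lambda lv: lv[0])[:depth]                # best ask first
    if not top_bids or not top_asks:
        raise ValueError("both sides of the book must be non-empty")
    best_bid, best_ask = top_bids[0][0], top_asks[0][0]
    bid_vol = sum(v for _, v in top_bids)
    ask_vol = sum(v for _, v in top_asks)
    return {
        "mid_price": (best_bid + best_ask) / 2,
        "spread": best_ask - best_bid,
        # +1 means all visible volume is on the buy side, -1 means all on the sell side.
        "depth_imbalance": (bid_vol - ask_vol) / (bid_vol + ask_vol),
        "bid_vwap": sum(p * v for p, v in top_bids) / bid_vol,
        "ask_vwap": sum(p * v for p, v in top_asks) / ask_vol,
    }


snapshot_bids = [(49.5, 10.0), (49.0, 20.0), (48.0, 5.0), (47.0, 100.0)]
snapshot_asks = [(50.5, 5.0), (51.0, 15.0), (53.0, 10.0)]
features = orderbook_features(snapshot_bids, snapshot_asks)
print(features)
```

In practice such features would be computed for every snapshot, often at several depths, and stacked into the model's input sequence. This is how the feature count can grow into the hundreds.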

The pursuit of increasingly complex deep learning architectures for electricity price forecasting feels…predictable. This paper meticulously charts that evolution, from basic backbones to elaborate heads and loss functions, across day-ahead, intraday, and balancing markets. It’s a detailed catalog of solutions chasing a moving target. As Jean-Jacques Rousseau observed, “The more we sweat, the more we forget.” Each novel approach, each optimized loss function, is a temporary reprieve. Production data will inevitably expose limitations, revealing the next refinement needed. The promise of a perfect forecasting model remains elusive; these are simply expensive ways to complicate everything, and the cost of maintaining these systems will, ultimately, outweigh the gains. The core idea, market-specific designs, is sound, but the cycle of innovation feels less revolutionary and more…inevitable.

What’s Next?

The pursuit of ever-more-complex deep learning architectures for electricity price forecasting will, predictably, continue. One anticipates a proliferation of ‘novel’ combinations of backbones, heads, and loss functions, each promising marginal gains over its predecessor, gains quickly eroded by the inherent noise of energy markets and the inevitable introduction of new regulatory quirks. The current focus on market microstructure, while admirable, risks becoming a distraction: a relentless optimization for increasingly granular data that overlooks the fundamental unpredictability of human behavior and geopolitical events.

It seems likely that the field will cycle through phases of architectural innovation followed by periods of desperate attempts to explain why those architectures work, or more often, don’t. The ‘black box’ nature of these models will remain a persistent concern, particularly as they are deployed in increasingly critical infrastructure. Expect a surge in ‘explainable AI’ papers, most of which will offer post-hoc rationalizations rather than genuine insights into model decision-making.

Ultimately, the quest for perfect electricity price prediction is a fool’s errand. These models will become increasingly sophisticated tools for managing uncertainty, rather than eliminating it. And, inevitably, when the next paradigm arrives (quantum machine learning, perhaps), it will be touted as a revolution, before revealing itself to be just the old thing with worse docs.

Original article: https://arxiv.org/pdf/2602.10071.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-11 12:23