Author: Denis Avetisyan

New research explores whether artificial intelligence agents exhibit predictable behavioral patterns in financial markets, specifically when shifting between different investment strategies.

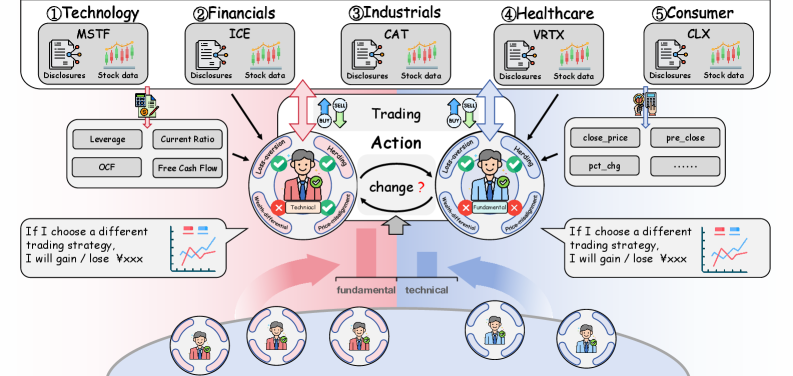

This study validates behavioral consistency in AI agents using stock-market simulation and agent-based modeling, revealing partial alignment with behavioral finance theories regarding style switching.

While agent-based modeling increasingly leverages large language models (LLMs) to simulate financial markets, a critical gap remains in validating the behavioral realism of these agents. This work, ‘Behavioral Consistency Validation for LLM Agents: An Analysis of Trading-Style Switching through Stock-Market Simulation’, addresses this by assessing whether LLM agents’ dynamic shifts between fundamental and technical trading styles align with established behavioral-finance theories. Our year-long stock-market simulations reveal only partial consistency between agent behavior and principles like loss aversion and herding, suggesting current LLMs struggle to fully replicate the nuanced decision-making of human traders. Can future refinements in prompting and agent architecture bridge this gap and unlock more reliable and insightful financial simulations?

The Illusion of Rationality: Why Markets Whisper, They Don’t Calculate

Conventional financial modeling frequently operates under the premise of homo economicus – the rational actor consistently making optimal decisions. However, this foundational assumption overlooks the inherent complexities of human psychology. Real-world investors are demonstrably subject to cognitive biases – systematic patterns of deviation from norm or rationality in judgment – and emotional influences, such as fear and greed. These factors routinely lead to decisions that diverge from purely logical calculations, creating market anomalies and unpredictable fluctuations. The reliance on rational actor models, therefore, often results in simulations that fail to adequately represent actual market behavior and can underestimate the potential for both rapid growth and sudden, substantial declines. Consequently, a growing body of research emphasizes the necessity of incorporating behavioral insights to create more realistic and robust financial projections.

These models falter most visibly when forecasting market swings and sudden collapses. Fear and greed create feedback loops and herd behavior that a rational-actor model cannot anticipate. For example, investors tend to overreact to negative news and to follow the crowd during bubbles, which introduces volatility that is not driven by changes in economic fundamentals. As a result, such models often underestimate the probability of extreme events, leaving investors and institutions exposed to unforeseen risks and substantial losses when market sentiment overrides logical analysis.

The pursuit of realistic market simulations necessitates moving beyond purely quantitative models and integrating the principles of behavioral finance, a field recognizing that investors are not always rational actors. However, translating the complexities of human psychology – encompassing biases like loss aversion, herd behavior, and overconfidence – into computational terms presents formidable challenges. Each cognitive quirk requires nuanced mathematical representation, drastically increasing model parameters and computational demands. Simulating a market with even a modest number of agents, each exhibiting realistic behavioral traits, quickly escalates into a high-dimensional problem demanding substantial processing power and sophisticated algorithms. Furthermore, calibrating these behavioral parameters – determining the precise weight given to each bias – requires extensive datasets and robust statistical techniques, often hampered by the inherent unpredictability of market participants and the difficulty of isolating specific behavioral influences. The resulting computational burden often forces researchers to make simplifying assumptions, potentially sacrificing the very realism they aim to achieve.

Awakening the Agents: LLMs and the New Alchemy of Market Simulation

Agent-Based Modeling (ABM) is a computational technique used to simulate the behaviors and interactions of numerous autonomous agents within a defined system. Unlike traditional modeling approaches that rely on aggregate data and statistical relationships, ABM focuses on the individual decision-making processes of each agent and how these decisions, when combined, lead to emergent system-level outcomes. Each agent is programmed with specific attributes, rules, and learning capabilities, allowing it to react to its environment and interact with other agents. This bottom-up approach facilitates the exploration of complex phenomena in fields such as economics, social science, and epidemiology, where system behavior arises from decentralized interactions rather than centralized control. Integrating Large Language Models (LLMs) extends ABM by letting agents act on natural language understanding and generation rather than on fixed rules alone.

Integrating Large Language Models (LLMs) into Agent-Based Modeling (ABM) enhances agent decision-making capabilities beyond traditional rule-based or statistical approaches. LLMs enable agents to process and interpret unstructured data, such as news articles, social media feeds, and earnings reports, to inform their actions. This allows for the simulation of cognitive biases, emotional responses, and adaptive learning, resulting in more realistic and nuanced behaviors. Unlike conventional ABM agents that rely on pre-defined parameters, LLM-powered agents can generate responses based on contextual understanding, increasing the fidelity of simulated market dynamics and providing a more granular representation of individual and collective behavior.

The integration of Large Language Models into Agent-Based Modeling enables the systematic investigation of behavioral biases and their quantifiable impact on market dynamics. Specifically, agents can be programmed to exhibit cognitive biases – such as loss aversion, where potential losses exert a disproportionately strong influence on decision-making, and herding behavior, where agents mimic the actions of others – allowing researchers to observe emergent market phenomena resulting from these individual behaviors. Simulations can then be run to assess how the prevalence of these biases affects metrics like price volatility, trading volume, and market equilibrium, providing insights beyond those offered by purely rational economic models. This approach allows for controlled experiments to determine the magnitude and direction of bias-induced deviations from efficient market outcomes.
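To make the idea of loss aversion concrete, here is a minimal sketch of the value function from cumulative prospect theory (Tversky and Kahneman, 1992). It is an illustration only: the source does not say how the paper measures loss aversion, and the function name `prospect_value` and its default parameters (the commonly cited estimates of 0.88 for curvature and 2.25 for the loss-aversion coefficient) are assumptions brought in for this example.

```python
def prospect_value(x, alpha=0.88, beta=0.88, lam=2.25):
    """Subjective value of a gain or loss x relative to a reference point.

    alpha and beta control curvature for gains and losses; lam > 1 makes
    losses weigh more than equally sized gains. The defaults are the
    commonly cited Tversky-Kahneman estimates, used here as an assumption.
    """
    if x >= 0:
        return x ** alpha
    return -lam * (-x) ** beta


if __name__ == "__main__":
    gain = prospect_value(100.0)
    loss = prospect_value(-100.0)
    # With alpha == beta, a loss is felt lam times as strongly as an equal gain.
    print(f"value of +100: {gain:.2f}, value of -100: {loss:.2f}")
```

An agent that scores outcomes with a function like this will hold losing positions or avoid risky trades more often than a risk-neutral agent would, which is the kind of asymmetry a loss-aversion test looks for.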

The core of this framework is a financial market simulation built upon the principles of Agent-Based Modeling (ABM). Traditional ABM constructs agents with predefined rules governing their interactions; however, this implementation extends that functionality by integrating Large Language Models (LLMs). This LLM integration enables agents to exhibit more dynamic and realistic behaviors, moving beyond static rule sets to generate responses based on contextual understanding and learned patterns within the simulated market. The resulting system allows for the modeling of complex interactions and emergent phenomena not easily captured in conventional financial simulations.
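The loop below is a toy sketch of such a simulation, not the paper's implementation. Every name in it (`Agent`, `choose_style`, `simulate`, `FUNDAMENTAL_VALUE`) is hypothetical, the hand-written rule in `choose_style` merely stands in for the point where an LLM call would go, and the price-impact and noise coefficients are arbitrary. It only shows how style switching between fundamental and technical trading can be wired into an agent-based market over roughly one trading year.

```python
import random
from dataclasses import dataclass

FUNDAMENTAL_VALUE = 100.0  # hypothetical fair value of the single asset


@dataclass
class Agent:
    style: str  # "fundamental" or "technical"

    def choose_style(self, price, last_return, peer_share_technical):
        """Placeholder for the LLM decision; this rule is an assumption."""
        mispricing = abs(price - FUNDAMENTAL_VALUE) / FUNDAMENTAL_VALUE
        if mispricing > 0.10:
            # A large gap to fair value pulls the agent toward fundamentals.
            self.style = "fundamental"
        elif peer_share_technical > 0.5 and abs(last_return) > 0.01:
            # A technical majority plus a visible price move pulls it to the crowd.
            self.style = "technical"

    def order(self, price, last_return):
        """Return +1 (buy), -1 (sell), or 0 (hold)."""
        if self.style == "fundamental":
            signal = FUNDAMENTAL_VALUE - price  # buy below fair value
        else:
            signal = last_return  # follow the most recent move
        return (signal > 0) - (signal < 0)


def simulate(n_agents=20, n_days=250, seed=0):
    """Run the toy market; return the price path and the style-switch count."""
    rng = random.Random(seed)
    agents = [Agent(rng.choice(["fundamental", "technical"])) for _ in range(n_agents)]
    price, last_return = FUNDAMENTAL_VALUE, 0.0
    prices, switches = [price], 0
    for _ in range(n_days):
        share_tech = sum(a.style == "technical" for a in agents) / n_agents
        net_demand = 0
        for agent in agents:
            before = agent.style
            agent.choose_style(price, last_return, share_tech)
            switches += agent.style != before
            net_demand += agent.order(price, last_return)
        # Linear price impact plus Gaussian noise; both coefficients are arbitrary.
        change = 0.02 * net_demand / n_agents + rng.gauss(0, 0.01)
        new_price = max(0.01, price * (1 + change))
        last_return = new_price / price - 1
        price = new_price
        prices.append(price)
    return prices, switches


if __name__ == "__main__":
    prices, switches = simulate()
    print(f"final price {prices[-1]:.2f}, style switches {switches}")
```

In an LLM-driven version, `choose_style` would build a prompt from the same inputs (price, recent return, what other agents are doing) and parse the model's reply, while the surrounding market loop could stay unchanged.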

Echoes of Reality: Validating Behavioral Consistency in Simulated Markets

Simulations using Large Language Models (LLMs) as agents produced behavioral patterns that align only partially with established concepts in behavioral finance. The agents showed tendencies toward both herding, in which they mirror the actions of other agents, and style switching, in which they change investment strategy in response to perceived market conditions. Behavioral consistency was validated in part by observing how agents reacted to price discrepancies. The results suggest that LLMs can reproduce some economically relevant decision-making biases, but the strength of each effect varied by model. The tested models included GPT-4o-mini, Deepseek-Chat, Gemini-2.5-flash-lite-thinking-8192, and Qwen-2.5-72B-Instruct.

Agent behavior within the simulated markets showed both herding and style switching in response to market signals. Herding appeared as agents adjusted their trading positions to align with the actions of other agents, indicating susceptibility to social influence. Style switching appeared as agents moved between fundamental and technical trading styles based on perceived market conditions and price fluctuations. These adjustments suggest the agents were not following static strategies but adapting to market data as the simulation progressed. For the Qwen model, the correlation between herding alignment and observed behavior was a moderate 0.45.

Behavioral Consistency Validation was used to assess how agents respond to artificial price discrepancies within the simulated market. The process involved introducing deliberately misaligned prices for specific assets and monitoring subsequent agent trading behavior. Across all tested Large Language Models (LLMs), agents adjusted their buying and selling activity in response to these misalignments, but the sensitivity was weak. The Common Language Effect Size was below 0.5, the value that corresponds to no effect, which indicates limited alignment with the expected pattern. The agents do register and react to gaps between expected and actual asset prices, but only at a basic level.

Agent decision-making within the simulated markets was driven by several Large Language Models (LLMs), including GPT-4o-mini, Deepseek-Chat, Gemini-2.5-flash-lite-thinking-8192, and Qwen-2.5-72B-Instruct. The loss-aversion test reached statistical significance (p < 0.05) only for GPT-4o-mini, indicating a tendency to prioritize avoiding losses over acquiring equivalent gains. Under these simulated conditions, GPT-4o-mini therefore behaved in line with a core principle of behavioral finance. The other models did not show statistically significant loss aversion at this threshold.

Analysis utilizing the Qwen model demonstrated a moderate positive correlation of 0.45 between herding alignment – the degree to which an agent’s decisions mirrored those of other agents – and observed behavioral patterns within the simulated market. This indicates a tendency for agents powered by Qwen to exhibit herding behavior, meaning their actions were positively associated with the prevailing trends exhibited by the agent population. The correlation value suggests a noticeable, but not overwhelmingly strong, relationship between the alignment score and the actual manifestation of herding within the simulation, implying that while the model demonstrates this behavior, other factors also influence individual agent decisions.
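For readers who want to see what a figure like 0.45 measures, the sketch below computes a Pearson correlation coefficient from two equal-length lists. The source does not say whether the paper reports Pearson or a rank-based coefficient, so the choice of Pearson is an assumption, and the function name `pearson_r` and the sample numbers are invented for illustration.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


if __name__ == "__main__":
    # Made-up data: a per-agent herding score and a per-agent behavior measure.
    herding_alignment = [0.2, 0.5, 0.4, 0.9, 0.7, 0.3]
    observed_behavior = [0.1, 0.6, 0.2, 0.5, 0.8, 0.4]
    print(f"r = {pearson_r(herding_alignment, observed_behavior):.2f}")
```

A coefficient near 0.45 means the two series tend to rise together but leave most of the variation in one unexplained by the other, which matches the reading of a noticeable but not dominant relationship.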

Cliff’s delta for wealth differentiation was 0.38, a moderate effect size, indicating that agents powered by the GPT-4o-mini model exhibited a discernible preference for wealth differentiation. Here the metric quantifies the degree to which agents consistently favored strategies that led to divergent wealth outcomes among the simulated population. A value of 0.38 suggests a moderate, but not overwhelming, tendency for these agents to prioritize strategies resulting in some level of wealth disparity, relative to agents not explicitly aligned to wealth differentiation principles.
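Cliff’s delta has a simple definition that is easy to sketch: compare every value in one group with every value in the other, then take the share of pairs where the first is larger minus the share where it is smaller. The function name `cliffs_delta` and the sample data below are illustrative assumptions. The source does not describe which two groups the paper compares.

```python
def cliffs_delta(xs, ys):
    """Cliff's delta: P(x > y) - P(x < y) over all pairs, in [-1, 1]."""
    if not xs or not ys:
        raise ValueError("both groups must be non-empty")
    greater = sum(x > y for x in xs for y in ys)
    less = sum(x < y for x in xs for y in ys)
    return (greater - less) / (len(xs) * len(ys))


if __name__ == "__main__":
    # Made-up scores for two hypothetical groups of agents.
    group_a = [0.6, 0.7, 0.4, 0.9, 0.5]
    group_b = [0.3, 0.5, 0.6, 0.2, 0.4]
    print(f"delta = {cliffs_delta(group_a, group_b):.2f}")
```

A delta of 0 means the two groups overlap completely, while 1 or -1 means every value in one group exceeds every value in the other. Because it only uses order comparisons, the measure makes no assumption about the shape of either distribution.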

Analysis of price misalignment sensitivity across all tested Large Language Models (GPT-4o-mini, Deepseek-Chat, Gemini-2.5-flash-lite-thinking-8192, and Qwen-2.5-72B-Instruct) yielded a Common Language Effect Size (CLES) below 0.5. Because a CLES of 0.5 corresponds to no effect, this indicates weak and inconsistent responsiveness to price discrepancies among the agents powered by these models. While agents showed some reaction to mispricing, the effect was not strong enough to establish robust alignment with the expected pattern, suggesting a limit on the models’ ability to consistently simulate this aspect of rational economic behavior.
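The CLES can be read as a probability: pick one observation at random from each group, and ask how often the first exceeds the second. The sketch below computes it by brute force, counting ties as half a win. The function name `cles` and the sample data are illustrative assumptions, and the source does not specify how the paper defines its two groups.

```python
def cles(xs, ys):
    """Common Language Effect Size: P(x > y) + 0.5 * P(x == y) over all pairs."""
    if not xs or not ys:
        raise ValueError("both groups must be non-empty")
    wins = sum(x > y for x in xs for y in ys)
    ties = sum(x == y for x in xs for y in ys)
    return (wins + 0.5 * ties) / (len(xs) * len(ys))


if __name__ == "__main__":
    # Made-up trading responses with and without an injected mispricing.
    with_mispricing = [0.2, 0.4, 0.1, 0.3]
    without_mispricing = [0.3, 0.5, 0.2, 0.4]
    print(f"CLES = {cles(with_mispricing, without_mispricing):.2f}")
```

A value of 0.5 means a coin flip, so a result below 0.5 says the treated group exceeded the comparison group less often than chance would. With ties counted as half, the measure is tied to Cliff’s delta by delta = 2 * CLES - 1.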

Beyond Equilibrium: Implications and Future Directions in Financial Modeling

Recent advancements point to the potential of Large Language Model-based Agent-Based Modeling (LLM-ABM) to improve the precision and realism of financial modeling. Traditional models often rely on simplified assumptions about investor behavior, leading to inaccuracies when simulating complex market dynamics. LLM-ABM instead allows for agents with more nuanced behaviors, driven by their ability to process and respond to information in ways that resemble human cognition. This approach moves beyond purely rational actor models by incorporating sentiment, cognitive biases, and adaptive learning. Given the partial consistency reported above, such simulations may come to replicate observed market patterns, including volatility clusters, price bubbles, and the impact of news events, but that ability still has to be validated model by model.

Financial modeling traditionally relies on assumptions of rational actors and efficient markets, yet real-world financial systems are demonstrably influenced by psychological biases and emergent behaviors. Integrating principles from behavioral finance, such as loss aversion, herding, and overconfidence, into agent-based models creates simulations that more accurately reflect these influences. This approach allows for the exploration of how cognitive biases contribute to market anomalies like bubbles and crashes, and crucially, improves the robustness of models when subjected to crisis scenarios. By acknowledging that investors aren’t purely rational, these simulations can better predict systemic risks and provide more realistic assessments of portfolio vulnerability, ultimately enhancing risk management strategies and potentially mitigating the impact of future financial instability.

Ongoing research prioritizes expanding the scope of these agent-based models to encompass broader and more intricate financial ecosystems. Current efforts are directed toward increasing computational efficiency to facilitate simulations involving a significantly larger number of agents and assets, moving beyond simplified market representations. Furthermore, investigators aim to refine agent behaviors, incorporating advanced cognitive models and machine learning techniques to capture nuanced decision-making processes. This includes simulating diverse investment horizons, risk tolerances, and information processing capabilities among agents, ultimately leading to more realistic and robust financial simulations capable of predicting emergent market phenomena and stress-testing investment strategies under a wider range of conditions.

The precision with which agent behavior is modeled within stock market simulations carries substantial weight for both risk mitigation and the refinement of investment approaches. By simulating how individual agents – representing diverse investor profiles – utilize techniques like technical and fundamental analysis, researchers can observe emergent market dynamics and stress-test portfolios against various scenarios. This capability moves beyond traditional, equilibrium-based models, allowing for the exploration of non-linear effects and the potential for cascading failures. Consequently, financial institutions can leverage these simulations to assess systemic risk more accurately, optimize capital allocation, and develop more resilient investment strategies that account for the inherent unpredictability of human-driven markets. The potential extends to evaluating the impact of new regulations or market events before implementation, offering a proactive approach to financial stability and informed decision-making.

The pursuit of predictable behavior in these language-model agents feels less like science, and more like attempting to chart the currents of a restless sea. This paper’s exploration of style switching, the tendency for agents to shift trading strategies, reveals a flicker of alignment with established behavioral finance, yet falls short of true human mimicry. It’s a comforting delusion to believe a model will consistently rationalize its actions. As Ludwig Wittgenstein observed, “The limits of my language mean the limits of my world.” These agents, bound by the confines of their training data, demonstrate a similar limitation; their ‘world’ of financial decision-making remains a curated, incomplete echo of the messy reality. Everything unnormalized is still alive, and these models, despite their sophistication, remain stubbornly, beautifully, incomplete.

The Road Ahead

This exercise in coaxing artificial minds to mimic market behavior reveals, predictably, not sentience, but echoes. The agents demonstrate a tendency toward style switching, aligning with observations of human irrationality – a comfort, perhaps, in a world increasingly mediated by algorithms. But to mistake correlation for comprehension would be a category error. The observed shifts are, at present, more akin to stochastic wandering than deliberate strategy, a difference the metrics conveniently fail to address.

The true challenge isn’t replicating what humans do, but understanding why. Behavioral finance offers theories, but these are post-hoc narratives, stories the market tells itself. To imbue an agent with genuine adaptability requires not just data, but a model of self-preservation, of risk aversion beyond simple loss functions. And that, of course, implies a simulated consciousness: a convenient fiction, but a necessary one if these agents are to move beyond sophisticated pattern recognition.

Future work will undoubtedly focus on more complex simulations, larger datasets, and increasingly elaborate reward structures. But a deeper question lingers: are these agents learning, or are we simply learning to rationalize their inevitable failures? The pursuit of artificial intelligence in finance isn’t about predicting the future; it’s about creating increasingly convincing illusions of control.

Original article: https://arxiv.org/pdf/2602.07023.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-10 16:04