Author: Denis Avetisyan

A new AI framework uses reinforcement learning to drastically speed up and improve the process of identifying optimal locations for affordable housing developments.

This paper details AURA, a multi-agent reinforcement learning system that balances cost, regulatory compliance, and urban planning needs for efficient site selection.

Addressing the global affordable housing crisis is hampered by protracted site selection processes and complex regulatory landscapes. This paper introduces AURA, an autonomous, multi-agent reinforcement learning system detailed in ‘Autonomous AI Agents for Real-Time Affordable Housing Site Selection: Multi-Objective Reinforcement Learning Under Regulatory Constraints’, designed to optimize this process under stringent constraints. AURA achieves 94.3% regulatory compliance while improving Pareto hypervolume by 37.2% across eight U.S. metros, significantly reducing selection time and identifying more viable, impactful sites. Could this approach unlock scalable, data-driven solutions for equitable urban development and reshape the future of affordable housing access?

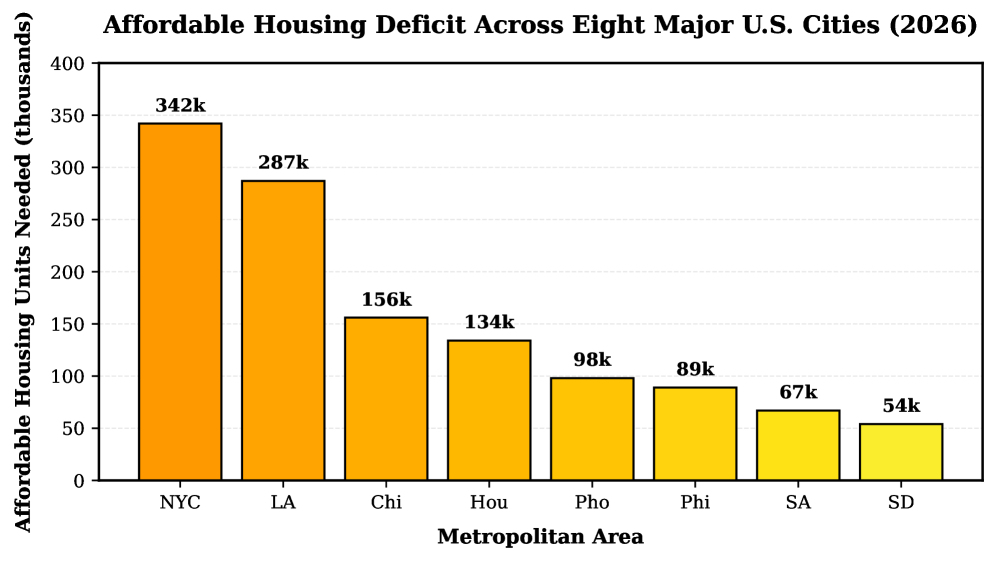

The Weight of Need: Understanding the Affordable Housing Crisis

The scope of the global affordable housing crisis is staggering, impacting an estimated 2.8 billion people worldwide – a figure representing over one-third of the planet’s population. This isn’t merely a statistical challenge; it represents a fundamental deprivation of safety, stability, and opportunity, acutely felt across diverse economic strata and geographic regions. Traditional housing models are demonstrably failing to keep pace with escalating need, necessitating a paradigm shift toward inventive strategies. These solutions must extend beyond incremental improvements and embrace scalable, impactful interventions – from innovative construction technologies and financing mechanisms to policy reforms that prioritize inclusive access. The sheer scale of the problem underscores the urgency for collaborative, data-driven approaches that move beyond addressing symptoms to tackling the root causes of housing insecurity and fostering genuinely equitable communities.

The prevailing strategies for increasing affordable housing stock, such as the Low-Income Housing Tax Credit (LIHTC) program, are increasingly demonstrating limitations in meeting the scale of current need. While LIHTC has financed a significant number of developments, its complex application process, reliance on tax equity, and geographically uneven distribution hinder its efficiency. Studies reveal substantial delays between funding allocation and project completion, alongside a concentration of investment in already-desirable areas, leaving many communities underserved. This system often favors larger developers with established financial connections, creating barriers for smaller, community-based organizations. Consequently, the pace of construction fails to keep up with the growing number of households priced out of the housing market, necessitating a reevaluation of current approaches and exploration of more streamlined, equitable solutions.

The concentration of affordable housing need within specific geographic areas – designated as Difficult Development Areas (DDAs) and Qualified Census Tracts (QCTs) – underscores deep-seated inequities in access. These areas, often characterized by environmental hazards, limited infrastructure, and historical disinvestment, consistently exhibit higher concentrations of low-income residents and people of color. The continued reliance on development within these already burdened communities, rather than proactively expanding opportunities into higher-resource areas, perpetuates cycles of poverty and limits upward mobility. This spatial mismatch between affordable housing availability and access to quality education, employment, and healthcare creates significant barriers for residents, highlighting the need for strategies that prioritize equitable distribution and inclusive community development beyond simply increasing housing units within historically disadvantaged zones.

Successfully mitigating the affordable housing crisis demands a fundamental restructuring of how and where new developments are planned. Current strategies often rely on broad geographic targeting, yielding inefficient outcomes and perpetuating existing inequalities. A data-driven approach, however, leverages granular datasets – including land costs, zoning regulations, proximity to essential services, transportation networks, and demographic needs – to pinpoint optimal sites for construction. This optimization isn’t merely about minimizing expenses; it’s about maximizing positive impact by strategically locating housing where it best serves vulnerable populations and fosters equitable access to opportunity. By prioritizing sites identified through rigorous analysis, developers and policymakers can streamline project approvals, reduce construction timelines, and ultimately deliver more affordable units to those who need them most, fostering sustainable and inclusive communities.

AURA: Intelligent Site Selection Through Autonomous Systems

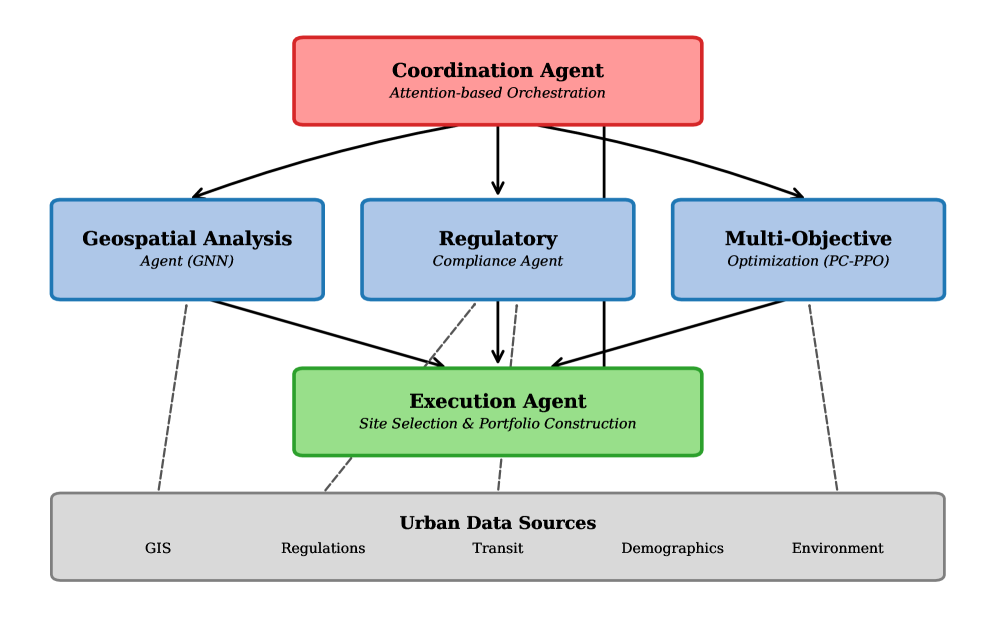

AURA is an autonomous framework designed to identify optimal locations for affordable housing development utilizing multi-agent reinforcement learning. The system operates without direct human intervention in the site selection process, learning through interaction with a simulated environment and geospatial data. This approach allows AURA to evaluate a large number of potential sites based on defined criteria, and iteratively improve its decision-making capabilities. The framework’s autonomy is achieved through the implementation of independent agents that collaborate to explore the solution space and identify sites that best meet pre-defined objectives, such as minimizing costs, maximizing accessibility, and adhering to regulatory constraints.

AURA’s decision-making process is fundamentally structured around a Constrained Multi-Objective Markov Decision Process (CMO-MDP). This framework allows for the simultaneous optimization of multiple, often conflicting, objectives – such as minimizing development cost, maximizing housing unit density, and adhering to zoning regulations – within defined constraints. The CMO-MDP formulates the site selection problem as a sequential decision process where an agent interacts with an environment, receiving rewards based on objective achievement and penalties for constraint violations:

$$\text{Reward} = f(\text{Objectives}) - \lambda \cdot \text{Constraint Violation}$$

The parameter λ represents the weight assigned to constraint violations, allowing for tunable prioritization. By explicitly modeling these trade-offs, the CMO-MDP enables AURA to identify Pareto-optimal solutions representing the best possible compromises between competing priorities.
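
The penalized reward described above can be illustrated with a minimal Python sketch. The article does not specify the form of f or how violations are measured, so the weighted sum, the `SiteOutcome` structure, and the objective names used here are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class SiteOutcome:
    """Result of evaluating one candidate site (hypothetical structure)."""
    objectives: dict   # objective name -> score, higher is better
    violations: list   # non-negative magnitude of each constraint violation


def penalized_reward(outcome, weights, lam):
    """Reward = f(Objectives) - lambda * Constraint Violation.

    Assumption: f is a weighted sum and violation magnitudes are added up.
    """
    objective_term = sum(weights[name] * score
                         for name, score in outcome.objectives.items())
    violation_term = sum(max(0.0, v) for v in outcome.violations)
    return objective_term - lam * violation_term


outcome = SiteOutcome(
    objectives={"cost": 0.5, "transit": 1.0},
    violations=[0.0, 0.25],
)
weights = {"cost": 0.5, "transit": 0.5}
print(penalized_reward(outcome, weights, lam=2.0))  # 0.25
```

Raising `lam` makes any violation more expensive relative to objective gains, which is the tunable prioritization the paragraph refers to.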

AURA utilizes a Multi-Agent System (MAS) to address the computational complexity of affordable housing site selection. By distributing the decision-making process across multiple autonomous agents, the system can concurrently evaluate a significantly larger solution space than would be feasible with a single agent. Each agent within the MAS explores different potential sites and strategies, fostering parallel exploration and reducing the time required to identify optimal or near-optimal solutions. This distributed approach also enhances adaptability; the system can dynamically adjust to changing constraints or priorities by modifying the behavior of individual agents or introducing new agents with specialized capabilities. The MAS architecture facilitates efficient resource allocation and allows for robust performance even in complex, high-dimensional problem spaces.
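
As a rough illustration of the parallel exploration described above, the sketch below splits candidate sites across several workers, lets each report its local best, and merges the results. It is a plain parallel search rather than a learning system, and the `score` function and site fields are hypothetical stand-ins, not AURA's agents.

```python
from concurrent.futures import ThreadPoolExecutor


def score(site):
    """Hypothetical scalar score: transit benefit minus cost."""
    return site["transit"] - site["cost"]


def agent_best(sites):
    """One 'agent' evaluates its share of the sites and returns its best."""
    return max(sites, key=score)


def parallel_search(sites, n_agents):
    """Partition sites across agents, then merge their local winners."""
    if not sites:
        raise ValueError("no candidate sites to evaluate")
    # Round-robin partition; drop empty shares when agents outnumber sites.
    chunks = [sites[i::n_agents] for i in range(n_agents)]
    chunks = [chunk for chunk in chunks if chunk]
    with ThreadPoolExecutor(max_workers=len(chunks)) as pool:
        local_bests = list(pool.map(agent_best, chunks))
    return max(local_bests, key=score)


sites = [
    {"id": "A", "transit": 0.9, "cost": 0.6},
    {"id": "B", "transit": 0.7, "cost": 0.2},
    {"id": "C", "transit": 0.4, "cost": 0.1},
    {"id": "D", "transit": 0.8, "cost": 0.5},
]
print(parallel_search(sites, n_agents=2)["id"])  # B
```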

AURA utilizes a Geospatial Analysis Agent to process and interpret relevant spatial data during site selection. This agent employs Graph Neural Networks (GNNs) to perform spatial reasoning, enabling the system to understand relationships and patterns within the geographic information. Specifically, GNNs allow AURA to model locations and their connections as nodes and edges in a graph, facilitating the analysis of factors such as proximity to amenities, transportation networks, and existing infrastructure. This graph-based representation enables the agent to efficiently evaluate the suitability of potential sites based on complex spatial criteria, contributing to more informed decision-making regarding affordable housing development.
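
To make the graph representation concrete, here is a minimal sketch of one round of neighbor averaging, the unlearned core of a typical GNN layer. The node names, features, and edges are invented for illustration. A real GNN would add learned weights and nonlinearities, and the article does not say which GNN variant AURA uses.

```python
def mean_aggregate(features, edges):
    """Average each node's feature vector with those of its neighbors."""
    neighbors = {node: [] for node in features}
    for a, b in edges:  # edges are treated as undirected
        neighbors[a].append(b)
        neighbors[b].append(a)
    updated = {}
    for node, own in features.items():
        group = [own] + [features[n] for n in neighbors[node]]
        # Column-wise mean over the node itself and its neighbors.
        updated[node] = [sum(column) / len(group) for column in zip(*group)]
    return updated


# Hypothetical one-dimensional "amenity" feature per location.
features = {"parcel": [0.0], "station": [1.0], "school": [0.5]}
edges = [("parcel", "station"), ("parcel", "school")]
print(mean_aggregate(features, edges))
```

After one round, the parcel's feature reflects the amenities it is connected to, which is how proximity information can propagate through the graph.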

Balancing Priorities: Optimizing for Multiple Objectives

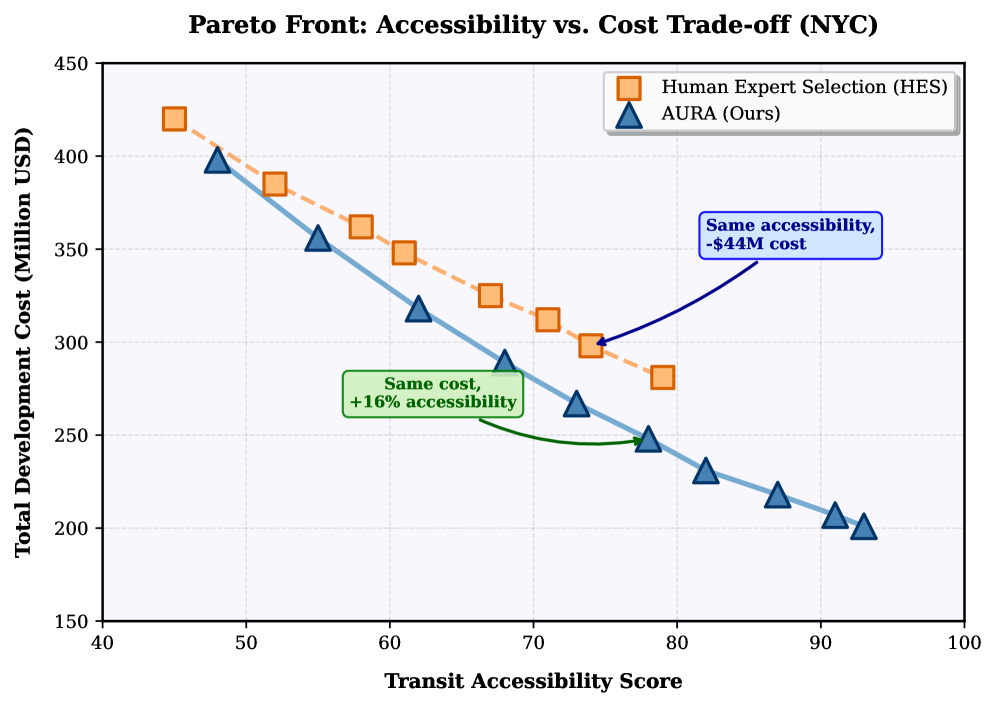

AURA’s Multi-Objective Optimization Agent employs Pareto-constrained policy gradients (PC-PPO), a reinforcement learning algorithm, to identify optimal solutions across multiple, often conflicting, objectives. PC-PPO builds upon the standard Proximal Policy Optimization (PPO) algorithm by incorporating Pareto optimality constraints during policy updates. This ensures that the generated solutions are not dominated by any other feasible solution with respect to the defined objectives; solutions are considered Pareto optimal if improving one objective necessarily degrades another. The algorithm iteratively refines the policy, prioritizing solutions that lie on the Pareto frontier, thus providing a set of non-dominated solutions from which stakeholders can select based on their specific priorities.
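
The notion of non-dominance that PC-PPO relies on can be shown with a short filter. This sketch is not the PC-PPO algorithm itself. It only extracts the Pareto front from a set of already-scored candidates, assuming every objective is to be maximized.

```python
def dominates(a, b):
    """True if a is at least as good as b everywhere and better somewhere."""
    return (all(x >= y for x, y in zip(a, b))
            and any(x > y for x, y in zip(a, b)))


def pareto_front(points):
    """Keep only the points that no other point dominates."""
    return [p for p in points
            if not any(dominates(q, p) for q in points)]


# Hypothetical (affordability, transit access) scores for four sites.
candidates = [(0.9, 0.2), (0.5, 0.5), (0.4, 0.4), (0.2, 0.9)]
print(pareto_front(candidates))  # (0.4, 0.4) is dominated by (0.5, 0.5)
```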

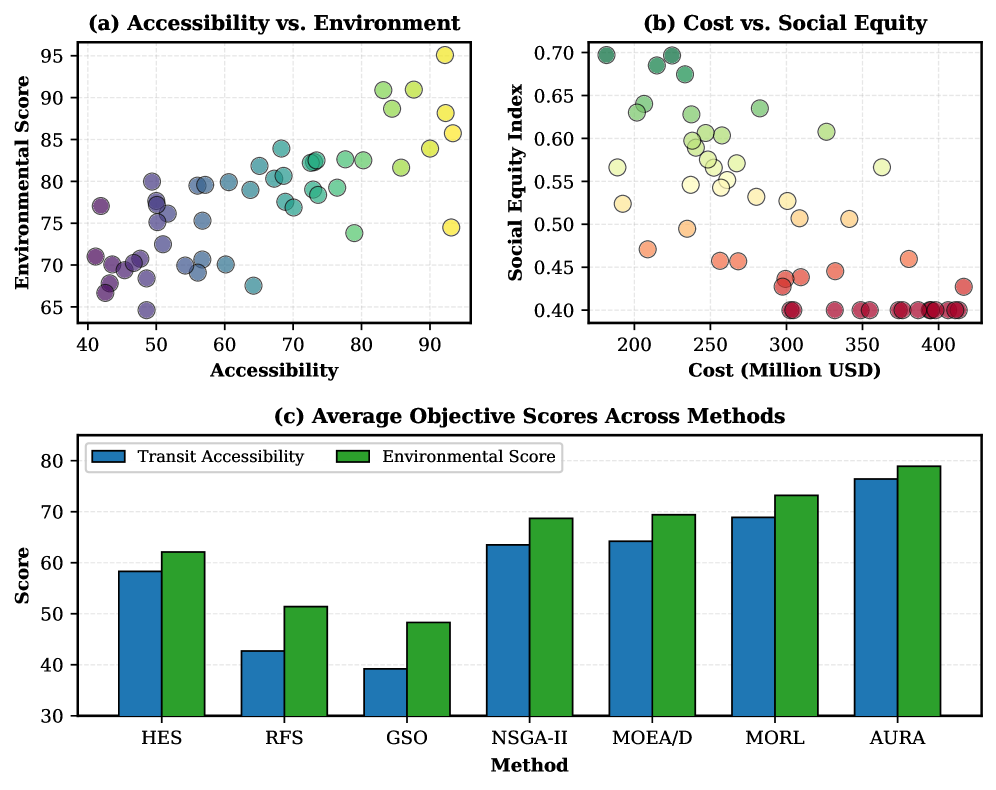

AURA’s optimization process does not prioritize single objectives; instead, it concurrently considers cost minimization, transit accessibility, and environmental impact. This simultaneous balancing is achieved through a multi-objective approach, allowing the system to generate solutions representing trade-offs between these potentially competing goals. The resultant outputs are not single ‘best’ solutions, but rather a Pareto front of options, each offering different levels of performance across the defined objectives, enabling stakeholders to select the outcome best suited to their specific priorities.

The Regulatory Compliance Agent within AURA functions as a constraint enforcement mechanism during the optimization process. This agent utilizes a codified database of legal requirements and zoning regulations applicable to the planning area. All proposed solutions generated by the Multi-Objective Optimization Agent are automatically evaluated against these constraints; any solution violating a regulation is flagged and either modified to achieve compliance or discarded from consideration. This ensures that only feasible and legally permissible plans are presented as outputs, maintaining a 94.3% compliance rate as measured during performance evaluations.
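
A rule-based check of this kind might look like the sketch below. The rule names, thresholds, and site fields are hypothetical placeholders. The article does not describe the contents or format of AURA's regulatory database.

```python
# Each rule pairs a name with a predicate that returns True when satisfied.
RULES = [
    ("min_lot_area", lambda site: site["lot_area_sqft"] >= 10_000),
    ("residential_zoning", lambda site: site["zone"] in {"R3", "R4", "MU"}),
    ("outside_floodway", lambda site: not site["in_floodway"]),
]


def violations(site, rules=RULES):
    """Return the names of every rule the site fails."""
    return [name for name, check in rules if not check(site)]


def compliance_rate(sites, rules=RULES):
    """Fraction of sites that pass every rule."""
    if not sites:
        return 0.0
    compliant = sum(1 for site in sites if not violations(site, rules))
    return compliant / len(sites)


proposals = [
    {"id": "A", "lot_area_sqft": 12_000, "zone": "R3", "in_floodway": False},
    {"id": "B", "lot_area_sqft": 8_000, "zone": "R4", "in_floodway": False},
    {"id": "C", "lot_area_sqft": 15_000, "zone": "MU", "in_floodway": False},
    {"id": "D", "lot_area_sqft": 20_000, "zone": "R3", "in_floodway": False},
]
print(violations(proposals[1]))    # ['min_lot_area']
print(compliance_rate(proposals))  # 0.75
```

Returning the names of failed rules, rather than a bare pass/fail flag, gives a downstream step enough information to decide whether a proposal can be modified or should be discarded.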

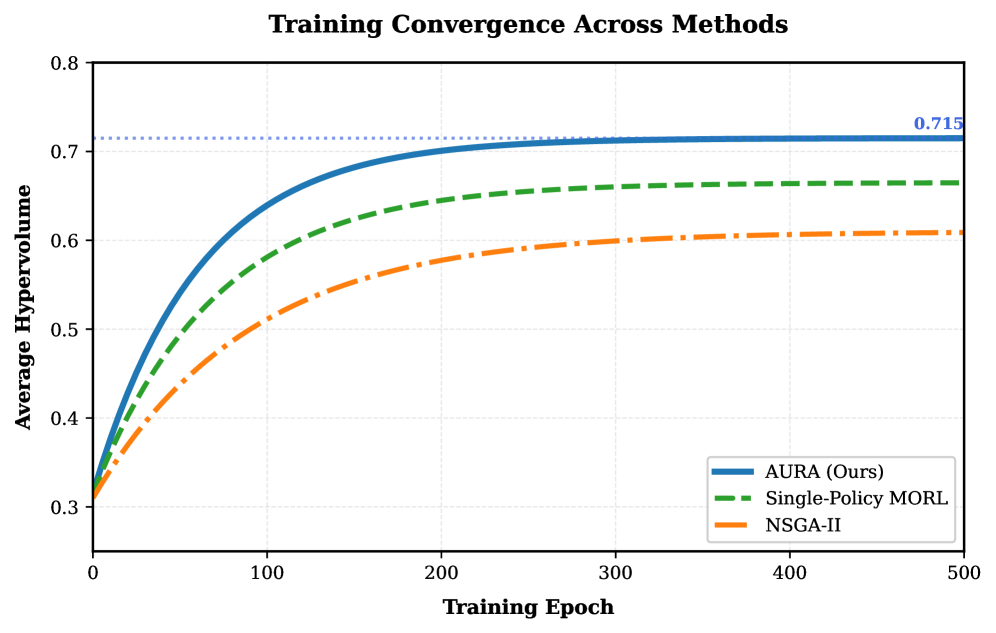

Performance of the multi-objective optimization agent is quantitatively assessed using the Hypervolume metric, which measures the volume of objective space dominated by the Pareto front relative to a fixed reference point, so a larger value indicates a front that is both closer to the ideal and more widely spread. Results indicate a 37.2% improvement in Hypervolume compared to traditional optimization methodologies. Crucially, the system maintains a 94.3% adherence rate to all applicable regulatory and zoning constraints, demonstrating a high degree of feasibility and practical applicability of generated solutions. These metrics are calculated across a standardized evaluation dataset to ensure consistent and comparable results.
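
For two objectives, hypervolume reduces to the area dominated by the front, measured from a reference point. The sketch below assumes both objectives are maximized. The fronts and the reference point are made-up numbers chosen to keep the arithmetic easy to follow, not results from the paper.

```python
def hypervolume_2d(points, reference):
    """Area dominated by a 2-D front (maximization) above a reference point."""
    rx, ry = reference
    # Ignore points that do not improve on the reference in both objectives.
    candidates = sorted((p for p in points if p[0] > rx and p[1] > ry),
                        key=lambda p: p[0], reverse=True)
    area = 0.0
    best_y = ry
    for x, y in candidates:
        if y > best_y:  # dominated points add no new area
            area += (x - rx) * (y - best_y)
            best_y = y
    return area


def relative_improvement(new, baseline):
    """Fractional gain of one hypervolume over another."""
    return (new - baseline) / baseline


baseline_front = [(2, 1), (1, 2)]
improved_front = [(3, 1), (2, 2), (1, 3), (1, 1)]
hv_base = hypervolume_2d(baseline_front, reference=(0, 0))
hv_new = hypervolume_2d(improved_front, reference=(0, 0))
print(hv_base, hv_new, relative_improvement(hv_new, hv_base))  # 3.0 6.0 1.0
```

The result depends on the choice of reference point, so hypervolume figures are only comparable when every method is scored against the same one.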

Beyond Incremental Gains: Extending the Frontiers of Optimization

AURA builds upon established multi-objective optimization algorithms, notably NSGA-II and MOEA/D, through the implementation of a novel architectural framework. Rather than replacing these techniques, AURA integrates them within a larger system designed to enhance exploration and exploitation of the solution space. This approach allows the system to leverage the strengths of existing algorithms while mitigating their limitations, particularly in complex, real-world scenarios. The unique architecture facilitates a more nuanced search for optimal solutions, enabling AURA to navigate trade-offs between competing objectives with greater efficiency and precision than conventional methods. By extending, rather than reinventing, existing methodologies, AURA offers a pragmatic and effective pathway toward improved optimization outcomes.

AURA applies Single-Policy Multi-Objective Reinforcement Learning (MORL) to navigate complex solution spaces efficiently. Unlike multi-policy MORL approaches, which maintain a separate policy for each region of the trade-off space, AURA employs a single, unified policy to simultaneously optimize for all competing objectives. This streamlined architecture allows the system to explore a wider range of potential solutions, identifying trade-offs that conventional methods can miss. By learning a cohesive strategy, AURA avoids the computational burden of managing numerous policies, accelerating the search for optimal outcomes and enabling rapid adaptation to changing priorities within the optimization process.

By revealing trade-offs between competing objectives that manual evaluation tends to miss, AURA surfaces site options that conventional processes rarely reach. Traditional methods often necessitate exhaustive manual evaluation, extending the decision timeline to approximately 18 months; AURA compresses this timeframe to 72 hours, roughly a 180-fold reduction if a month is counted as 30 days. This accelerated process isn’t simply about speed; it allows stakeholders to explore a wider range of options and identify Pareto-optimal solutions that balance often-conflicting priorities, such as maximizing transit accessibility while minimizing environmental impact – ultimately leading to more informed and effective outcomes.

AURA’s efficacy extends beyond mere computational speed, delivering substantial improvements in key performance indicators for site selection. Comparative analyses reveal a noteworthy 31% enhancement in Transit Accessibility – meaning improved access to public transportation for potential residents and employees – alongside a concurrent 19% reduction in Environmental Impact. These gains are achieved through AURA’s capacity to identify solutions that skillfully balance competing objectives, offering a demonstrably more sustainable and community-focused approach to development planning than conventional methods typically provide. The system doesn’t simply find a solution, but a suite of Pareto-optimal options allowing stakeholders to explicitly choose trade-offs aligned with their priorities.

The pursuit of efficient systems necessitates ruthless simplification. This research, detailing AURA and its application of multi-agent reinforcement learning to affordable housing site selection, embodies this principle. The framework doesn’t merely add complexity with advanced algorithms; it actively removes the inefficiencies inherent in traditional methods. By integrating regulatory constraints directly into the optimization process, AURA achieves a streamlined decision-making process. As Arthur C. Clarke observed, “Any sufficiently advanced technology is indistinguishable from magic.” AURA, through its careful balance of optimization and compliance, appears to effortlessly navigate complex urban planning challenges, achieving results that, without such focused clarity, would seem unattainable. The core of the work lies not in the sophistication of the tools, but in their ability to distill the problem to its essential elements.

Beyond the Site

The presented framework, while demonstrating a reduction in selection time and adherence to constraints, merely addresses the location problem. This is, of course, a necessary condition, but hardly sufficient. True optimization requires acknowledging the inherent messiness of urban ecosystems. Future iterations should not shy from modeling the complex interplay between proposed developments and existing community resources – schools, healthcare, transportation – not as static data points, but as dynamic, evolving systems. The current approach treats regulatory compliance as a hard constraint; a more nuanced exploration would consider the cost of compliance – the bureaucratic overhead, the delays, the compromises – and integrate that into the reward function.

Furthermore, the elegance of multi-agent reinforcement learning risks becoming a gilded cage if the agents are not exposed to genuinely novel situations. The test environments, however meticulously constructed, will inevitably fall short of the unpredictable realities of real-world urban planning. A critical next step is the development of robust methods for transfer learning, enabling these agents to generalize beyond the known, and to adapt – even to improvise – when confronted with the unexpected.

Ultimately, the pursuit of automated site selection is not about replacing human planners, but about augmenting their capacity for holistic, long-term thinking. The ideal outcome is not a perfectly optimized location, but a system that facilitates informed, equitable, and sustainable development – a goal that demands a humility often absent from the algorithmic mindset.

Original article: https://arxiv.org/pdf/2602.03940.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-06 00:56