Author: Denis Avetisyan

Artificial intelligence is rapidly transforming cellular infrastructure, promising more resilient, efficient, and accessible networks for all.

This review details the integration of AI-driven Radio Access Network optimization with edge computing to enhance 5G-NR performance and sustainability, particularly in backhaul-constrained environments.

Despite the increasing volume of performance and environmental telemetry generated by modern telecommunications networks, many LTE and 5G-NR deployments remain reliant on static, manually configured systems. This limitation hinders adaptability in dynamic environments, motivating the research presented in ‘Real-World Applications of AI in LTE and 5G-NR Network Infrastructure’, which proposes an integrated architecture for AI-driven Radio Access Network (RAN) optimization and edge-hosted application execution. By combining reinforcement learning, digital twin validation, and containerized deployment, this work demonstrates how AI can enhance network performance, reduce operational costs, and expand access to advanced digital services even in backhaul-constrained environments. Could this approach unlock a new era of sustainable and inclusive network development, delivering ubiquitous connectivity and intelligent services to underserved communities?

Beyond Static Prediction: The Evolving Radio Access Network

Historically, Radio Access Network (RAN) planning has depended on predictions based on fixed parameters and substantial human oversight. This approach assumes network conditions will remain relatively stable, a premise increasingly challenged by the unpredictable nature of modern mobile usage. Consequently, networks configured through these static methods struggle to efficiently handle sudden surges in demand, shifts in user location, or the introduction of new applications. The reliance on manual adjustments also introduces delays and inefficiencies, preventing operators from responding quickly to evolving network needs and hindering their ability to deliver consistent, high-quality service in a landscape characterized by rapidly changing user behavior and device proliferation.

Mobile-phone ownership is now widespread, reaching 75 to 90% across many global regions, and the demand those users generate fluctuates in ways that conventional Radio Access Network (RAN) planning was not designed for. Built on static models and manual configuration, conventional approaches cannot optimize for real-time user experience: networks with fixed parameters fail to adapt to traffic swings driven by peak hours, special events, or the growing popularity of data-intensive applications. Users may therefore experience inconsistent service quality, reduced speeds, and increased latency even in areas with high network coverage and device penetration, which points to a gap between installed infrastructure capacity and delivered performance.

The consequences of relying on outdated Radio Access Network (RAN) planning methods are now acutely felt across mobile networks globally. Despite the proliferation of mobile broadband – with subscriptions frequently exceeding one per inhabitant in many regions – and the explosive growth of mobile data traffic, often increasing at a rate of 20-30% compounded annually, network performance remains hampered by inefficiencies. This disconnect manifests as sub-optimal speeds, dropped connections, and increased latency, leading to a frustrating user experience. Furthermore, the inability to dynamically adapt to changing traffic patterns drives up operational expenditures, as manual interventions and hardware upgrades become increasingly necessary to maintain even a baseline level of service. The combination of diminished performance and rising costs underscores the urgent need for more intelligent and automated network optimization strategies.

Modern networks are increasingly burdened by escalating data demands and unpredictable traffic patterns, necessitating a fundamental change in how they are managed. Traditional planning methods, reliant on pre-defined configurations and manual adjustments, simply cannot keep pace with this dynamism. Instead, a transition towards intelligent, data-driven optimization offers a viable solution, leveraging real-time network data and advanced analytics to proactively adapt to changing conditions. This approach allows for automated resource allocation, predictive capacity planning, and self-optimizing networks that can dynamically adjust to user behavior and application demands. By embracing this shift, network operators can move beyond reactive troubleshooting and towards a proactive, efficient, and ultimately more reliable network infrastructure capable of delivering a superior user experience.

Intelligent Adaptation: The Rise of AI-Driven RAN Optimization

AI-Driven Radio Access Network (RAN) optimization employs machine learning algorithms to automatically configure network parameters, moving beyond traditional static or rule-based approaches. This dynamic adjustment encompasses parameters such as transmit power, beamforming weights, and resource allocation, responding to changing network conditions and user demands. The system analyzes network data to identify patterns and correlations, then predicts optimal parameter settings to enhance Key Performance Indicators (KPIs). This continuous learning and adaptation process aims to improve overall network capacity, coverage, and user experience without manual intervention, allowing for a more efficient and responsive network infrastructure.

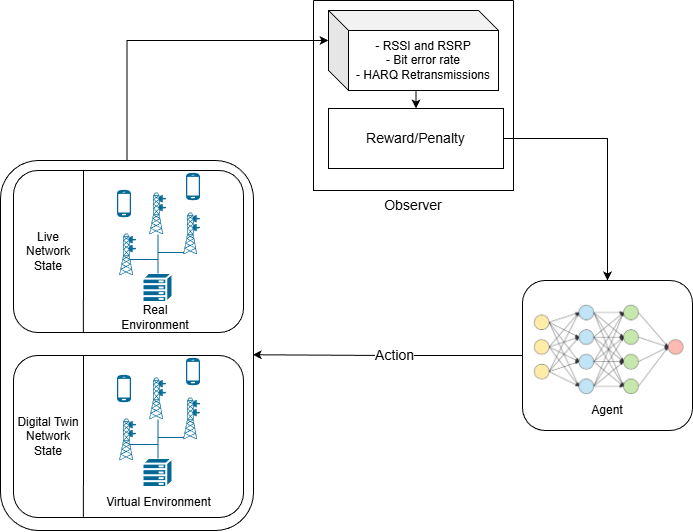

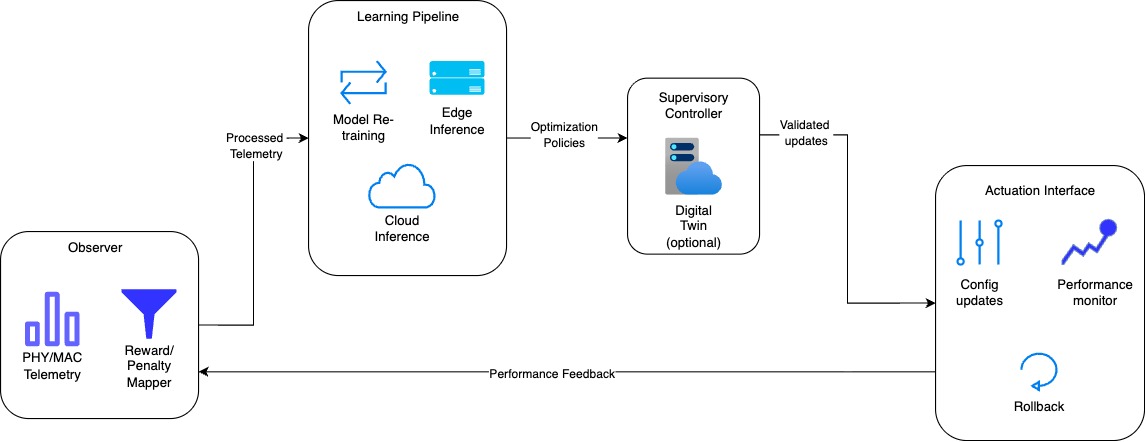

Reinforcement Learning (RL) and Graph Neural Networks (GNNs) are central to AI-driven Radio Access Network (RAN) optimization due to their ability to handle the complex, dynamic nature of wireless environments. RL algorithms enable the RAN to learn optimal configurations through trial and error, receiving rewards for actions that improve key performance indicators. GNNs are particularly effective at modeling the interdependencies between network elements – such as base stations and user equipment – representing the RAN as a graph where nodes represent entities and edges represent connections. This allows the system to predict the impact of configuration changes across the network, facilitating more informed decisions and improving overall performance compared to traditional, static optimization methods. The combination of RL for decision-making and GNNs for network representation provides a powerful framework for intelligent adaptation in modern RANs.
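
The trial-and-error loop described above can be illustrated with the simplest form of RL, an epsilon-greedy bandit. This is a minimal sketch, not the method used in the paper: the candidate antenna tilts, the `toy_reward` function, and all parameter values are assumptions made up for illustration, and the GNN component is omitted.

```python
import random

def epsilon_greedy_tilt(reward_fn, tilts, steps=2000, epsilon=0.1, seed=0):
    """Estimate the average reward of each candidate tilt by trial and error."""
    rng = random.Random(seed)
    counts = {t: 0 for t in tilts}
    values = {t: 0.0 for t in tilts}  # running mean reward per tilt
    for _ in range(steps):
        if rng.random() < epsilon:
            tilt = rng.choice(tilts)                     # explore
        else:
            tilt = max(tilts, key=lambda t: values[t])   # exploit best estimate
        reward = reward_fn(tilt, rng)
        counts[tilt] += 1
        # Incremental update of the running mean.
        values[tilt] += (reward - values[tilt]) / counts[tilt]
    return values

def toy_reward(tilt, rng):
    # Hypothetical KPI: best at 6 degrees of tilt, plus measurement noise.
    return -abs(tilt - 6) + rng.gauss(0, 0.5)

values = epsilon_greedy_tilt(toy_reward, tilts=[2, 4, 6, 8, 10])
best_tilt = max(values, key=values.get)
print(best_tilt)
```

In a real RAN the reward would come from measured KPIs rather than a synthetic function, and the state would include neighboring cells, which is where a graph-based model would enter.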

AI-driven Radio Access Network (RAN) optimization relies on the continuous analysis of key performance indicators to dynamically adjust network parameters. Specifically, the system ingests real-time data including Reference Signal Received Power (RSRP), Signal-to-Interference-plus-Noise Ratio (SINR), and Hybrid Automatic Repeat Request (HARQ) statistics. RSRP and SINR provide immediate insight into signal quality and interference levels, while HARQ statistics – encompassing retransmission rates and successful packet delivery – indicate link reliability. By correlating these metrics, the AI algorithms identify patterns and predict optimal configurations for radio resource allocation, power control, and modulation schemes, ultimately maximizing network throughput and minimizing latency for end-users.
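
As a rough illustration of how these metrics might be combined, the sketch below turns SINR into a Shannon capacity bound and discounts it by the HARQ retransmission rate. The `CellSample` fields, the `link_score` formula, and the -110 dBm cell-edge threshold are assumptions chosen for the example, not values from the paper.

```python
import math
from dataclasses import dataclass

@dataclass
class CellSample:
    rsrp_dbm: float   # Reference Signal Received Power
    sinr_db: float    # Signal-to-Interference-plus-Noise Ratio
    harq_tx: int      # total HARQ transmissions, including retransmissions
    harq_retx: int    # HARQ retransmissions only

def shannon_bound_bps(sinr_db, bandwidth_hz):
    """Upper bound on throughput: B * log2(1 + SINR), with SINR in linear units."""
    sinr_linear = 10 ** (sinr_db / 10)
    return bandwidth_hz * math.log2(1 + sinr_linear)

def retx_rate(sample):
    """Fraction of HARQ transmissions that were retransmissions."""
    return sample.harq_retx / sample.harq_tx if sample.harq_tx else 0.0

def link_score(sample, bandwidth_hz=20e6):
    # Illustrative composite: capacity bound discounted by retransmissions.
    return shannon_bound_bps(sample.sinr_db, bandwidth_hz) * (1 - retx_rate(sample))

def is_cell_edge(sample, threshold_dbm=-110.0):
    # Hypothetical threshold for flagging weak coverage.
    return sample.rsrp_dbm < threshold_dbm

sample = CellSample(rsrp_dbm=-95.0, sinr_db=10.0, harq_tx=200, harq_retx=20)
print(round(link_score(sample) / 1e6, 1), "Mbit/s (upper bound, discounted)")
```

A score like this could serve as the reward signal for an optimizer, although a production system would likely weight the terms differently.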

A Digital Twin, in the context of RAN optimization, functions as a virtual replica of the physical network, enabling comprehensive testing of new configurations and algorithms without impacting live performance. This involves simulating network behavior based on real-world data feeds, allowing engineers to validate optimization strategies, such as beamforming adjustments or resource allocation changes, under a range of conditions. By identifying potential issues, including signal interference or capacity bottlenecks, within the simulated environment, operators can proactively address them before deployment, minimizing the risk of service disruptions and ensuring network stability. The Digital Twin facilitates iterative refinement of optimization policies, accelerating the deployment of performance enhancements and reducing operational costs associated with trial-and-error adjustments on the live network.
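
The validation step can be pictured as a gate that a candidate configuration must pass in simulation before it reaches the live network. The sketch below is one possible shape for such a gate. The `simulate` callable stands in for the twin, and `toy_twin`, the scenario format, and the 2% tolerance are all hypothetical.

```python
def validate_in_twin(simulate, baseline, candidate, scenarios, tolerance=0.02):
    """Approve a candidate only if it improves the total KPI and no single
    scenario regresses by more than `tolerance` (assumes positive KPIs)."""
    base = [simulate(baseline, s) for s in scenarios]
    cand = [simulate(candidate, s) for s in scenarios]
    no_regression = all(c >= b * (1 - tolerance) for b, c in zip(base, cand))
    improves = sum(cand) > sum(base)
    return no_regression and improves

def toy_twin(config, scenario):
    # Hypothetical KPI: more power helps, but above 40 dBm an
    # interference penalty grows with cell load.
    power = config["tx_power_dbm"]
    penalty = scenario["load"] * max(0, power - 40) * 5
    return power - penalty

scenarios = [{"load": 0.1}, {"load": 0.5}, {"load": 0.9}]
safe = validate_in_twin(toy_twin, {"tx_power_dbm": 38}, {"tx_power_dbm": 40}, scenarios)
risky = validate_in_twin(toy_twin, {"tx_power_dbm": 40}, {"tx_power_dbm": 43}, scenarios)
print(safe, risky)  # → True False
```

Checking every scenario separately, rather than only the average, is what catches a change that helps lightly loaded cells while degrading busy ones.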

Decentralized Intelligence: Edge Hosting and the RAN

Edge hosting involves the strategic placement of application processing capabilities directly onto Radio Access Network (RAN) infrastructure – encompassing base stations, distributed antenna systems, and related hardware. This distributed architecture contrasts with traditional centralized cloud-based processing by minimizing data transmission distances and, consequently, network latency. By executing applications closer to the end-user, edge hosting reduces the dependence on backhaul connectivity to centralized data centers. This is achieved through the deployment of software applications, utilizing technologies like containerization, directly onto the RAN, enabling faster response times and improved performance for latency-sensitive applications and services.

The deployment and management of applications on RAN infrastructure utilizing Edge Hosting relies heavily on Virtual Network Functions (VNFs) and the Docker Engine. VNFs encapsulate network functions – previously handled by dedicated hardware – into software, enabling them to run on standard servers. Docker Engine provides a platform for containerizing these VNFs, packaging them with all dependencies, and ensuring consistent operation across different environments. This containerization simplifies application deployment, scaling, and updates, while also improving resource utilization and portability. Specifically, Docker facilitates the creation, deployment, and management of VNFs as isolated containers, allowing multiple network functions to coexist on a single physical server and enabling dynamic allocation of resources based on demand.
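
To make the containerized deployment concrete, the sketch below assembles a `docker run` command with CPU and memory limits and checks whether a set of functions fits on one host. It only builds the argument list and does not start anything. The image name, container name, and resource figures are placeholders, not details from the paper.

```python
import shlex

def docker_run_args(name, image, cpus, memory_mb):
    """Build the argument list for a detached, resource-limited container."""
    return [
        "docker", "run", "--detach",
        "--name", name,
        "--cpus", str(cpus),            # CPU limit
        "--memory", f"{memory_mb}m",    # memory limit in MiB
        "--restart", "unless-stopped",  # restart after crashes or reboots
        image,
    ]

def fits_on_host(requests, host_cpus, host_memory_mb):
    """Check that the summed CPU and memory requests fit on one server."""
    total_cpus = sum(r["cpus"] for r in requests)
    total_memory = sum(r["memory_mb"] for r in requests)
    return total_cpus <= host_cpus and total_memory <= host_memory_mb

# Hypothetical function and image names.
args = docker_run_args("ran-optimizer", "registry.example/ran-optimizer:1.0",
                       cpus=1.5, memory_mb=512)
command = shlex.join(args)
print(command)
```

Passing `args` to `subprocess.run` on a host with Docker installed would launch the container. Keeping the command construction separate makes it easy to review or log before anything is executed.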

Localized processing at the radio access network (RAN) edge facilitates real-time decision-making by minimizing data transmission distances and the delays that come with them. Applications that need consistently low latency, such as augmented and virtual reality (AR/VR) experiences and autonomous vehicle control systems, benefit directly. AR/VR applications demand sub-30 ms latency for a seamless user experience, while autonomous vehicles require consistently low latency, often under 10 ms, for critical functions like object detection and collision avoidance. By processing data closer to the user or vehicle, edge hosting circumvents the limitations of centralized cloud infrastructure and supports the performance requirements of these demanding applications.
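
A back-of-the-envelope calculation shows why distance matters for these budgets. Light in optical fiber covers roughly 200 km per millisecond, so a round trip to a data center 1,500 km away costs about 15 ms before any processing happens. The sketch below applies that rule of thumb. The processing and radio delays are illustrative assumptions, and only the 30 ms and 10 ms budgets come from the text.

```python
FIBER_KM_PER_MS = 200.0  # approximate speed of light in optical fiber

def round_trip_ms(distance_km, processing_ms, radio_ms):
    """Round-trip latency: two-way fiber propagation plus fixed delays."""
    propagation_ms = 2 * distance_km / FIBER_KM_PER_MS
    return propagation_ms + processing_ms + radio_ms

def meets_budget(latency_ms, budget_ms):
    return latency_ms <= budget_ms

# Assumed figures: 5 ms of compute and 4 ms of radio access delay.
cloud_rtt = round_trip_ms(distance_km=1500, processing_ms=5, radio_ms=4)
edge_rtt = round_trip_ms(distance_km=0, processing_ms=5, radio_ms=4)

print(cloud_rtt, meets_budget(cloud_rtt, 30), meets_budget(cloud_rtt, 10))  # → 24.0 True False
print(edge_rtt, meets_budget(edge_rtt, 10))                                # → 9.0 True
```

Under these assumptions the distant cloud meets the AR/VR budget but not the vehicle-control budget, while on-site processing meets both.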

AI-Driven Radio Access Network (RAN) Optimization requires significant computational resources to process data and implement real-time adjustments; Edge Hosting directly addresses this need by deploying those resources at the network edge. This localized processing is particularly critical in areas with limited or unreliable backhaul connectivity, such as rural environments where, despite high mobile phone penetration, 4G population coverage remains below 60%. By minimizing data transmission latency and reliance on centralized cloud infrastructure, Edge Hosting enables effective AI-driven optimization even in regions with suboptimal network conditions, improving performance and user experience where it is most needed.

Beamlink: An Integrated Architecture for Future Networks

The Beamlink architecture proposes a fundamental shift in radio access network (RAN) design by tightly integrating base stations with on-site edge computing resources, facilitated by a modular hardware unit called the Bentocell. This convergence aims to overcome limitations of traditional, centralized RANs by bringing computational power closer to the data source – the user or device. Instead of transmitting vast amounts of raw data to distant centralized servers, the Bentocell processes information locally, reducing latency and bandwidth demands. This distributed approach not only enhances responsiveness for applications like augmented reality and autonomous vehicles, but also enables new possibilities for localized data analytics and privacy-preserving computation directly at the network edge, paving the way for a more efficient and scalable 5G infrastructure and beyond.

The Beamlink architecture deliberately embraces OpenRAN principles to foster an adaptable and collaborative network environment. This commitment moves away from traditional, monolithic systems by disaggregating hardware and software components, so that equipment and software from different vendors can be integrated. Operators gain flexibility in building and customizing their networks, avoid vendor lock-in, and can deploy new services faster. Standardized interfaces and open protocols further improve interoperability, creating an ecosystem in which best-of-breed components work together, which lowers costs and speeds up innovation in 5G and beyond.

At the heart of the Beamlink architecture lies Maia, a sophisticated cloud controller designed to dynamically orchestrate and manage the distribution of artificial intelligence services across the network edge. Rather than relying on centralized cloud processing, Maia intelligently allocates AI tasks to the Bentocells closest to the data source, minimizing latency and maximizing efficiency. This distributed approach allows for real-time data analysis and decision-making directly at the network edge, significantly reducing the need for extensive backhaul bandwidth. Maia continually monitors network conditions and resource availability, adapting the allocation of AI services to optimize performance and ensure scalability – effectively transforming the RAN into a responsive, self-organizing system capable of handling the complex demands of modern applications, from autonomous vehicles to advanced security systems.
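
The article does not describe Maia's placement algorithm, so the following is only a guess at the simplest policy consistent with the description: pick the nearest Bentocell that still has enough free compute. The `Bentocell` fields, the CPU-only capacity model, and the greedy rule are all assumptions.

```python
from dataclasses import dataclass

@dataclass
class Bentocell:
    name: str
    distance_km: float  # distance from the data source requesting the service
    free_cpu: float     # unallocated CPU cores

def place_service(cells, cpu_needed):
    """Greedy placement: nearest cell with enough free CPU, or None."""
    candidates = [c for c in cells if c.free_cpu >= cpu_needed]
    if not candidates:
        return None  # nothing fits; a real controller might fall back to the cloud
    chosen = min(candidates, key=lambda c: c.distance_km)
    chosen.free_cpu -= cpu_needed  # reserve the capacity
    return chosen.name

cells = [Bentocell("site-a", 0.5, 1.0), Bentocell("site-b", 2.0, 4.0)]
first = place_service(cells, 2.0)   # site-a is closer but too small
second = place_service(cells, 1.0)  # site-a fits this one
third = place_service(cells, 3.0)   # site-b has only 2.0 cores left
print(first, second, third)  # → site-b site-a None
```

A controller that continually monitors conditions would also need to migrate services as load shifts, which this one-shot sketch does not attempt.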

The Beamlink architecture presents a paradigm shift in radio access network (RAN) design, fostering a system capable of dynamically adapting to the increasing demands of 5G and future wireless technologies. By tightly integrating base stations with on-site edge computing capabilities, Beamlink minimizes reliance on centralized processing and dramatically reduces backhaul requirements. Consider the example of intelligent security cameras; traditionally, these devices would transmit high-bandwidth video streams to a remote server for analysis, consuming significant backhaul capacity – often exceeding 100 Mbps. However, with Beamlink, AI processing occurs directly at the network edge, analyzing footage locally and transmitting only relevant metadata or alerts, effectively reducing backhaul usage to negligible levels. This distributed intelligence not only conserves bandwidth but also significantly lowers latency, enabling real-time responsiveness crucial for applications requiring immediate action and creating a foundation for a more scalable and efficient wireless infrastructure.
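
The scale of the saving in the camera example can be estimated with simple arithmetic. The sketch below compares the 100 Mbps figure quoted above with the bandwidth needed to send short alerts instead. The alert frequency and alert size are invented for illustration.

```python
def metadata_rate_bps(alerts_per_minute, bytes_per_alert):
    """Average bit rate needed to send alerts instead of raw video."""
    return alerts_per_minute * bytes_per_alert * 8 / 60

def backhaul_reduction(raw_bps, alerts_per_minute, bytes_per_alert):
    """How many times less backhaul the metadata stream uses."""
    return raw_bps / metadata_rate_bps(alerts_per_minute, bytes_per_alert)

raw_video_bps = 100e6  # figure quoted in the text
# Assumed workload: 6 alerts per minute, 1,000 bytes each.
alert_bps = metadata_rate_bps(alerts_per_minute=6, bytes_per_alert=1000)
factor = backhaul_reduction(raw_video_bps, alerts_per_minute=6, bytes_per_alert=1000)
print(alert_bps, factor)  # → 800.0 125000.0
```

Even if alerts were a hundred times larger or more frequent than assumed here, the metadata stream would remain a small fraction of the raw video rate.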

Embedded Intelligence: The Future of RAN Control

The integration of artificial intelligence directly within the radio access network (RAN) signifies a paradigm shift towards decentralized, localized intelligence. By embedding AI services – increasingly driven by sophisticated models like Large Language Models – data processing moves closer to the source, enabling immediate insights and actions without the delays inherent in centralized cloud-based systems. This localized approach fosters real-time responsiveness, allowing the RAN to independently analyze network behavior, predict potential issues, and dynamically optimize performance based on immediate conditions. Consequently, the RAN evolves from a passive conduit of data to an active, intelligent entity capable of self-management and adaptation, paving the way for more efficient, resilient, and user-centric mobile networks.

The integration of embedded AI within radio access networks facilitates a suite of sophisticated capabilities extending beyond traditional performance metrics. Real-time anomaly detection, for example, allows the network to instantly identify and address unusual traffic patterns or equipment malfunctions, minimizing service disruptions. Furthermore, proactive network optimization utilizes predictive analytics to anticipate congestion and dynamically adjust resource allocation, ensuring consistently high performance for all users. This localized intelligence also paves the way for personalized user experiences; the network can learn individual preferences and tailor service delivery accordingly, offering customized bandwidth allocation or prioritized access based on application needs and user behavior – ultimately redefining connectivity as a truly adaptive and responsive service.
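
Real-time anomaly detection can take many forms. One lightweight option that would run comfortably on edge hardware is a rolling z-score over a recent window of KPI samples. The sketch below is an illustration of that technique rather than the paper's detector. The window size, the threshold, and the synthetic KPI series are assumptions.

```python
from collections import deque
from statistics import mean, pstdev

def detect_anomalies(samples, window=20, threshold=3.0):
    """Return indices whose value deviates from the rolling mean of the
    previous `window` normal samples by more than `threshold` std devs."""
    history = deque(maxlen=window)
    flagged = []
    for i, x in enumerate(samples):
        if len(history) == window:
            mu, sigma = mean(history), pstdev(history)
            if sigma > 0 and abs(x - mu) / sigma > threshold:
                flagged.append(i)
                continue  # keep the outlier out of the baseline
        history.append(x)
    return flagged

# Synthetic KPI series with one injected spike at index 25.
kpi = [10 + (i % 3) for i in range(30)]
kpi[25] = 50
anomalies = detect_anomalies(kpi)
print(anomalies)  # → [25]
```

Excluding flagged samples from the window keeps a single spike from inflating the baseline and masking the next one.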

Traditional radio access networks often funnel vast amounts of data to centralized servers for processing, creating potential bottlenecks and vulnerabilities. However, shifting computational power to the network edge – directly within the base stations and distributed access points – dramatically reduces this reliance on the backhaul network. This localized data processing enables faster response times, as decisions are made closer to the source of information, and minimizes the impact of network outages. Consequently, the system becomes significantly more resilient; even if the connection to the central core is disrupted, the RAN can continue to operate intelligently, maintaining critical services and adapting to changing conditions in real-time. This distributed architecture not only enhances reliability but also optimizes bandwidth usage and lowers latency, paving the way for more responsive and dependable wireless communication.

The future of radio access networks is rapidly being reshaped by a powerful confluence of technologies: artificial intelligence, edge computing, and open architectures. This convergence allows for a paradigm shift from centralized, static networks to distributed, intelligent systems capable of self-optimization and adaptation. By bringing AI processing closer to the user – at the network edge – RANs can dramatically reduce latency, enhance security, and improve resource allocation. Open architectures, characterized by disaggregation and virtualization, further accelerate this transformation by fostering innovation and allowing for the seamless integration of diverse AI applications. This synergy isn’t simply an incremental improvement; it promises a fundamentally new level of network responsiveness, efficiency, and the ability to support the ever-increasing demands of emerging technologies and connected devices.

The pursuit of increasingly intricate network architectures often obscures a fundamental truth: elegance lies in reduction. This paper’s focus on integrating AI at the edge, simplifying backhaul demands, isn’t merely a technical refinement, but a philosophical stance. It recalls Marvin Minsky’s observation: “The more you think about it, the more you realize that there is a simple way to do things.” The proposition to utilize AI-driven Radio Access Network optimization isn’t about adding layers of complexity; it’s about distilling the essential elements for resilience and accessibility, particularly in challenging connectivity scenarios. They called it a framework to hide the panic, but here, the aim is to remove the framework, revealing a network built on inherent simplicity.

What’s Next?

The presented architecture, while addressing immediate concerns of resilience and efficiency, merely shifts the complexity. True optimization isn’t about adding layers of intelligence, but reducing the need for them. The dependence on extensive training data remains a vulnerability. Generalization to unseen network conditions, to the long tail of real-world variance, is not demonstrated, only implied. Future work must focus on minimizing data requirements, on algorithms that learn from scarcity, not abundance.

The integration with edge computing introduces a new locus of failure. Distributed intelligence is only beneficial if the distribution is robust. Current models assume a level of edge resource availability that is, charitably, optimistic. A sustainable architecture demands self-healing, self-provisioning capabilities at the edge: a system that adapts to its own limitations, not one that is paralyzed by them.

Ultimately, the pursuit of “intelligent” networks risks obscuring a simpler truth: networks function best when they are as close to invisible as possible. The goal isn’t to build networks that think, but networks that anticipate, and anticipation requires not complexity but radical simplification. Clarity is the minimum viable kindness.

Original article: https://arxiv.org/pdf/2602.02787.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-05 01:37