Author: Denis Avetisyan

Researchers have developed a novel framework that combines graph neural networks and rare pattern mining to detect subtle attacks lurking within normal system behavior.

RPG-AE leverages provenance data and graph autoencoders to identify anomalies by pinpointing unusual patterns indicative of malicious activity.

Detecting Advanced Persistent Threats (APTs) remains a critical challenge because they are stealthy and blend in with normal system activity. This paper introduces ‘RPG-AE: Neuro-Symbolic Graph Autoencoders with Rare Pattern Mining for Provenance-Based Anomaly Detection’, a framework that couples graph autoencoders with rare pattern mining to enhance anomaly detection in system provenance data. By learning normal relational structures and identifying infrequent behavioral co-occurrences, RPG-AE improves anomaly ranking quality compared to baseline methods. Could this neuro-symbolic approach offer a pathway toward more interpretable and effective cybersecurity solutions for uncovering subtle attacks?

The Illusion of Security: Why Today’s Defenses Are Failing

Traditional anomaly detection systems often falter when confronted with Advanced Persistent Threats (APTs) due to the attackers’ sophisticated techniques of blending malicious activity with normal system operations. These threats don’t rely on blatant, easily identifiable signatures; instead, APTs meticulously mimic legitimate user and application behavior, making it exceptionally difficult to distinguish harmful actions from routine processes. Consequently, systems designed to flag deviations from established baselines frequently miss subtle, yet dangerous, intrusions. This camouflage effectively allows APTs to establish a foothold within a network and operate undetected for extended periods, exfiltrating sensitive data or disrupting critical infrastructure – highlighting a fundamental limitation of relying solely on signature-based or statistical anomaly detection methods.

Conventional cybersecurity defenses, heavily reliant on static signatures and predefined rule-based systems, increasingly falter when confronted with novel attack vectors. These systems operate by identifying known malicious patterns, proving largely ineffective against adversaries employing polymorphic malware or zero-day exploits – attacks that haven’t been previously cataloged. The very nature of these defenses creates a reactive posture; a new threat necessitates a new signature, leaving systems vulnerable during the critical window between initial compromise and signature deployment. This limitation is particularly acute in the face of sophisticated attackers who intentionally craft their attacks to evade signature-based detection, often by mimicking legitimate system processes or utilizing encryption to obscure malicious code. Consequently, organizations require proactive approaches that move beyond simply recognizing what is malicious to understanding how systems typically behave, enabling the identification of anomalous activity regardless of whether a corresponding signature exists.

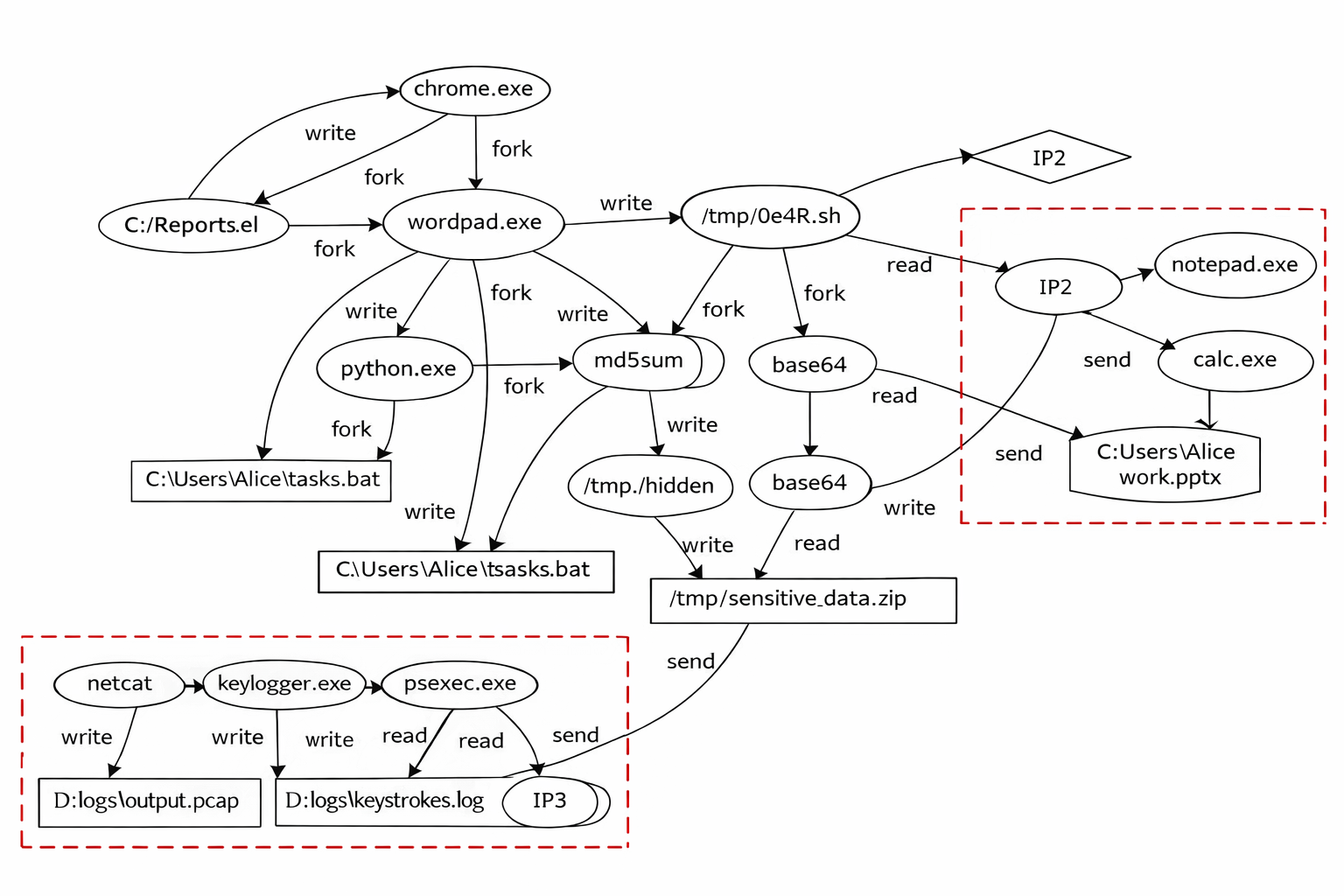

Robust defense against increasingly sophisticated cyberattacks hinges on a comprehensive understanding of system behavior, and this is precisely what System Provenance Data enables. This data doesn’t merely track what happened on a system, but meticulously documents how an action occurred – detailing the originating process, the specific commands executed, and the modifications made to the system’s state. By establishing a detailed lineage of every event, security systems can move beyond simply identifying malicious signatures and instead assess the legitimacy of actions based on their context and origin. This approach is particularly effective against Advanced Persistent Threats, which often masquerade as legitimate processes; a complete record of an action’s provenance reveals deviations from established baselines and exposes malicious intent hidden within seemingly normal behavior. Ultimately, leveraging System Provenance Data allows for a proactive and adaptive security posture, capable of responding to novel threats that bypass traditional detection methods.

Contemporary security systems frequently falter not because of overtly malicious actions, but due to their inability to recognize subtle shifts in how a system typically functions. These systems are designed to flag anomalies – behaviors that drastically deviate from the norm – yet sophisticated attacks often operate by making minute, incremental changes to established relationships within a network. This ‘Normal Relational Structure’ encompasses the predictable patterns of communication between processes, the usual data flow, and the expected sequence of operations. When attackers subtly alter these relationships – for example, by initiating legitimate processes at unusual times or modifying the size of data packets within acceptable ranges – current detection methods often fail to recognize the threat. Consequently, these deviations, though individually insignificant, can collectively signal a compromise, remaining undetected amidst the noise of regular activity and highlighting the need for more sensitive analytical techniques.

Mapping the Battlefield: Process Graphs as System Blueprints

Process Behavioral Graphs (PBGs) represent system activity by modeling the relationships between processes as a directed graph, where nodes represent processes and edges denote interactions. These interactions are derived from system logs or event data, capturing the sequence and dependencies of process execution. PBGs move beyond simple sequential flowcharts by explicitly representing concurrent and parallel activities, allowing for a more complete depiction of system behavior. The resulting graph structure enables analysis of process dependencies, identification of critical paths, and detection of anomalous behavior based on deviations from established patterns. By representing processes and their interactions as a graph, PBGs facilitate the application of graph-based algorithms for performance analysis, security monitoring, and system understanding.

Process Behavioral Graphs utilize algorithms such as k-Nearest Neighbors (k-NN) to identify and represent behavioral similarities between system processes. The k-NN algorithm assesses the proximity of process behaviors based on defined features, grouping processes with similar characteristics. This involves calculating distances between processes in a feature space; a lower distance indicates greater similarity. The ‘k’ parameter defines the number of nearest neighbors considered when classifying a process’s behavior. By identifying these similar behavioral patterns, the graph can represent not just direct interactions, but also contextual relationships based on shared actions or sequences of events, allowing for a more nuanced understanding of system activity.
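To make the k-NN step concrete, here is a minimal sketch of building a similarity graph from per-process feature vectors. The process names, the three-dimensional features, the Euclidean metric, and the helper `knn_graph` are all illustrative assumptions; the article does not specify the features or the distance measure the authors use.

```python
import math

def knn_graph(features, k):
    """Return a directed k-NN graph as {process: [k nearest processes]}.

    Assumes Euclidean distance in feature space (an illustrative choice).
    """
    names = list(features)
    graph = {}
    for a in names:
        # Distance from process `a` to every other process.
        dists = sorted((math.dist(features[a], features[b]), b) for b in names if b != a)
        # Keep only the k closest processes as outgoing edges.
        graph[a] = [b for _, b in dists[:k]]
    return graph

# Hypothetical feature vectors, e.g. counts of file, network, and fork events.
features = {
    "sshd":    [1.0, 9.0, 2.0],
    "nginx":   [2.0, 8.0, 1.0],
    "cron":    [7.0, 0.0, 5.0],
    "backup":  [8.0, 1.0, 4.0],
    "dropper": [9.0, 9.0, 9.0],
}

graph = knn_graph(features, k=2)
for process, neighbors in graph.items():
    print(process, "->", neighbors)
```

A small `k` keeps the graph sparse; a larger `k` links each process to less similar peers, which may blur the notion of "normal neighbors".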

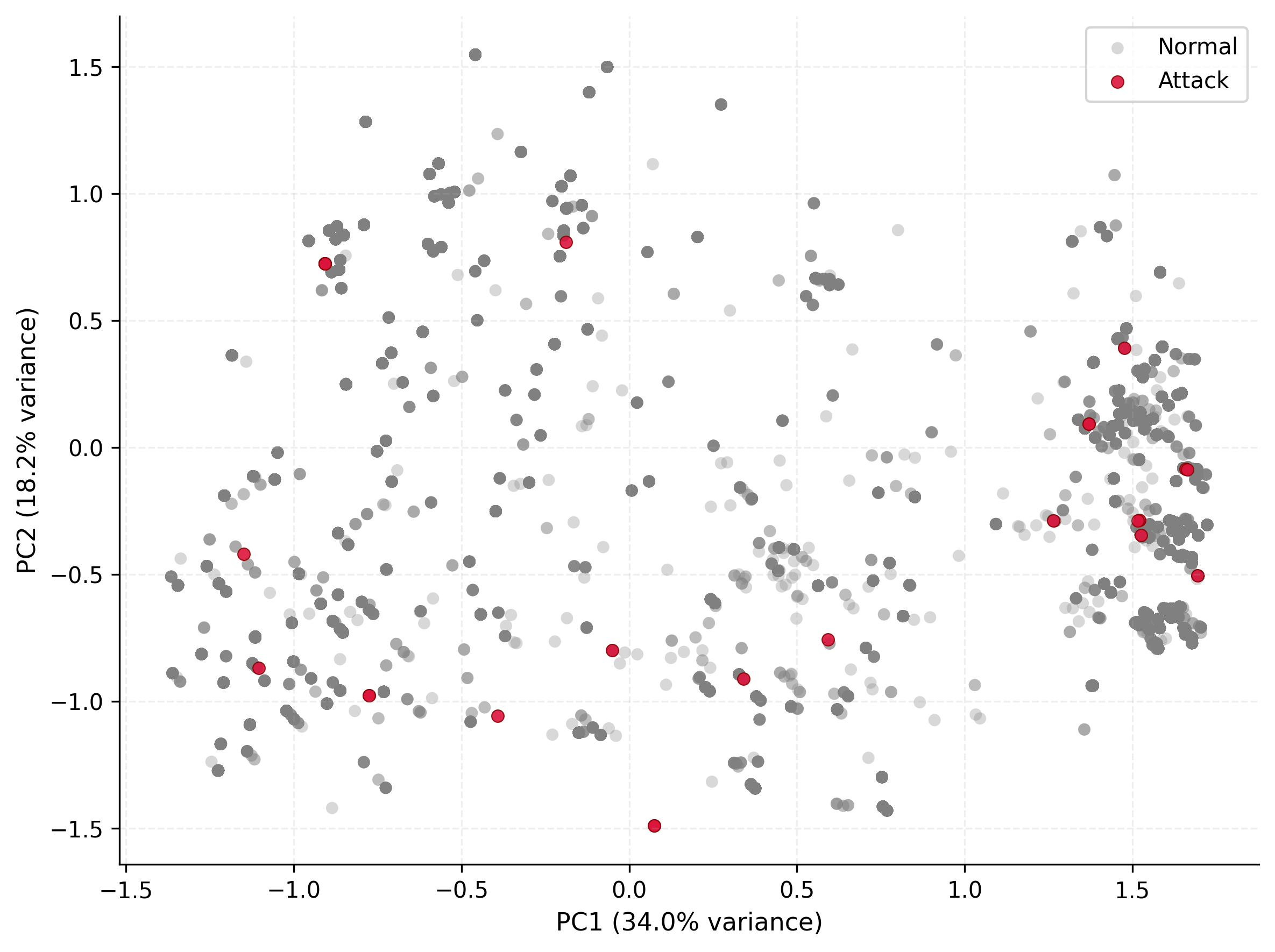

Graph Neural Networks (GNNs), and particularly Graph Autoencoders, facilitate the learning of low-dimensional vector representations, known as embeddings, from process graphs. These embeddings capture the inherent structural properties of the graph, representing nodes and edges in a continuous vector space. The autoencoder architecture consists of an encoder network that maps the graph to a latent space representation, and a decoder network that reconstructs the graph from this latent representation. By minimizing the reconstruction error, the network learns embeddings that preserve critical graph characteristics, such as process relationships and behavioral patterns. These learned embeddings can then be used for downstream tasks including anomaly detection, process similarity analysis, and predictive modeling, offering a computationally efficient means of representing complex process behavior.
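The encoder/decoder structure can be sketched in a few lines of plain Python. This is only a forward pass under stated assumptions: a single graph-convolution layer, an inner-product decoder, one-hot node features, and random untrained weights. The article does not describe RPG-AE's actual architecture or training procedure, so the helper names and the toy graph below are hypothetical.

```python
import math
import random

def matmul(a, b):
    """Plain-Python matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def normalize_adjacency(adj):
    """Symmetric normalization with self-loops: D^-1/2 (A + I) D^-1/2."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def encode(adj, x, w):
    """Encoder: one graph-convolution layer, Z = ReLU(A_norm X W)."""
    z = matmul(matmul(normalize_adjacency(adj), x), w)
    return [[max(0.0, v) for v in row] for row in z]

def decode(z):
    """Decoder: edge probability = sigmoid(z_i . z_j)."""
    z_t = [list(col) for col in zip(*z)]
    return [[1.0 / (1.0 + math.exp(-v)) for v in row] for row in matmul(z, z_t)]

def node_errors(adj, recon):
    """Per-node mean squared error between true and reconstructed adjacency rows."""
    n = len(adj)
    return [sum((adj[i][j] - recon[i][j]) ** 2 for j in range(n)) / n for i in range(n)]

# Toy graph: nodes 0-2 form a triangle, node 3 hangs off node 2.
adj = [
    [0, 1, 1, 0],
    [1, 0, 1, 0],
    [1, 1, 0, 1],
    [0, 0, 1, 0],
]
x = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]  # one-hot features
rng = random.Random(0)
w = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(4)]  # random, UNTRAINED weights

recon = decode(encode(adj, x, w))
errors = node_errors(adj, recon)
print(errors)
```

In a trained model the weights would be fitted to minimize reconstruction error on normal activity, so that nodes with a high per-node error stand out as candidates for review.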

Process graphs, beyond representing direct process-to-process interactions, model comprehensive behavioral context by incorporating data points representing process states, resource utilization, and temporal dependencies. This holistic approach allows for the capture of nuanced relationships, including conditional execution paths and shared resource contention, which are often obscured by focusing solely on sequence of events. By including these contextual elements, the graph accurately reflects the complete operational environment, enabling more precise anomaly detection, root cause analysis, and predictive modeling of system behavior. This expanded modeling capability is achieved through the inclusion of edge weights and node attributes that encode these additional contextual factors, providing a richer and more informative representation of system activity.

RPG-AE: Combining the Symbolic and Neural for Robust Detection

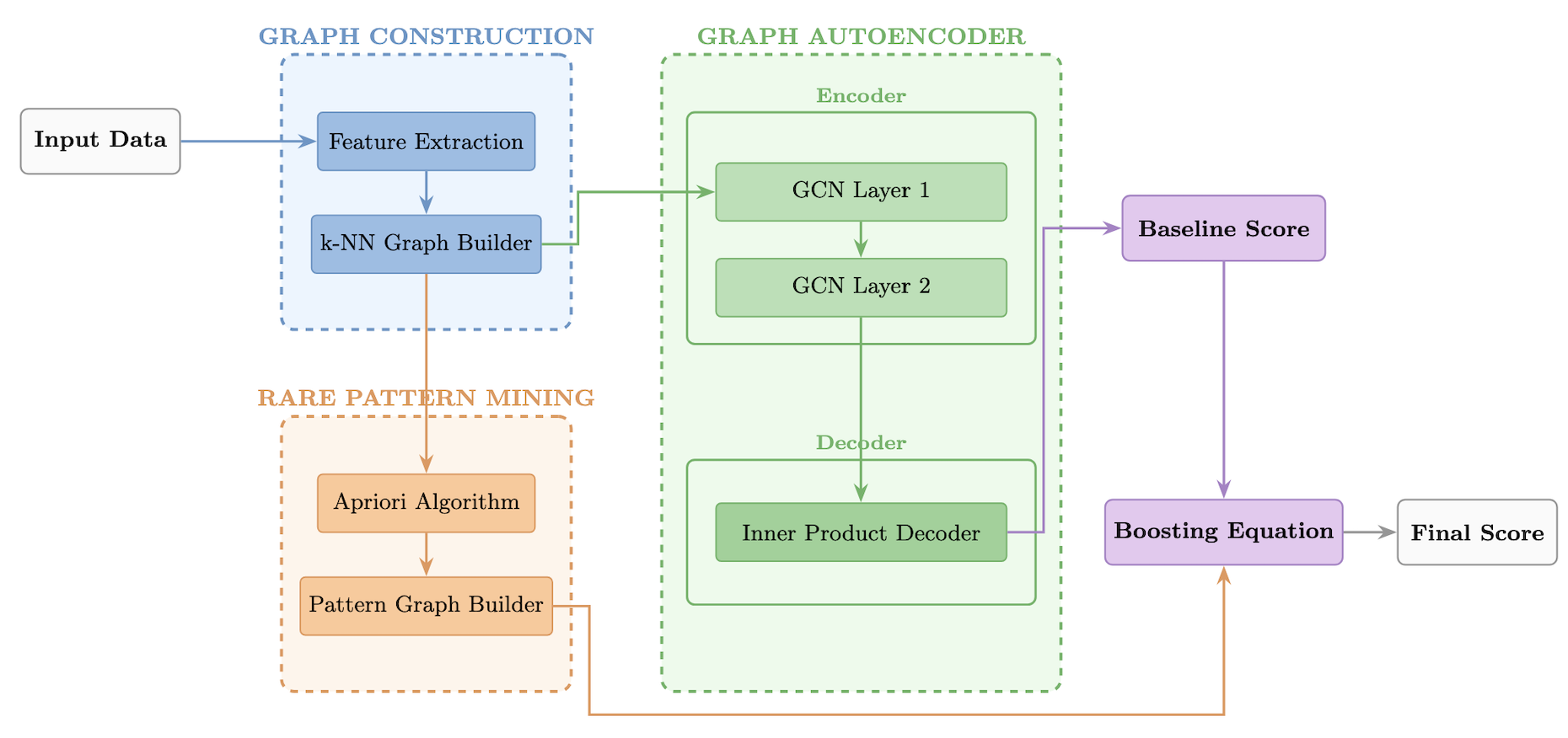

RPG-AE utilizes a neuro-symbolic approach to anomaly detection by combining Graph Autoencoders with Rare Pattern Mining. Graph Autoencoders learn the normal behavior of a system represented as a graph, quantifying deviations through reconstruction error. Rare Pattern Mining complements this by using algorithms such as Apriori to identify infrequent combinations of events, which are likely indicative of malicious activity but may not manifest as large reconstruction errors alone. This integration allows RPG-AE to detect anomalies that either fall outside the learned normal behavior or represent unusual event combinations, providing a more comprehensive assessment than either technique used in isolation.

Rare Pattern Mining (RPM) is employed to detect anomalous system behaviors by identifying infrequently occurring combinations of events. Algorithms such as Apriori are utilized to analyze event logs and extract patterns that appear with significantly lower frequencies than expected. These infrequent patterns are then treated as potential indicators of malicious activity, as attacks often deviate from established normal behavior and manifest as unusual event sequences. The efficacy of RPM relies on the principle that while individual events may not be anomalous, their specific co-occurrence can strongly suggest an attack. The resulting rare patterns are quantified by their support – the frequency with which they appear in the dataset – and patterns with low support are prioritized as potential anomalies.
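The support-based idea can be illustrated with a short sketch. Note the assumptions: this brute-force enumeration counts every itemset up to a fixed size rather than using Apriori's candidate pruning, and the event names, the thresholds, and the function `rare_patterns` are hypothetical, chosen only to show how co-occurrence can be rare even when the individual events are common.

```python
from collections import Counter
from itertools import combinations

def rare_patterns(transactions, min_support, max_support, max_size=2):
    """Return {itemset: support} for itemsets with support in [min_support, max_support].

    Brute-force counting of all itemsets up to `max_size`; not Apriori's pruning.
    """
    counts = Counter()
    for events in transactions:
        items = sorted(set(events))  # de-duplicate and fix a canonical order
        for size in range(1, max_size + 1):
            counts.update(combinations(items, size))
    n = len(transactions)
    return {
        pattern: count / n
        for pattern, count in counts.items()
        if min_support <= count / n <= max_support
    }

# Hypothetical per-process event sets ("transactions").
transactions = (
    [["read", "write"]] * 6
    + [["read", "connect"]] * 3
    + [["write", "connect", "exec"]]
)

rare = rare_patterns(transactions, min_support=0.05, max_support=0.2)
for pattern, support in sorted(rare.items()):
    print(pattern, support)
```

Here `connect` and `write` each appear in several transactions, yet the pair `("connect", "write")` appears in only one, which is the kind of unusual co-occurrence the paragraph above describes.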

RPG-AE demonstrates superior performance in anomaly detection when evaluated against other unsupervised baselines on the DARPA TC datasets. This improvement stems from the integration of two distinct analytical methods: rare pattern mining and graph autoencoder reconstruction error. Rare pattern mining identifies unusual combinations of behaviors, while the graph autoencoder measures the deviation of observed data from learned normal patterns. By combining the evidence from these two sources, RPG-AE effectively reduces false positives and increases the precision of anomaly identification, resulting in a higher overall detection rate compared to methods relying on a single approach.

Score boosting is implemented to enhance the reliability of anomaly ranking by weighting the combined anomaly score derived from graph autoencoder reconstruction error and rare pattern mining evidence. This process utilizes a parameter, α, representing the boosting weight, which linearly scales the rare pattern contribution to the final score. Empirical results demonstrate a positive correlation between α and performance, with improvements observed across tested DARPA TC datasets as the value increases from 0 to 2.0. A higher α value effectively prioritizes anomalies identified through rare pattern analysis, leading to a more accurate ranking of potentially malicious activities; however, performance plateaus beyond α = 2.0, suggesting diminishing returns.
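As a rough illustration of how a boosting weight can reorder a ranking, the sketch below assumes an additive form, `score = reconstruction_error + α × rare_pattern_evidence`. The article only says that α linearly scales the rare pattern contribution, so the exact formula, the process IDs, and the numbers here are assumptions rather than the paper's definition.

```python
def boosted_scores(recon_error, rare_evidence, alpha):
    """ASSUMED additive form: score = reconstruction error + alpha * rare-pattern evidence."""
    return {
        node: recon_error[node] + alpha * rare_evidence.get(node, 0.0)
        for node in recon_error
    }

def rank(scores):
    """Order nodes from most to least anomalous."""
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical per-process values.
recon_error = {"p1": 0.30, "p2": 0.25, "p3": 0.10}
rare_evidence = {"p3": 0.20}  # only p3 matches a rare pattern

print(rank(boosted_scores(recon_error, rare_evidence, alpha=0.0)))
print(rank(boosted_scores(recon_error, rare_evidence, alpha=2.0)))
```

With α = 0 the ranking follows reconstruction error alone; with α = 2.0 the process backed by rare-pattern evidence moves to the top even though its reconstruction error is the lowest.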

Validating the Approach and Its Real-World Implications

The efficacy of RPG-AE has been tested against the DARPA Transparent Computing (DARPA TC) dataset, a widely recognized benchmark for evaluating provenance-based security analytics. This dataset, specifically designed to challenge anomaly detection systems, provides a realistic simulation of cyberattack scenarios and system behaviors. Utilizing the DARPA TC dataset allowed for a standardized and comparative assessment of RPG-AE’s performance against existing unsupervised baselines. The results demonstrate RPG-AE’s ability to rank malicious activities highly within complex system logs, supporting its potential as a valuable tool for proactive threat detection. The dataset’s established credibility lends further weight to the framework’s robustness in realistic attack scenarios.

A significant hurdle in security analytics lies in the inherent class imbalance present in most datasets – malicious activities are, thankfully, far less frequent than normal operations. This disparity often leads to models that are biased towards the majority class, failing to accurately identify rare, yet critical, attacks. The proposed framework directly addresses this challenge through a carefully tuned analytical process. By employing techniques that give greater weight to the minority class during pattern identification, it ensures that even infrequently occurring attack signatures are not overlooked. This approach not only improves the detection rate of rare threats but also minimizes false negatives, providing a more robust and reliable security posture by accurately flagging genuine anomalies amidst a sea of normal activity.

RPG-AE distinguishes itself through a novel approach to threat detection by focusing on the relationships between system events, rather than individual occurrences. This is achieved by constructing a ‘Transaction Space’ – a comprehensive map of how different actions combine within the system. By analyzing this space, the framework identifies anomalous combinations of events that, while not necessarily indicative of malicious activity in isolation, signal potentially novel threats. This capability is crucial for proactively defending against zero-day exploits and advanced persistent threats, as it doesn’t rely on pre-defined signatures or known attack patterns. Instead, RPG-AE flags deviations from established transactional norms, effectively revealing previously unknown malicious behaviors hidden within legitimate system activity.

The efficacy of this analytical framework rests on its ability to pinpoint truly significant anomalies by focusing on patterns with a carefully calibrated support range of 0.012% to 1.22%. This filtering mechanism is crucial because security datasets are often plagued by either overwhelmingly frequent events – which are not indicative of attacks – or exceedingly rare occurrences that represent noise rather than genuine threats. By excluding both these extremes, the system hones in on subtle, yet meaningful, combinations of events that likely signify malicious activity. This precision allows for the identification of previously unseen attack vectors, distinguishing them from the typical background ‘chatter’ and providing a more actionable signal for security analysts.

Evaluations utilizing the nDCG@K metric reveal that RPG-AE consistently outperforms existing anomaly detection baselines, signifying a substantial advancement in ranking the relevance of identified threats. This metric, which assesses the quality of ranking by considering both the relevance of items and their position in the ranked list, demonstrates RPG-AE’s ability to not only detect anomalies but to prioritize them effectively. Higher nDCG@K scores indicate that the most critical anomalies are positioned at the top of the ranked list, allowing security analysts to focus their attention on the most pressing issues first. This superior performance suggests RPG-AE offers a more efficient and accurate approach to threat identification, minimizing false positives and maximizing the speed of response to genuine security breaches.
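For readers unfamiliar with the metric, here is a minimal sketch of nDCG@K using the common formulation with a log2 position discount. The binary relevance labels (1 for a true attack, 0 otherwise) and the example rankings are illustrative assumptions, not results from the paper.

```python
import math

def dcg_at_k(relevances, k):
    """Discounted cumulative gain: relevance at rank i is divided by log2(i + 1)."""
    return sum(rel / math.log2(i + 2) for i, rel in enumerate(relevances[:k]))

def ndcg_at_k(ranked_relevances, k):
    """DCG of the given ranking divided by the DCG of the ideal (sorted) ranking."""
    ideal = dcg_at_k(sorted(ranked_relevances, reverse=True), k)
    return dcg_at_k(ranked_relevances, k) / ideal if ideal > 0 else 0.0

# Hypothetical rankings: 1 marks a true attack, 0 a benign process.
attacks_first = [1, 1, 0, 0, 0]
attacks_split = [1, 0, 1, 0, 0]
attacks_last = [0, 0, 0, 1, 1]

for name, ranking in [("first", attacks_first), ("split", attacks_split), ("last", attacks_last)]:
    print(name, ndcg_at_k(ranking, k=3))
```

The score is 1.0 only when every attack sits above every benign item within the top K, and it drops as attacks slide down the list, which is why it suits analyst-facing rankings.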

The pursuit of elegant anomaly detection, as demonstrated by RPG-AE’s integration of graph autoencoders and rare pattern mining, invariably courts future technical debt. This framework, while promising improved identification of subtle attacks within provenance data, will eventually succumb to the realities of production systems. It’s a predictable cycle; the novelty of combining these techniques will fade as attackers adapt and systems evolve, demanding constant refinement. As Grace Hopper observed, “It’s easier to ask forgiveness than it is to get permission.” This sentiment applies perfectly; the rapid deployment of such a system, even with known limitations, may prove more effective than endlessly perfecting it in isolation. The system’s current stability is merely a temporary reprieve before the inevitable bugs surface.

What’s Next?

The presented framework, while demonstrating a capacity for identifying subtle anomalies within provenance data, inevitably introduces a new class of failure modes. The elegance of combining graph autoencoders with rare pattern mining obscures the inevitable: production systems are not governed by statistical distributions, but by the chaotic whims of users, updates, and unforeseen interactions. The current reliance on ‘normal’ behavior as a baseline is, at best, a temporary reprieve; entropy always wins.

Future work will undoubtedly focus on scaling this approach (larger graphs, faster processing), but that’s merely treating the symptom. A more pressing concern is the inherent fragility of anomaly detection itself. How does one differentiate between a genuinely novel attack and a legitimate, but previously unseen, system operation? The answer won’t be found in more sophisticated algorithms, but in accepting that false positives will always exist, and building systems resilient enough to tolerate them.

Ultimately, the true measure of success won’t be the number of anomalies detected, but the cost of the ones that slip through. Tests are a form of faith, not certainty. The field should shift its focus from chasing perfect detection rates to quantifying the acceptable level of risk – a decidedly less glamorous, but far more practical, pursuit.

Original article: https://arxiv.org/pdf/2602.02929.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-04 10:19