The current fascination with artificial intelligence, a term I confess always strikes me as delightfully oxymoronic, has induced a rather predictable frenzy in the markets. One might even call it a gilded rush, though the gold in question is, of course, silicon. While the ultimate return on this vast investment remains a question for the oracles (or, more prosaically, for quarterly earnings reports), certain companies are already benefiting, and one in particular demands a closer, more discerning gaze. I refer, naturally, to Nvidia (NVDA).

The company, you see, isn’t merely participating in this algorithmic ballet; to a considerable degree, it is choreographing it. Nvidia is pursuing an estimated $3 trillion market opportunity by 2030, a sum so vast it almost feels… theoretical. But then, so is much of what we now label ‘progress.’ The stock, I suspect, is only beginning to reflect this dominance, a slow awakening of the market’s collective consciousness.

Nvidia: The Nervous System of the New Machine

Nvidia designs graphics processing units, or GPUs: the intricate, humming chips that serve as the brains (or perhaps, more accurately, the nervous systems) of these burgeoning AI constructs. It doesn’t simply innovate; it orchestrates a continuous evolution, launching new chip architectures at a pace that leaves competitors scrambling to keep up. The latest iteration, I’m told, represents a significant leap forward: a refinement of the silicon soul, if you will.

Compared with the Blackwell GPUs, the next-generation Rubin GPUs need only a quarter as many chips to train an AI model, and cut the cost of inference to a tenth. A rather elegant reduction, wouldn’t you agree? It’s a compelling argument for upgrading, a siren song for those who demand ever-greater computational velocity. And, naturally, each improvement brings a corresponding increase in price, a perfectly predictable dance of supply and demand.

Nvidia operates on a remarkably efficient one-year product cycle, releasing a new architecture annually in relentless pursuit of the optimal chip. Investors can anticipate further revelations about the next-generation architecture later this year. Each launch promises not merely greater capabilities but a more substantial price tag. However, if one can acquire four to ten times the performance for merely double the cost, each dollar buys two to five times as much computing, and the equation, as any sensible trader will attest, is undeniably appealing.
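For readers who prefer their arithmetic explicit, here is a minimal Python sketch of that performance-per-dollar reasoning. It assumes, for illustration only, that performance and cost are simple multiples of the previous generation; the helper `perf_per_dollar_gain` is hypothetical, and the 4x, 10x and 2x inputs are the figures quoted above, not measured benchmarks.

```python
def perf_per_dollar_gain(perf_multiple: float, cost_multiple: float) -> float:
    """Return how many times more performance each dollar buys.

    Assumption: performance and cost are simple multiples of the prior
    generation, so the gain is just their ratio.
    """
    return perf_multiple / cost_multiple


# Scenario from the text: 4x to 10x the performance for 2x the cost.
for perf in (4.0, 10.0):
    gain = perf_per_dollar_gain(perf, cost_multiple=2.0)
    print(f"{perf:.0f}x performance at 2x cost -> {gain:.1f}x performance per dollar")
```

Running it prints a 2.0x gain at the low end and a 5.0x gain at the high end, which is the whole of the upgrade argument in two lines.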

A considerable number of GPUs have already been deployed, but this represents only the faintest glimmer of what’s necessary to sustain a truly AI-driven world. It demands a proliferation of data centers, those vast, humming cathedrals of computation. Many of these facilities, announced throughout 2025, won’t require chips until 2027 or beyond. The construction of these behemoths is a protracted affair, and filling them with the necessary computing units is, naturally, one of the final, most crucial steps.

This is why Nvidia projects that global data center expenditure will swell to $3 trillion to $4 trillion annually by 2030, a figure that, I confess, borders on the astronomical. The company already holds orders stretching out several years, a testament to the demand and a shrewd anticipation of future needs. The growth narrative, therefore, is only beginning to unfold, yet the stock, remarkably, trades at only a modest premium to the market average.

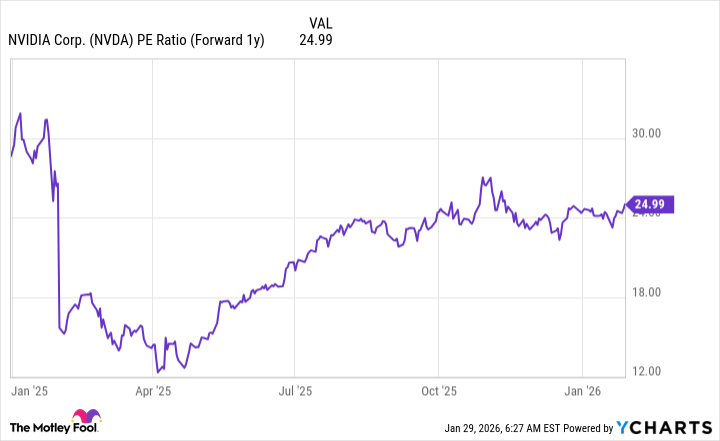

At 25 times projected fiscal 2027 earnings (the fiscal year ending January 2027), Nvidia’s valuation is barely more extravagant than that of the S&P 500, which currently trades at 22.2 times forward earnings, a gap of roughly 13%. A rather modest premium, wouldn’t you say, for a company poised to capitalize on such a monumental opportunity and exhibiting such vigorous revenue growth? I therefore consider Nvidia the most compelling AI stock to buy in February, and suggest investors act with alacrity before the next quarterly earnings report arrives, lest they miss the initial ascent.
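The valuation comparison reduces to a single ratio. A minimal sketch, assuming both multiples are forward price-to-earnings ratios as quoted in the paragraph above; `pe_premium` is a hypothetical helper written for this illustration, not a function from any library.

```python
def pe_premium(stock_pe: float, benchmark_pe: float) -> float:
    """Percentage premium of one forward P/E multiple over another."""
    return (stock_pe / benchmark_pe - 1.0) * 100.0


# Figures from the text: Nvidia at 25x forward earnings, the S&P 500 at 22.2x.
premium = pe_premium(25.0, 22.2)
print(f"Nvidia's forward P/E premium to the S&P 500: {premium:.1f}%")
```

It prints a premium of about 12.6%: modest, as the argument goes, for a company growing revenue far faster than the index.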

2026-02-02 16:43