Author: Denis Avetisyan

A new meta-reinforcement learning framework enables autonomous electric taxi fleets to quickly adjust to evolving charging infrastructure and maintain optimal performance.

This paper introduces GAT-PEARL, a hierarchical meta-RL approach leveraging Graph Attention Networks for efficient electric vehicle fleet management under dynamic infrastructure conditions.

The increasing dynamism of urban environments presents a key challenge to the reliable operation of autonomous electric vehicle fleets, particularly as charging infrastructure evolves. This paper, ‘Few-Shot Learning for Dynamic Operations of Automated Electric Taxi Fleets under Evolving Charging Infrastructure: A Meta-Deep Reinforcement Learning Approach’, addresses this limitation by introducing GAT-PEARL, a novel meta-reinforcement learning framework capable of rapidly adapting to changes in charging network layouts. Through the integration of graph attention networks and probabilistic embeddings, GAT-PEARL demonstrates superior performance and generalization capabilities in simulated real-world scenarios. Could this approach unlock truly resilient and efficient autonomous mobility solutions in rapidly changing urban landscapes?

Navigating the Complexities of Dynamic Fleet Management

Effective management of an electric vehicle fleet presents a unique logistical challenge: balancing passenger transportation needs against the finite capacity and accessibility of charging infrastructure. Unlike conventional fleets, which can refuel quickly at widely available stations, electric vehicles are tightly coupled to the charging network, so operational planning must account for charger locations, charging speeds, and potential queuing. This balancing act requires moving beyond simply matching vehicles to riders toward proactively anticipating charging needs alongside passenger demand. Fleet operators must therefore consider not only where a vehicle needs to go, but also when and how it will replenish its energy, turning a traditional dispatch problem into a complex, multi-faceted optimization problem.

Conventional dispatching systems, designed for static conditions, often falter when applied to electric vehicle fleets because charger availability and grid conditions fluctuate. These systems typically rely on predefined routes and schedules and fail to account for real-time changes in charger availability: a station might be unexpectedly offline, occupied, or charging at reduced speed. Network conditions, such as peak electricity demand or localized outages, add further unpredictable constraints. A vehicle dispatched on the basis of static data may therefore arrive at a non-operational charger or contribute to grid overload. Routing and scheduling must instead be recalculated continuously from live data, a capability beyond the scope of many traditional dispatching methods.

Electric vehicle fleet management faces significant hurdles when confronted with unforeseen demand surges, events that can rapidly overwhelm existing optimization strategies. These surges – triggered by factors like large-scale event dismissals or sudden weather changes – introduce volatility into charging schedules and potentially strand vehicles or delay passengers. Consequently, static routing and charging plans become ineffective, demanding a shift towards adaptive strategies. These strategies incorporate real-time data on grid load, charging station availability, and predicted demand to dynamically re-route vehicles and adjust charging times. Effective management, therefore, requires algorithms capable of rapidly assessing the impact of these surges and implementing responsive solutions, prioritizing critical services and minimizing disruption to the overall fleet operation.

A Hierarchical Control Architecture for Adaptability

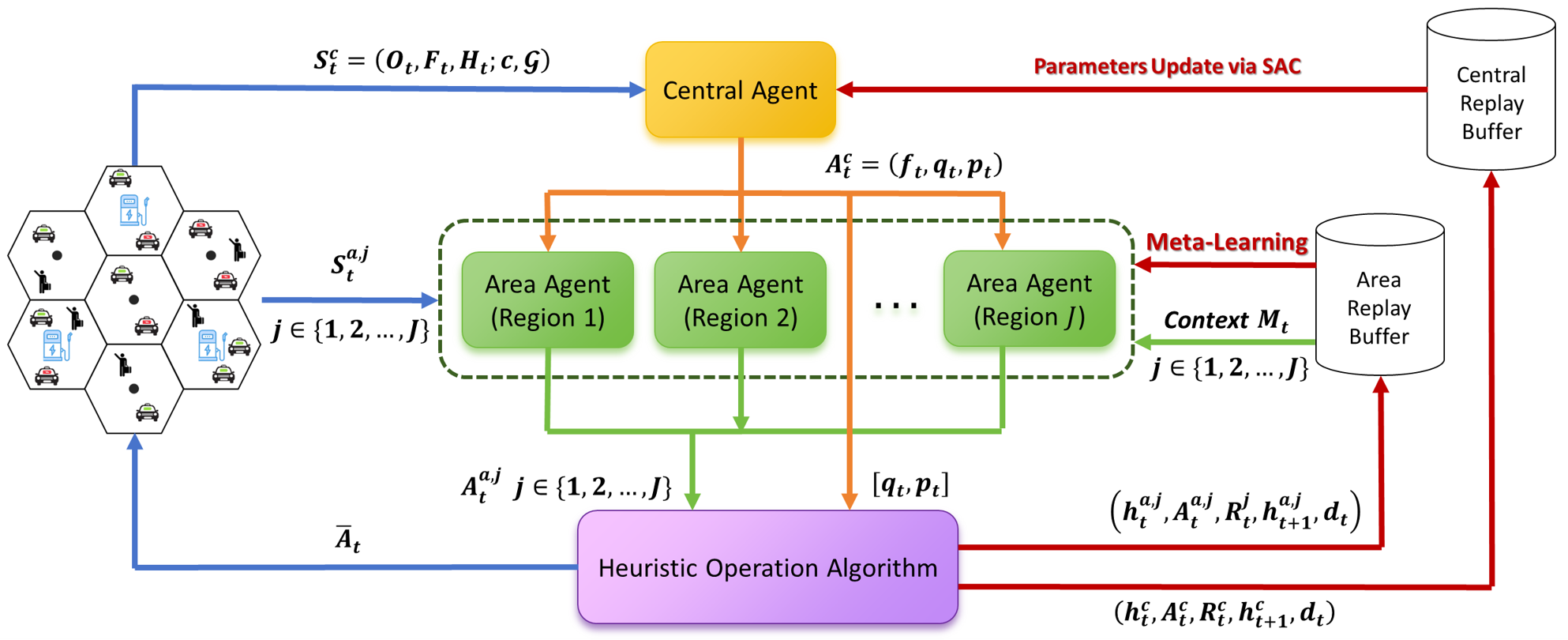

The implemented control architecture is hierarchical, functionally dividing the automated electric taxi (AET) fleet management into distinct levels of decision-making. A central agent operates at the high level, responsible for strategic allocations such as ride request acceptance and overall fleet positioning to anticipate future demand. Conversely, area agents function at the low level, managing individual vehicle actions including navigation to pick-up/drop-off locations and charging station utilization. This separation allows the central agent to focus on long-term optimization without being burdened by the complexities of real-time vehicle control, while area agents execute those high-level directives with localized awareness and responsiveness. This decomposition simplifies the problem and improves scalability for larger fleets and more complex operational environments.
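The division of labor between the two levels can be illustrated with a small sketch. The class names, the per-area "charge fraction" signal, and the lowest-charge-first rule are all hypothetical stand-ins: in the paper both levels are learned policies, whereas here fixed rules are used only to show how a strategic area-level decision flows down into per-vehicle actions.

```python
from dataclasses import dataclass


@dataclass
class Vehicle:
    vid: int
    area: str
    soc: float  # state of charge in [0, 1]


class CentralAgent:
    """High level: decides how many vehicles in each area should charge next.

    A fixed rule stands in for the learned central policy (hypothetical)."""

    def __init__(self, charge_fraction=0.25):
        self.charge_fraction = charge_fraction

    def allocate(self, fleet):
        counts = {}
        for v in fleet:
            counts[v.area] = counts.get(v.area, 0) + 1
        # Floor of (vehicles in area * fraction) vehicles are sent to charge.
        return {area: int(n * self.charge_fraction) for area, n in counts.items()}


class AreaAgent:
    """Low level: turns an area-level target into per-vehicle actions."""

    def act(self, vehicles, n_charge):
        # Lowest state of charge goes to the charger first; the rest serve riders.
        ranked = sorted(vehicles, key=lambda v: v.soc)
        return {v.vid: ("charge" if i < n_charge else "serve") for i, v in enumerate(ranked)}


def control_step(fleet, central, area_agents):
    targets = central.allocate(fleet)  # strategic decision (central agent)
    actions = {}
    for area, agent in area_agents.items():
        local = [v for v in fleet if v.area == area]
        actions.update(agent.act(local, targets.get(area, 0)))  # local decisions
    return actions


fleet = [Vehicle(0, "A", 0.9), Vehicle(1, "A", 0.2), Vehicle(2, "A", 0.5),
         Vehicle(3, "A", 0.8), Vehicle(4, "B", 0.1), Vehicle(5, "B", 0.6)]
actions = control_step(fleet, CentralAgent(0.25), {"A": AreaAgent(), "B": AreaAgent()})
```

The central agent never sees individual vehicles' routes, and the area agents never reason about fleet-wide balance; that separation is what keeps each decision problem small as the fleet grows.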

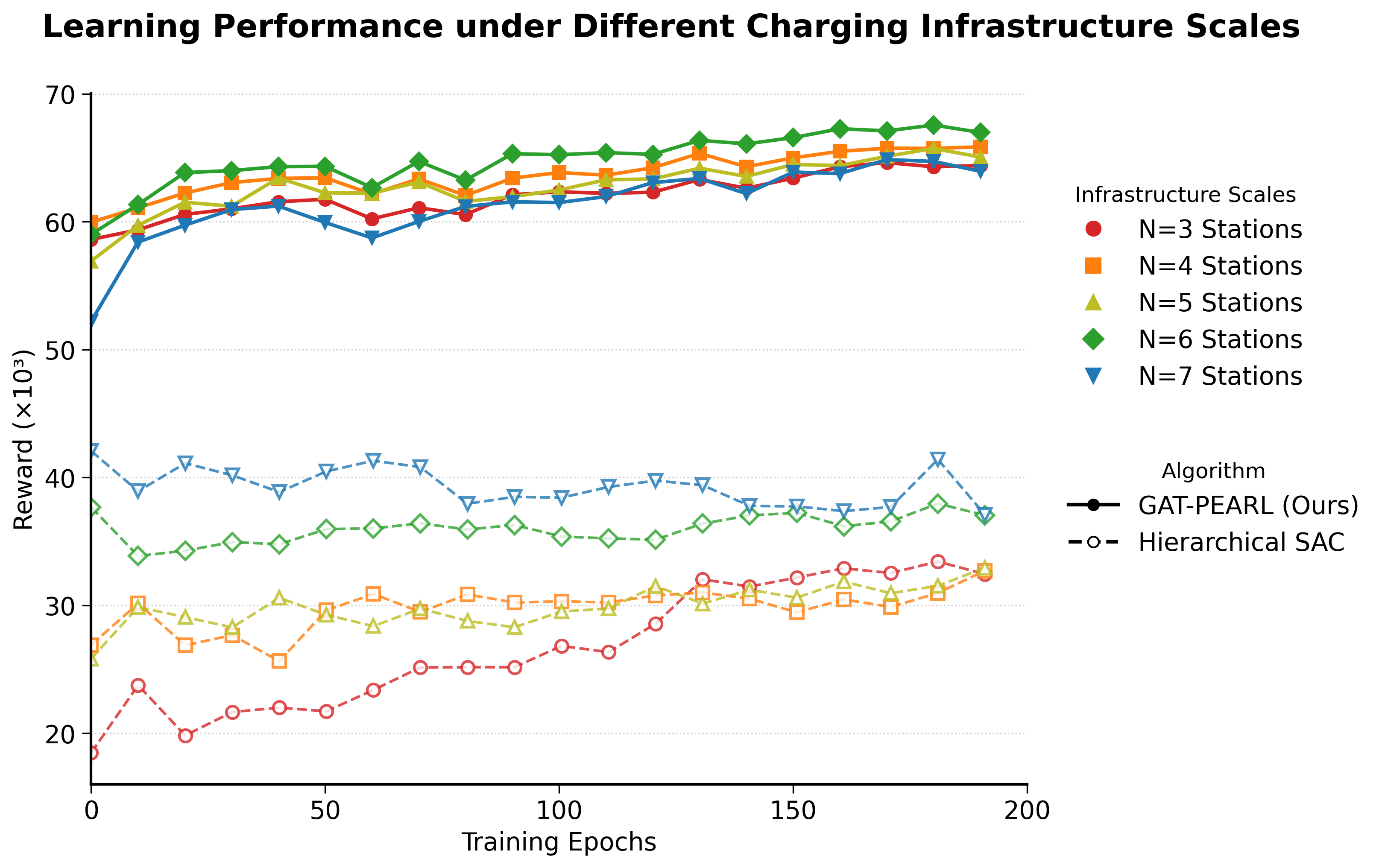

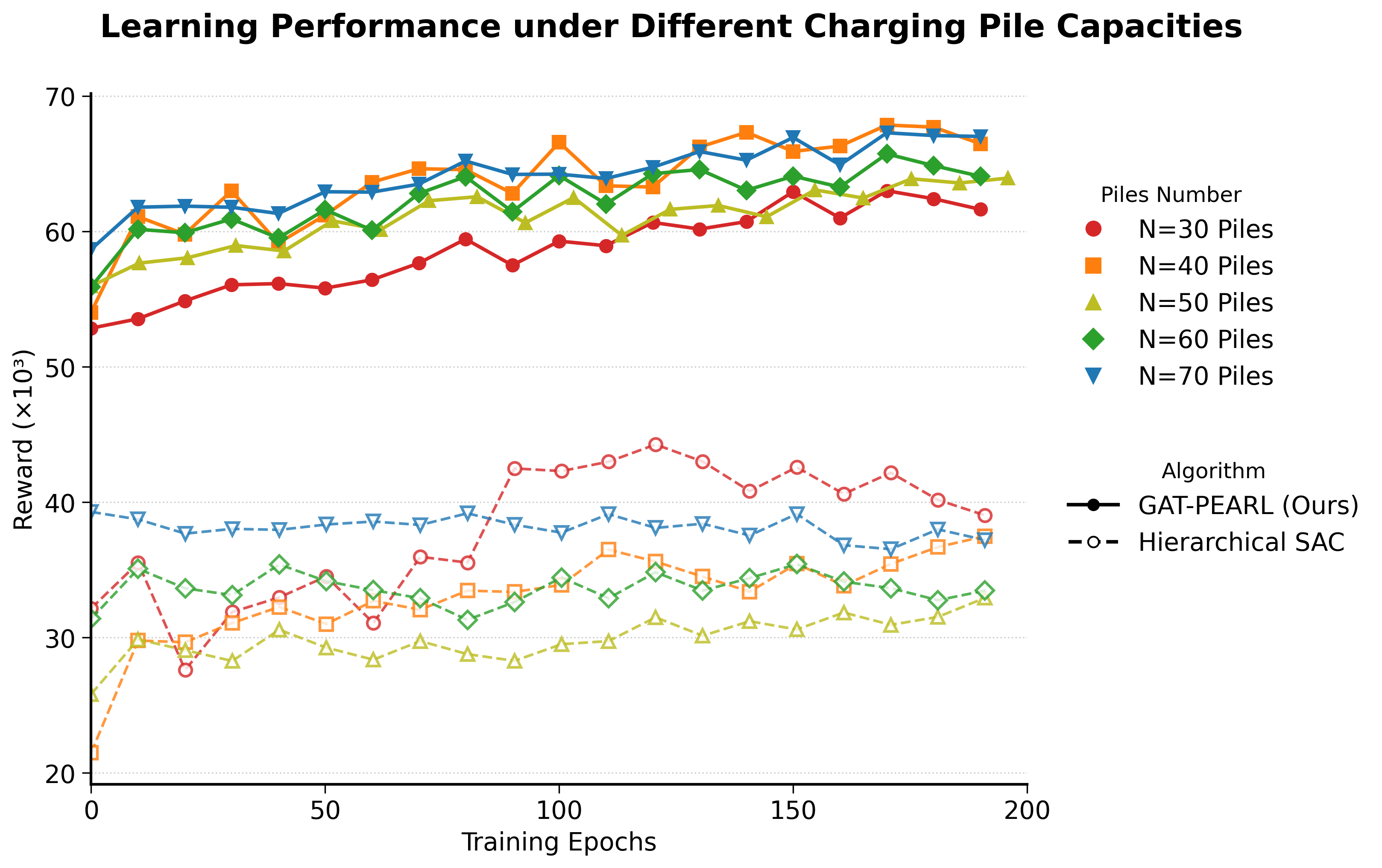

Meta-Reinforcement Learning (Meta-RL) was implemented to let the agents in the automated electric taxi (AET) system adapt rapidly to variations in charging infrastructure layouts and fluctuating demand profiles. Rather than training agents to perform well in a single environment, Meta-RL trains them to learn good policies quickly in new, unseen environments with minimal additional training. In simulations of AET fleet operations, the Meta-RL agents consistently outperformed traditional reinforcement learning baselines on metrics such as total operational cost and service-level adherence, and their performance varied significantly less across simulated scenarios. This lower variance suggests that the Meta-RL agents are more robust and reliable when faced with unpredictable real-world conditions.

The Soft Actor-Critic (SAC) algorithm was used to train both the central agent and the area agents because it learns off-policy and tends to train stably. SAC follows the maximum-entropy reinforcement learning framework, which encourages exploration and helps prevent premature convergence to suboptimal policies. Specifically, it learns a stochastic policy together with soft Q-functions (critics), all parameterized by neural networks, and trains the policy to maximize expected reward plus the entropy of its action distribution. This entropy term keeps the agent's behavior diverse, which is beneficial in complex, dynamic environments such as automated electric taxi operations. The implementation uses PyTorch's automatic differentiation to compute gradients and updates network parameters with the Adam optimizer, with hyperparameters tuned for convergence speed and overall performance within the hierarchical framework.
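The two quantities at the heart of SAC training are the critic's soft Bellman target and the policy loss. The sketch below writes them for scalars so the arithmetic is visible; a PyTorch implementation computes the same expressions on batched tensors. It uses the common clipped double-Q variant of SAC, and the default `gamma` and `alpha` values are illustrative assumptions, not the paper's tuned hyperparameters.

```python
def soft_q_target(reward, done, q1_next, q2_next, log_prob_next, gamma=0.99, alpha=0.2):
    """Entropy-regularized (soft) Bellman target for the SAC critics.

    q1_next, q2_next: target-critic values Q'(s', a') with a' sampled from pi(.|s')
    log_prob_next:    log pi(a'|s') for that same sampled action
    """
    min_q = min(q1_next, q2_next)  # clipped double-Q to curb overestimation
    soft_value = min_q - alpha * log_prob_next  # entropy bonus: a low log-prob raises the value
    return reward + gamma * (1.0 - float(done)) * soft_value


def actor_loss(q_values, log_probs, alpha=0.2):
    """SAC policy loss averaged over a batch: mean of alpha * log pi(a|s) - Q(s, a)."""
    terms = [alpha * lp - q for q, lp in zip(q_values, log_probs)]
    return sum(terms) / len(terms)


# Example: r = 1, gamma = 0.9, alpha = 0.5, critics 10 and 12, log-prob -1
target = soft_q_target(1.0, False, 10.0, 12.0, -1.0, gamma=0.9, alpha=0.5)
```

With these numbers the target is 1 + 0.9 * (10 + 0.5) = 10.45; the `0.5` comes from the entropy bonus, which is how SAC rewards the agent for keeping its action distribution spread out.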

Encoding Spatial Intelligence with Graph Attention Networks

The charging infrastructure is modeled as a graph in which charging stations are nodes and edges connect stations that are close on the road network. A Graph Attention Network (GAT) is then applied to this graph: it learns node embeddings that aggregate information from neighboring stations, weighted by attention coefficients. Because these coefficients are learned from the stations' features rather than fixed by distance alone, the network can emphasize the neighbors that matter most for routing and charging decisions. The resulting embeddings encode the spatial context of each charging station, capturing its relationship to the broader charging network and informing the policy's vehicle-routing and charging-scheduling decisions.
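A single graph attention layer can be written in a few lines of plain Python. This sketch follows the standard single-head GAT formulation (linear projection, LeakyReLU-scored pairwise attention, softmax over each node's neighborhood including itself, weighted aggregation) and omits the output nonlinearity and multi-head concatenation for clarity. The station features and the weights `W` and `a` below are hypothetical; in practice they are learned, and the paper's exact layer configuration is not specified here.

```python
import math


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def gat_layer(features, neighbors, W, a):
    """One single-head graph attention layer.

    features:  list of node feature vectors h_i (e.g. per-station status)
    neighbors: dict node -> adjacent nodes on the road graph (self-loop added here)
    W:         projection matrix (out_dim x in_dim)
    a:         attention vector of length 2 * out_dim
    """
    z = [matvec(W, h) for h in features]  # z_i = W h_i
    out, attn = [], {}
    for i in range(len(features)):
        nbrs = [i] + [j for j in neighbors.get(i, []) if j != i]
        # e_ij = LeakyReLU(a . [z_i || z_j]); list "+" concatenates the two vectors
        scores = [leaky_relu(sum(ak * v for ak, v in zip(a, z[i] + z[j]))) for j in nbrs]
        m = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        alphas = [e / total for e in exps]
        attn[i] = dict(zip(nbrs, alphas))
        # h'_i = sum_j alpha_ij z_j
        out.append([sum(al * z[j][k] for al, j in zip(alphas, nbrs)) for k in range(len(z[i]))])
    return out, attn


# Three stations on a path graph 0 - 1 - 2, with two illustrative features each
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
neighbors = {0: [1], 1: [0, 2], 2: [1]}
identity = [[1.0, 0.0], [0.0, 1.0]]
out, attn = gat_layer(features, neighbors, identity, [0.0, 0.0, 0.0, 0.0])
```

With an all-zero attention vector every neighbor gets the same weight, so the layer reduces to neighborhood averaging; a learned, non-zero `a` is what lets the network favor some neighboring stations over others.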

The Graph Attention Network (GAT) provides the reinforcement learning policy with data regarding the operational status and accessibility of charging stations beyond simple availability. The GAT propagates information between stations, allowing the policy to assess network-wide conditions such as congestion at nearby stations or the presence of alternative routes with higher probabilities of success. This contextual awareness is achieved through attention weights assigned to neighboring stations, reflecting the relevance of their status to the current station, and is incorporated as a feature vector within the policy’s state representation, enabling more informed decision-making regarding charging stop selection and route planning.
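How the graph information reaches the policy can be sketched as plain feature engineering. Both helpers below are hypothetical: one concatenates a vehicle's own features with the GAT embeddings of candidate charging stations, and the other uses a station's attention weights to summarize congestion around it. The choice of features (state of charge, time of day, queue lengths) is an illustrative assumption, not the paper's documented state definition.

```python
def neighborhood_congestion(attn_row, queue_lengths):
    """Attention-weighted average queue length around one station (itself included).

    attn_row: dict neighbor -> attention weight (weights sum to 1)
    """
    return sum(w * queue_lengths[j] for j, w in attn_row.items())


def build_state(vehicle_features, station_embeddings, candidate_stations):
    """Concatenate vehicle-level features with the embeddings of candidate stations."""
    state = list(vehicle_features)
    for s in candidate_stations:
        state.extend(station_embeddings[s])
    return state


# Example: a vehicle at 40% charge at mid-day, considering stations 0 and 3
state = build_state([0.4, 0.5], {0: [0.1, 0.2], 3: [0.3, 0.4]}, [0, 3])
congestion = neighborhood_congestion({0: 0.5, 1: 0.25, 2: 0.25}, [2, 4, 0])
```

Here `state` is `[0.4, 0.5, 0.1, 0.2, 0.3, 0.4]`, and the congestion summary is 0.5 * 2 + 0.25 * 4 + 0.25 * 0 = 2.0, a single number that reflects the queues at the stations the attention layer considers relevant.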

Probabilistic context inference, the component GAT-PEARL inherits from PEARL (probabilistic embeddings for actor-critic reinforcement learning), addresses uncertainty about which environment the fleet is currently operating in, for example a charging layout that has changed since training. Rather than committing to a single point estimate of the current task, a context encoder maps recently observed transitions to a probability distribution over a latent context variable, and the policy conditions on samples from that distribution. While little data has been collected, the distribution remains broad, so sampled contexts vary and the agent effectively tests alternative hypotheses about its environment; as more transitions arrive, the distribution narrows and the policy specializes to the new conditions. The system therefore does not rely on absolute certainty but instead quantifies uncertainty and incorporates it into the policy optimization process.
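In PEARL, the posterior over the latent context is built as a product of independent Gaussian factors, one per observed transition, which makes it independent of the order in which transitions arrive. The sketch below assumes GAT-PEARL keeps this design and shows only the combination step: the per-transition means and variances, which a neural encoder would normally produce, are supplied directly as hypothetical inputs.

```python
import math
import random


def product_of_gaussians(means, variances):
    """Combine Gaussian factors N(mu_n, var_n) into one posterior, per latent dimension.

    means, variances: one list per context transition, each of length latent_dim.
    Posterior precision is the sum of factor precisions; the posterior mean is
    the precision-weighted average of the factor means.
    """
    dim = len(means[0])
    post_mu, post_var = [], []
    for d in range(dim):
        precision = sum(1.0 / v[d] for v in variances)
        var = 1.0 / precision
        mu = var * sum(m[d] / v[d] for m, v in zip(means, variances))
        post_mu.append(mu)
        post_var.append(var)
    return post_mu, post_var


def sample_z(mu, var, rng):
    """Reparameterized sample z = mu + sigma * eps with eps ~ N(0, 1)."""
    return [m + math.sqrt(v) * rng.gauss(0.0, 1.0) for m, v in zip(mu, var)]


# Two transitions from a new layout, each suggesting a different context value
mu, var = product_of_gaussians([[1.0], [3.0]], [[1.0], [1.0]])
z = sample_z(mu, var, random.Random(0))
```

Two unit-variance factors centered at 1 and 3 give a posterior with mean 2.0 and variance 0.5; each additional transition adds precision, so the posterior keeps shrinking as evidence about the new layout accumulates.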

Accelerated Learning Through Meta-Update and Task Adaptation

The architecture incorporates a ‘Meta-Update’ process designed to accelerate learning on novel tasks. Rather than making only incremental adjustments within a single task, meta-training optimizes the shared parameters across many training tasks (here, different charging layouts and demand patterns) before any new task is encountered. Because these parameters already capture what the training tasks have in common, the agent starts each new task from a more advantageous position and needs far less experience to reach proficient performance. In effect, the Meta-Update injects prior knowledge, allowing the system to generalize to previously unseen scenarios and adapt to them quickly.
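The idea of pre-optimizing initial parameters can be demonstrated with Reptile, a simple first-order meta-learning algorithm, on toy one-dimensional tasks. This is a swapped-in illustration, not the paper's method: PEARL adapts at test time mainly by inferring a latent context rather than by gradient steps. Each toy task has loss (theta - c)^2 with a task-specific optimum `c`; all values are hypothetical.

```python
def inner_adapt(theta, c, steps=5, lr=0.1):
    """Plain gradient descent on the toy task loss (theta - c)^2, starting from theta."""
    phi = theta
    for _ in range(steps):
        grad = 2.0 * (phi - c)
        phi -= lr * grad
    return phi


def reptile(task_optima, meta_iters=200, meta_lr=0.1, theta=0.0):
    """Reptile meta-update: move the shared initialization toward each task's adapted weights."""
    for _ in range(meta_iters):
        for c in task_optima:
            phi = inner_adapt(theta, c)
            theta += meta_lr * (phi - theta)
    return theta


def loss(x, c):
    return (x - c) ** 2


# Meta-train on three tasks, then adapt to an unseen task with optimum 4.0
theta_meta = reptile([1.0, 3.0, 5.0])
loss_meta = loss(inner_adapt(theta_meta, 4.0), 4.0)
loss_scratch = loss(inner_adapt(0.0, 4.0), 4.0)
```

The meta-learned initialization settles near the center of the training tasks (about 3.1), so five adaptation steps on the unseen task reach a much lower loss (roughly 0.09) than the same five steps from a zero initialization (roughly 1.7).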

The system’s capacity for ‘Task Adaptation’ lets it tailor its operational policy to the specifics of each environment and to fluctuating demand. Rather than relying on a static, pre-programmed strategy, the framework adjusts its behavior based on real-time data about charging station locations and incoming service requests. This allows the fleet to adapt its charging schedules and route planning, reducing wait times and improving efficiency even in complex and unpredictable scenarios. As a result, the same trained system can operate across different urban layouts and shifting demand patterns, a degree of flexibility that fixed dispatch policies lack.

The system’s adaptability hinges on a carefully tuned ‘Hybrid Gradient Strategy’ designed to reconcile the competing needs of learning and memory retention. This approach avoids ‘catastrophic forgetting’ – the tendency of neural networks to abruptly lose previously learned information when exposed to new data – by modulating the magnitude of weight updates. Specifically, the strategy employs a combination of large gradients for rapidly acquiring new skills and smaller, stabilizing gradients to preserve knowledge from prior tasks. This balance is achieved through a dynamic weighting scheme, allowing the system to maintain performance across a diverse range of charging scenarios and demand patterns without succumbing to the instability that often plagues continuously learning agents. The result is a robust and reliable system capable of adapting to evolving conditions while retaining valuable past experiences.
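The article does not spell out the exact weighting rule of the Hybrid Gradient Strategy, so the following is only a hypothetical sketch of the general idea: blend a gradient from the new task with a gradient from replayed earlier tasks, and set the blend weight dynamically. The loss-share rule and the `floor`/`cap` bounds are assumptions chosen for illustration; the bounds guarantee that neither learning nor retention is ever switched off entirely.

```python
def hybrid_update(params, grad_new, grad_old, lr=0.01, weight_new=0.8):
    """One parameter update blending a new-task gradient with an old-task (replay) gradient.

    Hypothetical scheme: a large weight_new favors fast acquisition, while the
    remaining (1 - weight_new) share keeps pulling toward previously learned behavior.
    """
    return [p - lr * (weight_new * gn + (1.0 - weight_new) * go)
            for p, gn, go in zip(params, grad_new, grad_old)]


def dynamic_weight(new_task_loss, old_task_loss, floor=0.2, cap=0.9):
    """Weight the new-task gradient by its share of the total loss, clipped to [floor, cap]."""
    share = new_task_loss / (new_task_loss + old_task_loss + 1e-12)
    return max(floor, min(cap, share))


params = hybrid_update([1.0, 1.0], [1.0, 0.0], [0.0, 1.0], lr=0.1, weight_new=0.8)
```

In this example the first parameter, driven by the new task, moves four times further (to 0.92) than the second, driven by replay (to 0.98); when the new task is still poorly solved its loss share is large and `dynamic_weight` shifts more of the update toward it.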

Part of the system’s efficiency comes from a dedicated Heuristic Dispatching Module, which bridges strategic directives and real-world fleet operations. The module interprets high-level control signals, such as “maximize charging throughput” or “prioritize pending ride requests”, and translates them into concrete actions for each vehicle. Rather than running computationally expensive path planning for every scenario, it relies on predefined heuristics and efficient algorithms to quickly determine routes, charging schedules, and task assignments. This lets the fleet respond to changing conditions and demand with minimal delay, without being slowed by excessive processing time.
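Two heuristics of the kind such a module might use are sketched below: greedy nearest-vehicle matching for ride requests and nearest-free-station routing for vehicles sent to charge. The article does not list the module's actual heuristics, so these functions, the grid coordinates, and the Manhattan distance (a cheap proxy for road distance) are all assumptions for illustration.

```python
def manhattan(p, q):
    return abs(p[0] - q[0]) + abs(p[1] - q[1])


def greedy_dispatch(idle_vehicles, requests):
    """Assign each request, in arrival order, to the closest still-idle vehicle.

    idle_vehicles: dict vehicle_id -> (x, y); requests: list of (request_id, (x, y)).
    Ties are broken by vehicle id so the result is deterministic.
    """
    available = dict(idle_vehicles)
    assignment = {}
    for rid, origin in requests:
        if not available:
            break  # remaining requests wait for the next dispatch round
        vid = min(available, key=lambda v: (manhattan(available[v], origin), v))
        assignment[rid] = vid
        del available[vid]
    return assignment


def nearest_station(position, stations, occupied):
    """Route a vehicle sent to charge to the closest station with a free plug (or None)."""
    free = [s for s in stations if s not in occupied]
    if not free:
        return None
    return min(free, key=lambda s: (manhattan(stations[s], position), s))


assignment = greedy_dispatch({"v1": (0, 0), "v2": (5, 5)}, [("r1", (4, 4)), ("r2", (1, 0))])
station = nearest_station((0, 0), {"s1": (1, 1), "s2": (3, 0)}, {"s1"})
```

Here `r1` goes to `v2` (distance 2 versus 8) and `r2` to the remaining `v1`, while the charging vehicle skips the occupied `s1` and is routed to `s2`. Each call costs time linear in the number of candidates, which is why heuristics like these keep the low-level loop fast.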

Evaluations show that the framework consistently converges faster and exhibits significantly lower performance variance than established baseline methods. This stability translates into higher rewards across diverse scenarios. Critically, the system does not simply learn faster; it also adapts better to previously unseen charging layouts and fluctuating ride demand. These results suggest more efficient exploration of the solution space, allowing the agent to quickly identify and consistently execute effective dispatch and charging strategies under dynamic conditions, a crucial advantage for real-world deployment in complex, evolving charging networks.

The pursuit of adaptability, central to the GAT-PEARL framework, mirrors a fundamental tenet of robust system design. Grace Hopper once said, “It’s easier to ask forgiveness than it is to get permission.” This sentiment resonates with the paper’s approach to dynamic infrastructure: rather than demanding a perfect, predefined environment, the system learns to navigate changes, effectively ‘asking forgiveness’ for deviations from its initial plans and adjusting its strategy. The hierarchical meta-RL approach allows the electric taxi fleet to generalize rapidly to new charging layouts, suggesting that a system’s ability to respond to unforeseen circumstances, to learn and to forgive, is paramount to its overall resilience and operational efficiency. This framing aligns with contextual Markov Decision Processes, in which each variation of the environment is treated as a context that changes the dynamics and rewards the agent faces.

Beyond the Charging Station

The presented framework, while demonstrating adaptability to shifting infrastructural constraints, addresses only the symptoms of a larger problem. True resilience in autonomous fleet management is not simply about reacting to change but about anticipating it. The current approach, built on contextual Markov Decision Processes, implicitly assumes that the environment is stationary within each context, a questionable assumption in any truly dynamic setting. Future work must grapple with the non-stationarity of demand itself, rather than focusing solely on the geography of replenishment. A fleet that understands why charging locations shift (because of behavioral patterns, external events, or even predictive maintenance) will inherently outperform one that merely learns to navigate the consequences.

Furthermore, the reliance on graph attention networks, while effective for representing infrastructural relationships, introduces a rigidity. Infrastructure is rarely a neatly defined graph. Consider the inevitable encroachment of ad-hoc charging points – a repurposed parking space, a temporary generator. A truly elegant system would embrace such imperfections, treating them not as anomalies, but as integral components of a constantly evolving network. This necessitates a move beyond fixed graph structures, perhaps toward continuous representations that can accommodate arbitrary connectivity.

Ultimately, the pursuit of adaptability should not be mistaken for a substitute for robust design. The most resilient system is not one that can recover from any perturbation, but one that minimizes the need for recovery in the first place. This demands a holistic view: integrating predictive modeling of both demand and infrastructure, and prioritizing preventative measures over reactive adjustments. The question is not simply “how can the fleet adapt?”, but “how can the system avoid needing to adapt?”

Original article: https://arxiv.org/pdf/2601.21312.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-01 15:06