Author: Denis Avetisyan

A novel machine learning framework enables efficient modeling of diverse energy storage systems, paving the way for greater participation in power markets.

This review details a mean-field learning approach for accurate and scalable storage aggregation, leveraging convex relaxation techniques for optimal power flow and price-responsive control in modern power systems.

Coordinating a growing population of distributed energy storage devices presents a significant challenge for power system operators and market participants. This paper, ‘Mean-Field Learning for Storage Aggregation’, introduces a novel framework leveraging mean-field learning to create a computationally efficient and accurate surrogate model for large-scale storage aggregation. By representing aggregate behavior as a convex limit, the approach enables tractable population-level modeling and facilitates price-responsive control strategies. Will this methodology unlock greater flexibility and profitability in future power systems through improved integration of distributed energy resources?

The Inevitable Complexity of Distributed Dreams

The integration of renewable energy sources, while crucial for a sustainable future, fundamentally challenges the operational paradigm of modern power grids. Unlike traditional, centralized generation, solar and wind power are inherently intermittent and geographically dispersed, necessitating a more flexible and responsive grid infrastructure. However, realizing this flexibility through the aggregation of distributed energy resources – such as residential batteries, electric vehicles, and small-scale commercial storage – introduces considerable complexities. Coordinating these numerous, geographically fragmented assets presents difficulties in maintaining grid stability, forecasting reliable power output, and ensuring efficient resource allocation. The sheer scale and heterogeneity of these distributed resources demand innovative approaches to grid management, moving beyond the capabilities of systems designed for large, predictable power plants.

Existing energy markets were not designed to handle the granularity and variability inherent in a multitude of small-scale storage devices. Traditionally, these markets function most effectively with large, predictable generation sources; however, distributed storage – encompassing everything from residential batteries to commercial energy storage systems – presents a fundamentally different challenge. Each storage unit possesses unique charging and discharging profiles, response times, and operational preferences, creating a complex aggregate behavior that conventional market mechanisms struggle to accurately assess and value. This mismatch hinders the efficient integration of these resources, as price signals may not adequately reflect their capabilities or incentivize optimal participation, ultimately limiting the potential of distributed storage to enhance grid flexibility and reliability.

The promise of demand response – incentivizing consumers to adjust energy usage based on price signals – faces considerable hurdles when applied to distributed energy storage. Forecasting the collective behavior of numerous, individually controlled storage units is exceptionally difficult; unlike a single power plant, aggregate storage doesn’t follow predictable patterns. This complexity arises from the diversity of storage technologies, varying owner preferences, and unpredictable environmental factors influencing charging and discharging cycles. Consequently, relying solely on real-time pricing to modulate storage output introduces significant uncertainty for grid operators, potentially leading to imbalances between supply and demand. Accurate forecasting requires modeling that accounts for these granular details, which in turn demands substantial computational resources.

A Modeling Paradigm Shift: Wrangling the Heterogeneity

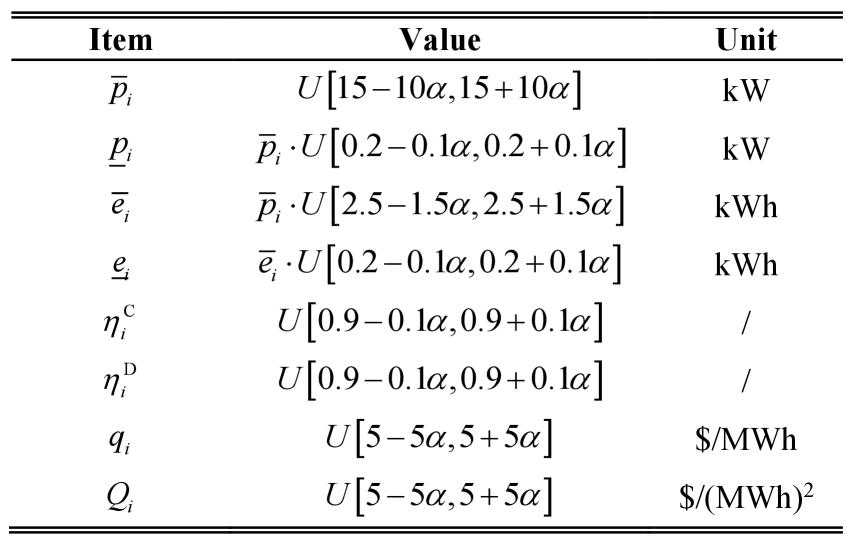

Storage aggregation, the process of combining the capabilities of multiple distributed energy resources (DERs) such as batteries, electric vehicles, and thermal storage, presents a viable method for increasing grid flexibility and enabling greater renewable energy integration. However, the inherent heterogeneity of these DERs – differing capacities, charge/discharge rates, efficiencies, and operational constraints – complicates traditional power system modeling. Accurate representation of aggregated storage requires techniques that move beyond simple summation of capacities and instead account for the interplay between individual unit characteristics and system-level constraints. This calls for modeling approaches that represent the combined flexibility while remaining computationally tractable for real-time grid operation and planning.

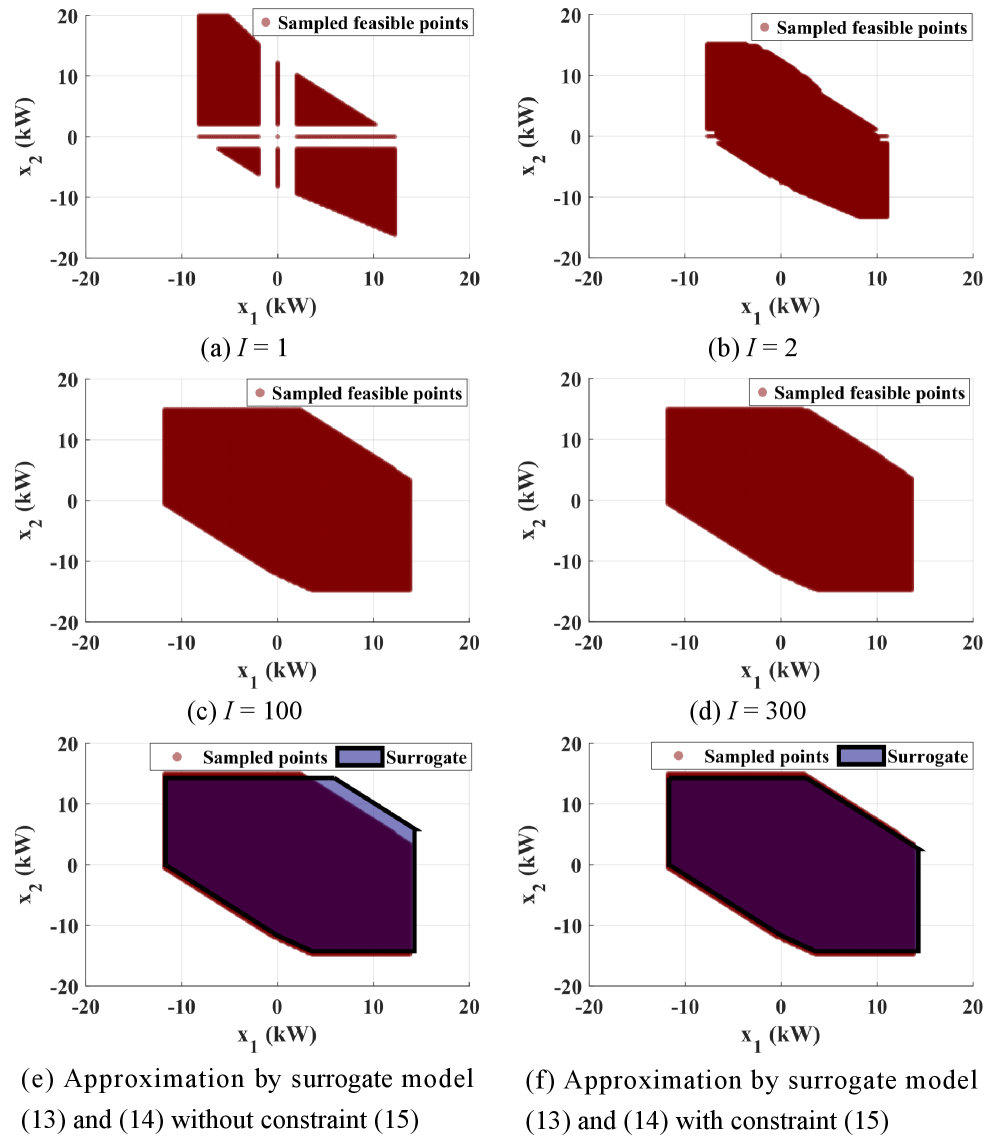

The Minkowski sum, in the context of energy storage aggregation, provides a method for determining the combined operational boundaries of multiple storage units. Specifically, it defines the set of all aggregate power profiles achievable by combining one feasible profile from each storage unit. Each unit’s feasible set already encodes its own state-of-charge (SoC) and power limits, so the aggregate is built by summing feasible profiles rather than by adding the limits themselves; formally, the aggregated flexibility set is $\mathcal{F} = \{ \sum_{i=1}^{n} p_i \mid p_i \in \mathcal{P}_i \}$, where $\mathcal{P}_i$ is the feasible power set of the $i$th storage unit and $n$ is the total number of units. The resulting $\mathcal{F}$ is the combined flexibility envelope, against which the aggregate’s ability to provide grid services and participate in energy markets can be assessed.
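To make the set-sum concrete, here is a minimal sketch for two hypothetical units over two one-hour periods. The vertex lists, the sign convention (positive means discharge), and the helper names `minkowski_sum` and `support` are assumptions made for illustration and do not come from the paper. The sketch relies on two standard facts: the Minkowski sum of polytopes is the convex hull of all pairwise vertex sums, and its support function is the sum of the individual support functions.

```python
from itertools import product

def minkowski_sum(vertex_sets):
    """Pairwise sums of vertices; their convex hull is the Minkowski sum of the polytopes."""
    return sorted({
        tuple(sum(coords) for coords in zip(*combo))
        for combo in product(*vertex_sets)
    })

def support(points, direction):
    """Largest value of the inner product <direction, p> over the given points."""
    return max(sum(p_k * d_k for p_k, d_k in zip(p, direction)) for p in points)

# Hypothetical unit A: may charge or discharge up to 1 kW in each period (a box).
unit_a = [(-1.0, -1.0), (-1.0, 1.0), (1.0, -1.0), (1.0, 1.0)]
# Hypothetical unit B: discharge only, at most 2 kWh in total across both periods (a triangle).
unit_b = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0)]

aggregate = minkowski_sum([unit_a, unit_b])

# Maximum total discharge over both periods, i.e. the support in direction (1, 1).
direction = (1.0, 1.0)
exact = support(aggregate, direction)

# Naively adding per-period power limits ignores unit B's energy coupling.
naive = (1.0 + 2.0) + (1.0 + 2.0)

print(exact, naive)  # 4.0 6.0
```

The gap between 4.0 and 6.0 is the point of the exercise: adding per-unit limits overstates what the fleet can deliver once energy constraints couple the periods. Enumerating vertex sums grows exponentially with the number of units, which is one reason surrogate models are attractive at scale.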

The aggregation of distributed energy storage resources presents a non-convex optimization problem due to the complex interactions between individual unit charging and discharging constraints, and the non-linear nature of power flow calculations within the grid. This non-convexity hinders the application of standard optimization techniques, potentially leading to locally optimal solutions or computational intractability as the number of aggregated units increases. Consequently, effective simplification strategies – such as convex relaxation, piecewise linear approximation of storage behavior, or the use of surrogate models – are required to transform the original problem into a tractable form, enabling efficient and reliable control of the aggregated storage system while maintaining acceptable solution accuracy.

Convexity as a Crutch: Forcing Order onto Chaos

Convex surrogate methods address the computational challenges posed by the inherent non-convexity of aggregated storage system behavior. These methods construct convex approximations of the true system response, allowing the use of efficient convex optimization techniques. Aggregated storage, due to factors like battery degradation, state-of-charge constraints, and power limits of individual units, often exhibits non-linear and non-convex characteristics. Direct optimization of these systems is computationally expensive, often requiring mixed-integer programming or other complex methods. Convex surrogates replace these non-convex functions with convex proxies – typically piecewise linear or second-order cone relaxations – that are mathematically tractable while maintaining a reasonable level of accuracy in representing the system’s operational envelope.

Convex optimization techniques enable the efficient determination of optimal operating strategies for aggregated storage systems by formulating the control problem as a convex program. This allows for the global optimum to be found in polynomial time using well-established algorithms such as interior-point methods. Specifically, the objective function and constraints governing system operation – including charging/discharging rates, state-of-charge limits, and response to price signals – are expressed in a convex form. This formulation guarantees that any local optimum found is also the global optimum, significantly reducing computational burden compared to non-convex optimization approaches. The efficiency gains are critical for real-time control and decision-making in dynamic market environments, enabling participation in fast-paced market-clearing processes.
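As an illustration of what such a convex program can look like, the following is a generic textbook price-arbitrage model for a single storage unit, not the formulation used in the paper. All symbols are assumptions introduced here: $\lambda_t$ is the price in period $t$, $c_t$ and $d_t$ are charging and discharging power, $e_t$ is stored energy, $\eta_c$ and $\eta_d$ are efficiencies, $\Delta$ is the period length, and $e_0$ is a given initial energy level.

$$
\begin{aligned}
\max_{c_t,\, d_t,\, e_t} \quad & \sum_{t=1}^{T} \lambda_t \,(d_t - c_t)\, \Delta \\
\text{s.t.} \quad & e_t = e_{t-1} + \eta_c\, c_t\, \Delta - \frac{d_t\, \Delta}{\eta_d}, && t = 1, \dots, T, \\
& 0 \le c_t \le \bar{c}, \quad 0 \le d_t \le \bar{d}, && t = 1, \dots, T, \\
& \underline{e} \le e_t \le \bar{e}, && t = 1, \dots, T.
\end{aligned}
$$

Every constraint is linear and so is the objective, which makes this a linear program. The non-convex part of the exact model, the complementarity condition $c_t\, d_t = 0$ that forbids charging and discharging at the same time, has been dropped. With nonnegative prices and efficiencies below one, simultaneous charging and discharging typically only wastes energy, so the relaxed problem often returns a solution that satisfies the condition anyway. That is not guaranteed in general, for example when prices turn negative.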

Effective participation in market clearing and adherence to operational limits for aggregated storage systems necessitates precise and rapid response to grid signals and regulatory constraints. Market clearing processes require accurate forecasting of storage system capabilities – including charge/discharge rates and total energy capacity – to ensure grid stability and efficient resource allocation. Simultaneously, operational limits, dictated by grid operators and regulatory bodies, define permissible operating ranges for voltage, current, and power factor. Failure to meet these limits can result in penalties or service disruptions. Consequently, optimization techniques enabling swift and reliable fulfillment of market bids and adherence to these operational boundaries are critical for maximizing revenue and maintaining grid reliability, particularly as aggregated storage capacity increases and becomes more integrated into the power system.

The efficacy of optimization strategies for aggregated storage systems is directly dependent on the fidelity of the behavioral representation used in modeling. Traditional approaches often rely on static assumptions regarding storage unit characteristics and response, which fail to capture the dynamic and interdependent nature of aggregated systems. Accurate representation requires accounting for factors such as unit-to-unit variability, state-of-charge imbalances, temperature effects, and degradation patterns. Moving beyond static models necessitates employing techniques capable of characterizing this complex, time-varying behavior, enabling more precise predictions of system response and facilitating the development of robust and efficient control algorithms. This improved modeling allows for more effective participation in market clearing and adherence to operational limits by providing a realistic assessment of system capabilities.

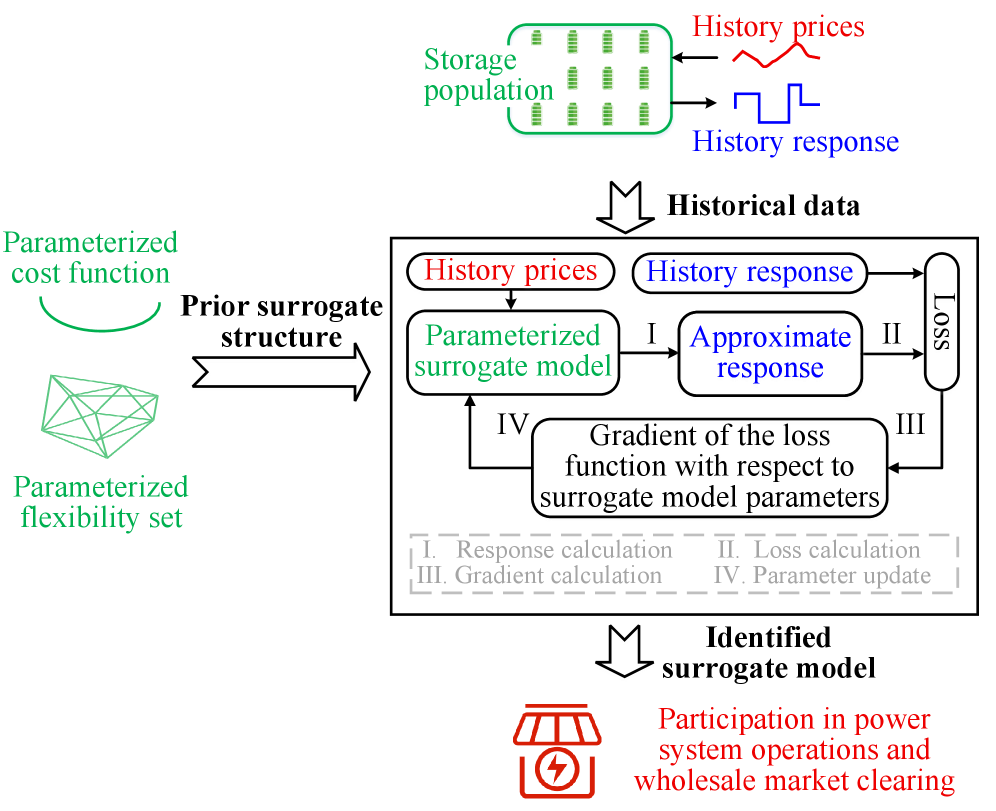

Statistical Sleight of Hand: Scaling Beyond Unit-by-Unit Accounting

Mean-field learning presents a compelling solution for analyzing the coordinated actions of a large number of distributed energy storage units, sidestepping the computational burdens typically associated with modeling each unit individually. This technique simplifies the analysis by representing the collective behavior as an interaction with an average, or ‘mean’, field, effectively reducing a high-dimensional problem into a more manageable one. Instead of tracking the state of every storage device, the model focuses on the statistical distribution of their responses, allowing for efficient prediction of aggregate system behavior. This approach not only lowers computational costs, but also enables scalable analysis for systems with hundreds or even thousands of storage assets, paving the way for smarter grid management and improved energy delivery.
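The intuition behind replacing a population with its distribution can be sketched with a toy simulation. This is not the paper’s learning algorithm. The threshold rule in `unit_response`, the uniform parameter distributions, and every function name below are hypothetical choices made so that the population limit has a closed form to compare against.

```python
import random

def unit_response(price, threshold, p_max):
    """Hypothetical single-period rule: discharge at full power once the price exceeds the threshold."""
    return p_max if price > threshold else 0.0

def average_response(price, n_units, rng):
    """Per-unit average response of n_units with randomly drawn parameters."""
    total = 0.0
    for _ in range(n_units):
        threshold = rng.uniform(20.0, 60.0)  # assumed price threshold of this unit
        p_max = rng.uniform(1.0, 5.0)        # assumed power rating of this unit, in kW
        total += unit_response(price, threshold, p_max)
    return total / n_units

def mean_field_response(price):
    """Population limit for the assumed distributions: E[p_max] * P(threshold < price)."""
    share = min(max((price - 20.0) / 40.0, 0.0), 1.0)
    return 3.0 * share

rng = random.Random(0)
price = 40.0
limit = mean_field_response(price)
for n_units in (10, 1_000, 100_000):
    avg = average_response(price, n_units, rng)
    print(n_units, round(avg, 3), round(abs(avg - limit), 3))
```

As the population grows, the per-unit average settles near the value implied by the parameter distribution alone, so the aggregate can be described without tracking individual devices. In practice the distribution is unknown and has to be learned from data, which is the harder problem the paper addresses.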

A precise understanding of how energy storage systems react to price signals is fundamental to managing a stable and efficient power grid. Researchers are increasingly using historical price-response data to build detailed statistical models of these systems, moving beyond simplified assumptions. These models aren’t simply about predicting averages; they aim to capture the full distribution of possible responses, acknowledging the inherent variability in real-world performance. To quantify the accuracy of these models, the Hausdorff distance serves as a critical metric: it measures how far the predicted flexibility set is from the actual one, taking the largest distance from any point in either set to the nearest point in the other. A smaller Hausdorff distance indicates a closer match between the model and reality, and therefore a more reliable prediction of aggregate storage behavior – essential for grid operators seeking to optimize resource allocation and maintain system resilience.
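For finite samples of two sets, the Hausdorff distance can be computed directly from its definition. The sketch below is a brute-force version on made-up points; the names `true_set` and `surrogate_set` and their coordinates are illustrative only. Comparing sampled points only approximates the distance between the full sets, and the comparison costs time proportional to the product of the two sample sizes.

```python
import math

def directed_hausdorff(a, b):
    """Largest distance from a point of a to its nearest point in b."""
    return max(min(math.dist(p, q) for q in b) for p in a)

def hausdorff(a, b):
    """Symmetric Hausdorff distance between two finite point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))

# Hypothetical samples of a true flexibility set and a surrogate that
# overestimates one corner by 0.5 in the second coordinate.
true_set = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
surrogate_set = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.5), (0.0, 1.0)]

print(hausdorff(true_set, surrogate_set))  # 0.5
```

Both directions are needed: a surrogate that is a small subset of the true set has a directed distance of zero toward it, yet may miss most of the available flexibility.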

Accurate forecasting of aggregated storage system behavior hinges on a robust statistical methodology, crucial for maintaining dependable performance across diverse operational scenarios. This approach centers on creating a learned surrogate – a simplified model – that closely mirrors the collective flexibility of numerous storage units. Validation relies heavily on the Hausdorff distance, a metric quantifying the maximum dissimilarity between the actual aggregate flexibility set and its surrogate representation; achieving a distance of less than 2Rρ(Π) demonstrates a highly accurate and reliable model. This level of precision isn’t merely academic; it directly translates to improved system stability, optimized resource allocation, and the ability to confidently predict responses to grid demands, forming the bedrock of resilient energy infrastructure.

The successful deployment of storage aggregation techniques, built upon advanced statistical modeling, directly addresses the increasing demands of modern energy regulatory frameworks, notably FERC Order No. 2222. This compliance is achieved through a system capable of accurately forecasting and managing aggregated energy storage resources, enabling participation in wholesale electricity markets. Independent evaluations demonstrate that this approach minimizes potential revenue loss, exhibiting a profit gap of no more than 6% when contrasted with alternative aggregation methodologies. This comparatively narrow margin underscores the economic viability and efficiency of the statistical modeling framework, positioning it as a crucial tool for energy providers navigating evolving market dynamics and regulatory requirements.

The pursuit of elegant solutions in power systems, as this paper details with its mean-field learning framework for storage aggregation, invariably courts future complications. The authors strive for computational efficiency and accurate modeling of heterogeneous storage, a laudable goal, yet one always shadowed by the realities of deployment. The more intricate the model, the more potential points of failure emerge when faced with the unpredictable nature of real-world energy markets. The promise of improved flexibility through aggregation is enticing, but someone, somewhere, will inevitably find an edge case that breaks the convex relaxation. It’s not a condemnation of the work, simply an acknowledgment that MVP often translates to ‘we’ll refactor this later’.

Sooner or Later, It All Breaks

This exploration of mean-field learning for storage aggregation, while elegantly sidestepping some computational hurdles, merely shifts the inevitable pain point. The assumption of statistically representative ‘fields’ feels… optimistic. Production, as always, will reveal the edge cases – the idiosyncratic battery chemistries, the unexpectedly correlated demands, the market participants who decide ‘rationality’ is boring. The reduced complexity is attractive, certainly, but the devil, predictably, will reside in the discarded terms.

The focus on price-responsive control is logical, given the current market structures. However, anticipating future revenue streams based on these models feels like building a sandcastle at high tide. Regulatory changes, unforeseen grid events, and the inherent unpredictability of human behavior will likely render even the most sophisticated algorithms… suboptimal. It’s a recurring theme: optimization for yesterday’s problems.

One suspects the real progress won’t be in the learning algorithm itself, but in the tooling that allows engineers to rapidly diagnose and patch the resulting chaos. Everything new is old again, just renamed and still broken. The pursuit of ‘flexibility’ is, after all, just a fancier way of saying ‘damage control.’

Original article: https://arxiv.org/pdf/2601.21039.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-01 06:41