Author: Denis Avetisyan

New research details a method for improving the ability of artificial intelligence to reliably identify misleading claims about sustainability in corporate reports.

A parameter-efficient framework leveraging contrastive learning and ordinal regression enhances large language models for robust greenwashing detection in sustainability reporting.

Despite increasing reliance on sustainability reports for ESG assessment, their vulnerability to greenwashing and ambiguous language undermines reliable evaluation. This challenge motivates our work, ‘Enhancing Language Models for Robust Greenwashing Detection’, which introduces a parameter-efficient framework to improve large language models’ ability to discern genuine sustainability claims from deceptive ones. By structuring latent representations via contrastive learning and ordinal regression, and stabilizing optimization with MetaGradNorm, we demonstrate superior robustness in cross-category settings. However, this improved performance reveals a critical trade-off between representational rigidity and generalization: can we further refine these methods to achieve both precision and broad applicability in detecting greenwashing?

The Illusion of Sustainability: Discerning Action from Rhetoric

The escalating importance of evaluating corporate Environmental, Social, and Governance (ESG) claims is colliding with the inherent limitations of current assessment techniques. While stakeholders increasingly demand transparency and accountability regarding sustainability efforts, existing methods frequently rely on superficial analyses of language, such as keyword spotting or simple sentiment analysis. This approach overlooks the crucial nuances embedded within corporate reporting – the carefully chosen qualifiers, conditional phrasing, and implied levels of commitment. Consequently, assessments often fail to accurately reflect the true depth of a company’s dedication to ESG principles, creating a significant gap between stated intentions and demonstrable action. The complexity arises because language is rarely straightforward; subtle variations in wording can dramatically alter the meaning and, critically, the actionability of a sustainability pledge, rendering traditional evaluation tools inadequate for discerning genuine commitment from mere ‘greenwashing.’

Current methods for evaluating corporate sustainability pledges frequently fall short of accurately reflecting genuine commitment. Analyses often rely on identifying keywords – terms like “sustainable” or “eco-friendly” – without assessing the depth or feasibility of associated actions. This superficial approach overlooks crucial nuances, such as vague timelines, undefined metrics, or a lack of concrete implementation plans, leading to an overestimation of actual progress. Consequently, investors, consumers, and policymakers are hampered in their ability to make informed decisions, potentially misallocating resources to companies that prioritize marketing over meaningful environmental or social impact. The result is a landscape where aspirational statements frequently overshadow demonstrable change, creating a barrier to true sustainability and hindering effective accountability.

Keyword-based evaluation also misses the crucial element of ‘actionability’: whether a statement translates into concrete, measurable steps. A robust framework must move beyond keyword detection and analyze the substance of pledges, assessing factors such as stated timelines, resource allocation, and clearly defined metrics for success. Without that analysis, stakeholders cannot differentiate between meaningful progress and mere ‘greenwashing’, which hinders informed investment and effective environmental stewardship. Tools that can parse linguistic complexity and identify verifiable commitments are therefore essential to fostering genuine sustainability and accountability within the corporate landscape.

Mapping Commitment to Action: A Rigorous Framework

Aspect-Action Modeling establishes a direct correlation between publicly stated Environmental, Social, and Governance (ESG) aspects and the concrete actions a corporation undertakes. This methodology moves beyond simple ESG disclosure by requiring a documented link between each reported aspect – such as carbon emissions reduction or supply chain labor standards – and specific, observable corporate activities designed to address that aspect. The framework necessitates identifying and detailing the initiatives, policies, and investments a company is making to demonstrably improve performance on each stated ESG priority, creating a traceable pathway from claim to implementation. This systematic connection allows for verification and facilitates a more accurate evaluation of a company’s commitment to sustainability.

The Actionability Continuum is a graded scale used to evaluate corporate sustainability actions based on their demonstrated commitment and specificity. Actions are categorized along this continuum, ranging from statements of policy or aspirational goals – representing low actionability – to quantifiable targets with defined timelines and allocated resources, indicating high actionability. Intermediate levels include the establishment of programs or initiatives, and the public reporting of progress against stated objectives. This framework allows for differentiation between broad commitments and concrete steps, providing a standardized method for assessing the credibility and potential impact of sustainability initiatives beyond simple declarations of intent.

Explicitly mapping Environmental, Social, and Governance (ESG) aspects to corresponding corporate actions facilitates a more granular evaluation of sustainability reporting. Traditional assessments often rely on qualitative statements of intent; however, linking stated aspects – such as carbon emissions reduction or improved labor standards – to specific, measurable actions – like investments in renewable energy or implementation of worker safety programs – allows for objective verification. This methodology moves beyond assessing whether a company addresses a given aspect to evaluating how and to what extent, enabling stakeholders to differentiate between aspirational goals and demonstrable progress. Consequently, the resulting analysis provides a more reliable basis for comparison between companies and identification of genuine sustainability leadership.
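The link between aspects, actions, and the actionability scale can be pictured as a small data structure. The Python sketch below is illustrative only: the level names in `Actionability`, the `AspectAction` class, the helper `strongest_action_per_aspect`, and the example records are all assumptions made for this illustration, loosely following the levels described above rather than any labels defined in the paper.

```python
from dataclasses import dataclass
from enum import IntEnum


class Actionability(IntEnum):
    """Hypothetical ordered levels; a higher value means a more concrete commitment."""
    ASPIRATION = 0         # policy statement or aspirational goal
    PROGRAM = 1            # established program or initiative
    REPORTED_PROGRESS = 2  # public reporting against stated objectives
    QUANTIFIED_TARGET = 3  # quantified target with timeline and resources


@dataclass(frozen=True)
class AspectAction:
    aspect: str            # the ESG aspect named in the report
    action: str            # the corporate action linked to that aspect
    level: Actionability   # where the action sits on the continuum


def strongest_action_per_aspect(pairs):
    """Return, for each aspect, the linked action with the highest level."""
    best = {}
    for pair in pairs:
        current = best.get(pair.aspect)
        if current is None or pair.level > current.level:
            best[pair.aspect] = pair
    return best


# Invented example records, for illustration only.
pairs = [
    AspectAction("carbon emissions", "we aspire to a greener future",
                 Actionability.ASPIRATION),
    AspectAction("carbon emissions", "cut emissions 30% by 2030 with a funded plan",
                 Actionability.QUANTIFIED_TARGET),
    AspectAction("labor standards", "launched a supplier audit program",
                 Actionability.PROGRAM),
]

for aspect, pair in strongest_action_per_aspect(pairs).items():
    print(aspect, "->", pair.level.name)
```

Using an `IntEnum` keeps the levels comparable, which is the property an ordinal scale needs: a quantified target ranks above a program, which ranks above an aspiration.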

Encoding Intent: Structured Representation Learning for ESG Analysis

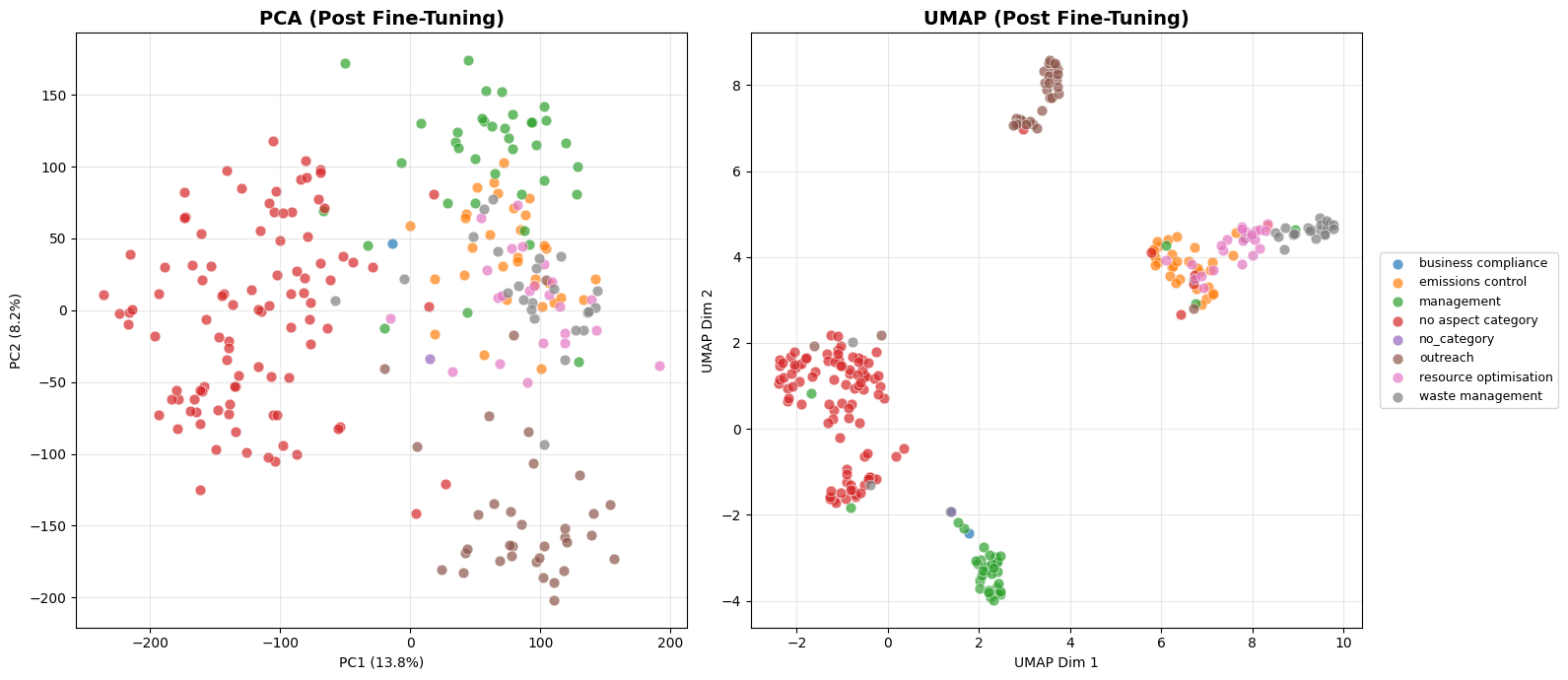

Structured Representation Learning is utilized to create organized latent spaces that capture the inherent relationships between Environmental, Social, and Governance (ESG) concepts and the actions associated with them. This methodology moves beyond treating ESG factors as isolated entities by encoding them in a manner that reflects their interconnectedness; for example, a concept like “carbon emissions” is represented in relation to actions like “investing in renewable energy” or “implementing carbon capture technologies”. This structured approach allows the model to generalize more effectively across different ESG categories and facilitates a more nuanced understanding of how actions address specific ESG concerns, ultimately improving the accuracy of predictions and recommendations.

Structured Representation Learning combines Contrastive Learning, Ordinal Ranking Loss, and Gated Feature Modulation to align latent representations with the actionability scale of ESG concepts. Contrastive Learning separates representations according to the semantic similarity of their associated actions, while Ordinal Ranking Loss enforces a consistent ordering of representations by actionability level, so that the latent space preserves the order of the levels rather than treating them as unrelated classes. Gated Feature Modulation dynamically adjusts feature contributions, prioritizing those most relevant to specific actions and further refining alignment with the actionability scale. Together, these techniques are intended to produce representations that reflect the degree to which an ESG concept translates into concrete, measurable action.
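To make the three ingredients more concrete, here is a minimal pure-Python sketch of one common form of each: a supervised, InfoNCE-style contrastive loss, a pairwise hinge ranking loss over ordinal levels, and a sigmoid gate applied per feature. These are generic textbook formulations chosen for illustration; the paper's exact loss definitions, gate design, and hyperparameters are not given in this summary, so every function name and default value here (`temperature`, `margin`) is an assumption.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def supervised_contrastive_loss(embeddings, labels, temperature=0.1):
    """InfoNCE-style loss: same-label pairs are pulled together, others pushed apart."""
    n = len(embeddings)
    total, count = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # an anchor with no positive contributes nothing
        logits = {j: cosine(embeddings[i], embeddings[j]) / temperature
                  for j in range(n) if j != i}
        log_denominator = math.log(sum(math.exp(v) for v in logits.values()))
        for j in positives:
            total += log_denominator - logits[j]
            count += 1
    return total / count if count else 0.0


def ordinal_ranking_loss(scores, levels, margin=1.0):
    """Pairwise hinge: a higher-level item should score at least `margin` above a lower one."""
    total, count = 0.0, 0
    for i in range(len(scores)):
        for j in range(len(scores)):
            if levels[i] > levels[j]:
                total += max(0.0, margin - (scores[i] - scores[j]))
                count += 1
    return total / count if count else 0.0


def gated_modulation(features, gate_logits):
    """Scale each feature by a sigmoid gate in (0, 1); the gate logits would be learned."""
    return [f / (1.0 + math.exp(-g)) for f, g in zip(features, gate_logits)]


# Toy inputs, invented for illustration.
toy_embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
print(supervised_contrastive_loss(toy_embeddings, [0, 0, 1, 1]))
print(ordinal_ranking_loss([0.0, 1.5, 3.0], [0, 1, 2]))
print(gated_modulation([2.0, 4.0], [0.0, 0.0]))
```

In training, terms like these would typically be summed with a task loss under some weighting. How the paper balances them (it mentions MetaGradNorm for stabilizing optimization) is not reproduced here.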

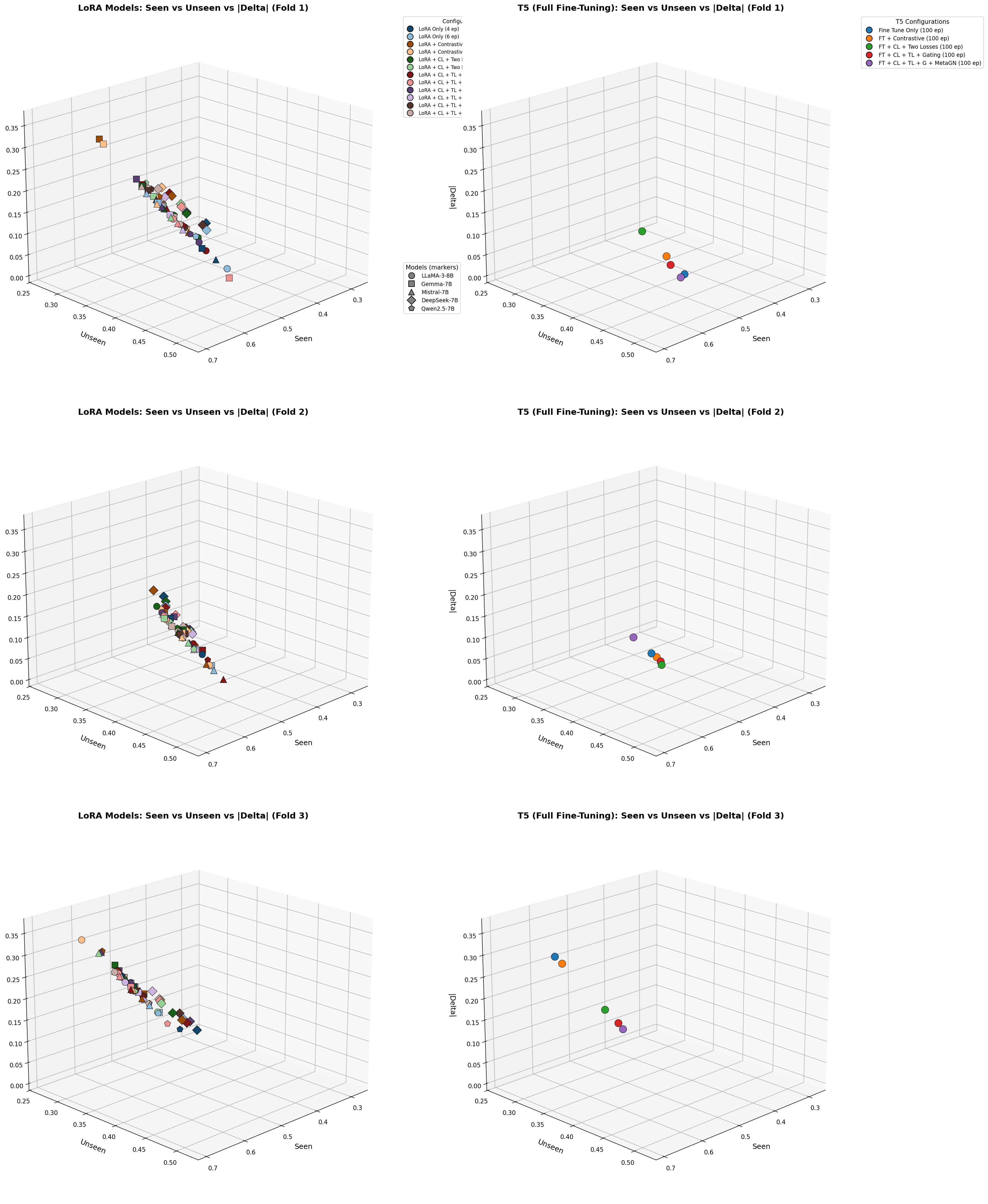

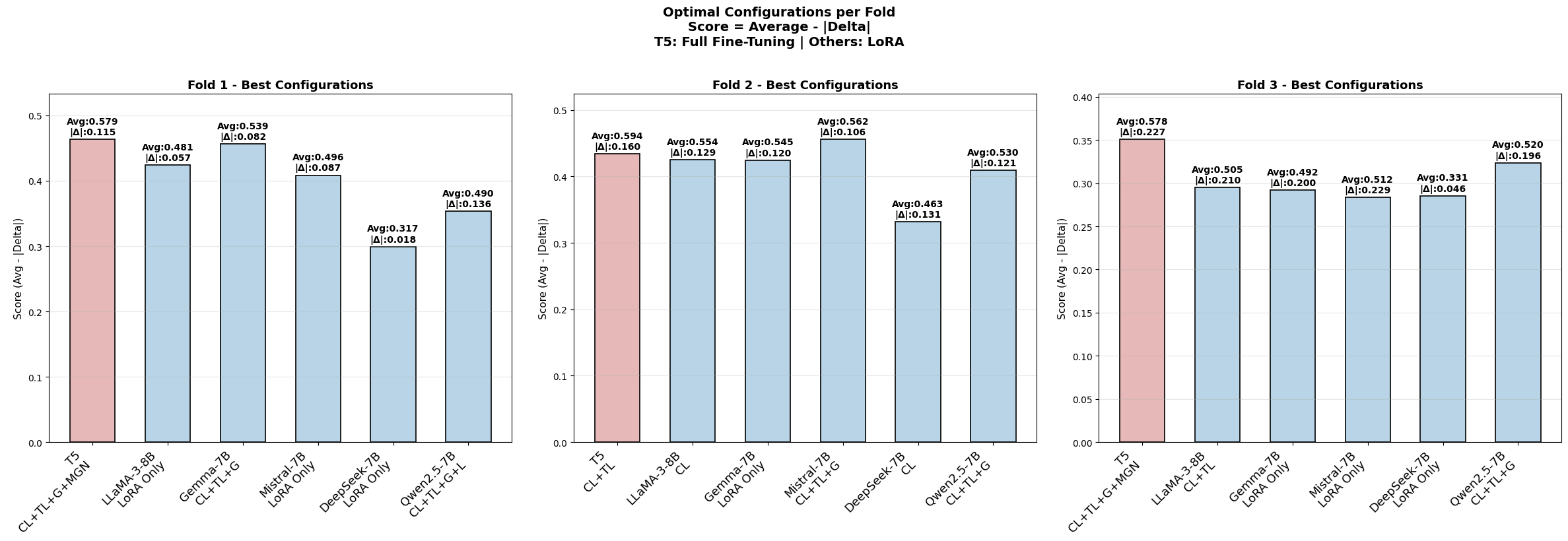

The proposed Structured Representation Learning approach demonstrates a maximum F1 score of 0.724 when evaluated on previously unseen Environmental, Social, and Governance (ESG) categories. This performance represents an improvement over both baseline models and the results achieved by prior A3CG implementations. Further optimization is achieved through the use of Low-Rank Adaptation (LoRA), a parameter-efficient fine-tuning technique which minimizes computational costs while maintaining and potentially improving model accuracy. LoRA enables adaptation to new ESG data with fewer trainable parameters, offering a practical advantage in resource-constrained environments.
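The parameter savings behind LoRA follow from simple arithmetic. In the standard formulation, a frozen weight matrix W of shape d_out × d_in is augmented with a trainable low-rank product, giving h = W x + (α / r) · B (A x), where A is r × d_in and B is d_out × r. The sketch below implements that formula in plain Python; the dimensions, rank, and `alpha` values are illustrative assumptions, not settings reported for this work.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha):
    """Compute h = W x + (alpha / r) * B (A x); W stays frozen, only A and B train."""
    r = len(A)  # the rank equals the number of rows in A
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]


def trainable_params(d_out, d_in, r):
    """Trainable parameter counts for one weight matrix: full fine-tuning vs. LoRA."""
    return {"full": d_out * d_in, "lora": r * (d_in + d_out)}


# Illustrative values only: a 2x2 identity layer with a rank-1 adapter.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]          # shape r x d_in, with r = 1
B_zero = [[0.0], [0.0]]   # B is usually initialized to zero
x = [2.0, 3.0]

print(lora_forward(W, A, B_zero, x, alpha=2.0))  # adapter has no effect while B is zero
print(trainable_params(4096, 4096, 8))
```

Because B starts at zero, the adapted layer initially behaves exactly like the frozen base layer, and training only has to learn the low-rank correction.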

Beyond Correlation: True Generalization and the Power of Efficient Models

The developed framework exhibits a notable capacity for cross-category generalization, a crucial ability for real-world applicability where models encounter data outside of their initial training scope. This capability was rigorously validated using the A3CG Dataset, a benchmark specifically designed to assess performance across diverse and previously unseen categories. Results demonstrate the framework’s ability to effectively transfer learned knowledge from familiar categories to accurately process and understand novel ones, suggesting a robust underlying representation of concepts rather than simple memorization of training data. This proficiency allows the model to maintain high accuracy and reliability even when faced with unfamiliar inputs, a significant advantage over systems limited by narrow categorical expertise.
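A cross-category evaluation of this kind can be sketched in a few lines: hold out whole categories during training, then score predictions only on the held-out ones. The record layout, the category names, and the set-based F1 below are assumptions made for illustration; the actual A3CG data format and scoring protocol may differ.

```python
def split_by_category(records, unseen_categories):
    """Separate records into seen (training) and unseen (evaluation) groups."""
    seen = [r for r in records if r["category"] not in unseen_categories]
    unseen = [r for r in records if r["category"] in unseen_categories]
    return seen, unseen


def f1_score(predicted, gold):
    """F1 between a set of predicted items and a set of gold items."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(gold)
    return 2 * precision * recall / (precision + recall)


# Invented records, for illustration only.
records = [
    {"category": "emissions", "text": "cut emissions 30% by 2030"},
    {"category": "water", "text": "we value clean water"},
    {"category": "labor", "text": "launched a supplier audit program"},
]
seen, unseen = split_by_category(records, unseen_categories={"water"})
print(len(seen), len(unseen))

# Items are (aspect, label) pairs; the label names are hypothetical.
gold = {("emissions", "high"), ("water", "low"), ("labor", "mid")}
predicted = {("emissions", "high"), ("water", "low"), ("labor", "low")}
print(f1_score(predicted, gold))
```

The important design point is that the split is made by category rather than by individual record, so no example from a held-out category can leak into training.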

The framework was tested across a range of prominent large language models to check its applicability and robustness. Evaluations incorporated T5 alongside several models in the 7B to 8B parameter range, including LLaMA-3-8B, Mistral-7B, Gemma-7B, DeepSeek-V3-7B, and Qwen2.5-7B. This selection allowed for comparative analysis, which showed consistent performance gains irrespective of the underlying language model architecture. By assessing the framework’s adaptability to various LLMs, the researchers demonstrated its potential for widespread integration and its independence from the limitations of any specific model, making it a versatile approach to cross-category generalization tasks.

The research demonstrates a compelling efficiency in natural language processing; a 7 billion parameter model achieved a 4-5% performance gain in unseen category F1 scores when benchmarked against several large language models. This improvement is particularly noteworthy as it positions the model as competitive with, and in some instances superior to, significantly larger models – those exceeding 70 billion parameters, including GPT-4o and LLaMA-3-70B. Further analysis, utilizing Silhouette Scores, reveals that this enhanced performance isn’t simply a matter of scale, but stems from a more effectively structured latent space within the smaller model, indicating a more efficient representation of information and a greater capacity for generalization to novel categories.
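For readers unfamiliar with the metric, the silhouette score of a point is (b − a) / max(a, b), where a is its mean distance to the other members of its own cluster and b is the smallest mean distance to any other cluster. Values near 1 indicate tight, well-separated clusters. The sketch below computes the mean score in plain Python with Euclidean distance; the toy points are invented, and treating single-member clusters (or a single overall cluster) as scoring 0 is a convention of this sketch.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette over all points, using Euclidean distance."""
    n = len(points)
    clusters = set(labels)
    scores = []
    for i in range(n):
        # Mean distance from point i to every cluster, excluding i itself.
        mean_dist = {}
        for c in clusters:
            members = [j for j in range(n) if labels[j] == c and j != i]
            if members:
                total = sum(math.dist(points[i], points[j]) for j in members)
                mean_dist[c] = total / len(members)
        own = labels[i]
        others = [d for c, d in mean_dist.items() if c != own]
        if own not in mean_dist or not others:
            scores.append(0.0)  # singleton cluster, or only one cluster overall
            continue
        a = mean_dist[own]   # cohesion: distance within the point's own cluster
        b = min(others)      # separation: distance to the nearest other cluster
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / n


# Toy 2-D "embeddings", invented for illustration.
toy_points = [(0.0, 0.0), (0.0, 1.0), (10.0, 10.0), (10.0, 11.0)]
print(silhouette_score(toy_points, [0, 0, 1, 1]))  # labels match the geometry
print(silhouette_score(toy_points, [0, 1, 0, 1]))  # labels cut across the geometry
```

Applied to latent representations grouped by label, a higher score means the model places same-label examples close together and different-label examples far apart.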

The pursuit of reliable greenwashing detection, as detailed in the paper, mirrors a fundamental principle of information theory. A line attributed to Claude Shannon holds that “The most important thing in communication is to convey the meaning without losing it.” This resonates with the work’s focus on structuring latent representations within large language models. The paper aims to ensure that the ‘meaning’, that is, the genuine sustainability claims, isn’t lost amid the noise of deceptive language. By employing contrastive learning and ordinal regression, the framework attempts to distill signal from noise, creating a robust system that prioritizes accurate classification in discerning truthful reporting from misleading claims.

What’s Next?

The pursuit of robust greenwashing detection, as demonstrated by this work, ultimately reveals a deeper challenge: the formalization of semantic honesty. Current large language models, even when refined with techniques like contrastive learning and ordinal regression, remain fundamentally pattern-matching engines. A model can identify inconsistencies between stated sustainability goals and reported actions, but it cannot comprehend the intent behind those statements – and therefore, cannot truly assess deception. The framework presented offers a structured approach to latent representation, yet the very notion of ‘structuring’ implies an externally imposed order onto what is, in reality, a chaotic landscape of corporate rhetoric.

Future work must move beyond superficial detection and toward a provable understanding of communicative intent. This necessitates the integration of formal logic and knowledge representation techniques – a departure from the current emphasis on scale and empirical performance. A model that can formally verify the consistency between claims and evidence, rather than simply predicting a ‘greenwashing score’, would represent a genuine advancement. The current focus on parameter-efficient fine-tuning, while pragmatic, risks optimizing for correlation rather than causation – a mathematically unsatisfying outcome.

Ultimately, the true test lies not in achieving higher accuracy on benchmark datasets, but in constructing a system capable of demonstrably correct reasoning about sustainability claims. Until then, the detection of greenwashing will remain, at best, a sophisticated game of statistical inference, and not a triumph of artificial intelligence.

Original article: https://arxiv.org/pdf/2601.21722.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-31 13:38