Author: Denis Avetisyan

A new approach watches how quickly clients learn in order to identify and neutralize those manipulating the system.

This paper introduces FL-LTD, a lightweight and privacy-preserving defense that detects malicious clients in federated learning by analyzing temporal loss dynamics.

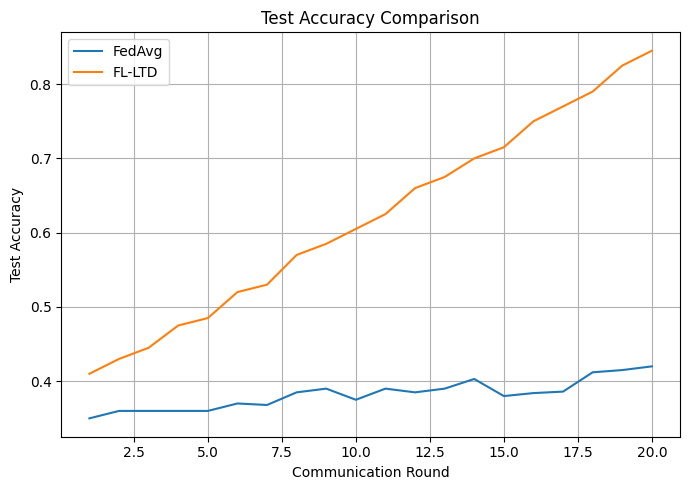

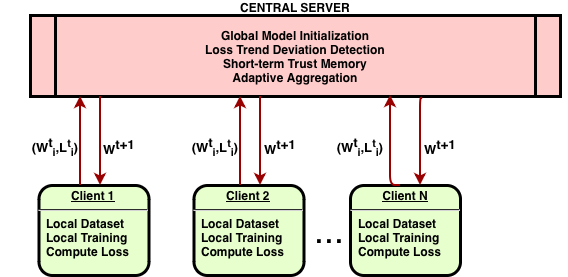

Despite the promise of collaborative learning, Federated Learning (FL) systems remain vulnerable to malicious clients capable of compromising global model performance. The paper ‘Robust Federated Learning for Malicious Clients using Loss Trend Deviation Detection’ introduces FL-LTD, a defense framework that proactively identifies and mitigates such threats by monitoring temporal loss dynamics, without inspecting model gradients or accessing sensitive data. FL-LTD delivers substantial robustness gains, reaching 0.84 accuracy under attack compared to 0.41 for standard FedAvg, while adding negligible computational overhead. Could this lightweight, loss-based monitoring approach represent a scalable path toward truly secure and reliable federated learning deployments?

The Inherent Vulnerabilities of Distributed Intelligence

Federated learning, while designed to enhance data privacy by distributing model training across numerous devices, inherently presents vulnerabilities to malicious participants. This decentralized approach, intended to circumvent the need for centralized data collection, introduces a risk: compromised clients can intentionally contribute biased or corrupted model updates. These adversarial contributions aren’t necessarily about stealing data; instead, they aim to subtly – or overtly – degrade the performance of the globally aggregated model, potentially causing misclassifications, biased predictions, or even complete model failure. The very architecture that promises privacy, therefore, becomes a potential avenue for attack, demanding robust defense mechanisms to ensure the integrity and reliability of the federated learning process.

The difficulties of securing federated learning systems are amplified by Non-IID data, a condition in which each client holds a distinct and often drastically different data distribution. Heterogeneity helps attackers in two ways. A malicious client can tailor its updates to exploit the skew and disproportionately influence the global model, and honest clients already produce widely varying updates, so intentional adversarial noise is hard to tell apart from legitimate variation. In the IID setting honest updates cluster tightly and outliers stand out; with Non-IID data, standard aggregation methods struggle to identify and mitigate these targeted manipulations. Non-IID data therefore gives attackers fertile ground for degrading model accuracy or injecting backdoors, leaving federated learning's privacy-preserving design exposed to integrity attacks unless its defenses are distribution-aware.
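Non-IID splits are commonly simulated in FL experiments by drawing each class's share per client from a Dirichlet distribution. The sketch below illustrates that general convention and is not the paper's experimental setup; `dirichlet_partition` and its `alpha` parameter are hypothetical names. Smaller `alpha` concentrates each class on fewer clients.

```python
import numpy as np


def dirichlet_partition(labels, num_clients, alpha, seed=0):
    """Split sample indices across clients with Dirichlet label skew.

    Smaller alpha concentrates each class on fewer clients (more non-IID);
    larger alpha approaches a uniform, IID-like split.
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    client_indices = [[] for _ in range(num_clients)]
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)
        rng.shuffle(idx)
        # Fraction of class c that each client receives.
        proportions = rng.dirichlet(alpha * np.ones(num_clients))
        # Cut points into the shuffled index array for this class.
        cuts = (np.cumsum(proportions)[:-1] * len(idx)).astype(int)
        for client, part in enumerate(np.split(idx, cuts)):
            client_indices[client].extend(part.tolist())
    return [np.array(sorted(ix), dtype=int) for ix in client_indices]


labels = np.repeat(np.arange(10), 100)  # 10 classes, 100 samples each
parts = dirichlet_partition(labels, num_clients=5, alpha=0.1)
for k, p in enumerate(parts):
    # Per-client label histogram; with alpha=0.1 most mass sits on a few classes.
    print(k, np.bincount(labels[p], minlength=10))
```

Every sample lands on exactly one client, but each client's label histogram looks very different, which is exactly why honest updates (and honest losses) vary so much in this regime.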

Federated learning systems are also vulnerable to a subtle but potent attack known as loss manipulation. It targets aggregation schemes in which the central server uses the training loss reported by each client (the measure of how well a model is learning) to weight or select the model updates it aggregates. Adversaries controlling a subset of clients can strategically inflate or deflate their reported loss without a corresponding change in their local model's actual performance. The distorted signal misleads the server into overweighting malicious updates or underweighting honest ones, ultimately corrupting the global model. Existing defenses, which mostly look for blatant anomalies in model parameters, struggle to catch these manipulations because the altered values fall within the range of legitimate training variation, posing a significant challenge to the robustness of federated learning deployments.
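To see why a reported loss can be weaponized, consider a hypothetical loss-aware aggregator that gives clients with lower reported loss a larger share of the average. Inverse-loss weighting is an assumption chosen for illustration; the paper's threat model may target a different scheme, and `inverse_loss_weights` and `aggregate` are illustrative names.

```python
import numpy as np


def inverse_loss_weights(reported_losses, eps=1e-8):
    """Hypothetical loss-aware weighting: lower reported loss -> larger weight."""
    inv = 1.0 / (np.asarray(reported_losses, dtype=float) + eps)
    return inv / inv.sum()


def aggregate(updates, weights):
    """Weighted average of client update vectors (one row per client)."""
    return np.average(np.asarray(updates, dtype=float), axis=0, weights=weights)


# Four honest clients plus one malicious client (last row) with a poisoned update.
honest_losses = [0.9, 1.0, 1.1, 1.0]
updates = np.array([[1.0, 1.0]] * 4 + [[-10.0, -10.0]])

# Truthful report: the attacker's loss looks like everyone else's.
w_truthful = inverse_loss_weights(honest_losses + [1.0])
# Manipulated report: the attacker claims a tiny loss to inflate its weight.
w_spoofed = inverse_loss_weights(honest_losses + [0.01])

agg_truthful = aggregate(updates, w_truthful)
agg_spoofed = aggregate(updates, w_spoofed)
print(np.round(w_truthful, 3), agg_truthful)
print(np.round(w_spoofed, 3), agg_spoofed)
```

A single fabricated number moves the attacker from roughly a fifth of the weight to nearly all of it, even though nothing about its actual update changed.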

Building Resilience Through Robust Aggregation

Robust aggregation techniques are designed to mitigate the influence of compromised or erroneous updates submitted by individual clients during distributed machine learning processes. Standard Federated Learning often relies on averaging model updates, which is vulnerable to manipulation; a single malicious client can significantly skew the global model. Robust aggregation methods, instead of simple averaging, employ algorithms that down-weight or entirely discard updates identified as outliers or exhibiting anomalous behavior. This is achieved through techniques like trimming, clipping, or utilizing more sophisticated statistical estimators less sensitive to extreme values. The goal is to converge to an accurate global model even with a subset of clients providing intentionally incorrect or unintentionally faulty contributions, thereby enhancing the overall system’s resilience and security.
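Two of the techniques named above, norm clipping and coordinate-wise trimming, fit in a few lines. This is a generic sketch, not the paper's aggregator; `clip_by_norm`, `trimmed_mean`, `max_norm`, and `trim_k` are illustrative names.

```python
import numpy as np


def clip_by_norm(updates, max_norm):
    """Scale each client's update so its L2 norm is at most max_norm."""
    updates = np.asarray(updates, dtype=float)
    norms = np.linalg.norm(updates, axis=1, keepdims=True)
    scale = np.minimum(1.0, max_norm / np.maximum(norms, 1e-12))
    return updates * scale


def trimmed_mean(updates, trim_k):
    """Coordinate-wise trimmed mean: drop the trim_k largest and smallest values per coordinate."""
    updates = np.sort(np.asarray(updates, dtype=float), axis=0)
    n = updates.shape[0]
    if 2 * trim_k >= n:
        raise ValueError("trim_k too large for the number of clients")
    return updates[trim_k:n - trim_k].mean(axis=0)


# Four similar honest updates and one extreme outlier (last row).
updates = np.array([[1.0, 2.0], [1.1, 1.9], [0.9, 2.1], [1.0, 2.0], [50.0, -50.0]])
print(updates.mean(axis=0))                     # plain mean, dragged by the outlier
print(trimmed_mean(updates, trim_k=1))          # outlier discarded per coordinate
print(clip_by_norm(updates, 3.0).mean(axis=0))  # outlier kept but bounded in magnitude
```

Trimming discards extremes outright, while clipping keeps every client but caps how far any one of them can pull the average.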

Geometric-Median Aggregation and the Variance-Minimization Approach improve on simple averaging in federated learning by limiting the influence of outliers and malicious updates. Standard averaging computes the mean of all client updates, so even a small number of corrupted contributions of large enough magnitude can distort the result significantly. Geometric-Median aggregation instead selects the point that minimizes the sum of Euclidean distances to all client updates; because each update's pull on that point is bounded no matter how extreme the update is, outliers are effectively down-weighted. The Variance-Minimization Approach aims to minimize the variance of the aggregated model, which likewise reduces the impact of divergent updates. These methods improve model robustness, particularly when a substantial proportion of clients may be compromised or suffer data corruption; simulations have shown resilience with up to 30% of clients providing adversarial updates, whereas standard averaging can be severely degraded by far fewer malicious actors.
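The geometric median has no closed form, and a standard way to approximate it is Weiszfeld's algorithm, an iteratively reweighted average in which each point's weight is the inverse of its distance to the current estimate. The sketch below is a generic implementation under that choice, not necessarily what any particular FL system uses; the small `eps` guard against division by zero is a simplification.

```python
import numpy as np


def geometric_median(points, max_iter=200, tol=1e-9, eps=1e-12):
    """Approximate the geometric median of row vectors with Weiszfeld's algorithm."""
    points = np.asarray(points, dtype=float)
    z = points.mean(axis=0)  # start from the (non-robust) mean
    for _ in range(max_iter):
        dist = np.linalg.norm(points - z, axis=1)
        w = 1.0 / np.maximum(dist, eps)  # far-away points get small weights
        z_new = (w[:, None] * points).sum(axis=0) / w.sum()
        if np.linalg.norm(z_new - z) < tol:
            return z_new
        z = z_new
    return z


# Seven honest updates near (1, 1) and three attackers (30%) at (100, 100).
honest = [[1, 1], [1.1, 0.9], [0.9, 1.1], [1, 1.2], [1.2, 1], [0.8, 1], [1, 0.8]]
attackers = [[100, 100]] * 3
updates = np.array(honest + attackers, dtype=float)

print(updates.mean(axis=0))        # pulled toward the attackers, about (30.7, 30.7)
print(geometric_median(updates))   # stays near the honest cluster
```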

Effective defense against model corruption in federated learning necessitates a combined approach extending beyond robust aggregation techniques. While methods like Geometric Median or variance minimization mitigate the impact of outliers, they do not prevent malicious clients from submitting corrupted updates. Proactive Malicious Client Detection mechanisms are crucial for identifying compromised or adversarial participants before their updates influence the global model. These detection systems typically rely on analyzing client update patterns, data consistency checks, or reputation scoring to differentiate between legitimate and malicious behavior, allowing for the isolation or exclusion of threats and thus improving the overall system resilience.

FL-LTD: A Framework for Dynamic Defense

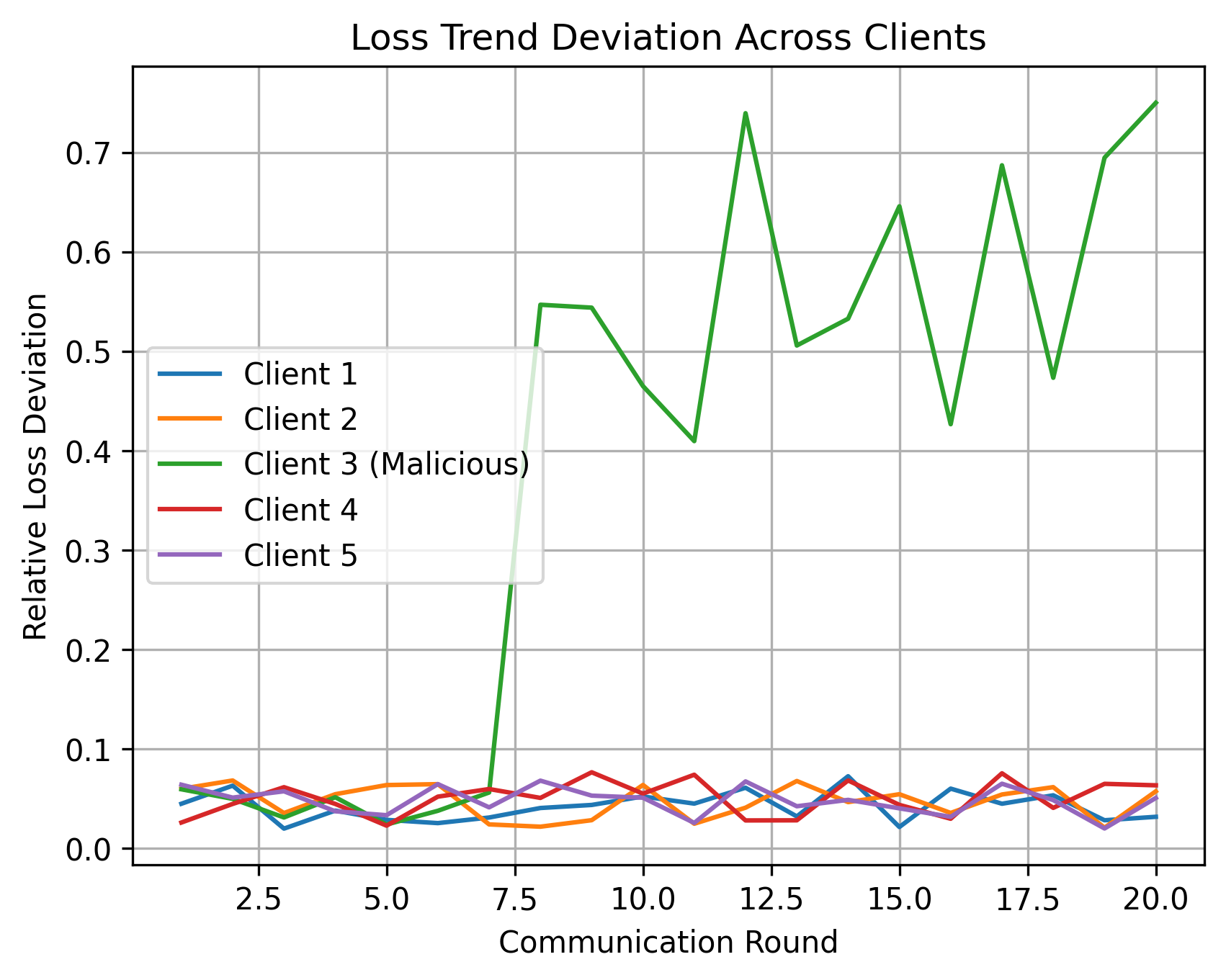

FL-LTD (Federated Learning with Loss Trend Deviation) builds its defense on the trajectory of the training loss each client reports over successive communication rounds. It quantifies how far each client's loss trend departs from the expected trend and flags clients whose trends differ significantly from the established norm as potentially malicious. Because the evidence accumulates across rounds, the method can catch attackers who manipulate their updates subtly enough to avoid immediate detection: a consistent deviation in loss trend points to compromised or malicious participation.
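The exact deviation statistic is not spelled out here, so the following is a minimal sketch under stated assumptions: a client's loss trend is taken as the least-squares slope of its reported loss over a sliding window, and deviation is a robust z-score (median and MAD) of that slope across clients. `loss_trend_slopes`, `trend_deviation_scores`, `window`, and the 3.0 threshold are all hypothetical.

```python
import numpy as np


def loss_trend_slopes(loss_history, window):
    """Least-squares slope of each client's reported loss over the last `window` rounds.

    loss_history has shape (num_clients, num_rounds).
    """
    recent = np.asarray(loss_history, dtype=float)[:, -window:]
    t = np.arange(recent.shape[1])
    # np.polyfit fits each column of a 2-D y, so transpose to one client per column.
    slope, _intercept = np.polyfit(t, recent.T, deg=1)
    return slope


def trend_deviation_scores(loss_history, window=5, eps=1e-12):
    """Robust z-score of each client's loss slope relative to the cohort median."""
    slopes = loss_trend_slopes(loss_history, window)
    med = np.median(slopes)
    mad = np.median(np.abs(slopes - med))
    # 1.4826 * MAD estimates the standard deviation under a normal model.
    return np.abs(slopes - med) / (1.4826 * mad + eps)


rounds = np.arange(10)
honest = [a * np.exp(-b * rounds)
          for a, b in [(2.0, 0.20), (2.1, 0.22), (1.9, 0.18), (2.0, 0.21), (2.05, 0.19)]]
attacker = np.full(10, 2.0)  # reports a flat loss while everyone else improves
history = np.vstack(honest + [attacker])

scores = trend_deviation_scores(history, window=5)
flagged = np.flatnonzero(scores > 3.0)  # hypothetical threshold
print(np.round(scores, 1), flagged)
```

Using the median and MAD rather than the mean and standard deviation keeps the reference trend from being dragged by the very clients it is meant to catch.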

The key is temporal analysis. Even if an attacker manages to report a loss value that looks normal in a given round, sustained alterations to the expected loss trajectory are flagged as anomalous. The system tracks each client's loss over multiple rounds and establishes a baseline of expected change; significant departures from this baseline, indicating potentially adversarial behavior, trigger further investigation or mitigation. This raises the bar for attackers: instead of faking one plausible number, they must keep their reported loss consistent with a believable learning curve across many rounds, which makes it much harder to blend malicious updates in with legitimate ones.
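A per-client baseline can be maintained incrementally. The sketch below assumes the baseline is an exponential moving average (and moving variance) of each client's round-to-round loss change, with a client flagged when its latest change falls more than `k` baseline standard deviations away; `LossTrendMonitor` and all of its parameters are hypothetical, not the paper's formulation.

```python
import numpy as np


class LossTrendMonitor:
    """Per-client running baseline of round-to-round loss change (illustrative sketch)."""

    def __init__(self, num_clients, beta=0.8, k=3.0, warmup=3, min_std=1e-3):
        self.beta = beta          # EMA smoothing factor for the baseline
        self.k = k                # threshold in baseline standard deviations
        self.warmup = warmup      # observed changes required before flagging
        self.min_std = min_std    # std floor so near-zero noise is not over-penalized
        self.prev_loss = np.full(num_clients, np.nan)
        self.mean_delta = np.zeros(num_clients)
        self.var_delta = np.zeros(num_clients)
        self.count = np.zeros(num_clients, dtype=int)

    def update(self, losses):
        """Ingest one round of reported losses and return a boolean flag per client."""
        losses = np.array(losses, dtype=float)  # copy so caller mutations do not leak in
        delta = losses - self.prev_loss         # NaN for a client's first report
        seen = ~np.isnan(delta)
        std = np.maximum(np.sqrt(self.var_delta), self.min_std)
        ready = seen & (self.count >= self.warmup)
        flags = np.zeros(losses.shape, dtype=bool)
        flags[ready] = np.abs(delta[ready] - self.mean_delta[ready]) > self.k * std[ready]
        # Only non-flagged observations update the baseline, so an attacker cannot
        # drag its own baseline toward the manipulated trajectory.
        upd = seen & ~flags
        first = upd & (self.count == 0)
        self.mean_delta[first] = delta[first]   # seed with the first observed change
        rest = upd & (self.count > 0)
        d = delta[rest] - self.mean_delta[rest]
        self.mean_delta[rest] += (1 - self.beta) * d
        self.var_delta[rest] = self.beta * (self.var_delta[rest] + (1 - self.beta) * d ** 2)
        self.count[upd] += 1
        self.prev_loss = losses
        return flags


monitor = LossTrendMonitor(num_clients=3)
history_flags = []
for r in range(10):
    c0 = 2.0 - 0.1 * r
    c1 = 2.5 - 0.12 * r
    # Client 2 follows a normal curve, then from round 7 reports an implausibly low loss.
    c2 = 2.0 - 0.1 * r if r < 7 else 0.05
    history_flags.append(monitor.update([c0, c1, c2]))
```

Client 2 is flagged at round 7 and stays flagged afterward, because its frozen baseline still expects a steady decline that the manipulated reports no longer show.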

The FL-LTD framework demonstrably enhances federated learning robustness against loss manipulation attacks, achieving over twice the final test accuracy of standard Federated Averaging (FedAvg) under such conditions. This improvement is facilitated by Adaptive Down-weighting, a mechanism that reduces the contribution of clients identified as malicious based on Loss Trend Deviation. During the model aggregation phase, the influence of these clients is proportionally decreased, mitigating the impact of potentially corrupted updates and preserving the integrity of the global model. This approach effectively isolates and minimizes the damage caused by adversarial participants without requiring prior knowledge of attack strategies or client identities.
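One way to realize adaptive down-weighting is to leave clients below a deviation threshold untouched and shrink everyone else's weight as their excess deviation grows. The exponential schedule, the threshold `tau`, the rate `alpha`, and the function names below are assumptions for illustration; the paper's exact weighting rule may differ. Base weights follow the usual FedAvg convention of proportionality to local sample counts.

```python
import numpy as np


def downweight(scores, base_weights, tau=3.0, alpha=1.0):
    """Shrink each client's weight as its deviation score exceeds tau, then renormalize.

    Clients with score <= tau keep their base weight; beyond tau the weight
    decays exponentially with the excess.
    """
    scores = np.asarray(scores, dtype=float)
    base = np.asarray(base_weights, dtype=float)
    factor = np.exp(-alpha * np.maximum(0.0, scores - tau))
    w = base * factor
    total = w.sum()
    if total <= 0:
        raise ValueError("all clients were fully down-weighted")
    return w / total


def robust_fedavg(updates, num_samples, scores, **kw):
    """FedAvg-style weighted mean with deviation-aware down-weighting."""
    base = np.asarray(num_samples, dtype=float)
    w = downweight(scores, base / base.sum(), **kw)
    return np.average(np.asarray(updates, dtype=float), axis=0, weights=w), w


updates = [[1.0, 1.0]] * 4 + [[-10.0, -10.0]]   # last client is malicious
num_samples = [100] * 5
scores = [0.5, 1.0, 0.8, 1.2, 12.0]              # e.g. loss-trend deviation scores
agg, w = robust_fedavg(updates, num_samples, scores)
print(np.round(w, 5), agg)
```

No client is ever hard-excluded, so a false positive only costs some influence, while a strong, persistent deviation drives a client's contribution toward zero.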

Sustaining Resilience: Memory and Preserving Privacy

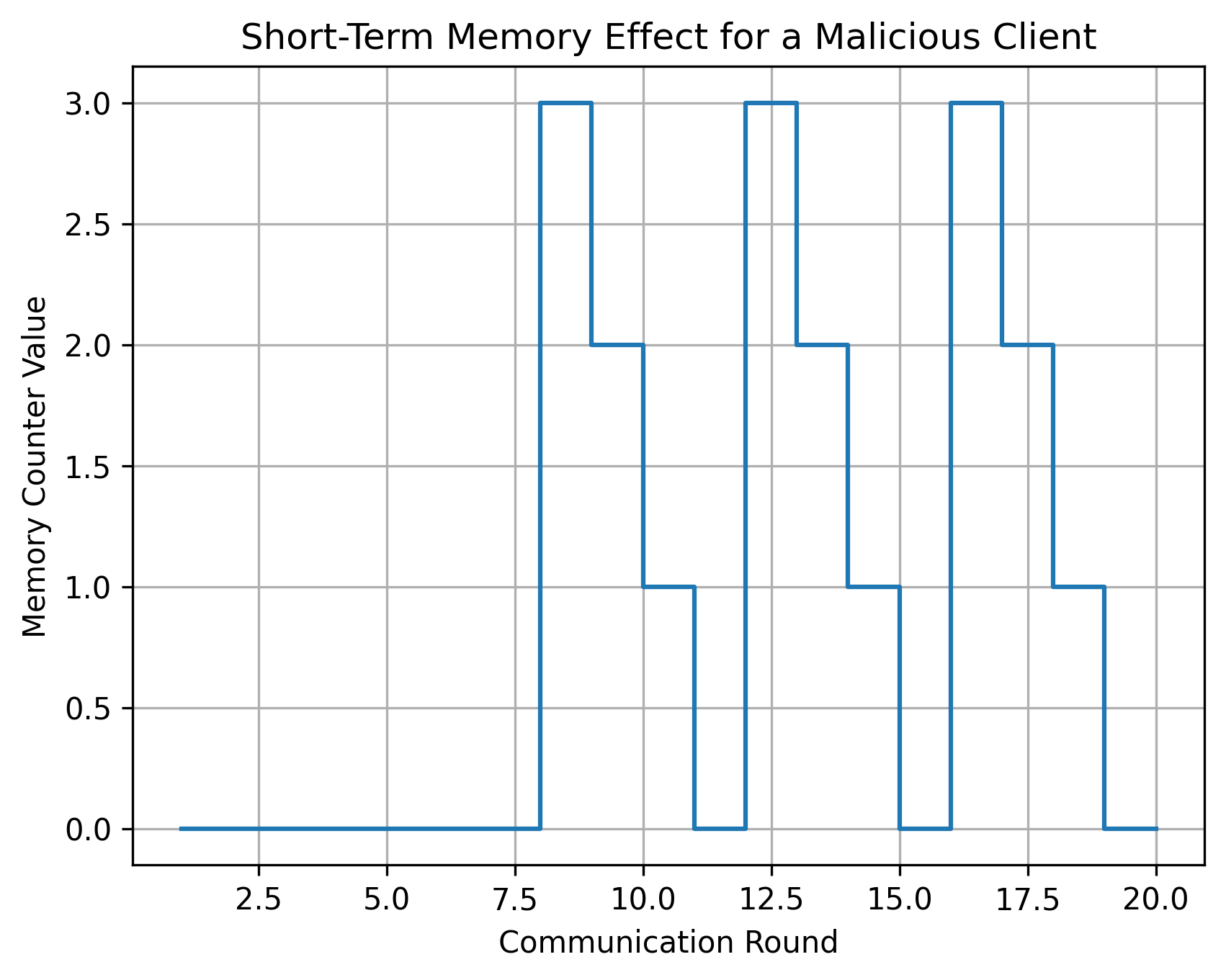

The effectiveness of FL-LTD also depends on memory-based trust persistence, which lets the system retain information about recently observed anomalous behavior. Rather than reacting to a single flagged round and then forgetting it, FL-LTD keeps a short-term memory of which clients have recently deviated, so a client that misbehaves does not regain full influence the moment it reports one normal-looking loss. This matters because many attacks are sustained campaigns rather than one-off events; remembering past anomalies lets FL-LTD keep suppressing a persistent attacker far more efficiently than a system relying solely on per-round detection, ultimately improving the resilience of the federated learning process.
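A simple way to give detection a memory is a per-client trust score that drops sharply when a client is flagged and recovers only gradually over clean rounds. The multiplicative penalty, the recovery rate, and the `TrustMemory` name are hypothetical choices for this sketch; the trust values could then scale each client's aggregation weight.

```python
import numpy as np


class TrustMemory:
    """Per-client trust that drops on a flag and recovers slowly (illustrative sketch)."""

    def __init__(self, num_clients, penalty=0.5, recovery=0.1, floor=0.0):
        self.trust = np.ones(num_clients)
        self.penalty = penalty    # multiplicative drop applied when a client is flagged
        self.recovery = recovery  # fraction of the gap to full trust regained per clean round
        self.floor = floor        # lower bound on trust

    def update(self, flags):
        """Apply one round of detection flags and return a copy of the trust vector."""
        flags = np.asarray(flags, dtype=bool)
        self.trust[flags] = np.maximum(self.floor, self.trust[flags] * self.penalty)
        clean = ~flags
        self.trust[clean] += self.recovery * (1.0 - self.trust[clean])
        return self.trust.copy()


tm = TrustMemory(num_clients=3)
tm.update([False, False, True])   # client 2 flagged: trust 1.0 -> 0.5
tm.update([False, False, True])   # flagged again: 0.5 -> 0.25
for _ in range(3):
    t = tm.update([False, False, False])
print(t)  # client 2 is still well below full trust after three clean rounds
```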

FL-LTD's threat detection does not require compromising user privacy. The system achieves this balance by integrating with Secure Aggregation techniques designed to safeguard individual client data during collaborative learning. Rather than sharing raw data, clients encrypt their model updates with Homomorphic Encryption before transmission. The central server can then compute on the encrypted data itself, for example summing or averaging the updates, and so learns from the collective contributions without ever decrypting any individual one. The result is an improved global model built without exposing raw client data, keeping sensitive information confidential while strengthening the system's resilience against evolving attacks.

Within this design, the confidentiality of individual client data rests on homomorphic encryption. Because computations can be performed directly on encrypted data, client updates (which encode learned patterns or model improvements) are never decrypted during aggregation. The encrypted updates are combined, and the resulting aggregate remains encrypted. Only after aggregation does a designated server perform a single decryption, yielding a global model update without exposing any individual client's contribution. The system thus learns from distributed data while keeping each participating device's update hidden from the server, which fosters trust and encourages broader participation in collaborative machine learning initiatives.
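Additive homomorphic encryption is what makes "combine first, decrypt once" possible. The source does not name a specific scheme, so the sketch below uses Paillier, a well-known additively homomorphic cryptosystem, with deliberately tiny primes and a hypothetical fixed-point `SCALE` for encoding real-valued updates. It shows the mechanics only and offers no real security.

```python
import math
import random

SCALE = 1000  # hypothetical fixed-point scale for quantizing real-valued updates


def keygen(p, q):
    """Toy Paillier key generation from two primes (far too small to be secure)."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    g = n + 1
    mu = pow(lam, -1, n)  # valid since g = n + 1 gives L(g^lam mod n^2) = lam mod n
    return (n, g), (lam, mu)


def encrypt(pub, m, rng=random):
    n, g = pub
    n2 = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2


def decrypt(pub, priv, c):
    n, _ = pub
    lam, mu = priv
    n2 = n * n
    L = (pow(c, lam, n2) - 1) // n
    return (L * mu) % n


def add_encrypted(pub, ciphertexts):
    """Multiplying Paillier ciphertexts adds the underlying plaintexts (mod n)."""
    n, _ = pub
    n2 = n * n
    total = 1
    for c in ciphertexts:
        total = (total * c) % n2
    return total


def encode(x, n):
    return round(x * SCALE) % n  # negative values wrap around modulo n


def decode(m, n):
    if m > n // 2:               # map the upper half of Z_n back to negatives
        m -= n
    return m / SCALE


pub, priv = keygen(1009, 1013)
n = pub[0]
rng = random.Random(0)
client_updates = [[0.125, -0.25], [-0.5, 0.5], [0.75, 0.125]]
# Each client encrypts its own update coordinate by coordinate.
enc = [[encrypt(pub, encode(v, n), rng) for v in upd] for upd in client_updates]
# Server: combine coordinate-wise without decrypting anything.
agg = [add_encrypted(pub, col) for col in zip(*enc)]
# Key holder: one decryption per coordinate of the aggregate only.
result = [decode(decrypt(pub, priv, c), n) for c in agg]
print(result)
```

The server only ever multiplies ciphertexts; the plaintext sum appears solely at the final decryption, matching the "aggregate, then decrypt once" flow described above.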

The pursuit of robust federated learning, as detailed in this study, echoes a fundamental principle of computational elegance. The FL-LTD method, with its focus on identifying malicious clients through temporal loss dynamics, points toward provable security rather than merely empirical resilience. As Alan Kay put it, “The best way to predict the future is to invent it.” The sentiment fits the researchers' proactive approach: rather than simply reacting to attacks, they design a system that detects and neutralizes them using signals intrinsic to the learning process itself. Monitoring loss trends without inspecting gradients reflects a commitment to a principled framework, and a step toward the ideal of a solution that is demonstrably correct, not just functionally effective.

What’s Next?

The presented approach, FL-LTD, represents a commendable step towards practical robustness in federated learning. However, its reliance on detecting deviations from expected loss trends, while elegant in its simplicity, skirts the fundamental question of what constitutes ‘expected’ in genuinely heterogeneous systems. The current formulation tacitly assumes a degree of client similarity that may not hold in deployments spanning diverse data distributions and device capabilities. If the baseline ‘normal’ fluctuates wildly, distinguishing malice from mere misfortune becomes, to put it mildly, problematic.

Future work should therefore focus not solely on anomaly detection, but on provable bounds on acceptable loss variation. Establishing a mathematically rigorous notion of ‘normal’ – perhaps leveraging techniques from differential privacy to quantify acceptable deviation – would elevate this from an empirical defense to a genuinely trustworthy one. The current system flags irregularities; a more ambitious solution would guarantee convergence even in the presence of adversarial actors, provided their influence remains below a quantifiable threshold.

Ultimately, if anomaly detection feels like magic, it suggests the invariant hasn’t been fully revealed. The pursuit of robust federated learning demands not merely effective heuristics, but a deeper understanding of the underlying mathematical principles governing distributed optimization. A system that operates because it works is a curiosity; one that operates because it is correct is a foundation.

Original article: https://arxiv.org/pdf/2601.20915.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-31 05:16