Author: Denis Avetisyan

A new approach uses Transformer networks to proactively identify anomalous behaviors in complex, interacting environments like autonomous driving.

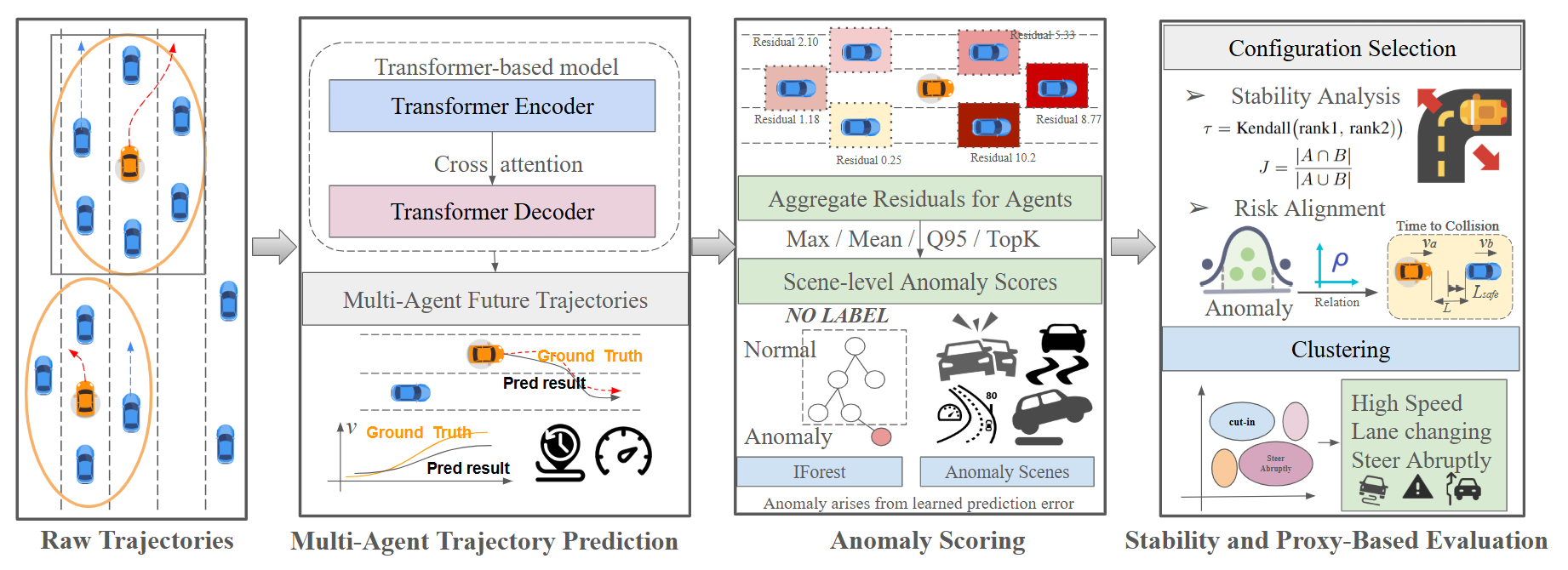

This paper introduces an unsupervised anomaly detection framework leveraging multi-agent Transformers to correlate statistical deviations with physical risk indicators in trajectory prediction.

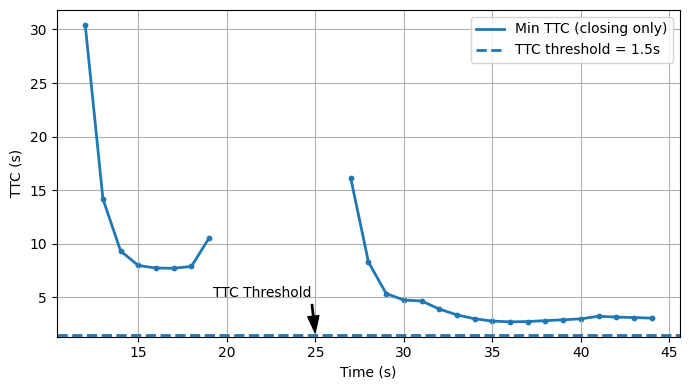

Identifying rare but critical safety risks in complex driving scenarios remains a challenge due to the impracticality of exhaustive labeled data and the limitations of simplistic risk metrics. This is addressed in ‘Unsupervised Anomaly Detection in Multi-Agent Trajectory Prediction via Transformer-Based Models’, which introduces a framework that uses multi-agent Transformer networks to detect anomalies by modeling normal driving behavior and quantifying deviations through prediction residuals. Experiments demonstrate that this approach not only achieves high stability in anomaly detection but also aligns strongly with established physical safety indicators, identifying nuanced risks, such as reactive braking during lateral drift, that conventional methods miss. Could this framework pave the way for more robust and proactive safety validation in autonomous vehicle development and testing?

Decoding the Chaos: Identifying Critical Anomalies in Naturalistic Driving

Contemporary automotive safety assessments frequently depend on meticulously designed, yet ultimately constrained, driving scenarios. These evaluations, while valuable for standardized testing, often fall short of replicating the unpredictable and nuanced conditions encountered in everyday driving. Real-world roadways present a continuous stream of unforeseen events – from sudden pedestrian movements and erratic lane changes to adverse weather conditions and poorly maintained infrastructure – complexities rarely incorporated into controlled testing environments. Consequently, a vehicle’s performance within these artificial settings may not accurately reflect its capabilities – or limitations – when confronted with the full spectrum of challenges inherent in naturalistic driving, creating a critical gap in ensuring genuine road safety and hindering the development of truly robust autonomous systems.

The pursuit of truly safe autonomous vehicles hinges on a capacity to anticipate and mitigate exceedingly rare, yet potentially catastrophic, driving scenarios. While extensive testing occurs, it often centers on predictable events, failing to adequately address the ‘long tail’ of unusual occurrences that define real-world complexity. Identifying these critical anomalies – a vehicle unexpectedly entering a roundabout, a pedestrian darting into traffic, or a sudden lane closure – demands analysis of vast datasets collected from naturalistic driving. These datasets, capturing millions of miles of everyday driving, hold the key to uncovering these infrequent events, allowing engineers to refine algorithms and enhance the robustness of autonomous systems. The ability to proactively address these anomalies, rather than reactively responding to them, represents a significant leap forward in ensuring passenger and public safety, and ultimately, fostering trust in self-driving technology.

The pursuit of enhanced vehicle safety faces a significant hurdle in the scarcity of labeled anomalous driving events; traditional supervised learning algorithms, reliant on extensive annotated datasets, falter when confronted with the unpredictable nature of real-world scenarios. Because dangerous situations are, thankfully, rare, acquiring sufficient examples for effective training proves incredibly difficult and expensive. This limitation necessitates a shift towards unsupervised methods, which can identify unusual patterns and potential hazards without requiring pre-defined labels. These techniques analyze vast streams of naturalistic driving data, seeking deviations from established norms to pinpoint potentially critical events – effectively learning what constitutes ‘unsafe’ behavior directly from the data itself, rather than relying on human-defined criteria. This approach offers a pathway to proactively improve autonomous vehicle safety by identifying and addressing previously unseen risks.

The challenge of pinpointing critical driving anomalies isn’t simply detecting unusual events, but deciphering the intricate relationships between vehicles that create those risks. A vehicle slowing unexpectedly isn’t inherently dangerous; it becomes so only when considered in relation to the proximity and actions of surrounding traffic. Researchers are finding that accurately characterizing these near-miss scenarios requires modeling not just individual vehicle behavior, but the dynamic interplay – the subtle negotiations, anticipatory adjustments, and potential conflicts – that define real-world traffic flow. This necessitates advanced techniques capable of capturing the multi-vehicle context, considering factors like relative speed, heading, and predicted trajectories to distinguish genuinely dangerous situations from benign variations in driving style. The complexity arises because a seemingly safe gap can rapidly become perilous with a minor adjustment by any involved vehicle, demanding a predictive approach that goes beyond simple reactive thresholds.

Unveiling the Hidden Order: Unsupervised Anomaly Detection for Driving Safety

Unsupervised anomaly detection techniques are utilized to identify atypical driving patterns without the need for pre-existing, manually labeled datasets. This approach circumvents the substantial costs and logistical challenges associated with creating and maintaining labeled data, which often requires expert annotators and is specific to particular driving environments. Instead, these methods learn the normal patterns of driving behavior directly from raw sensor data, such as vehicle speed, acceleration, and steering angle. Anomalies are then flagged as instances that deviate significantly from this learned baseline, offering a scalable and adaptable solution for identifying potentially unsafe or unusual driving actions.

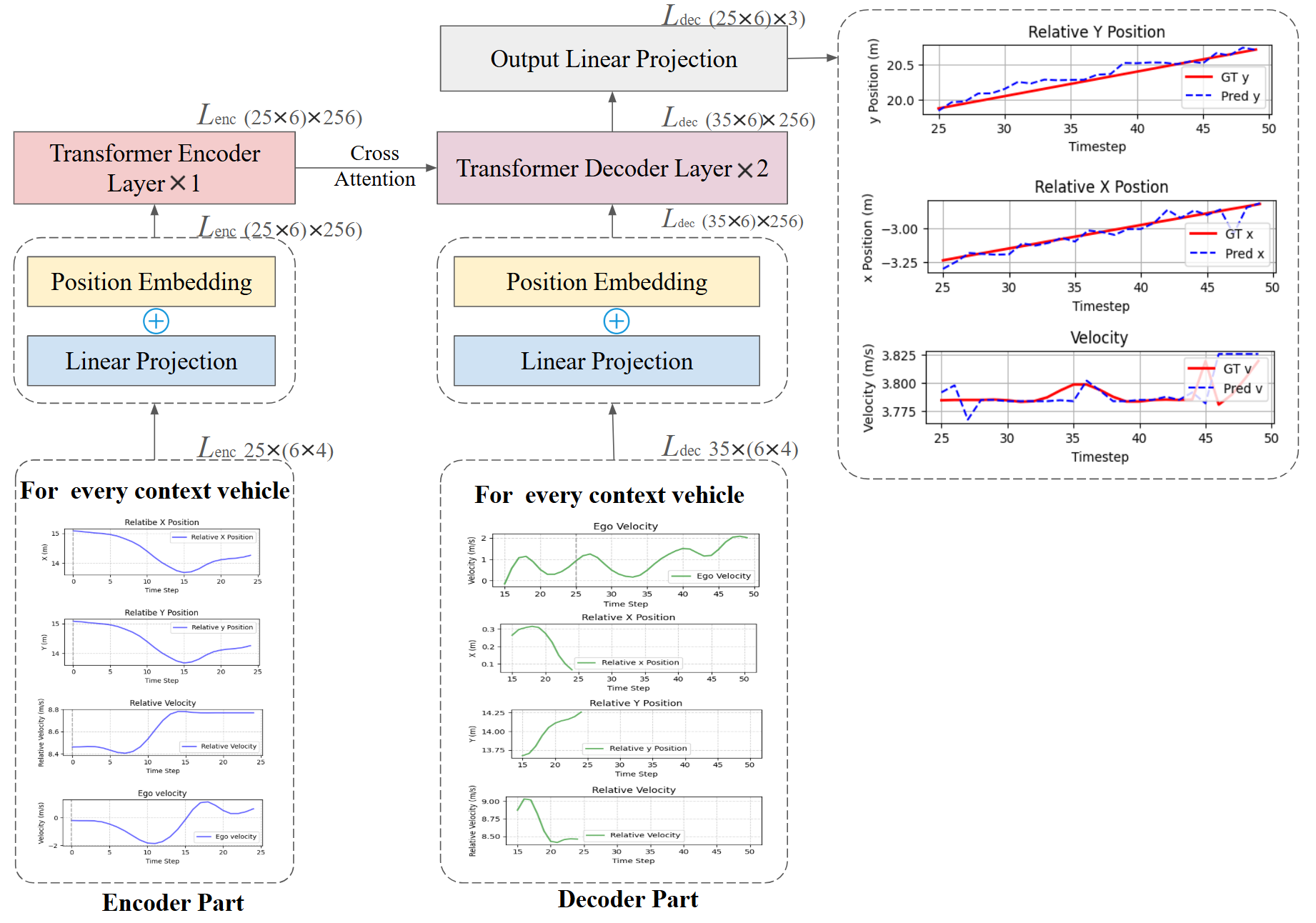

The Transformer architecture is utilized for trajectory prediction due to its capacity to model long-range dependencies in sequential data, which is crucial for anticipating vehicle movements. Unlike recurrent neural networks, which process a sequence one step at a time, Transformers employ self-attention, allowing each vehicle’s trajectory to be related to every other vehicle in the observed scene and to any earlier time step, however distant. Because attention is computed over all positions at once, training also parallelizes more readily than with sequential models. Input data consists of historical positions, velocities, and headings of surrounding vehicles, encoded as a sequence of vectors. The Transformer then outputs a probability distribution over possible future trajectories for each vehicle, enabling the system to anticipate potential hazards and deviations from expected behavior. The model is trained on large datasets of naturalistic driving data to learn complex patterns and relationships within traffic scenarios.
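
To make the attention step concrete, here is a minimal sketch of scaled dot-product self-attention across the agents in one scene. It is an illustration only, not the paper's architecture: the learned query, key, and value projections are replaced by the identity, and the three-dimensional agent encodings are hypothetical values chosen for the example.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def agent_attention(features):
    """Scaled dot-product self-attention over agents.

    Assumption: identity projections stand in for the learned
    query/key/value matrices of a real Transformer layer.
    Returns (mixed_features, attention_weights).
    """
    d = len(features[0])
    mixed, weights = [], []
    for q in features:
        # Similarity of this agent to every agent, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d)
                  for k in features]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted mix of all agents' features.
        mixed.append([sum(w[j] * features[j][c] for j in range(len(features)))
                      for c in range(d)])
    return mixed, weights

# Hypothetical per-agent encodings: [x, y, speed] in normalized units.
agents = [[0.0, 0.0, 1.0], [0.1, 0.0, 0.9], [2.0, 1.0, 0.2]]
mixed, attn = agent_attention(agents)
```

Each row of `attn` sums to one and describes how strongly one agent attends to every agent in the scene, itself included.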

Prediction residuals are calculated as the vector difference between a vehicle’s observed (ground-truth) trajectory and its predicted trajectory. These residuals, $r = y_{\text{actual}} - y_{\text{predicted}}$, quantify the deviation of a vehicle’s behavior from what the model anticipates given the preceding scene context. The magnitude and direction of these residuals are indicative of anomalous behavior; larger residuals indicate a greater divergence from expected motion. These values are computed for each time step within the prediction horizon, resulting in a time series of residuals for each vehicle, which can then be aggregated to assess the overall anomalousness of the vehicle’s behavior.
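
As a small illustration of the residual computation, the sketch below takes one observed and one predicted 2D trajectory and returns the per-step residual vectors and their magnitudes. The function names, the coordinate values, and the use of a mean as the per-vehicle summary are assumptions made for the example, not details taken from the paper.

```python
import math

def prediction_residuals(actual, predicted):
    """Per-time-step residual vectors r_t = y_actual,t - y_predicted,t."""
    if len(actual) != len(predicted):
        raise ValueError("trajectories must cover the same time steps")
    return [(ax - px, ay - py)
            for (ax, ay), (px, py) in zip(actual, predicted)]

def residual_magnitudes(residuals):
    """Euclidean norm of each residual vector."""
    return [math.hypot(rx, ry) for rx, ry in residuals]

# Hypothetical trajectories (x, y): the model expects the vehicle to
# stay in its lane, while the observed vehicle drifts laterally.
actual = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.5), (3.0, 1.5)]
predicted = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]

res = prediction_residuals(actual, predicted)
mags = residual_magnitudes(res)
mean_error = sum(mags) / len(mags)  # one possible per-vehicle summary
```

The residual series stays at zero while the vehicle follows the prediction and grows once the lateral drift begins, which is the signal the later aggregation steps build on.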

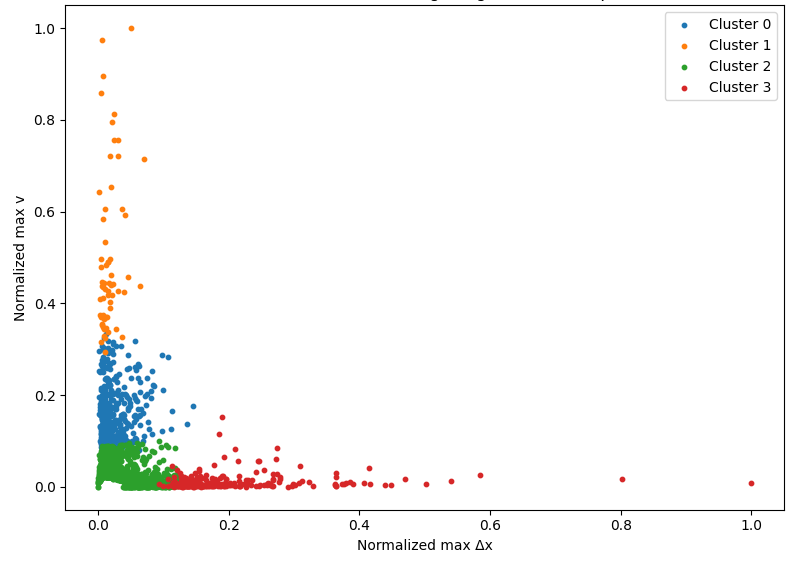

The Scene-Level Anomaly Score is computed by aggregating prediction residuals – the discrepancies between predicted and actual trajectories of surrounding vehicles – across all tracked agents within a given driving scene. This aggregation utilizes a weighted sum, where weights are determined by the relative importance of each agent based on proximity and potential impact on the ego-vehicle. The resulting score, a scalar value, represents the cumulative anomalousness of the scene; higher scores indicate a greater degree of unusual or potentially hazardous behavior exhibited by the collective of surrounding vehicles. This score allows for a holistic assessment of scene safety, moving beyond individual vehicle anomalies to capture complex interactions and emergent risks.
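
The weighted aggregation described above can be sketched as follows. The inverse-distance weighting, the `eps` smoothing term, and the example numbers are hypothetical choices used to show the idea; the source does not specify how the weights are derived.

```python
def scene_anomaly_score(agent_errors, distances_to_ego, eps=1.0):
    """Weighted sum of per-agent residual errors for one scene.

    Assumption: weights are normalized inverse distances to the ego
    vehicle, so nearby agents contribute more to the scene score.
    """
    if len(agent_errors) != len(distances_to_ego):
        raise ValueError("one distance is required per agent")
    raw = [1.0 / (d + eps) for d in distances_to_ego]
    total = sum(raw)
    weights = [w / total for w in raw]
    return sum(w * e for w, e in zip(weights, agent_errors))

# Hypothetical scene: the third agent behaves unexpectedly.
errors = [0.2, 0.3, 2.0]
score_far = scene_anomaly_score(errors, [5.0, 8.0, 40.0])
score_near = scene_anomaly_score(errors, [5.0, 8.0, 2.0])
```

With identical residuals, the scene scores higher when the unexpected agent is close to the ego vehicle (`score_near`) than when it is far away (`score_far`).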

Evidence from the Real World: Evaluating Performance on Real-World Driving Data

The Next Generation Simulation (NGSIM) Dataset served as the primary evaluation resource for this research. NGSIM comprises a collection of approximately 10 hours of high-resolution naturalistic driving data, including recordings of Interstate 80 in Emeryville, California. The dataset includes trajectories of over 3,300 vehicles, along with the corresponding vehicle states. The trajectories were extracted from footage captured by synchronized video cameras overlooking the roadway, providing a detailed record of real-world driving behavior. The dataset’s size and realistic driving scenarios make it well-suited for evaluating the performance of anomaly detection algorithms in a complex and representative environment.

The proposed anomaly detection method identified 2,832 anomalous driving scenes within the NGSIM dataset. This count is comparable to that of the Isolation Forest algorithm, which flagged 2,835 anomalous scenes on the same dataset. Further analysis revealed that the proposed method identified 388 anomalous scenes not detected by Isolation Forest, indicating complementary detection capabilities. Similar counts do not by themselves establish accuracy; what the results show is that the approach surfaces potentially hazardous driving situations beyond those identified by an established baseline.
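
The overlap implied by these three figures can be worked out directly. The short calculation below assumes both methods score the same set of scenes and that "not detected by Isolation Forest" means outside its 2,835 flagged scenes; the derived quantities are arithmetic consequences of the reported numbers, not additional results from the paper.

```python
ours_total = 2832      # scenes flagged by the Transformer-based method
iforest_total = 2835   # scenes flagged by Isolation Forest
ours_only = 388        # flagged by the Transformer-based method alone

shared = ours_total - ours_only        # flagged by both methods
iforest_only = iforest_total - shared  # flagged by Isolation Forest alone
union = ours_total + iforest_only      # flagged by at least one method
jaccard = shared / union               # overlap of the two flagged sets
```

Under these assumptions, 2,444 scenes are flagged by both methods, 391 by Isolation Forest alone, and the two flagged sets have a Jaccard overlap of about 0.76.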

Agreement between anomaly rankings was quantified using Kendall’s τ statistic, which measures the ordinal association between two rankings. The system achieved consistently high agreement, with τ exceeding 0.95 across the various aggregation operators used to consolidate individual anomaly scores. In other words, the relative ordering of anomalous scenes changes very little when the way individual scores are combined is varied, which suggests the ranking is robust to that design choice. A τ value approaching 1.0 signifies near-perfect agreement in ranking order.
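
For readers unfamiliar with the statistic, here is a minimal pure-Python sketch of Kendall's τ (the tau-a variant, which does not correct for ties) applied to scene scores produced by two aggregation operators. The two score lists are hypothetical, and the paper may use a different variant of the statistic.

```python
from itertools import combinations

def kendall_tau(scores_a, scores_b):
    """Kendall's tau-a: (concordant - discordant) / number of pairs.

    Tied pairs count as neither concordant nor discordant.
    """
    n = len(scores_a)
    if n != len(scores_b) or n < 2:
        raise ValueError("need two equally long lists with at least 2 items")
    concordant = discordant = 0
    for i, j in combinations(range(n), 2):
        sign = (scores_a[i] - scores_a[j]) * (scores_b[i] - scores_b[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    return (concordant - discordant) / (n * (n - 1) / 2)

# Hypothetical scores for five scenes under two aggregation operators.
mean_scores = [0.10, 0.40, 0.35, 0.90, 0.70]
max_scores = [0.30, 0.80, 0.90, 2.00, 1.50]
tau = kendall_tau(mean_scores, max_scores)
```

In this toy example only one of the ten scene pairs is ordered differently by the two operators, giving τ = 0.8.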

Evaluation using the Jaccard@K metric demonstrates a high degree of consistency in the top-K anomalous scene detections. Specifically, the system achieved a Jaccard@K value exceeding 0.95, indicating substantial overlap between the sets of anomalous scenes identified across different evaluation runs. The Jaccard index is the size of the intersection divided by the size of the union of the two detected scene sets, so a value of 0.95 means the shared scenes make up 95% of all scenes appearing in either set. For two top-K sets of equal size, this corresponds to roughly 97% of the scenes in one set also appearing in the other.
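
A minimal sketch of Jaccard@K, assuming each run produces a mapping from scene identifier to anomaly score; the scene identifiers and scores below are hypothetical.

```python
def top_k(scores, k):
    """Identifiers of the k highest-scoring scenes."""
    return set(sorted(scores, key=scores.get, reverse=True)[:k])

def jaccard_at_k(scores_a, scores_b, k):
    """Jaccard index between the top-k scene sets of two scorings."""
    top_a, top_b = top_k(scores_a, k), top_k(scores_b, k)
    return len(top_a & top_b) / len(top_a | top_b)

# Hypothetical anomaly scores from two runs over the same four scenes.
run_a = {"s1": 0.90, "s2": 0.80, "s3": 0.70, "s4": 0.10}
run_b = {"s1": 0.95, "s2": 0.20, "s3": 0.85, "s4": 0.60}
j = jaccard_at_k(run_a, run_b, k=3)
```

Here the two top-3 sets share two scenes out of four distinct ones, so the Jaccard index is 0.5 even though two thirds of each set reappears in the other.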

Beyond Detection: Extending the Framework Towards Proactive Safety Systems

The developed framework transcends simple anomaly detection, offering a pathway toward genuinely proactive safety systems within vehicles. By shifting from identifying deviations after they occur to anticipating and mitigating potential hazards, the system moves beyond reactive measures. This extension envisions leveraging detected anomalies not as mere alerts, but as predictive indicators informing real-time control adjustments. The architecture allows for the integration of advanced algorithms capable of forecasting evolving scenarios and preemptively enacting safety protocols, ultimately reducing the risk of collisions and enhancing overall vehicle safety through preventative action rather than damage control.

Vehicle safety can be dramatically improved by moving beyond simply detecting unusual events to actively preventing collisions. Research demonstrates that techniques like Reinforcement Learning and Monte Carlo Tree Search offer promising avenues for achieving this. These methods allow a vehicle’s control system to learn from identified anomalies – such as a pedestrian unexpectedly entering a roadway – and proactively adjust its trajectory. Reinforcement Learning trains the vehicle through trial and error, rewarding safe actions and penalizing those that lead to potential hazards. Monte Carlo Tree Search, meanwhile, efficiently explores possible future scenarios, allowing the vehicle to select the safest course of action even in complex and rapidly changing environments. By leveraging these computational approaches, vehicles can move beyond reactive responses and instead anticipate and avoid dangerous situations, significantly enhancing overall safety.

Predictive accuracy in safety-critical systems benefits significantly from acknowledging inherent uncertainty. Models such as Gaussian Processes (GP) and Kalman Filters move beyond simple point predictions by quantifying the range of possible future states. A Gaussian Process, for example, doesn’t just estimate a vehicle’s likely position; it provides a probability distribution over all possible positions, reflecting the model’s confidence – or lack thereof – in its prediction. Similarly, Kalman Filters recursively estimate the system state while accounting for both process and measurement noise, offering a statistically robust estimate alongside a covariance matrix representing prediction uncertainty. This integration of uncertainty is crucial; it allows for more cautious and reliable decision-making in control systems, enabling the vehicle to anticipate potential hazards and react accordingly, rather than relying on potentially flawed, deterministic forecasts.
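
The recursive estimate-plus-uncertainty idea behind the Kalman Filter can be shown with a scalar example. This sketch assumes a constant-state (random-walk) model with hypothetical noise variances; a filter for real vehicle tracking would use a multi-dimensional state and a motion model.

```python
def kalman_1d(measurements, process_var, measurement_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter with a constant-state (random-walk) model.

    Returns a list of (estimate, variance) pairs, one per measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state is assumed constant, uncertainty grows.
        p = p + process_var
        # Update: blend prediction and measurement by the Kalman gain.
        gain = p / (p + measurement_var)
        x = x + gain * (z - x)
        p = (1.0 - gain) * p
        estimates.append((x, p))
    return estimates

# Hypothetical noiseless readings of a quantity whose true value is 1.0.
estimates = kalman_1d([1.0] * 5, process_var=0.0, measurement_var=1.0)
final_estimate, final_variance = estimates[-1]
```

Each step returns both an estimate and its variance, and in this example the variance shrinks with every measurement as the estimate approaches 1.0.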

To rigorously evaluate and enhance the safety of autonomous systems, researchers are increasingly utilizing techniques that address the complexities of real-world driving. Dynamic Time Warping (DTW) offers a powerful solution for comparing vehicle trajectories, effectively accounting for variations in speed and timing that would confound simpler comparison methods. This allows for a more nuanced assessment of near-miss events and subtle deviations from safe behavior. Complementing this, Generative Adversarial Networks (GANs) are being employed to create synthetic, yet highly realistic, safety-critical scenarios: situations that might be rare in real-world data but are crucial for thoroughly testing the system’s response. By generating diverse and challenging simulations, GANs enable proactive identification of vulnerabilities and refinement of control algorithms, ultimately contributing to more robust and dependable autonomous vehicle operation.
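
The way DTW absorbs differences in speed and timing can be seen in a short dynamic-programming sketch. This is the textbook formulation with Euclidean point distances and no warping-window constraint; the two trajectories are hypothetical.

```python
import math

def dtw_distance(traj_a, traj_b):
    """Classic dynamic time warping cost between two point sequences."""
    n, m = len(traj_a), len(traj_b)
    inf = float("inf")
    # cost[i][j] = best alignment cost of the first i and first j points.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(traj_a[i - 1], traj_b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # repeat a point of b
                                 cost[i][j - 1],      # repeat a point of a
                                 cost[i - 1][j - 1])  # advance both
    return cost[n][m]

# Same path, but the first vehicle pauses for one time step.
slow = [(0.0, 0.0), (1.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
fast = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
same_path_cost = dtw_distance(slow, fast)
```

A step-by-step comparison would penalize the pause, whereas DTW aligns the repeated point and reports zero cost for two vehicles that trace the same path at different paces.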

The pursuit of predicting multi-agent trajectories, as detailed in the paper, inherently demands a willingness to challenge established norms. It’s a process of dissecting complex interactions to identify deviations – anomalies that signal potential risk. This echoes a line attributed to Alan Turing: “Sometimes people who are untrustworthy are dramatically more predictable than those who are trustworthy.” The framework proposed doesn’t seek perfect prediction, but rather a robust understanding of what shouldn’t happen. By modeling interactions and pinpointing statistical anomalies, the system exposes vulnerabilities in the predicted behavior of agents, much like uncovering a flaw in a complex system. The focus on unsupervised learning is a testament to the principle of reverse-engineering reality, finding order within the chaos of agent behavior without relying on predefined labels.

What Lies Ahead?

The current work demonstrates a capacity to identify statistical outliers within multi-agent trajectory data, and correlate those with rudimentary risk assessments. However, this is merely a surface scan. Reality is open source – the code governing these interactions exists, but this framework, like most, currently only deciphers fragments. The challenge isn’t simply detecting that something unusual is occurring, but why. A statistically anomalous swerve could indicate an impending collision, or merely an aggressive lane change. Disambiguation demands a deeper understanding of intention – a level of predictive modeling that moves beyond simple extrapolation of observed behavior.

Future iterations must grapple with the problem of context. This model, while adept at flagging deviations, remains largely agnostic to environmental factors – weather, road conditions, even the social norms governing driving behavior. Integrating these variables isn’t merely about adding more data; it’s about restructuring the model’s core assumptions. Perhaps a shift away from purely attention-based mechanisms towards architectures that explicitly represent causal relationships will prove fruitful.

Ultimately, the true test won’t be accuracy on benchmark datasets, but robustness in the face of genuine unpredictability. Human drivers, and indeed all complex systems, occasionally exhibit behavior that defies prediction. A truly intelligent system won’t simply flag these events as anomalies; it will treat them as opportunities to refine its understanding of the underlying code – to rewrite its assumptions and improve its ability to anticipate the unexpected.

Original article: https://arxiv.org/pdf/2601.20367.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-29 19:37