Author: Denis Avetisyan

A novel economic framework proposes leveraging market-based incentives to curb the environmental impact of increasingly powerful artificial intelligence models.

This review explores a cap-and-trade system for computational resources, aiming to improve AI efficiency, sustainability, and accessibility for researchers and smaller organizations.

The relentless pursuit of scale in artificial intelligence often overshadows the critical need for computational efficiency. This imbalance, explored in ‘AI Cap-and-Trade: Efficiency Incentives for Accessibility and Sustainability’, not only marginalizes researchers and smaller companies lacking vast resources but also exacerbates the environmental impact of increasingly complex models. The paper proposes a market-based cap-and-trade system for FLOPs (floating-point operations) as a provable mechanism to reduce computational load, lower emissions, and monetize efficiency. Could such a system fundamentally reshape AI development, fostering both sustainability and broader participation in this rapidly evolving field?

The Unsustainable Trajectory of Modern AI Development

Contemporary artificial intelligence development is largely defined by a practice known as ‘Hyper-Scaling’, in which the prevailing strategy is to keep increasing both model size and the volume of training data. This approach, while demonstrably effective at delivering incremental performance gains, requires a corresponding escalation in computational resources: each successive generation of AI models demands significantly more processing power, storage capacity, and energy. The focus on scaling, often measured in total floating-point operations (FLOPs), has inadvertently tied progress to ever-increasing demands on global infrastructure, raising critical questions about the long-term viability and sustainability of this developmental trajectory.

Current artificial intelligence development relies heavily on GPU compute, a demand typically quantified in floating-point operations (FLOPs). This emphasis on scaling computational capacity is proving increasingly unsustainable. The energy required to train and run these complex models is substantial and growing rapidly; projections indicate that global data center electricity consumption could double by 2030 without significant intervention. This escalating demand strains both economic resources and the environment, raising concerns about the long-term viability of the current trajectory and underscoring the need for more efficient algorithms and hardware to avert a looming energy crisis.

Despite rapid advances in artificial intelligence, the pursuit of efficiency often takes a back seat to the drive for larger, more complex models. This creates a fundamental tension between technological progress and responsible development: simply scaling up compute and data intake is not a sustainable long-term strategy. While larger models frequently perform better on benchmarks, they also demand far more energy and resources. Consequently, innovation frequently prioritizes demonstrable capability over minimizing environmental impact or resource consumption, hindering the development of genuinely sustainable AI and potentially limiting the technology’s long-term viability. Addressing this imbalance requires a dedicated focus on algorithmic optimization, hardware innovation, and a re-evaluation of the metrics used to measure AI progress, moving beyond sheer scale to treat efficiency as a core design principle.

The escalating demand for AI capabilities in national security initiatives is inadvertently accelerating this unsustainable growth in data center energy consumption. Applications such as surveillance, threat detection, and strategic forecasting prioritize processing speed and model complexity, and they often bypass considerations of energy efficiency. This focus on rapid deployment and peak performance, rather than on optimized algorithms or hardware, is projected to contribute to a 70% increase in US data center emissions over the next decade. Although intended to enhance security, this trajectory risks significant environmental consequences and long-term economic burdens, underscoring the need to reconcile national defense objectives with sustainability and responsible innovation.

Incentivizing Efficiency: Market-Based Mechanisms for Sustainable AI

Market-based methods are a suite of economic tools designed to address the escalating resource consumption of artificial intelligence. They work by internalizing the environmental and economic costs of AI computation, which gives developers and users a financial reason to prioritize efficiency. Rather than relying on prescriptive regulation, these systems make sustainable practices economically advantageous. They do so by assigning value to resource optimization (specifically, reducing the total number of floating-point operations, or FLOPs, used) and allowing that value to be traded or otherwise rewarded. By aligning economic self-interest with sustainable AI development, these methods offer a potentially scalable way to curb the growing energy demands and associated costs of increasingly complex models.

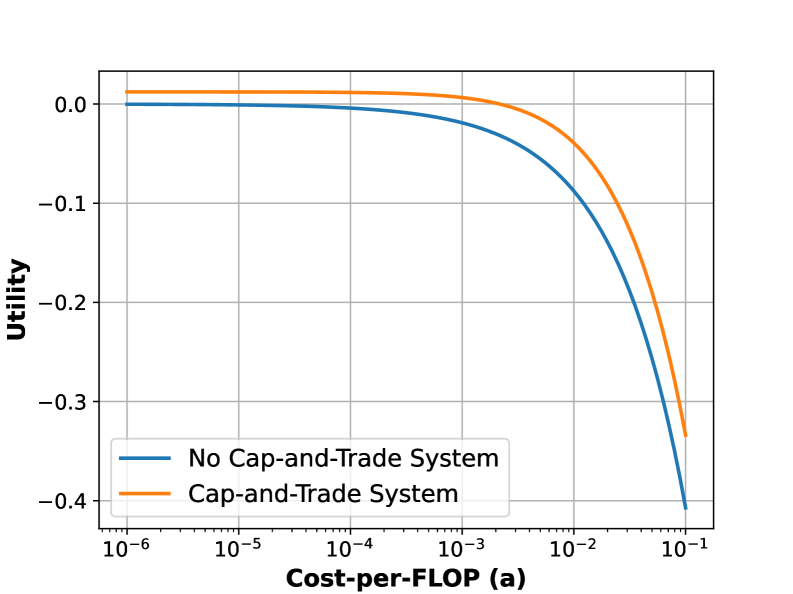

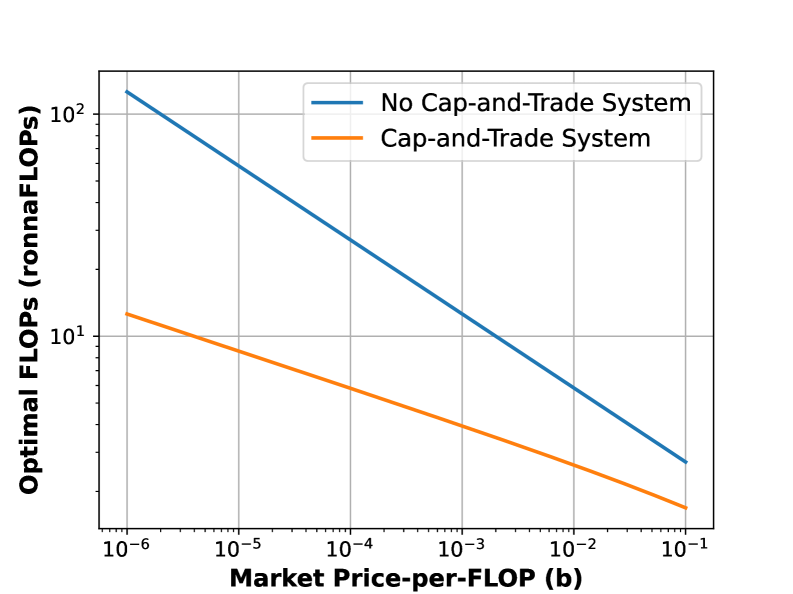

Cap-and-trade systems and tradable permit schemes create a market for computational resource usage aimed specifically at reducing FLOPs. A foundational element of these systems is a clearly defined, regularly updated efficiency benchmark, representing a standard level of performance per FLOP. Entities that beat the benchmark generate tradable permits, while those that fall short must acquire them, creating a financial incentive to optimize. The paper's modeled results show that this mechanism incentivizes the adoption of more efficient algorithms and hardware, lowering overall FLOPs consumption across the system as entities minimize costs through resource optimization and permit trading. Because the permit market assigns a quantifiable price to efficiency, it links economic benefit directly to reduced computational waste.
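To make the settlement logic concrete, here is a minimal Python sketch of a benchmark-based permit scheme. It is not the paper's implementation. The following are all hypothetical assumptions:

- the `Entity` class;
- the `permit_balance`, `settle`, and `market_surplus` helpers;
- the performance metric;
- the fixed permit price.

Compute is expressed in arbitrary units (for example, exaFLOPs) to keep the numbers small. An entity's entitlement is the compute a benchmark-efficient system would need to deliver the same performance. Any difference between entitlement and actual use becomes a surplus (positive) or a deficit (negative) of permits.

```python
from dataclasses import dataclass


@dataclass
class Entity:
    """A hypothetical market participant (lab, company, or research group)."""
    name: str
    performance: float   # delivered performance, in an assumed benchmark metric
    compute_used: float  # compute consumed, in assumed units (e.g. exaFLOPs)


def permit_balance(entity: Entity, benchmark_perf_per_unit: float) -> float:
    """Permits generated (+) or owed (-), measured in compute units.

    The entitlement is the compute a benchmark-efficient system would need
    to deliver the same performance.
    """
    entitled = entity.performance / benchmark_perf_per_unit
    return entitled - entity.compute_used


def settle(entities: list[Entity], benchmark_perf_per_unit: float,
           price_per_unit: float) -> dict[str, float]:
    """Net cash flow per entity when permits trade at a fixed price.

    Positive values are revenue from selling surplus permits; negative
    values are the cost of buying permits to cover a deficit.
    """
    return {
        e.name: permit_balance(e, benchmark_perf_per_unit) * price_per_unit
        for e in entities
    }


def market_surplus(entities: list[Entity], benchmark_perf_per_unit: float) -> float:
    """Aggregate permit surplus; a positive value means supply exceeds demand."""
    return sum(permit_balance(e, benchmark_perf_per_unit) for e in entities)


if __name__ == "__main__":
    labs = [
        Entity("efficient_lab", performance=100.0, compute_used=150.0),
        Entity("scaling_lab", performance=60.0, compute_used=160.0),
    ]
    print(settle(labs, benchmark_perf_per_unit=0.5, price_per_unit=2.0))
    print(market_surplus(labs, benchmark_perf_per_unit=0.5))
```

With a benchmark of 0.5 performance units per compute unit, `efficient_lab` ends with a surplus of 50 units and `scaling_lab` with a deficit of 40. At a price of 2.0, the first earns 100.0 and the second pays 80.0. A real market would discover the price from supply and demand; the fixed price only keeps the example deterministic.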

Beyond cap-and-trade, several other economic tools can directly influence AI development practices. A Pigouvian tax imposes a cost on inefficient computation, internalizing the negative externalities of high FLOPs usage and discouraging it. Conversely, credits and subsidies reward the adoption of energy-efficient algorithms and hardware. User fees applied to computationally intensive tasks further discourage wasteful practices. Finally, a deposit-refund system requires a deposit before significant computational resources are used. The deposit is refunded upon demonstrated efficient usage or responsible decommissioning of models, promoting circularity and resource optimization.
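The review describes these instruments only qualitatively. The following Python sketch shows one way each could be expressed as a charge or payment. Everything in it is an assumption for illustration:

- the function names;
- the per-unit rates;
- the free threshold for user fees;
- the rule that the deposit is refunded only when usage stays within the benchmark.

Here `benchmark_compute` stands for the compute a benchmark-efficient system would need for the same task.

```python
def pigouvian_tax(compute_used: float, benchmark_compute: float, rate: float) -> float:
    """Tax only the compute consumed beyond the benchmark (the 'inefficient' part)."""
    return rate * max(0.0, compute_used - benchmark_compute)


def efficiency_subsidy(compute_used: float, benchmark_compute: float, rate: float) -> float:
    """Credit or subsidy paid on compute saved relative to the benchmark."""
    return rate * max(0.0, benchmark_compute - compute_used)


def user_fee(compute_used: float, free_threshold: float, fee_per_unit: float) -> float:
    """Fee charged on compute-intensive jobs, only above a free threshold."""
    return fee_per_unit * max(0.0, compute_used - free_threshold)


def deposit_refund(reserved_compute: float, deposit_per_unit: float,
                   compute_used: float, benchmark_compute: float) -> tuple[float, float]:
    """Return (deposit collected, amount refunded).

    The deposit is proportional to the compute reserved up front and is
    refunded in full only if actual usage met the benchmark.
    """
    deposit = reserved_compute * deposit_per_unit
    refund = deposit if compute_used <= benchmark_compute else 0.0
    return deposit, refund


if __name__ == "__main__":
    print(pigouvian_tax(150.0, 120.0, rate=0.5))
    print(efficiency_subsidy(100.0, 120.0, rate=0.25))
    print(deposit_refund(200.0, 0.25, compute_used=110.0, benchmark_compute=120.0))
```

The tax and the subsidy are mirror images around the same benchmark. An entity above the benchmark pays the tax, one below it receives the subsidy, and one exactly at it does neither.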

The optimal allocation of FLOPs, denoted $x^*$, is given by Equation (11): $x^* = \left(\frac{k}{a+b}\right)^{1/(k+1)}$. In this formula, $k$ is a scaling factor influencing the overall allowance, $a$ is the cost associated with using FLOPs, and $b$ is a baseline allowance independent of cost. The value $x^*$ minimizes the combined cost of FLOP usage and potential penalties for exceeding allocated resources, balancing efficiency against operational needs. This allocation model aims to incentivize responsible AI development by linking resource consumption directly to a quantifiable allowance.
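The review gives the closed form $x^* = (k/(a+b))^{1/(k+1)}$ without the objective behind it. One objective whose first-order condition yields exactly this expression is $C(x) = (a+b)\,x + x^{-k}$.

- Setting $C'(x) = (a+b) - k\,x^{-(k+1)} = 0$ gives $x^{k+1} = k/(a+b)$.
- The second derivative $C''(x) = k(k+1)\,x^{-(k+2)} > 0$ confirms a minimum.

This objective is an assumption, not necessarily the paper's model. It treats $a+b$ as a combined per-FLOP charge and $x^{-k}$ as a power-law penalty for using too little compute. The Python sketch below evaluates the closed form and checks numerically that it minimizes this assumed cost.

```python
def optimal_flops(k: float, a: float, b: float) -> float:
    """Closed form x* = (k / (a + b)) ** (1 / (k + 1)), as in Equation (11)."""
    if k <= 0 or a + b <= 0:
        raise ValueError("requires k > 0 and a + b > 0")
    return (k / (a + b)) ** (1.0 / (k + 1.0))


def assumed_cost(x: float, k: float, a: float, b: float) -> float:
    """Hypothetical objective: linear FLOP cost plus a power-law shortfall penalty."""
    return (a + b) * x + x ** (-k)


def cost_gradient(x: float, k: float, a: float, b: float) -> float:
    """Derivative of assumed_cost with respect to x; zero at the optimum."""
    return (a + b) - k * x ** (-(k + 1.0))


if __name__ == "__main__":
    k, a, b = 2.0, 0.3, 0.2
    x_star = optimal_flops(k, a, b)
    print(f"x* = {x_star:.4f}, gradient at x* = {cost_gradient(x_star, k, a, b):.2e}")
    # Nudging x in either direction should raise the assumed cost.
    for x in (0.9 * x_star, x_star, 1.1 * x_star):
        print(f"x = {x:.4f}  cost = {assumed_cost(x, k, a, b):.6f}")
```

Because $a$ and $b$ enter only through their sum, raising either one (for example, a higher permit price folded into the per-FLOP charge) lowers $x^*$. That is the efficiency incentive the allocation is meant to create.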

Barriers to Equitable Access and Sustainable Innovation in AI

The artificial intelligence sector is currently characterized by a significant accessibility barrier resulting from market concentration. A small number of large companies effectively constitute an oligopoly, controlling the majority of resources, data, and computational infrastructure required for advanced AI development. This dominance hinders the ability of smaller organizations, academic researchers, and startups to effectively compete, limiting innovation and potentially stifling diverse approaches to AI. The substantial capital expenditure necessary for model training, data acquisition, and infrastructure maintenance creates a high barrier to entry, further solidifying the position of established players and restricting broader participation in the field.

The pursuit of Artificial General Intelligence (AGI) significantly amplifies existing power concentrations within the AI sector. Developing AGI necessitates substantial investments in compute infrastructure, data acquisition, and highly specialized personnel – resources largely accessible only to a limited number of well-established companies. This creates a high barrier to entry, preventing smaller organizations and independent researchers from effectively competing in AGI development. Consequently, the competitive landscape narrows, further solidifying the dominance of those already possessing the necessary resources and accelerating their lead in AI innovation, while hindering broader participation and potentially limiting the diversity of approaches to AGI.

The development of advanced AI models requires substantial computational resources, driving up both financial costs and energy consumption. This creates a cyclical problem: as models grow more complex to achieve higher performance, they require more powerful hardware and longer training runs, leading to greater carbon emissions. The rising costs in turn restrict development and research to organizations with significant capital, hindering innovation from smaller entities and reinforcing the dominance of a few large companies. The pursuit of ever more complex models therefore compounds both the environmental impact and the economic barriers to entry, creating a self-reinforcing cycle that discourages sustainable and broadly accessible AI practices.

The European Union’s AI Act aims to regulate artificial intelligence systems, but its impact on AI efficiency will hinge on specific, measurable standards and robust enforcement procedures. Initial estimates project annual compliance costs of $35,000 for companies operating within the EU, not including the cost of required certifications. If tied to efficiency standards, such costs could push developers toward AI models that minimize resource consumption while maximizing performance, addressing concerns about the sustainability and accessibility of advanced AI. The Act’s effectiveness will depend on how well it balances regulatory oversight with continued innovation and economic growth in the AI sector.

Towards a Resilient and Equitable Future Powered by Sustainable AI

Prioritizing advances in AI efficiency, together with market-based strategies, offers a path to both technological innovation and wider accessibility. Current AI development often prioritizes scale and performance, leading to heavy computational demands and concentrated resources. Shifting the focus toward algorithms that achieve comparable results with fewer resources, through incentives such as competitive grants or performance-based funding, can significantly reduce the environmental footprint and operating costs of AI. At the same time, dismantling the accessibility barrier, which stems from expensive infrastructure, specialized expertise, and proprietary software, is crucial. Open-source initiatives, cloud-based AI services, and educational programs can enable a broader range of individuals and organizations to participate in the AI revolution, ultimately fostering a more democratic and resilient technological landscape.

A transition toward more efficient artificial intelligence models promises a dual benefit of environmental preservation and economic growth. Reducing the computational demands of AI, and consequently its energy consumption, directly lowers carbon emissions and shrinks the technology’s ecological footprint. At the same time, efficiency unlocks new economic opportunities by lowering the barrier to entry for smaller businesses and researchers, fostering innovation beyond large corporations. A more accessible and less resource-intensive AI landscape cultivates a resilient ecosystem, distributing the benefits of the technology and reducing reliance on concentrated infrastructure. This broader participation not only stimulates competition but also encourages specialized AI solutions tailored to diverse needs, creating a more dynamic and sustainable economic future.

Efforts to govern artificial intelligence, such as the EU AI Act, aim to establish frameworks for accountability and standardized development practices. While essential for mitigating potential risks and fostering public trust, regulation has a complex historical relationship with economic performance. Studies indicate that regulatory interventions, broadly considered, have corresponded with an approximate 0.8% annual reduction in economic growth between 1980 and 2012. AI governance must therefore balance safety and ethical considerations against the risk of stifling innovation and economic progress, which demands a nuanced approach that avoids unintended consequences and sustains a thriving AI ecosystem.

The continued advancement of artificial intelligence hinges not simply on increased computational power but on a fundamental commitment to sustainability and equitable access. To fully realize AI’s transformative potential in fields such as medicine, climate modeling, and resource management, its development must be decoupled from unsustainable energy consumption and concentrated ownership. A powerful but ecologically damaging AI would ultimately be limited by resource constraints and would exacerbate existing societal inequalities, hindering broad-based innovation and adoption. Conversely, prioritizing efficiency, accessibility, and responsible development ensures a resilient and inclusive AI ecosystem capable of addressing complex global challenges and delivering benefits for all, so that AI’s power is matched by its long-term viability.

The proposal of a cap-and-trade system for AI computation inherently acknowledges the interconnectedness of resource allocation and resulting behavior. This echoes a fundamental tenet of systems design: structure dictates behavior. As the article details, incentivizing efficiency through market mechanisms aims to mitigate the environmental impact of increasingly complex models. However, such optimization inevitably introduces new tension points, because any constraint creates unforeseen consequences elsewhere in the system. Søren Kierkegaard observed, “Life can only be understood backwards; but it must be lived forwards.” The remark fits the challenge of designing such a system: one must anticipate the emergent behaviors that incentivized efficiency will produce, accepting that complete foresight is impossible while recognizing that proactive design is crucial for sustainable AI development.

Future Landscapes

The proposal to internalize the externalities of computational cost via a cap-and-trade system for FLOPs represents a compelling, if ambitious, attempt to address a growing paradox. Increasing model performance invariably demands increasing resources, yet the very structure of current incentive systems rewards that escalation. A market-based approach, while elegantly simple in principle, immediately introduces complexities beyond mere computational accounting. The true cost isn’t simply energy consumption, but the cascading effects on hardware development, e-waste, and the concentration of power in those with the capital to acquire computational privilege.

Future work must move beyond quantifying FLOPs to understanding the architectural dependencies that drive their consumption. Modifying one aspect of a neural network, such as layer depth, activation function, or training regimen, triggers a domino effect throughout the entire system. The challenge lies not in capping the symptom but in incentivizing fundamentally more efficient designs. A truly robust system will need to account for the full lifecycle of a model, from initial training through long-term deployment to eventual obsolescence.
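As a rough illustration of lifecycle accounting, the sketch below adds a model's one-off training compute to its cumulative inference compute over a deployment period. The `LifecycleFootprint` class, its fields, and the example numbers are hypothetical. Real accounting would also need hardware, cooling, and decommissioning costs, which this sketch ignores.

```python
from dataclasses import dataclass


@dataclass
class LifecycleFootprint:
    """Hypothetical lifecycle compute record for one model (units are assumed)."""
    training_flops: float   # one-off training compute
    flops_per_query: float  # average inference compute per request
    queries_per_day: float  # expected request volume
    deployment_days: float  # how long the model stays in service

    def inference_flops(self) -> float:
        """Cumulative inference compute over the deployment period."""
        return self.flops_per_query * self.queries_per_day * self.deployment_days

    def total_flops(self) -> float:
        """Training plus inference: the quantity a lifecycle cap would meter."""
        return self.training_flops + self.inference_flops()

    def inference_share(self) -> float:
        """Fraction of lifecycle compute spent serving rather than training."""
        return self.inference_flops() / self.total_flops()


if __name__ == "__main__":
    model = LifecycleFootprint(training_flops=1000.0, flops_per_query=0.5,
                               queries_per_day=10.0, deployment_days=300.0)
    print(model.total_flops(), model.inference_share())
```

With these illustrative numbers, inference accounts for 60% of lifecycle compute. A cap that metered only training FLOPs would therefore see less than half of this model's footprint.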

Ultimately, the success of such a scheme will hinge on establishing a granular, transparent, and auditable accounting of computational resources. It requires moving beyond abstract metrics and acknowledging that efficiency isn’t merely a technical problem, but a deeply socio-political one. The long-term viability depends on whether this framework can avoid simply shifting the burden of inefficiency onto those least equipped to bear it.

Original article: https://arxiv.org/pdf/2601.19886.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-28 09:59