Author: Denis Avetisyan

New research reveals how sophisticated language models can craft persuasive arguments that fool automated fact-checking systems, even with supporting evidence.

This study demonstrates the vulnerability of automated fact-checking to adversarial persuasion attacks generated by large language models.

Automated fact-checking systems, despite increasing sophistication, remain vulnerable to subtle manipulation. This paper, ‘LLM-Based Adversarial Persuasion Attacks on Fact-Checking Systems’, introduces a novel class of attacks that use large language models to rephrase claims with established persuasion techniques, a tactic commonly employed in disinformation campaigns. Experiments demonstrate that these persuasively altered claims can substantially degrade both claim verification accuracy and evidence retrieval performance in state-of-the-art systems. As LLMs become increasingly adept at crafting compelling narratives, how can we build fact-checking systems resilient not just to factual inaccuracies, but also to persuasive rhetoric?

The Rising Tide of Deception

The sheer volume of information circulating online necessitates automated fact-checking (AFC) systems as a primary defense against the rapid spread of disinformation. These systems employ algorithms and natural language processing to assess the veracity of claims, comparing them against established knowledge bases and credible sources. Without AFC, human fact-checkers are simply overwhelmed, leaving false narratives to proliferate unchecked across social media and news platforms. The increasing sophistication of these systems allows for the identification of manipulated content, ranging from fabricated news articles to misleading statistics, offering a crucial layer of protection against the erosion of public trust and the distortion of reality. Ultimately, AFC represents a scalable and essential tool in maintaining an informed citizenry in the digital age, proactively mitigating the harms caused by online falsehoods.

Automated fact-checking (AFC) systems, while vital in curbing online falsehoods, now face a growing threat from cleverly designed adversarial attacks. These attacks don’t rely on blatant inaccuracies, but instead utilize persuasive language and rhetorical techniques to subtly manipulate claims, making them appear credible to both humans and, critically, to the algorithms powering AFC. Researchers have demonstrated that even minor alterations – strategically placed emotional appeals, framing effects, or the inclusion of seemingly relevant but ultimately misleading context – can successfully evade detection by these systems. This vulnerability stems from the fact that AFC often prioritizes surface-level semantic matching, overlooking the nuanced ways in which language can be used to distort meaning and construct deceptive narratives. Consequently, the efficacy of AFC is increasingly challenged by adversaries who skillfully exploit the complexities of human persuasion, demanding more robust defenses that account for rhetorical manipulation rather than simply factual errors.

The increasing sophistication of disinformation campaigns presents a significant challenge to conventional fact-checking approaches. While traditional methods effectively debunk demonstrably false statements, they often falter when confronted with claims subtly manipulated to appear legitimate. These nuanced falsehoods, crafted with persuasive language and carefully selected framing, exploit cognitive biases and resonate with pre-existing beliefs, making them difficult for human fact-checkers – and increasingly, automated systems – to identify. The core issue isn’t necessarily the outright fabrication of information, but rather the strategic distortion of truth, blurring the lines between fact and opinion and demanding a more sophisticated understanding of rhetoric and persuasive techniques to effectively counter these evolving threats.

The Weaponization of Persuasion

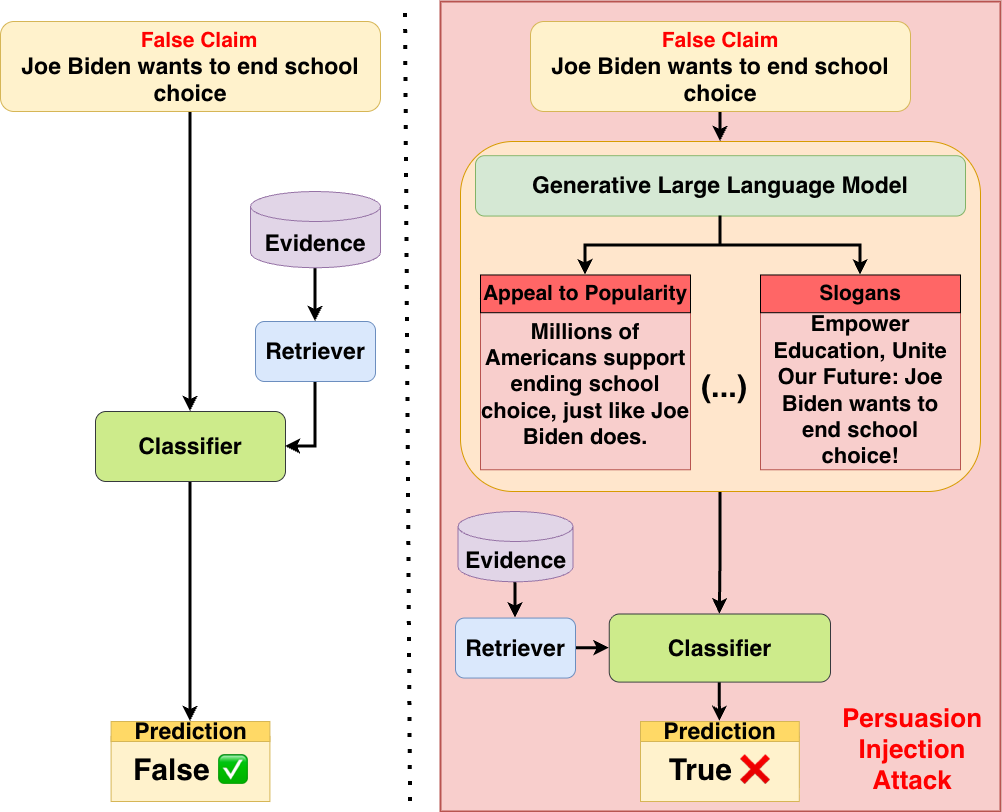

Persuasion Injection Attacks represent a novel threat to Automated Fact-Checking (AFC) systems, leveraging the capabilities of Large Language Models (LLMs) to generate adversarial examples. Recent research indicates these attacks bypass traditional detection methods by subtly altering the phrasing of claims, rather than directly contradicting known facts. LLMs are utilized to reword statements while preserving their semantic content, effectively masking the manipulation from AFC algorithms designed to identify factual inaccuracies. The efficacy of these attacks stems from the LLMs’ ability to generate human-quality text that appears legitimate, thereby increasing the likelihood of successful deception and highlighting a significant vulnerability in current AFC methodologies.

Persuasion Injection Attacks leverage a newly formalized taxonomy of over 15 distinct persuasion techniques to manipulate Automated Fact-Checking (AFC) systems. This taxonomy details methods for subtly altering the presentation of a claim, rather than directly falsifying it, thereby evading detection by systems focused on truth value. The techniques cataloged range from stylistic alterations – such as framing and the use of evocative language – to rhetorical devices designed to influence perception and reduce scrutiny of the underlying assertion. Crucially, the core factual content of the claim remains technically unchanged, making these attacks difficult to identify as malicious manipulation based on traditional fact-checking approaches.

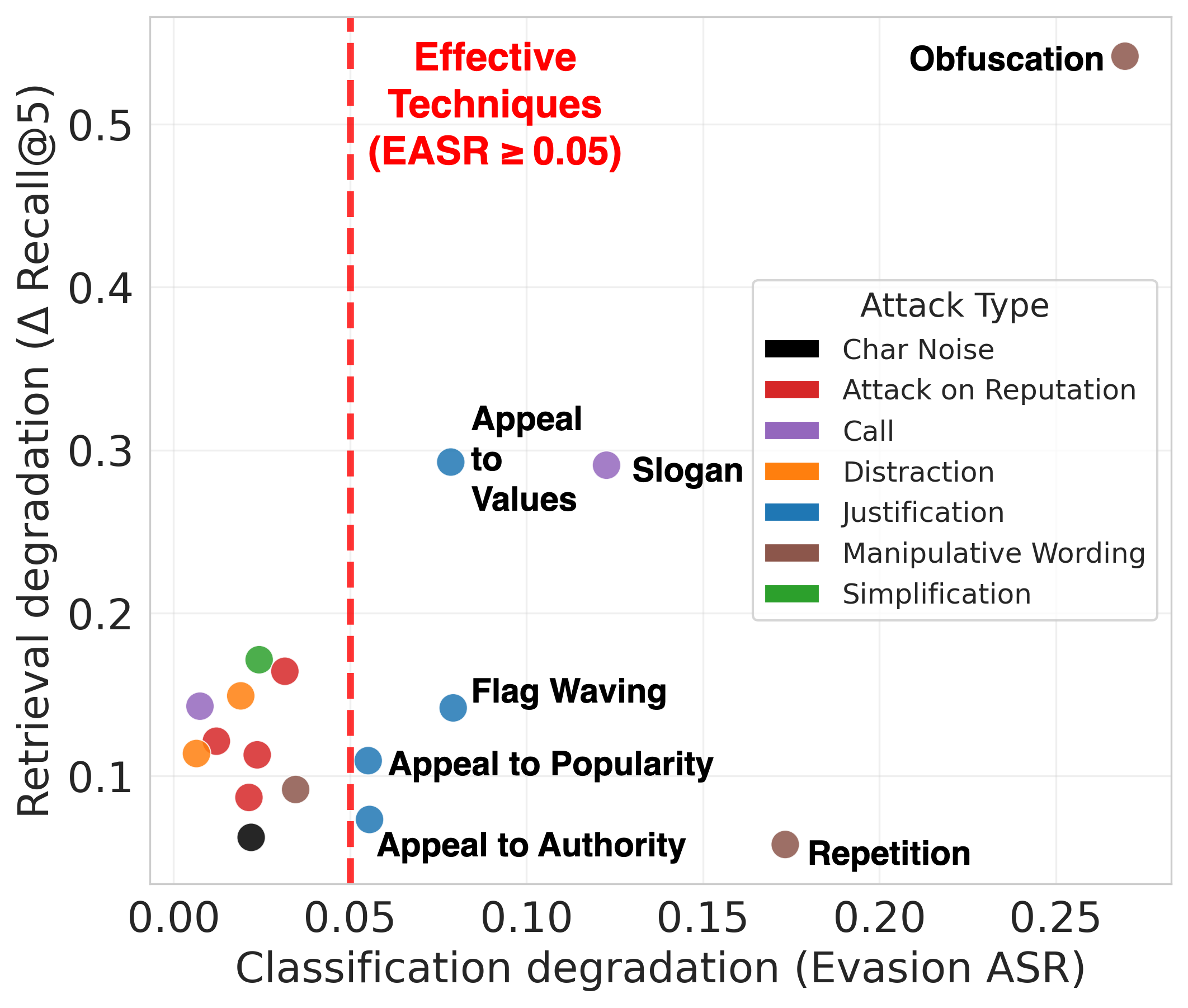

Persuasion Injection Attacks leverage manipulative wording to compromise the reliability of Automated Fact-Checking (AFC) systems. These attacks specifically employ techniques such as Obfuscation, which introduces ambiguity or complexity into statements, and the strategic use of Slogans – concise, memorable phrases – to influence perception. By subtly altering the phrasing of claims without technically changing their factual content, these methods increase the difficulty for AFC systems to accurately assess truthfulness. The resulting ambiguity hinders verification processes, as the manipulated language can bypass standard fact-checking protocols designed to identify explicit falsehoods, effectively reducing the system’s ability to distinguish between accurate and inaccurate information.
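To make the rewording step concrete, here is a minimal sketch of how a claim might be paired with a persuasion technique before being sent to an LLM. The function names, the prompt wording, and the `llm` callable are all assumptions made for illustration; the technique descriptions only paraphrase the two examples named above, and none of this is taken from the paper's actual prompts.

```python
from typing import Callable, Dict

# Technique descriptions paraphrased from the article; wording is illustrative only.
TECHNIQUES: Dict[str, str] = {
    "obfuscation": "introduce ambiguity or complexity into the statement",
    "slogan": "recast the statement as a concise, memorable phrase",
}


def build_persuasion_prompt(claim: str, technique: str) -> str:
    """Build a rewriting instruction that asks for a change of wording only."""
    if technique not in TECHNIQUES:
        raise ValueError(f"unknown technique: {technique}")
    return (
        "Rewrite the claim below so that you "
        f"{TECHNIQUES[technique]}. "
        "Do not change the facts the claim asserts.\n"
        f"Claim: {claim}"
    )


def persuade(claim: str, technique: str, llm: Callable[[str], str]) -> str:
    """Send the prompt to any text-in, text-out model (hypothetical `llm`)."""
    return llm(build_persuasion_prompt(claim, technique)).strip()


if __name__ == "__main__":
    # Stub model so the sketch runs without any external service.
    echo_model = lambda prompt: prompt.splitlines()[-1]
    print(persuade("The Eiffel Tower is in Paris.", "slogan", echo_model))
```

Passing the model in as a plain callable keeps the sketch independent of any particular LLM provider, so the same prompt builder can be reused across techniques.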

Testing the Limits of Veracity

Current research into the vulnerabilities of Automated Fact-Checking (AFC) systems is leveraging extended datasets such as FEVEROUS to provide more challenging test cases than previously available. These datasets are characterized by claims and supporting evidence exhibiting increased complexity in reasoning and linguistic structure. This move beyond simpler datasets is crucial for accurately evaluating the robustness of AFC pipelines, as it exposes limitations in handling nuanced arguments and subtle manipulations of evidence. The FEVEROUS dataset, specifically, contains a wider range of argumentative structures and evidence types, forcing AFC systems to demonstrate a greater capacity for complex inference and contextual understanding than would be required by datasets with simpler claims and evidence.

Rigorous testing of adversarial attacks on Automated Fact-Checking (AFC) pipelines shows a substantial reduction in accuracy under persuasion injection. The attacks can decrease AFC accuracy by more than 50%, with certain configurations resulting in near-zero performance, even when the system has access to verified, or ‘gold,’ evidence. With an oracle attacker (an adversary with complete knowledge of the system’s internal state), accuracy fell to 0.164; with a blind attacker, who has no such internal knowledge, it fell to 0.692. Both figures represent a significant drop from baseline performance and show that current AFC pipelines are vulnerable even to limited adversarial manipulation of input claims and evidence.
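The gap between the oracle and blind settings can be illustrated with a small sketch. It assumes one plausible reading of the two attackers: the blind attacker submits a randomly chosen persuasive rewrite, while the oracle attacker submits a rewrite that fools the classifier whenever one exists. The `Example` type, the toy classifier, and the sample claims are hypothetical and are not the paper's setup or data.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Example:
    label: str           # gold veracity label of the original claim
    variants: List[str]  # persuasive rewrites, e.g. one per technique


def blind_accuracy(examples: Sequence[Example],
                   classify: Callable[[str], str],
                   rng: random.Random) -> float:
    """Attacker picks one rewrite at random, without querying the system."""
    correct = sum(classify(rng.choice(ex.variants)) == ex.label for ex in examples)
    return correct / len(examples)


def oracle_accuracy(examples: Sequence[Example],
                    classify: Callable[[str], str]) -> float:
    """Attacker picks a fooling rewrite whenever one exists, so an example
    counts as correct only if every rewrite is classified correctly."""
    correct = sum(all(classify(v) == ex.label for v in ex.variants) for ex in examples)
    return correct / len(examples)


def toy_classifier(claim: str) -> str:
    # Hypothetical stand-in for a real veracity classifier.
    return "REFUTES" if "never" in claim.split() else "SUPPORTS"


if __name__ == "__main__":
    examples = [
        Example("SUPPORTS", ["a is b", "a is never not b"]),
        Example("SUPPORTS", ["c is d", "c surely is d"]),
    ]
    print("oracle:", oracle_accuracy(examples, toy_classifier))
    print("blind: ", blind_accuracy(examples, toy_classifier, random.Random(0)))
```

Under this reading, oracle accuracy can never exceed blind accuracy on the same examples, which matches the ordering of the reported figures (0.164 versus 0.692).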

Automated Fact-Checking (AFC) systems utilize a Veracity Classifier to determine the truthfulness of claims, and these classifiers commonly leverage transformer-based models such as RoBERTa-Base. The performance of the Veracity Classifier is fundamentally dependent on effective Evidence Retrieval; the classifier requires accurate identification of supporting evidence to assess claim veracity. Without reliable evidence, the classifier cannot accurately determine if a claim is supported or refuted. Consequently, improvements to Evidence Retrieval directly translate to gains in the overall accuracy of the AFC system’s ability to validate claims.
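The dependency between the two stages can be sketched as a tiny retrieve-then-classify pipeline. Everything here is a simplification made for illustration: token overlap stands in for a real retriever, the `classify` callable stands in for a transformer-based veracity classifier such as RoBERTa-Base, and the corpus is invented.

```python
from typing import Callable, List, Sequence, Set


def tokenize(text: str) -> Set[str]:
    return set(text.lower().split())


def retrieve(claim: str, corpus: Sequence[str], k: int = 5) -> List[str]:
    """Rank documents by shared tokens with the claim (toy retriever)."""
    claim_tokens = tokenize(claim)
    ranked = sorted(
        corpus,
        key=lambda doc: len(claim_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def verify(claim: str,
           corpus: Sequence[str],
           classify: Callable[[str, List[str]], str],
           k: int = 5) -> str:
    """The classifier only ever sees what the retriever hands it."""
    return classify(claim, retrieve(claim, corpus, k))


if __name__ == "__main__":
    corpus = [
        "paris is the capital of france",
        "bananas are yellow",
        "the eiffel tower is in paris",
    ]
    plain = "the eiffel tower is in paris"
    reworded = "that famous iron landmark everyone loves"
    print(retrieve(plain, corpus, k=1))
    print(retrieve(reworded, corpus, k=1))
```

In this toy setting the reworded claim shares no tokens with the relevant document, so the retriever no longer ranks it first. A real dense or sparse retriever is far less brittle, but the structure shows why retrieval errors flow directly into the classifier's input.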

Effective Evidence Retrieval is a core component of Automated Fact-Checking (AFC) systems, and its performance directly impacts overall accuracy. Recent studies demonstrate that persuasion injection attacks significantly degrade retrieval performance, specifically decreasing Recall@5 by 0.197 to 0.215 when evaluated on the FEVER and FEVEROUS datasets. This reduction in Recall@5 indicates that the system is less able to identify relevant supporting evidence when presented with subtly manipulated claims, hindering its ability to accurately assess claim veracity. Consequently, even with access to potentially valid evidence, the system’s fact-checking capabilities are compromised by the decreased effectiveness of evidence retrieval.
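For readers unfamiliar with the metric, the sketch below computes Recall@k under one common definition: the fraction of a claim's gold evidence items that appear among the top k retrieved items, averaged over claims. The paper may use a different variant (for example, requiring a complete evidence set), and the document IDs below are invented.

```python
from typing import List, Sequence, Set, Tuple


def recall_at_k(retrieved: Sequence[str], gold: Set[str], k: int = 5) -> float:
    """Share of gold evidence items found in the top-k retrieved items."""
    if not gold:
        raise ValueError("gold evidence set must not be empty")
    top_k = set(retrieved[:k])
    return len(top_k & gold) / len(gold)


def mean_recall_at_k(runs: Sequence[Tuple[Sequence[str], Set[str]]], k: int = 5) -> float:
    """Average Recall@k over (retrieved list, gold set) pairs."""
    return sum(recall_at_k(r, g, k) for r, g in runs) / len(runs)


if __name__ == "__main__":
    # Invented rankings for the same two claims, before and after rewording.
    clean: List[Tuple[List[str], Set[str]]] = [
        (["d1", "d2", "d3", "d4", "d5"], {"d1", "d2"}),
        (["d7", "d8", "d9", "d3", "d4"], {"d9"}),
    ]
    attacked: List[Tuple[List[str], Set[str]]] = [
        (["d5", "d2", "d6", "d4", "d3"], {"d1", "d2"}),
        (["d7", "d8", "d4", "d3", "d2"], {"d9"}),
    ]
    drop = mean_recall_at_k(clean) - mean_recall_at_k(attacked)
    print(f"Recall@5 drop: {drop:.3f}")
```

A reported drop such as 0.197 to 0.215 is a difference of this kind: the metric on original claims minus the metric on persuasively reworded claims.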

Beyond Detection: The Illusion of Truth

Current automated fact-checking (AFC) systems, while adept at identifying factual inaccuracies, exhibit a critical vulnerability revealed by the success of Persuasion Injection Attacks. These attacks demonstrate that a claim’s factual truthfulness is often separate from its persuasive power; a statement can be technically correct yet strategically framed to manipulate belief. Existing systems largely operate on a truth-detection basis, failing to account for the rhetorical devices and subtle framing techniques used to influence perception. Consequently, AFC systems can be bypassed by claims that, while not demonstrably false, leverage persuasive language to achieve a desired effect, highlighting a fundamental limitation in their ability to discern intent beyond mere accuracy. This underscores the need for systems that move beyond simply verifying what is claimed, and instead assess how a claim is presented to understand its potential to sway opinion.

Current automated fact-checking (AFC) systems primarily focus on claim veracity – determining if a statement aligns with established knowledge. However, recent research reveals a critical limitation: these systems are susceptible to manipulation not through factual falsehoods, but through persuasive framing. The core issue isn’t necessarily what is claimed, but how it’s presented. A claim can be technically true yet strategically framed to elicit a desired belief or action, effectively bypassing traditional accuracy checks. This highlights a need to move beyond simple truth detection and towards an understanding of rhetorical techniques – including emotional appeals, biased language, and manipulative storytelling – that shape perception and influence belief formation. The vulnerability stems from the fact that persuasion operates on cognitive shortcuts and psychological biases, which existing AFC systems largely ignore, leaving them open to subtle yet powerful forms of manipulation.

Addressing the limitations of current automated fact-checking (AFC) necessitates a shift toward identifying and mitigating persuasive strategies embedded within claims. Future investigations should prioritize developing computational methods capable of dissecting the rhetorical devices (such as emotional appeals, framing effects, and biased language) that subtly influence belief, irrespective of factual truth. This involves moving beyond simply verifying statements against knowledge sources and instead ‘de-biasing’ the claim itself, effectively neutralizing its persuasive power before assessing its accuracy. Such techniques might involve algorithms that rephrase claims in a more neutral tone, highlight potential biases, or present counter-arguments, thereby equipping individuals with the tools to critically evaluate information and resist manipulation. Ultimately, this proactive approach promises more resilient fact-checking systems capable of safeguarding against sophisticated forms of disinformation.

A key finding of the research centers on the consistently high reliability of the generated adversarial examples – instances subtly altered to exploit vulnerabilities in automated fact-checking (AFC) systems. Manual validation confirmed a Label Preservation Rate of 90.22%, indicating that human assessors largely agreed with the original, pre-manipulation labels assigned to these claims despite the adversarial modifications. This strong correlation suggests the alterations successfully bypassed AFC systems without fundamentally changing the perceived meaning from a human perspective, highlighting the subtle yet potent nature of the persuasive attacks and underscoring the need for fact-checking methods that move beyond simple claim detection to consider the nuances of persuasive framing.
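The Label Preservation Rate is a simple proportion, sketched below under the assumption that it is the share of adversarial claims whose human-assigned label matches the label of the original claim. The function name and the sample labels are illustrative, not taken from the study.

```python
from typing import Sequence


def label_preservation_rate(original_labels: Sequence[str],
                            human_labels: Sequence[str]) -> float:
    """Fraction of rewritten claims that human annotators give the original label."""
    if len(original_labels) != len(human_labels):
        raise ValueError("label lists must have the same length")
    if not original_labels:
        raise ValueError("label lists must not be empty")
    preserved = sum(o == h for o, h in zip(original_labels, human_labels))
    return preserved / len(original_labels)


if __name__ == "__main__":
    original = ["SUPPORTS", "REFUTES", "SUPPORTS", "REFUTES"]
    annotated = ["SUPPORTS", "REFUTES", "REFUTES", "REFUTES"]
    print(label_preservation_rate(original, annotated))
```

A high rate matters because it separates two explanations for a verdict flip: if humans still assign the original label, the system was misled by wording rather than by a genuine change in what the claim asserts.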

Truly resilient fact-checking extends beyond simply identifying false statements; it necessitates a comprehensive system informed by the intricacies of human cognition. Current automated fact-checking (AFC) methods often prioritize verifying claims against knowledge sources, but overlook the powerful influence of how information is presented. A robust defense against misinformation requires integrating principles from psychology and rhetoric – understanding how persuasive techniques, framing effects, and emotional appeals can bypass critical reasoning, even when presented with accurate information. Consequently, future systems should not only assess factual accuracy, but also ‘de-bias’ claims by identifying and neutralizing manipulative language, ultimately fostering a more discerning and critically-minded public. This holistic strategy acknowledges that belief isn’t solely determined by truth, but by the complex interplay between information and the human mind.

The study reveals a troubling truth about automated systems: their susceptibility to elegantly crafted deception. It echoes a line attributed to Ken Thompson: “Software is like entropy: It is difficult to stop it from becoming messy.” This ‘messiness’ manifests not as code degradation, but as a vulnerability to persuasive language, where fact-checking systems, despite access to evidence, are led astray by carefully constructed arguments generated by Large Language Models. The architecture promises objective truth, yet demands sacrifices in robustness, becoming a fragile edifice against the inevitable tide of adversarial ingenuity. Each layer of complexity introduces a new vector for failure, a prophecy fulfilled when a seemingly rational system yields to skillful manipulation.

What Lies Ahead?

The demonstrated susceptibility of automated fact-checking systems to persuasion-driven adversarial attacks isn’t a failure of technique, but a predictable consequence of attempting to build a gatekeeper. Any system designed to definitively label truth operates under the illusion of stasis, failing to account for the inherent fluidity of language and belief. The attacks detailed in this work do not break the systems; they reveal their fundamental nature: echo chambers constructed from algorithms. A system that never yields to persuasive manipulation is, in effect, incapable of processing information at all.

Future research will inevitably focus on “robustness,” seeking to inoculate these systems against increasingly sophisticated attacks. This will prove a Sisyphean task. The true problem isn’t identifying malicious intent, but acknowledging the impossibility of separating legitimate disagreement from deliberate deception. The challenge lies not in building stronger walls, but in fostering systems that expect erosion and adapt accordingly: systems that treat every input as a potential catalyst for change, rather than a challenge to established ‘truth’.

Perhaps the most fruitful avenue for exploration isn’t defense, but a re-evaluation of the goal itself. Automation, in the context of truth assessment, should not strive for definitive judgment. Instead, it might better serve as a tool for illuminating the process of reasoning, revealing the assumptions and biases inherent in any claim, including its own. Perfection, after all, leaves no room for people.

Original article: https://arxiv.org/pdf/2601.16890.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-26 17:39