Author: Denis Avetisyan

A new generative model tackles the challenge of identifying objects in synthetic aperture radar (SAR) imagery when labeled training data is scarce.

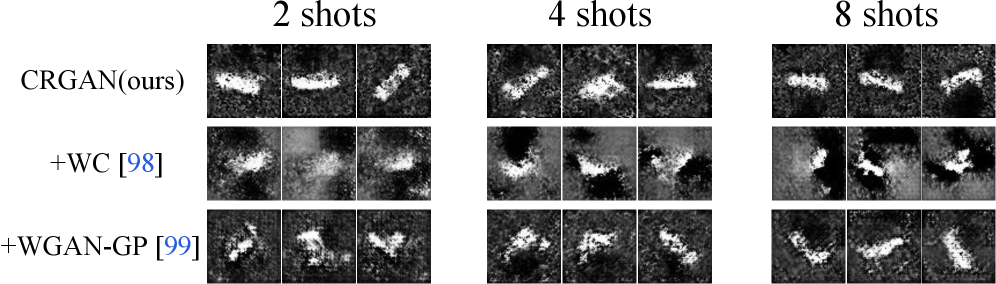

Researchers introduce Cr-GAN, a consistency-regularized generative adversarial network that leverages feature augmentation and a dual-branch discriminator to achieve state-of-the-art few-shot SAR target recognition.

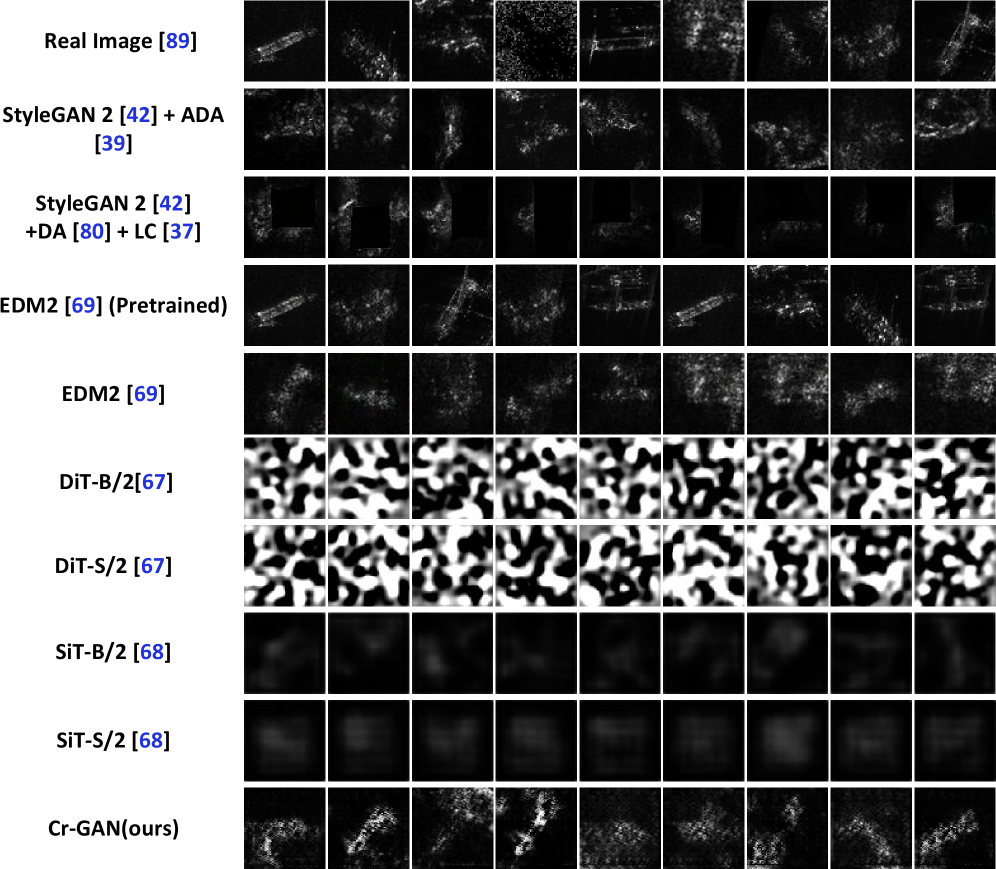

Addressing the scarcity of labeled data in synthetic aperture radar (SAR) imagery presents a fundamental challenge for real-world target recognition. This paper introduces ‘Consistency-Regularized GAN for Few-Shot SAR Target Recognition’, a novel generative framework designed to overcome this limitation by synthesizing high-fidelity samples even with limited training data. The core of our approach, Cr-GAN, leverages a dual-branch discriminator and feature consistency to effectively augment datasets and boost self-supervised learning algorithms, achieving state-of-the-art accuracy with significantly fewer parameters than current diffusion models. Can this consistency-regularized approach unlock new possibilities for robust and efficient SAR image analysis in data-constrained scenarios?

Decoding the Signal: The Challenge of Limited Data in SAR Imaging

Effective identification of objects using Synthetic Aperture Radar (SAR) imagery is paramount in diverse applications, ranging from environmental monitoring and disaster response to defense and security; however, a significant impediment to progress lies in the limited availability of meticulously labeled training data. Unlike optical imagery where vast datasets are readily accessible, acquiring and annotating SAR data is considerably more complex and resource-intensive. This scarcity poses a fundamental challenge for machine learning algorithms, particularly those reliant on supervised learning techniques, which demand copious amounts of labeled examples to achieve robust and accurate target recognition. Consequently, the performance of these systems often suffers from poor generalization capabilities, hindering their reliability when applied to real-world scenarios with previously unseen targets or varying environmental conditions.

Conventional supervised learning techniques, while powerful, often falter when applied to Synthetic Aperture Radar (SAR) imagery due to the inherent difficulty in acquiring sufficiently large, accurately labeled datasets. These methods typically require extensive training examples to establish robust patterns and effectively differentiate between target classes; a scarcity of data leads to overfitting, where the algorithm memorizes the training set instead of learning generalizable features. Consequently, performance degrades significantly when presented with real-world SAR images, which invariably exhibit variations in environmental conditions, target orientation, and imaging geometry – resulting in unreliable target recognition and hindering practical applications of this remote sensing technology. The inability to generalize beyond the limited training data poses a substantial challenge to deploying SAR-based systems in dynamic and unpredictable environments.

Progress in SAR target recognition is limited above all by a shortage of accurately labeled training data, a pervasive issue in remote sensing. Annotating SAR images is expensive and time-consuming, and it demands specialized expertise to identify targets amid complex radar returns. Systems trained on insufficient data tend to generalize poorly and misclassify targets in unseen, real-world scenes. As a result, robust automated SAR target recognition, which matters for applications from environmental monitoring to defense, remains hard to deploy and calls for approaches such as data augmentation, transfer learning, or unsupervised learning to ease the bottleneck.

Forging New Data: Cr-GAN as a Generative Solution

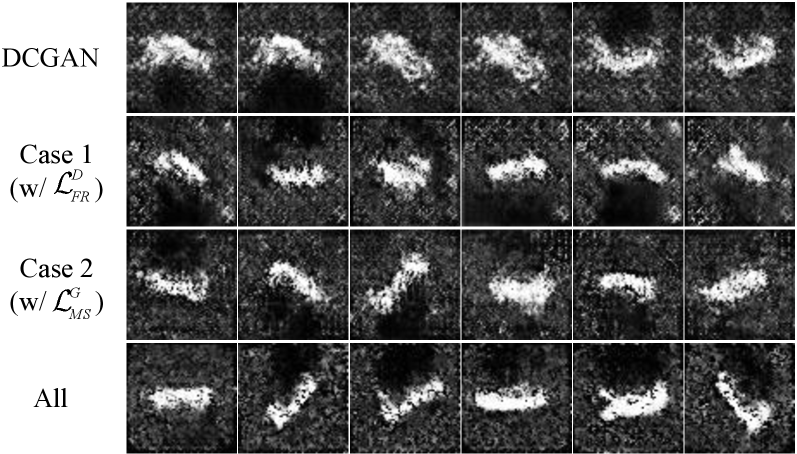

Consistency-Regularized Generative Adversarial Networks (Cr-GAN) address the challenge of limited Synthetic Aperture Radar (SAR) data availability for training machine learning models. Traditional GANs can suffer from instability and mode collapse, leading to unrealistic or repetitive generated samples. Cr-GAN mitigates these issues by incorporating a consistency regularization term into the training process. This regularization encourages the generator to produce samples that are not only indistinguishable from real SAR data, as assessed by the discriminator, but also consistent with slight perturbations of the input noise vector. This approach stabilizes training and improves the diversity and realism of the augmented dataset, effectively increasing the quantity of training data without requiring additional real-world data acquisition.

Cr-GAN builds on the standard GAN framework by adding a consistency regularization term aimed at the quality and reliability of generated SAR data. The regularizer enforces that small perturbations to the input noise vector produce correspondingly small changes in the generated SAR image. This constraint encourages the generator to learn a smoother, more stable mapping from the latent space to the data space, so that generated samples are visually realistic and also statistically consistent with the real SAR data used for training. This consistency is important for the performance of machine learning models trained on the augmented dataset.
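The perturbation constraint can be illustrated with a minimal sketch. It assumes a toy `tanh` generator in place of the real network; the names `toy_generator`, `consistency_penalty`, and the noise scale `sigma` are hypothetical and do not come from the paper, whose actual regularizer may be defined differently.

```python
import numpy as np

def toy_generator(z, weights):
    """Hypothetical stand-in for a GAN generator: latent vectors -> flat 'images'."""
    return np.tanh(z @ weights)

def consistency_penalty(z, weights, sigma=0.05, rng=None):
    """Mean squared change in the output when the latent input is slightly perturbed."""
    rng = np.random.default_rng() if rng is None else rng
    z_perturbed = z + sigma * rng.standard_normal(z.shape)
    diff = toy_generator(z, weights) - toy_generator(z_perturbed, weights)
    return float(np.mean(diff ** 2))

rng = np.random.default_rng(0)
weights = rng.standard_normal((16, 64)) * 0.1   # latent dim 16 -> 64 "pixels" (assumed sizes)
z = rng.standard_normal((8, 16))                # batch of 8 latent vectors

# Same perturbation direction, two different magnitudes.
penalty_small = consistency_penalty(z, weights, sigma=0.01, rng=np.random.default_rng(1))
penalty_large = consistency_penalty(z, weights, sigma=0.50, rng=np.random.default_rng(1))
```

In a training loop, a term like this would be added to the generator loss with some weighting, so that large output jumps under small latent changes are penalized.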

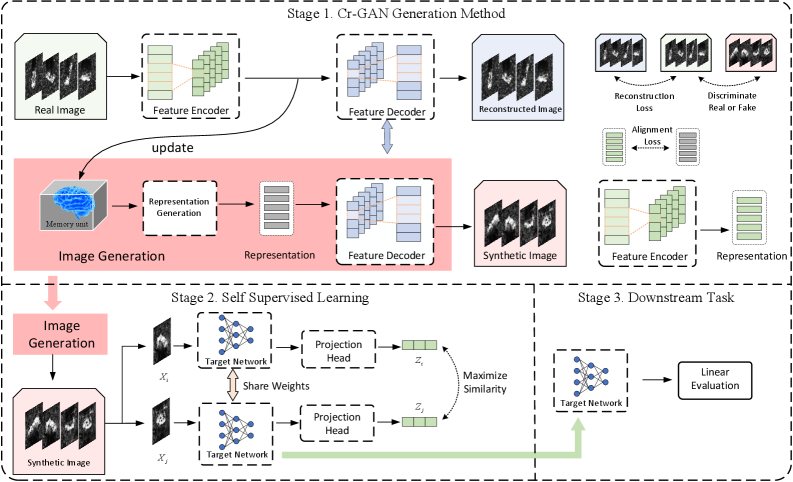

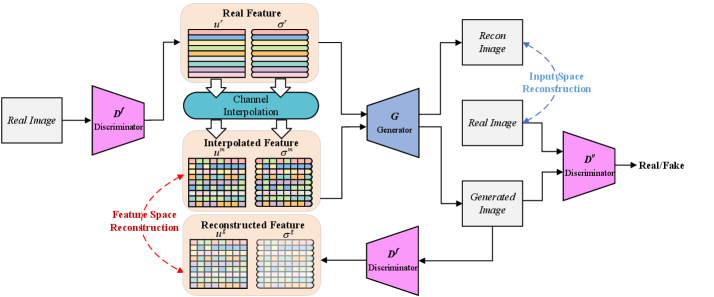

The Cr-GAN architecture employs a dual-branch discriminator to improve feature extraction and subsequent SAR image generation. This discriminator consists of two parallel branches, each responsible for analyzing the input image from a different perspective – one focusing on global features and the other on local details. By comparing the outputs of these branches, the discriminator provides more robust and nuanced feedback to the generator. This dual-branch approach facilitates the identification of subtle inconsistencies and artifacts in generated images, compelling the generator to produce higher-quality outputs that more accurately reflect real SAR data.
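As a rough illustration of the global-versus-local split, the sketch below scores an image with two toy branches. Everything here is an assumption made for illustration: the branch definitions, the weight vectors `w_global` and `w_local`, the patch size, and the choice to sum the two logits are not taken from the paper.

```python
import numpy as np

def global_branch(image, w_global):
    """Score from image-wide statistics (mean and standard deviation)."""
    stats = np.array([image.mean(), image.std()])
    return float(stats @ w_global)

def local_branch(image, w_local, patch=4):
    """Average of per-patch scores, so fine-grained detail influences the output.

    Assumes image height and width are multiples of `patch`.
    """
    h, w = image.shape
    patches = image.reshape(h // patch, patch, w // patch, patch).swapaxes(1, 2)
    patch_vectors = patches.reshape(-1, patch * patch)   # one row per patch
    return float((patch_vectors @ w_local).mean())

def dual_branch_score(image, w_global, w_local):
    """Combine both branch logits (by summation, an assumption) and squash with a sigmoid."""
    logit = global_branch(image, w_global) + local_branch(image, w_local)
    return 1.0 / (1.0 + np.exp(-logit))

rng = np.random.default_rng(0)
image = rng.random((16, 16))                 # hypothetical 16x16 SAR chip
score = dual_branch_score(image, rng.standard_normal(2), rng.standard_normal(16))
```

A real discriminator would use learned convolutional branches; the point of the sketch is only that the two branches see the same image at different granularities.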

Feature interpolation within the Cr-GAN framework creates new synthetic SAR images through a linear combination of feature vectors. Specifically, a latent feature vector $z_i$ is randomly sampled, and another feature vector $z_j$ is selected from the training data. A new feature vector $z_{\text{new}} = \lambda z_i + (1 - \lambda) z_j$ is then formed, where $\lambda$ is a weighting factor between 0 and 1. This interpolated feature vector is passed through the generator network to produce a novel SAR image, expanding the dataset with samples that represent a smooth transition between learned feature representations and increasing the diversity of the training data available for subsequent machine learning tasks.
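The interpolation rule is simple enough to show directly. The sketch below implements only the convex combination; the function name `interpolate_features` and the example vectors are illustrative, and the generator step is omitted.

```python
import numpy as np

def interpolate_features(z_i, z_j, lam):
    """Return lam * z_i + (1 - lam) * z_j for a weighting factor lam in [0, 1]."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    return lam * z_i + (1.0 - lam) * z_j

z_i = np.array([1.0, 0.0, 2.0])   # hypothetical feature vector
z_j = np.array([3.0, 4.0, 0.0])   # hypothetical feature vector
z_new = interpolate_features(z_i, z_j, lam=0.25)
# 0.25 * z_i + 0.75 * z_j = [2.5, 3.0, 0.5]
```

With `lam=1.0` the result is `z_i` itself and with `lam=0.0` it is `z_j`, so intermediate values trace a straight line between the two vectors in feature space.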

Unveiling Hidden Patterns: Pre-training with SimCLR

Cr-GAN incorporates pre-training utilizing the SimCLR (Simple Framework for Contrastive Learning of Representations) framework, a method categorized as self-supervised learning. SimCLR operates by learning representations from unlabeled data through the maximization of agreement between differently augmented views of the same sample. This involves constructing positive pairs from multiple augmentations of a single image and contrasting them with negative pairs derived from different images within a batch. The resulting learned representations capture essential features of the input data without requiring manual annotation, and these representations are then transferred to the Cr-GAN architecture to initialize its weights prior to supervised fine-tuning. This transfer improves performance, particularly when labeled data is scarce.
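The positive-versus-negative contrast in SimCLR is expressed through the NT-Xent (normalized temperature-scaled cross-entropy) loss. The following is a minimal NumPy sketch of that loss, not the paper's training code; the batch size, embedding dimension, and temperature value are assumptions chosen for illustration.

```python
import numpy as np

def nt_xent_loss(view_a, view_b, temperature=0.5):
    """NT-Xent loss; row k of view_a and row k of view_b embed two augmentations of sample k."""
    z = np.concatenate([view_a, view_b], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)   # unit vectors -> cosine similarity
    sim = (z @ z.T) / temperature
    np.fill_diagonal(sim, -np.inf)                      # exclude self-similarity
    n = view_a.shape[0]
    # Index of the positive partner for each of the 2n rows.
    positives = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])
    log_denominator = np.log(np.exp(sim).sum(axis=1))
    log_prob = sim[np.arange(2 * n), positives] - log_denominator
    return float(-log_prob.mean())

rng = np.random.default_rng(0)
view_a = rng.standard_normal((4, 8))                    # 4 samples, 8-dim embeddings
view_b = view_a + 0.01 * rng.standard_normal((4, 8))    # near-identical second view

aligned_loss = nt_xent_loss(view_a, view_b)             # correct pairing
shuffled_loss = nt_xent_loss(view_a, view_b[::-1])      # deliberately wrong pairing
```

The loss is lower when the paired rows really are views of the same sample, which is the signal the encoder is trained to maximize.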

Self-supervised learning (SSL) is a technique that enables models to extract useful features from data without requiring explicit human-provided labels. This is achieved by creating artificial labels from the data itself, typically through pretext tasks such as predicting rotations, color distortions, or missing parts of an image. By training on these self-generated labels, the model learns robust and transferable representations of the underlying data distribution. The resulting representations capture inherent data characteristics, improving the model’s ability to generalize to unseen data and reducing the reliance on large, labeled datasets which are often costly and time-consuming to obtain. Consequently, models pre-trained with SSL demonstrate improved performance on downstream tasks, even with limited labeled examples.
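As a generic illustration of a pretext task (rotation prediction, one of the examples named above), the sketch below derives pseudo-labels from the data itself. It is not specific to Cr-GAN or to SAR imagery, and the helper name `make_rotation_task` is hypothetical.

```python
import numpy as np

def make_rotation_task(images, rng):
    """Rotate each square image by a random multiple of 90 degrees.

    The multiple (0, 1, 2, or 3) becomes the pseudo-label a model would learn to predict.
    """
    labels = rng.integers(0, 4, size=len(images))
    rotated = np.stack([np.rot90(img, k=int(k)) for img, k in zip(images, labels)])
    return rotated, labels

rng = np.random.default_rng(0)
images = rng.random((5, 8, 8))            # 5 hypothetical unlabeled 8x8 images
rotated, labels = make_rotation_task(images, rng)
```

No human annotation is involved: the labels come entirely from the transformation applied to each image.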

Cr-GAN utilizes pre-training on extensive, unlabeled Synthetic Aperture Radar (SAR) imagery datasets to establish a robust foundational understanding of SAR data characteristics prior to supervised fine-tuning. This initial pre-training phase allows the model to learn inherent patterns and features within SAR imagery without reliance on labeled examples. Consequently, when subsequently fine-tuned with the comparatively smaller labeled dataset, Cr-GAN requires fewer labeled examples to achieve optimal performance, demonstrating improved data efficiency and generalization capability. The pre-trained weights serve as a strong starting point, reducing the need for extensive learning during the supervised phase and accelerating convergence.

Pre-training with SimCLR enhances data augmentation by establishing a robust feature space prior to supervised fine-tuning. The initial self-supervised phase allows the model to learn meaningful representations from unlabeled Synthetic Aperture Radar (SAR) data, resulting in more effective transformations during augmentation. Specifically, the pre-trained model is better equipped to generate augmented samples that preserve crucial SAR characteristics, preventing the introduction of noise or artifacts. This improved augmentation process reduces the need for extensive hyperparameter tuning and allows Cr-GAN to achieve higher performance with a limited quantity of labeled training data, as the model already possesses a strong understanding of relevant feature distributions.

Demonstrating Impact: Performance Validation and Generalization

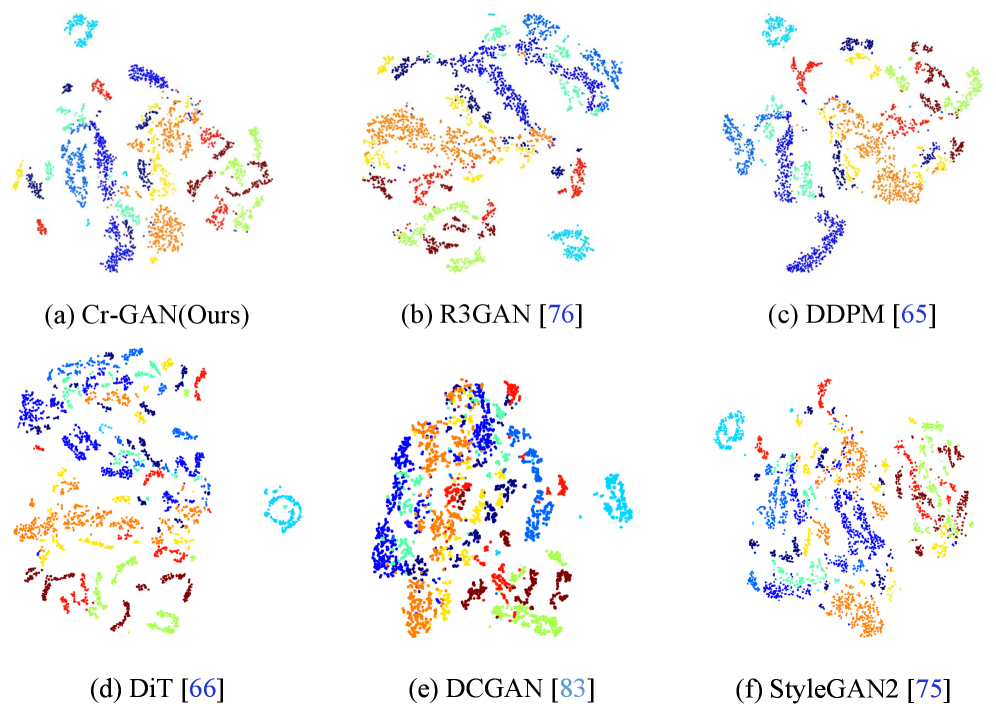

Evaluations of Cr-GAN, following pre-training with the SimCLR framework, reveal its robust capabilities in synthetic aperture radar (SAR) image analysis. The model’s performance was rigorously tested using both the MSTAR and SRSDD datasets, standard benchmarks for assessing algorithms designed for target recognition and ship detection. Results demonstrate Cr-GAN’s effectiveness in discerning objects within complex SAR imagery, highlighting its potential for practical applications in remote sensing, surveillance, and defense. This successful validation across diverse datasets underscores the model’s generalization capability and positions it as a promising solution for real-world SAR image interpretation tasks.

The Cr-GAN demonstrated substantial performance in synthetic aperture radar (SAR) target recognition, achieving an accuracy of 71.21% on the challenging MSTAR dataset under an 8-shot learning scenario. This result signifies a significant advancement, as the Cr-GAN surpassed the performance of all previously established generative adversarial network (GAN)-based and diffusion-based methodologies when tested under the same conditions. This capability highlights the model’s potential for effective target identification even with limited training examples, offering a practical solution for applications where data acquisition is costly or time-consuming.
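For readers unfamiliar with the term, an 8-shot scenario means only eight labeled examples per class are available for training. A minimal sketch of drawing such a subset follows; the class count, pool size, and helper name `sample_k_shot` are illustrative assumptions, not the paper's protocol.

```python
import numpy as np

def sample_k_shot(labels, k, rng):
    """Return sorted indices that select exactly k examples from every class."""
    labels = np.asarray(labels)
    chosen = []
    for cls in np.unique(labels):
        candidates = np.flatnonzero(labels == cls)
        if len(candidates) < k:
            raise ValueError(f"class {cls} has fewer than {k} examples")
        chosen.extend(rng.choice(candidates, size=k, replace=False))
    return np.sort(np.array(chosen))

rng = np.random.default_rng(0)
labels = np.repeat(np.arange(10), 50)     # hypothetical pool: 10 classes x 50 images
support = sample_k_shot(labels, k=8, rng=rng)
```

Here `support` holds 80 indices, eight per class; everything else in the pool would be treated as unlabeled or held out for evaluation.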

Cr-GAN's result on MSTAR is also reported with a complementary metric: an F1-score of 71.23%. The F1-score is the harmonic mean of precision and recall, so it penalizes a model that does well on one at the expense of the other. An F1-score this close to the reported accuracy is consistent with reasonably balanced performance across target classes, rather than errors concentrated in a few of them, which is an important attribute for practical applications like defense and environmental monitoring.
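To make the harmonic-mean definition concrete, here is a small worked example. It assumes macro averaging (an unweighted mean of per-class F1); the source does not state which averaging scheme the paper uses, and the labels below are made up.

```python
import numpy as np

def macro_f1(y_true, y_pred, num_classes):
    """Unweighted mean of per-class F1, where F1 = 2PR / (P + R)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    scores = []
    for c in range(num_classes):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores.append(f1)
    return float(np.mean(scores))

y_true = [0, 0, 0, 1, 1, 2]   # made-up ground truth
y_pred = [0, 0, 1, 1, 1, 2]   # one class-0 sample mislabeled as class 1
score = macro_f1(y_true, y_pred, num_classes=3)
# Per-class F1: 0.8, 0.8, 1.0 -> macro F1 = 2.6 / 3, about 0.867
```

The single error lowers recall for class 0 and precision for class 1, and each class's F1 drops to 0.8 as a result.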

Cr-GAN distinguishes itself through remarkable computational efficiency, achieving strong performance with a mere 13.71 million model parameters. This streamlined architecture represents a substantial reduction in complexity when contrasted with contemporary generative models such as EDM2, which requires approximately 280 million parameters to function. The significantly lower parameter count not only reduces computational demands during both training and inference, but also facilitates easier deployment on resource-constrained platforms – a critical advantage for real-world applications demanding rapid processing and minimal energy consumption. This efficient design allows Cr-GAN to deliver competitive results without the substantial overhead typically associated with larger, more complex models.

The efficiency of Cr-GAN extends beyond accuracy, manifesting in remarkably swift training times. Empirical results demonstrate that Cr-GAN completes its training cycle in just 0.27 hours – a substantial reduction compared to the 6.55 hours required by R3GAN. This accelerated training is a key advantage, enabling faster iteration and experimentation for researchers and developers working with synthetic aperture radar (SAR) data. The speed of Cr-GAN’s training, coupled with its competitive performance, positions it as a practical and efficient solution for both target recognition and ship detection tasks, minimizing computational costs and maximizing productivity.
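The reported figures translate into the following ratios. This is plain arithmetic on the numbers quoted above; note that the parameter comparison is against EDM2 and the training-time comparison is against R3GAN, so the two ratios refer to different baselines.

```python
# Figures as quoted in the text above.
cr_gan_params_m = 13.71    # million parameters
edm2_params_m = 280.0      # million parameters (approximate)
cr_gan_hours = 0.27        # training time
r3gan_hours = 6.55         # training time

param_ratio = edm2_params_m / cr_gan_params_m
time_ratio = r3gan_hours / cr_gan_hours
cr_gan_minutes = cr_gan_hours * 60

print(f"EDM2 has about {param_ratio:.1f}x as many parameters as Cr-GAN")   # 20.4x
print(f"R3GAN takes about {time_ratio:.1f}x as long to train as Cr-GAN")   # 24.3x
print(f"Cr-GAN training time: about {cr_gan_minutes:.0f} minutes")         # 16 minutes
```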

Charting Future Directions: Beyond GANs and Towards Advanced Generation

Combining diffusion models with the Consistency-Regularized GAN (Cr-GAN) is a promising avenue for future research in Synthetic Aperture Radar (SAR) data augmentation. Cr-GAN improves data diversity and realism through consistency regularization, while diffusion models excel at generating high-fidelity samples by progressively refining noise into coherent images. Integrating the two could draw on the strengths of both, namely Cr-GAN's efficient data diversification and diffusion models' image quality, and might produce augmented datasets that outperform those created by either method alone. Such an integration could use a diffusion model to refine the outputs of Cr-GAN or, conversely, use Cr-GAN's discriminator within a diffusion framework, with the aim of improving the realism and utility of generated SAR imagery for downstream applications.

The performance of Cr-GAN could potentially be improved further through transfer learning. This approach leverages knowledge gained from training on datasets in related but distinct domains, for example applying insights from optical image generation to refine synthetic aperture radar (SAR) image creation. By pre-training components of Cr-GAN on larger, more readily available datasets, the network can develop a foundational understanding of general image characteristics. This pre-trained knowledge is then transferred and fine-tuned on the target SAR dataset, which may require less data and fewer computational resources to reach good performance. The intended result is a more efficient and accurate generative model that can produce high-quality augmented SAR imagery even with limited labeled data, broadening the applicability of SAR technology across scientific and commercial fields.

The challenge of limited labeled data in Synthetic Aperture Radar (SAR) image analysis can be significantly mitigated by combining few-shot learning with data augmentation techniques like Cr-GAN. Few-shot learning algorithms are designed to generalize from a very small number of examples, but their performance is greatly enhanced when paired with artificially expanded datasets. Cr-GAN’s capacity to generate realistic and diverse SAR images effectively increases the size of the training set, allowing these algorithms to learn more robust features and achieve higher accuracy even with scarce labeled data. This synergy is particularly valuable in specialized applications where acquiring extensive labeled SAR imagery is costly, time-consuming, or impractical, opening doors to broader adoption of SAR technology in domains like environmental monitoring and disaster response.

The future of Synthetic Aperture Radar (SAR) imagery analysis hinges on continued progress in generative modeling. While current techniques like Cr-GAN demonstrate significant potential for data augmentation and enhanced performance, further innovation promises to unlock entirely new applications. These advancements will not only refine existing capabilities – such as object detection, scene classification, and change monitoring – but also enable solutions for challenges previously considered intractable due to data scarcity. Improved generative models will facilitate the creation of realistic SAR datasets, crucial for training robust machine learning algorithms and supporting time-sensitive applications like disaster response, environmental monitoring, and precision agriculture. Ultimately, the ability to synthesize high-fidelity SAR data will democratize access to this powerful remote sensing technology, fostering innovation across diverse scientific and commercial fields.

The pursuit of robust SAR target recognition, as demonstrated by Cr-GAN, hinges on discerning underlying patterns within limited datasets. The framework's emphasis on feature consistency directly addresses the challenge of extracting meaningful information from sparse data, a cornerstone of few-shot learning. This echoes a remark attributed to Yann LeCun: “Backpropagation is the dark art of deep learning, and we need to understand it better.” Understanding how information propagates through Cr-GAN's dual-branch discriminator, and how feature consistency acts as a regularizer, clarifies the system's behavior and can reveal potential vulnerabilities. The method's success is not simply a matter of high accuracy; it depends on building a model that reliably generalizes from minimal examples, which demands a rigorous assessment of data boundaries and the impact of noise.

Future Directions

The presented Cr-GAN, while demonstrating notable advances in few-shot SAR target recognition, implicitly acknowledges the persistent challenge of translating limited observation into robust pattern identification. The framework’s reliance on feature consistency suggests a broader trend: the pursuit of internal, self-derived constraints to compensate for sparse external data. Future work should carefully check data boundaries to avoid spurious patterns arising from over-interpretation of the augmented samples; simply generating more data is not equivalent to generating better data.

A logical extension involves exploring alternative consistency regularizations, perhaps moving beyond feature-level constraints to incorporate contextual or relational consistency. The dual-branch discriminator, while effective, presents a computational trade-off. Investigating more efficient discriminator architectures, or even entirely novel adversarial training strategies, could unlock further gains in both accuracy and speed. It would be prudent to examine how well these techniques generalize to significantly different sensor modalities or target classes – a truly robust system must resist overfitting to specific data characteristics.

Ultimately, the success of few-shot learning hinges on developing representations that capture the essence of a target, independent of specific instances. The current approach represents a step towards this goal, but a deeper understanding of how to distill meaningful information from limited observations remains a crucial, and perhaps perpetually elusive, pursuit.

Original article: https://arxiv.org/pdf/2601.15681.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-24 23:24