Author: Denis Avetisyan

This review explores how deep learning and mobile edge computing can be combined to create a self-managing system for real-time threat detection in next-generation wireless networks.

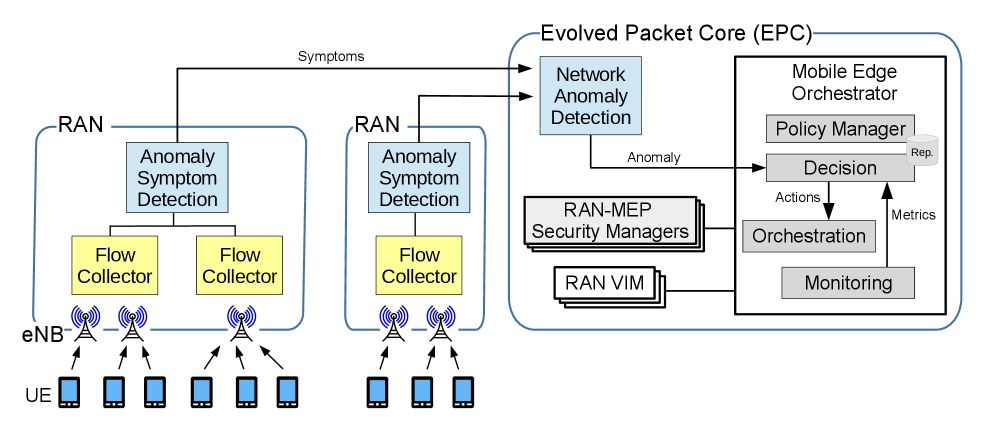

A policy-driven, MEC-based architecture leverages deep learning for dynamic anomaly detection and enhanced cybersecurity in 5G networks.

The increasing complexity of 5G networks presents a paradox: while promising unprecedented connectivity, it simultaneously amplifies vulnerabilities to evolving cyber threats. Addressing this challenge, the paper ‘Dynamic Management of a Deep Learning-Based Anomaly Detection System for 5G Networks’ introduces a Mobile Edge Computing (MEC)-oriented architecture that leverages deep learning for real-time, autonomous anomaly detection. The proposed system dynamically manages computing resources through policy-based control, enhancing both network security and operational efficiency. Will this approach pave the way for truly self-defending 5G infrastructures capable of proactively mitigating emerging threats?

Unveiling the Cracks: The Evolving Threat Landscape

The escalating frequency and complexity of cyberattacks are rapidly outpacing the capabilities of conventional security systems. Historically, defenses centered on identifying known threats through signatures and pre-defined rules, a strategy proving increasingly ineffective against modern adversaries. Attackers now employ techniques like advanced persistent threats, fileless malware, and sophisticated social engineering to bypass these static defenses. Furthermore, the sheer volume of attacks – often automated and launched from distributed networks – overwhelms traditional intrusion detection systems, creating significant blind spots. This combination of increased sophistication and scale necessitates a fundamental shift towards more adaptive and intelligent security architectures capable of anticipating and responding to evolving threats in real-time, rather than simply reacting to past events.

Traditional cybersecurity defenses heavily leaned on signature-based detection, a method comparing incoming data against a database of known malicious patterns. However, this approach falters dramatically against contemporary threats like zero-day exploits – attacks leveraging previously unknown vulnerabilities – and polymorphic malware. These advanced threats actively evade signature detection by constantly altering their code, presenting a different ‘face’ with each iteration. This dynamic obfuscation renders static signature lists obsolete almost immediately, creating a critical detection gap. Consequently, systems protected solely by signature-based methods remain vulnerable to novel attacks, as the defenses are inherently reactive and unable to recognize malicious behavior outside of pre-defined patterns. The increasing prevalence of these evasive techniques necessitates a shift toward more adaptive and proactive security measures.

Contemporary cybersecurity increasingly prioritizes the anticipation of threats rather than simply reacting to them. Traditional methods, focused on recognizing known malicious signatures, falter against novel attacks and constantly evolving malware. A behavior-based approach, however, establishes a baseline of normal system activity and flags deviations that suggest malicious intent, even if the specific threat is previously unknown. This proactive stance allows for the identification of zero-day exploits and polymorphic malware by focusing on how a program operates, rather than what it is. By analyzing patterns of behavior – such as unusual network traffic, unauthorized file modifications, or suspicious process interactions – systems can detect and neutralize threats before they escalate into significant breaches, offering a crucial advantage in the ever-evolving digital landscape. The emphasis shifts from post-incident response to pre-emptive defense, safeguarding assets and data with a more resilient security posture.
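The baseline-and-deviation idea described above can be illustrated with a deliberately simple statistical sketch. It is not the deep learning detector from the paper: it assumes a single hypothetical metric (outbound connections per minute), made-up sample values, and an illustrative z-score threshold of 3.

```python
from statistics import mean, stdev


def fit_baseline(samples):
    """Summarize normal activity as (mean, sample standard deviation)."""
    return mean(samples), stdev(samples)


def is_anomalous(value, baseline, z_threshold=3.0):
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly constant baseline: any different value is a deviation.
        return value != mu
    return abs(value - mu) / sigma > z_threshold


# Hypothetical connection counts observed during normal operation.
normal_counts = [48, 52, 50, 47, 53, 49, 51, 50]
baseline = fit_baseline(normal_counts)

print(is_anomalous(54, baseline))  # within normal variation
print(is_anomalous(90, baseline))  # far outside the baseline
```

The detector never needs to know what the threat is, only that the observed behavior departs from what was learned as normal. That is also why the quality of the baseline matters so much.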

Flow and Insight: Fueling Intelligence with Network Data

Network flow collection provides a detailed, high-fidelity representation of network activity by recording metadata about network traffic – including source and destination IP addresses, ports, protocols, and traffic volumes – without capturing the payload data itself. This approach offers significant advantages for anomaly detection due to its low overhead and comprehensive coverage. Unlike packet capture, flow collection generates substantially less data, enabling analysis of network-wide traffic at scale. The resulting flow records provide a statistical profile of communication patterns, which is essential for establishing baselines of normal behavior and subsequently identifying deviations indicative of malicious activity or network compromise. Robust flow collection, utilizing technologies like NetFlow, sFlow, or IPFIX, is therefore a critical prerequisite for effective anomaly detection systems.
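To make the flow-record idea concrete, here is a minimal sketch of how packet metadata might be folded into per-flow statistics keyed by the usual 5-tuple. The record layout, the `FlowStats` name, and the sample packets are assumptions for illustration; real exporters such as NetFlow or IPFIX define their own record formats.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class FlowStats:
    packets: int = 0
    byte_count: int = 0


def aggregate_flows(packets):
    """Group packet metadata by 5-tuple; payloads are never inspected."""
    flows = defaultdict(FlowStats)
    for src, dst, sport, dport, proto, size in packets:
        stats = flows[(src, dst, sport, dport, proto)]
        stats.packets += 1
        stats.byte_count += size
    return dict(flows)


# Hypothetical packet metadata: (src, dst, src_port, dst_port, protocol, size in bytes).
packets = [
    ("10.0.0.5", "192.0.2.10", 40000, 443, "TCP", 1500),
    ("10.0.0.5", "192.0.2.10", 40000, 443, "TCP", 500),
    ("10.0.0.7", "198.51.100.3", 53124, 53, "UDP", 80),
]

flows = aggregate_flows(packets)
for key, stats in flows.items():
    print(key, stats)
```

Three packets collapse into two flow records, which hints at why flow data is so much smaller than a full packet capture.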

Deep Learning algorithms demonstrate superior performance in anomaly detection due to their ability to automatically learn complex, non-linear relationships within high-dimensional datasets. Traditional methods, such as signature-based detection or statistical thresholding, often rely on pre-defined rules or assumptions about normal network behavior, limiting their effectiveness against novel or evolving threats. Deep Learning models, particularly those utilizing multi-layer neural networks, can identify subtle patterns indicative of malicious activity that would be missed by these conventional approaches. This is achieved through feature learning, where the algorithm autonomously extracts relevant characteristics from the data, eliminating the need for manual feature engineering and improving both detection rates and the ability to generalize to unseen attacks.

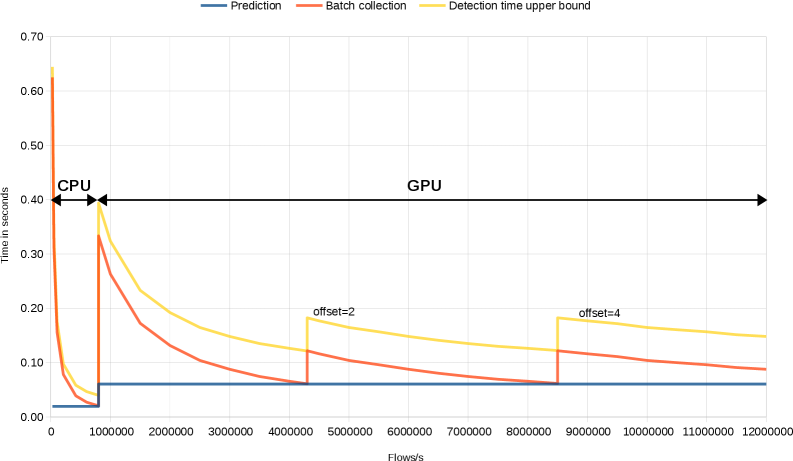

The integration of network flow collection with Deep Learning techniques facilitates the development of security systems capable of real-time threat detection. Utilizing GPU-based virtual machines, these systems achieve a detection time of less than 0.06 seconds. This performance is enabled by the high-fidelity data provided by flow collection, which allows Deep Learning algorithms to rapidly analyze network activity and identify anomalous patterns indicative of malicious behavior. The low latency achieved is critical for responding to threats before significant damage occurs, particularly in high-volume network environments.

The implemented deep learning system, when evaluated against a dataset of known botnet traffic, achieves a classification accuracy of 95.37%. The system also generalizes to previously unseen botnet families, although less reliably, identifying malicious activity from these novel threats with an accuracy of 68.61%. This performance indicates the system’s capacity not only to recognize known botnet traffic but also to detect anomalous behaviors indicative of new or evolving botnet variants, offering a degree of proactive threat detection.

Orchestrating Defenses: The Power of Policy-Driven Security

Management policies establish a centralized framework for security implementation, ensuring consistent application of rules and configurations across all network segments and devices. These policies define acceptable use, access controls, data handling procedures, and response protocols, thereby minimizing configuration drift and reducing the attack surface. By codifying security requirements, organizations can enforce a standardized security posture, streamline compliance efforts, and facilitate automated security operations. The efficacy of these policies relies on their comprehensive scope, regular review, and consistent enforcement through automated tools and processes.

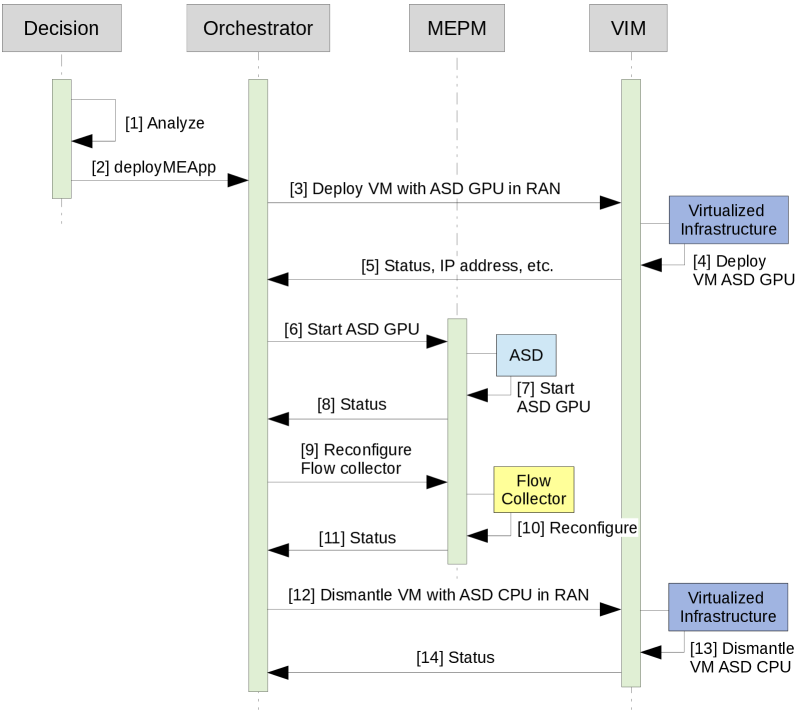

Policy-driven security extends beyond core network protections to encompass specialized deployments. Virtual Infrastructure Policy governs security within cloud environments, addressing concerns specific to virtualized workloads and the shared responsibility model. Simultaneously, Mobile Edge Application Policy manages security for applications deployed at the network edge – a distributed computing paradigm – requiring policies tailored to the unique constraints and threat landscape of those locations. These specialized policies ensure consistent security enforcement across diverse and evolving infrastructure architectures, supplementing and refining broader network-level policies.

Anomaly Detection Function Policy governs the behavior of deployed anomaly detection modules, establishing parameters to guarantee operation consistent with broader security goals. This policy dictates thresholds for anomaly scoring, acceptable false positive rates, and data sources utilized for analysis. Configuration includes defining the types of anomalies to be detected – such as deviations from baseline network traffic, unusual user behavior, or unexpected system calls – and specifying the appropriate response actions, ranging from logging and alerting to automated mitigation. Effective implementation of this policy ensures that anomaly detection efforts contribute directly to the organization’s overall security posture, preventing disparate or misconfigured modules from undermining defenses.
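One way to picture such a policy is as a small, immutable configuration object that maps an anomaly score to a response action. The sketch below is an assumption-laden illustration: the field names, threshold values, and action labels are hypothetical and do not reproduce the policy schema used in the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnomalyDetectionPolicy:
    alert_threshold: float          # score at or above which an alert is raised
    mitigate_threshold: float       # score at or above which mitigation is triggered
    max_false_positive_rate: float  # acceptable false positive rate for the module
    data_sources: tuple             # where the module reads its input from

    def action_for(self, score):
        """Map an anomaly score in [0, 1] to a response action."""
        if score >= self.mitigate_threshold:
            return "mitigate"
        if score >= self.alert_threshold:
            return "alert"
        return "log"


# Illustrative values only.
policy = AnomalyDetectionPolicy(
    alert_threshold=0.7,
    mitigate_threshold=0.9,
    max_false_positive_rate=0.01,
    data_sources=("netflow",),
)

for score in (0.2, 0.75, 0.95):
    print(score, policy.action_for(score))
```

Keeping thresholds and actions in one declarative object means a management layer can replace the whole policy at once, rather than reconfiguring each detection module by hand.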

The throughput of the anomaly detection function varies significantly with the selected deep learning framework and hardware. Using TensorFlow on a CPU, the system processes 16,384 features per second. Using Caffe2 on a GPU, it processes 262,144 features per second. This represents a 16x increase, although the comparison changes both the framework and the hardware, so the gain cannot be attributed to either one alone.
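The arithmetic behind the two throughput figures, and how a management layer might use them, can be sketched as follows. The throughput numbers come from the text above. The selection rule, the `pick_deployment` name, and the CPU-first ordering (which assumes the CPU option is the cheaper one) are hypothetical and not taken from the paper.

```python
# Throughput figures quoted in the text (features per second),
# listed in an assumed order of increasing resource cost.
DEPLOYMENTS = [
    ("TensorFlow on CPU", 16_384),
    ("Caffe2 on GPU", 262_144),
]


def speedup(fast_rate, slow_rate):
    """Ratio between two throughput figures."""
    return fast_rate / slow_rate


def pick_deployment(required_rate):
    """Return the first listed deployment that sustains the required rate, or None."""
    for name, rate in DEPLOYMENTS:
        if rate >= required_rate:
            return name
    return None


print(speedup(262_144, 16_384))
print(pick_deployment(10_000))
print(pick_deployment(100_000))
print(pick_deployment(1_000_000))
```

A rule of this kind would keep light traffic on the cheaper option and move to the GPU deployment only when the incoming feature rate demands it. A rate that neither option can sustain returns `None`, signalling that more capacity is needed.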

Beyond Reaction: Charting the Course for Adaptive Network Security

Organizations are increasingly leveraging the synergy between advanced analytics and policy-driven security to achieve real-time threat detection and response. This integration moves beyond traditional signature-based systems by employing machine learning algorithms to establish baseline network behaviors and subsequently identify anomalous activity indicative of malicious intent. When deviations from the norm occur, pre-defined security policies are automatically triggered, enacting containment measures like isolating compromised systems or blocking suspicious traffic. This automated response dramatically reduces the window of opportunity for attackers, minimizing potential damage and freeing security personnel to focus on more complex investigations. The result is a proactive security posture that adapts to evolving threats, rather than simply reacting to them after the fact.

Automated security responses represent a paradigm shift in network defense, alleviating the strain on increasingly burdened security teams. Traditionally, identifying and mitigating threats required significant manual intervention, a process both time-consuming and prone to error. Current systems now leverage the speed of machine analysis to enact pre-defined actions upon threat detection – isolating compromised systems, blocking malicious traffic, or triggering forensic investigations – all without direct human involvement. This automation not only accelerates response times, minimizing the window of opportunity for attackers, but also frees up security professionals to focus on proactive threat hunting, vulnerability management, and the development of more robust security strategies, ultimately enhancing overall organizational efficiency and resilience.

The evolution of network security increasingly relies on a synergistic blend of anomaly detection, deep learning, and intelligently crafted policies, marking a substantial advancement toward robust and adaptable defenses. Traditional signature-based systems struggle against novel threats; however, anomaly detection identifies deviations from established baseline network behavior, flagging potentially malicious activity. This process is significantly enhanced by deep learning algorithms, which can discern complex patterns and predict future attacks with greater accuracy than conventional methods. Crucially, these insights aren’t simply alerts; intelligent policies automate appropriate responses, ranging from isolating compromised systems to dynamically adjusting firewall rules. This automated, data-driven approach not only minimizes the impact of successful breaches but also proactively strengthens the network’s overall resilience, enabling it to learn and adapt to the ever-changing threat landscape.

The research detailed within this paper inherently embodies a spirit of calculated disruption. It isn’t enough to simply deploy a deep learning anomaly detection system; the architecture must be dynamically managed, adapting to evolving threats and network conditions. This proactive approach aligns perfectly with Ada Lovelace’s observation: “That brain of mine is something more than merely mortal; as time will show.” The system, much like Lovelace’s vision of the Analytical Engine, doesn’t passively receive data; it actively tests the boundaries of acceptable network behavior – essentially ‘breaking the rules’ to define and defend against anomalies. By leveraging policy-based management within a MEC framework, the system moves beyond static security measures, embracing a more fluid and intelligent response to the complexities of 5G networks.

Where Do We Go From Here?

The presented architecture, while demonstrating a functional approach to anomaly detection in 5G networks, inherently shifts the focal point. The system doesn’t merely detect deviation; it necessitates a rigorous definition of ‘normal’ – a moving target in any complex, evolving system. The true challenge lies not in building more sophisticated detectors, but in understanding the limitations of categorization itself. What is flagged as anomalous may, in fact, be the emergent behavior of a network adapting to unforeseen demands – a beneficial mutation stifled by overzealous security protocols.

Further work must address the inherent opacity of deep learning models. Policy-based management, as proposed, is only effective if the policies themselves are auditable and justifiable. Transparency isn’t about revealing the inner workings of the algorithm – that’s a fool’s errand – but about understanding why a particular action was taken. A ‘black box’ that consistently delivers correct results is less concerning than one whose reasoning remains inscrutable, particularly when dealing with critical infrastructure.

Ultimately, the pursuit of perfect anomaly detection is a paradox. A truly secure system isn’t one that eliminates all deviation, but one that anticipates and accommodates it. The next step isn’t simply to refine the algorithms, but to design networks that are inherently resilient, adaptable, and capable of self-diagnosis – systems that view anomalies not as threats, but as opportunities for learning and improvement.

Original article: https://arxiv.org/pdf/2601.15177.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-22 09:40