Author: Denis Avetisyan

Researchers have developed a new meta-learning framework that rapidly adapts to changing conditions, improving the resilience of power grids facing increasing renewable energy integration.

This work introduces a gradient-free meta-reinforcement learning framework for faster critical load restoration in distribution systems under forecast uncertainty, offering improved adaptation and regret bounds.

Maintaining distribution grid resilience following disruptive events is increasingly challenging due to the inherent uncertainty of renewable generation and the complexity of dynamic system responses. This paper introduces a meta-guided gradient-free reinforcement learning (MGF-RL) framework, detailed in ‘Toward Adaptive Grid Resilience: A Gradient-Free Meta-RL Framework for Critical Load Restoration’, designed to rapidly adapt to unseen outage scenarios and renewable energy patterns. By combining first-order meta-learning with evolutionary strategies, MGF-RL achieves improved critical load restoration performance across reliability, speed, and adaptation efficiency without relying on gradient computation. Could this approach unlock more robust and scalable solutions for real-time grid management in the face of growing renewable integration and increasingly frequent extreme weather events?

The Inevitable Cascade: Adapting to Systemic Stress

Following a power system disruption, the swift restoration of critical loads – those essential services like hospitals and emergency networks – is paramount. However, conventional restoration techniques, particularly those relying on Mixed-Integer Linear Programming (MILP), often prove inadequate for the speed and scale required in modern grids. These methods, while theoretically optimal, demand significant computational resources and time, becoming increasingly impractical as grid complexity grows. The inherent difficulty in solving large-scale MILP problems creates a bottleneck, hindering the ability to rapidly assess system status and implement effective restoration plans. This computational burden is especially problematic during cascading failures or widespread outages, where timely action is crucial to prevent prolonged service interruptions and maintain grid stability. Consequently, a shift towards more efficient and scalable restoration strategies is essential to address the challenges of increasingly complex and dynamic power systems.

The growing integration of renewable energy sources, while crucial for sustainability, presents a significant challenge to maintaining grid stability and necessitates a re-evaluation of traditional restoration strategies. Unlike predictable baseload power, sources like solar and wind are inherently intermittent, leading to Renewable Forecast Error – the discrepancy between predicted and actual power generation. This volatility dramatically increases the frequency and complexity of grid disruptions, demanding Critical Load Restoration (CLR) techniques that can adapt in real-time. Consequently, static, computationally intensive CLR methods are proving inadequate; a dynamic approach capable of swiftly responding to unpredictable fluctuations in renewable output is essential to minimize service interruptions and ensure continued reliability for consumers. Addressing this challenge is not merely about improving existing systems, but about building a more resilient and responsive grid prepared for the future of energy.

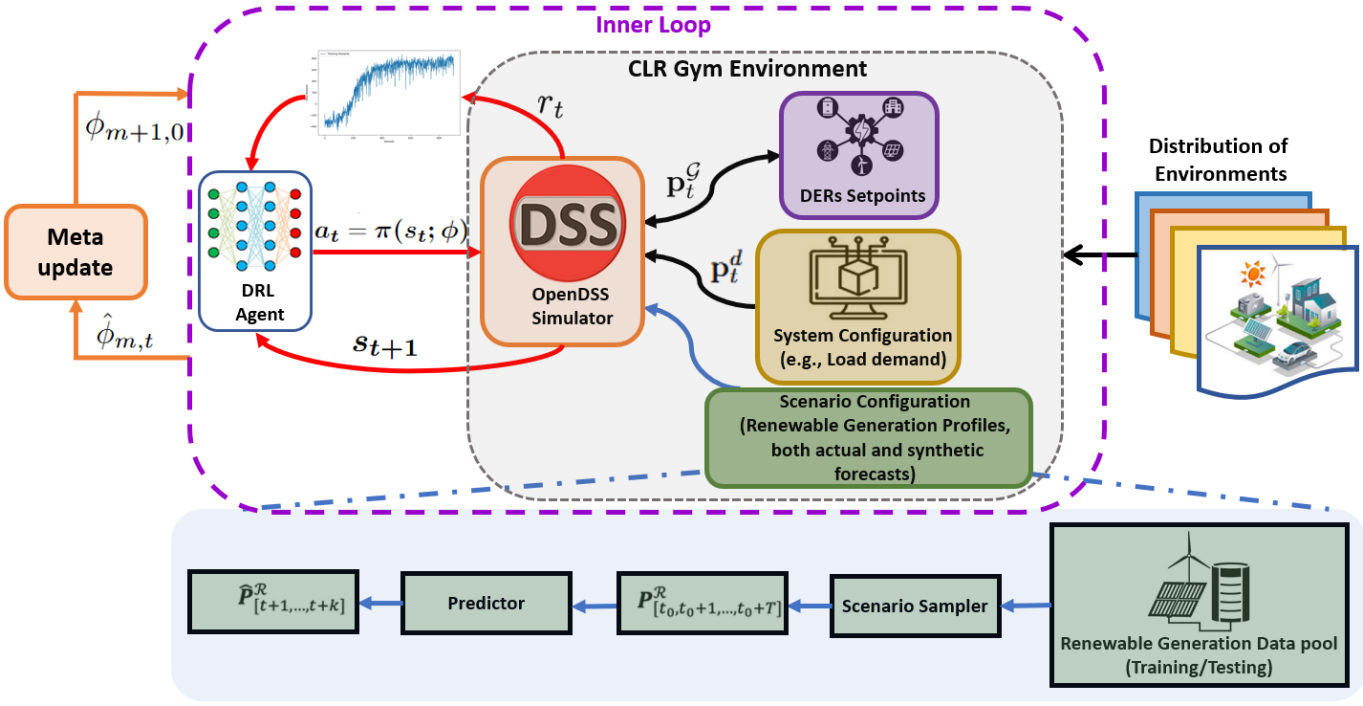

Critical Load Restoration, the process of rapidly recovering essential services after a disruptive event, benefits significantly from a shift in modeling approach. Traditional optimization techniques often struggle with the dynamic and uncertain nature of modern power grids. Recognizing this, researchers are increasingly framing CLR as a sequential decision-making problem – a series of choices made over time, where each action influences future states. The Markov Decision Process (MDP) provides a powerful mathematical framework for this, allowing for the explicit representation of system states, possible actions, and the probabilistic consequences of those actions. By formulating CLR as an MDP, algorithms can learn optimal restoration strategies that account for uncertainty and adapt to changing grid conditions, ultimately improving system resilience and minimizing service interruptions. This approach moves beyond static solutions towards intelligent, adaptive control crucial for integrating variable renewable energy sources and maintaining grid stability in the face of increasing complexity.
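To make the MDP framing concrete, here is a minimal toy sketch of a single restoration step. It is not the paper's model: the load sizes, priority weights, one-hour intervals, the uniform forecast-error model, and the names `State` and `transition` are all assumptions chosen for illustration.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class State:
    step: int          # index of the current control interval
    stored_kwh: float  # energy remaining in local storage

# Hypothetical critical loads (kW) and their priority weights.
LOADS_KW = [30.0, 20.0, 10.0]
PRIORITY = [3.0, 2.0, 1.0]

def transition(state, action, forecast_kw, error_frac, rng):
    """One MDP step. action[i] is True if load i is energized this interval.

    Intervals are assumed to last one hour, so kW and kWh are interchangeable.
    """
    # Actual renewable output deviates from the forecast by a random error.
    actual_kw = forecast_kw * (1.0 + rng.uniform(-error_frac, error_frac))
    demand_kw = sum(load for load, on in zip(LOADS_KW, action) if on)
    balance = state.stored_kwh + actual_kw - demand_kw
    if balance < 0:
        # Infeasible plan: no load is served and the agent is penalized.
        reward = -sum(PRIORITY)
        next_stored = state.stored_kwh + actual_kw
    else:
        # Feasible plan: reward is the priority-weighted count of served loads.
        reward = sum(p for p, on in zip(PRIORITY, action) if on)
        next_stored = balance
    return State(state.step + 1, next_stored), reward

# Usage: energize only the highest-priority load under a 25% forecast error.
rng = random.Random(0)
next_state, reward = transition(State(0, 10.0), (True, False, False), 25.0, 0.25, rng)
```

The state, the action, and the stochastic transition are the three ingredients the MDP formulation requires; a learned policy would replace the hand-picked action in the usage line.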

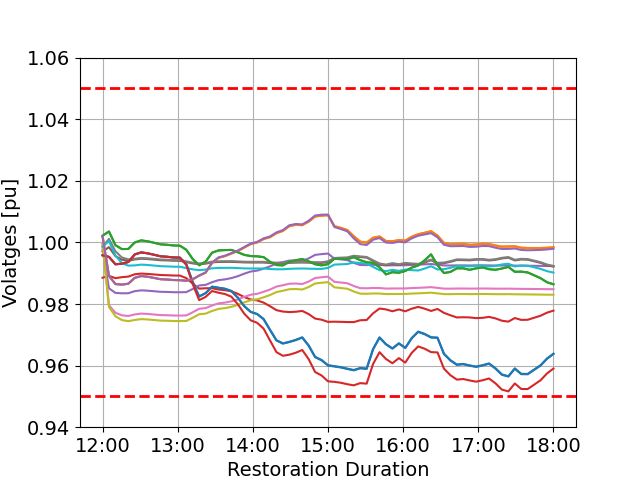

Minimizing service interruption is paramount for modern power grids, and improvements in this area translate directly into enhanced reliability metrics such as the System Average Interruption Duration Index (SAIDI). This metric, a key performance indicator for utility companies, quantifies the total outage duration experienced by the average customer over a reporting period. The developed framework addresses this critical need by strategically managing power restoration following disruptions, shortening the outages that customers experience. Through simulations and analysis, the approach consistently outperforms traditional methods in minimizing cumulative interruption time, leading to a measurable decrease in SAIDI values and ultimately bolstering grid resilience for end-users. This improvement isn’t merely theoretical; it represents a tangible benefit in terms of reduced economic losses associated with power outages and increased customer satisfaction.
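For readers unfamiliar with the metric, SAIDI is conventionally computed as the sum of customer interruption durations divided by the total number of customers served. The short sketch below shows that arithmetic; the function name `saidi` and the outage figures are made up for illustration and do not come from the paper.

```python
def saidi(outages, customers_served):
    """Return SAIDI in minutes per customer.

    outages: iterable of (duration_minutes, customers_interrupted) pairs.
    customers_served: total number of customers on the system.
    """
    if customers_served <= 0:
        raise ValueError("customers_served must be positive")
    # Customer-minutes of interruption, summed over all outage events.
    customer_minutes = sum(duration * count for duration, count in outages)
    return customer_minutes / customers_served

# Hypothetical example: two outage events on a 1,000-customer feeder.
print(saidi([(90, 200), (30, 50)], 1000))  # → 19.5
```

Restoring the affected loads sooner shrinks the `duration` term of each event, which is how faster restoration feeds directly into a lower SAIDI.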

Learning to Adapt: Meta-Learning for System Resilience

Meta-learning addresses the challenge of rapid adaptation in critical load restoration (CLR) by enabling agents to accumulate experience not simply about how to perform tasks, but about how to learn new tasks. This is achieved by treating a distribution of tasks as the training data, allowing the agent to identify commonalities and leverage prior knowledge when encountering novel, yet related, challenges. Instead of starting from random initialization for each new CLR task, a meta-learned agent can effectively initialize its policy based on its experience with previously seen tasks, significantly reducing the time and data required to achieve proficient performance. This ‘learning to learn’ capability is distinct from traditional transfer learning, as it focuses on optimizing the learning process itself, rather than simply transferring a learned policy.

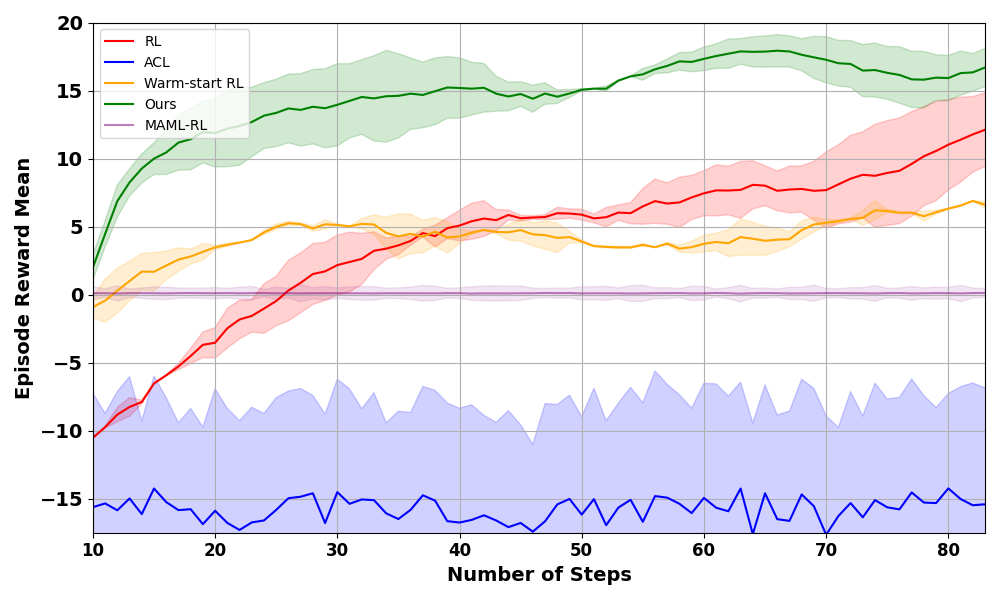

MGF-RL is a meta-learning framework designed to leverage the benefits of both gradient-free reinforcement learning, specifically Evolution Strategies-Reinforcement Learning (ES-RL), and first-order meta-update techniques. ES-RL provides robustness and simplicity, excelling in noisy or non-differentiable environments, while first-order meta-updates enable efficient adaptation by learning a policy initialization that is sensitive to task changes. MGF-RL combines these approaches by utilizing ES-RL for policy optimization within each task and employing first-order meta-updates to adjust the initial policy parameters based on performance across a distribution of tasks. This allows the agent to rapidly adapt to new CLR tasks by starting from an informed initialization, rather than random weights, effectively accelerating the learning process and improving overall performance.
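The article does not give the exact update rules, so the following is only a minimal sketch of the general idea: an evolution-strategies inner loop that uses reward evaluations alone, wrapped in a first-order meta-update of the shared initialization. It assumes antithetic ES sampling and a Reptile-style interpolation step, and it replaces the grid simulator with a toy quadratic reward. The names `make_task`, `es_adapt`, and `meta_train`, along with every hyperparameter, are hypothetical.

```python
import numpy as np

def make_task(target):
    """Toy stand-in for a restoration scenario: reward peaks at `target`."""
    target = np.asarray(target, dtype=float)
    return lambda theta: -float(np.sum((theta - target) ** 2))

def es_adapt(theta, reward_fn, rng, steps=20, pop=16, sigma=0.1, lr=0.05):
    """Inner loop: improve theta using only reward evaluations (no backprop)."""
    theta = theta.copy()
    for _ in range(steps):
        noise = rng.standard_normal((pop, theta.size))
        # Antithetic sampling: score each perturbation and its mirror image.
        r_plus = np.array([reward_fn(theta + sigma * e) for e in noise])
        r_minus = np.array([reward_fn(theta - sigma * e) for e in noise])
        # Finite-difference estimate of the ascent direction.
        step_dir = ((r_plus - r_minus)[:, None] * noise).mean(axis=0) / (2 * sigma)
        theta += lr * step_dir
    return theta

def meta_train(tasks, dim, rng, meta_iters=50, meta_lr=0.5):
    """Outer loop: first-order (Reptile-style) update of the initialization."""
    theta0 = np.zeros(dim)
    for _ in range(meta_iters):
        task = tasks[rng.integers(len(tasks))]
        adapted = es_adapt(theta0, task, rng)
        # Move the shared initialization toward the task-adapted parameters.
        theta0 += meta_lr * (adapted - theta0)
    return theta0

# Usage: meta-train on three related toy tasks, then adapt to an unseen one.
rng = np.random.default_rng(0)
tasks = [make_task(t) for t in ([1.0, 1.0], [1.2, 0.8], [0.8, 1.2])]
theta0 = meta_train(tasks, dim=2, rng=rng)
new_policy = es_adapt(theta0, make_task([1.1, 0.9]), rng, steps=5)
```

Neither loop differentiates through the reward, which is the property that makes this style of method usable when the environment is a non-differentiable simulator.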

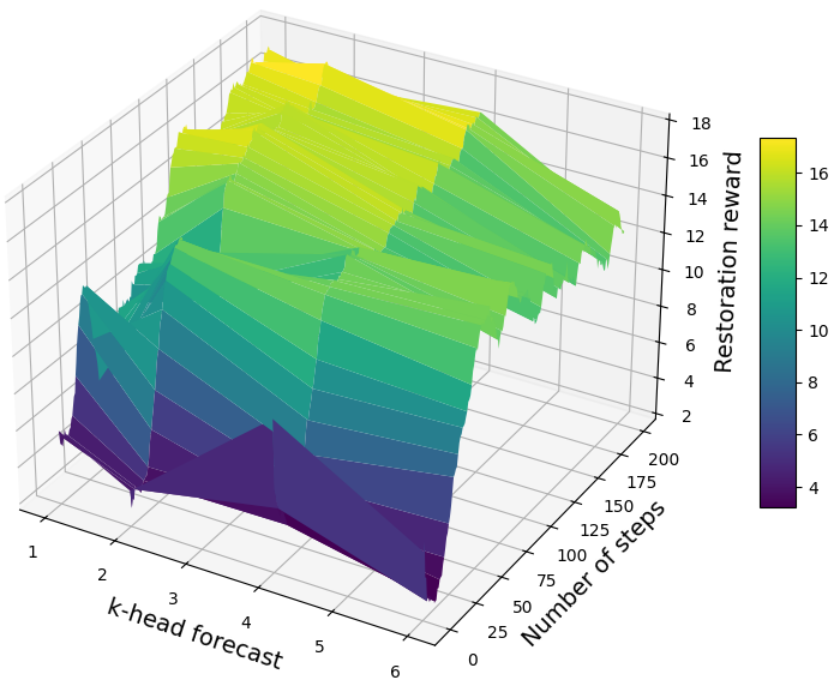

MGF-RL demonstrably enhances policy initialization for critical load restoration (CLR) tasks. Empirical results indicate that MGF-RL achieves faster convergence rates and improved control performance compared to four baseline algorithms: Evolution Strategies-Reinforcement Learning (ES-RL), warm-start Reinforcement Learning, Model-Agnostic Meta-Learning-Reinforcement Learning (MAML-RL), and Actor-Critic-Reinforcement Learning (AC-RL). Specifically, MGF-RL consistently yields higher cumulative reward across a range of CLR tasks, indicating a more effective initial policy capable of adapting to new conditions. These performance gains are attributed to the combination of gradient-free exploration via ES-RL and the efficiency of first-order meta-updates, allowing for more robust and rapid adaptation.

The effectiveness of meta-learning in critical load restoration (CLR) is significantly impacted by the similarity between tasks used during meta-training and those encountered during adaptation. Higher similarity allows for more effective knowledge transfer, as the learned meta-knowledge, encompassing initial policies and learning strategies, is directly applicable to the new task. Conversely, substantial differences in task dynamics, reward functions, or state spaces can lead to negative transfer, diminishing the benefits of meta-learning and potentially resulting in performance inferior to that of training from scratch. Measuring task similarity, often through metrics based on state or reward function overlap, is therefore crucial for curating effective meta-training datasets and predicting the potential for successful adaptation to novel CLR tasks.

Validation Through Standardized Systems: A Measure of Resilience

MGF-RL was subjected to performance evaluation using the widely adopted IEEE-13 Bus System and the larger IEEE-123 Bus System, both established benchmarks for testing and validating power system algorithms. The IEEE-13 Bus System, comprising 13 nodes representing a simplified power network, allowed for initial validation of the algorithm’s core functionalities. Subsequent testing was performed on the IEEE-123 Bus System, a more complex network with 123 nodes, to assess MGF-RL’s scalability and performance under conditions more representative of real-world power grids. Utilizing these standardized testbeds ensures comparability with existing power system restoration techniques and facilitates objective performance assessment.

Evaluations of MGF-RL on standard IEEE test systems demonstrated performance improvements over conventional power system restoration methods. Specifically, MGF-RL achieved faster system restoration times and enhanced overall grid stability following simulated disturbances. These improvements are quantitatively reflected in a reduction of the System Average Interruption Duration Index (SAIDI), a key metric for assessing power system reliability. Lower SAIDI values indicate less total interruption time per customer, confirming MGF-RL’s potential to improve service quality in power distribution networks.

Task-Averaged Regret (TAR) serves as a performance metric to evaluate the cumulative loss incurred by MGF-RL across a diverse set of critical load restoration (CLR) tasks. Unlike single-scenario evaluations, TAR takes the gap between the cost achieved by MGF-RL and the optimal cost on each CLR task, then averages that gap over all tasks. This aggregation provides a more robust assessment of the algorithm’s generalization capability, quantifying the average performance degradation experienced when it is applied to unseen outage scenarios. A lower TAR value indicates better generalization and a more consistent ability to minimize system costs across a wider range of operational scenarios.
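As a rough illustration of the averaging described here, the sketch below computes the mean per-task gap between achieved and optimal cost. The paper's formal definition may differ (for example, by averaging within each task over adaptation steps as well); the function name `task_averaged_regret` and the cost values are hypothetical.

```python
def task_averaged_regret(achieved_costs, optimal_costs):
    """Mean over tasks of (achieved cost - optimal cost)."""
    if not achieved_costs or len(achieved_costs) != len(optimal_costs):
        raise ValueError("need two non-empty cost lists of equal length")
    # Per-task regret: how far the learned policy is from the best possible cost.
    gaps = [a - o for a, o in zip(achieved_costs, optimal_costs)]
    return sum(gaps) / len(gaps)

# Hypothetical costs on three restoration tasks: gaps are 2.0, 0.5, and 2.5.
tar = task_averaged_regret([12.0, 9.5, 20.0], [10.0, 9.0, 17.5])
```

Because each gap is measured against that task's own optimum, tasks with very different absolute costs contribute on a comparable footing.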

Evaluation on the IEEE-13 and IEEE-123 bus systems demonstrates the scalability of MGF-RL to complex power grid topologies. Performance remained stable under conditions simulating renewable energy forecast inaccuracies of up to 25%, indicating robustness in real-world operating environments where prediction errors are inevitable. This sustained functionality, combined with demonstrated improvements in restoration speed and SAIDI metrics, validates the potential for practical deployment of MGF-RL in operational power grids requiring adaptive control and resilience to forecast uncertainty.

The pursuit of resilient systems, as demonstrated by this meta-learning framework for critical load restoration, reveals a fundamental truth about complexity. While the MGF-RL approach aims to accelerate adaptation to renewable energy forecast uncertainty, it implicitly acknowledges the inevitable drift from initial optimality. As Barbara Liskov observed, “It’s one thing to program something; it’s another thing to build a system that will last.” This framework doesn’t prevent decay (the constant need to restore critical loads suggests a dynamic, not static, equilibrium) but rather seeks to manage it, to ensure graceful aging in the face of unpredictable disturbances. The system’s capacity to learn and adapt, even with gradient-free optimization, is a testament to the possibility of prolonging functionality, though not halting the relentless march of time and its impact on even the most carefully designed infrastructure.

What Lies Ahead?

The presented meta-learning framework, while promising in its capacity to accelerate adaptation within distribution systems, merely addresses one iteration of an infinitely regressing problem. Resilience isn’t a destination; it’s a constant negotiation with entropy. Versioning the restoration process – learning how to learn – is a form of memory, a necessary but insufficient bulwark against unforeseen disturbances. The true challenge isn’t minimizing regret bounds within a defined parameter space, but expanding that space to encompass the genuinely novel.

Current approaches, even those employing meta-learning, tend to optimize for known unknowns – forecast uncertainty, component failure rates. But the arrow of time always points toward refactoring, toward the emergence of problems that haven’t yet been conceived. Future work must grapple with the unknown unknowns, perhaps by incorporating techniques from continual learning or anomaly detection, not merely to react to failures, but to anticipate them.

Ultimately, the field must move beyond incremental improvements in restoration speed and focus on systemic robustness. A grid that learns to anticipate its own decay, to gracefully shed load and redistribute resources before catastrophic failure, is not simply a more resilient grid – it’s a different kind of grid altogether. It acknowledges that perfect stability is an illusion, and that the most effective defense is not prevention, but adaptation.

Original article: https://arxiv.org/pdf/2601.10973.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-20 23:40