Author: Denis Avetisyan

New research explores how large language models can be used to analyze college curricula and determine the extent to which they foster essential 21st-century skills.

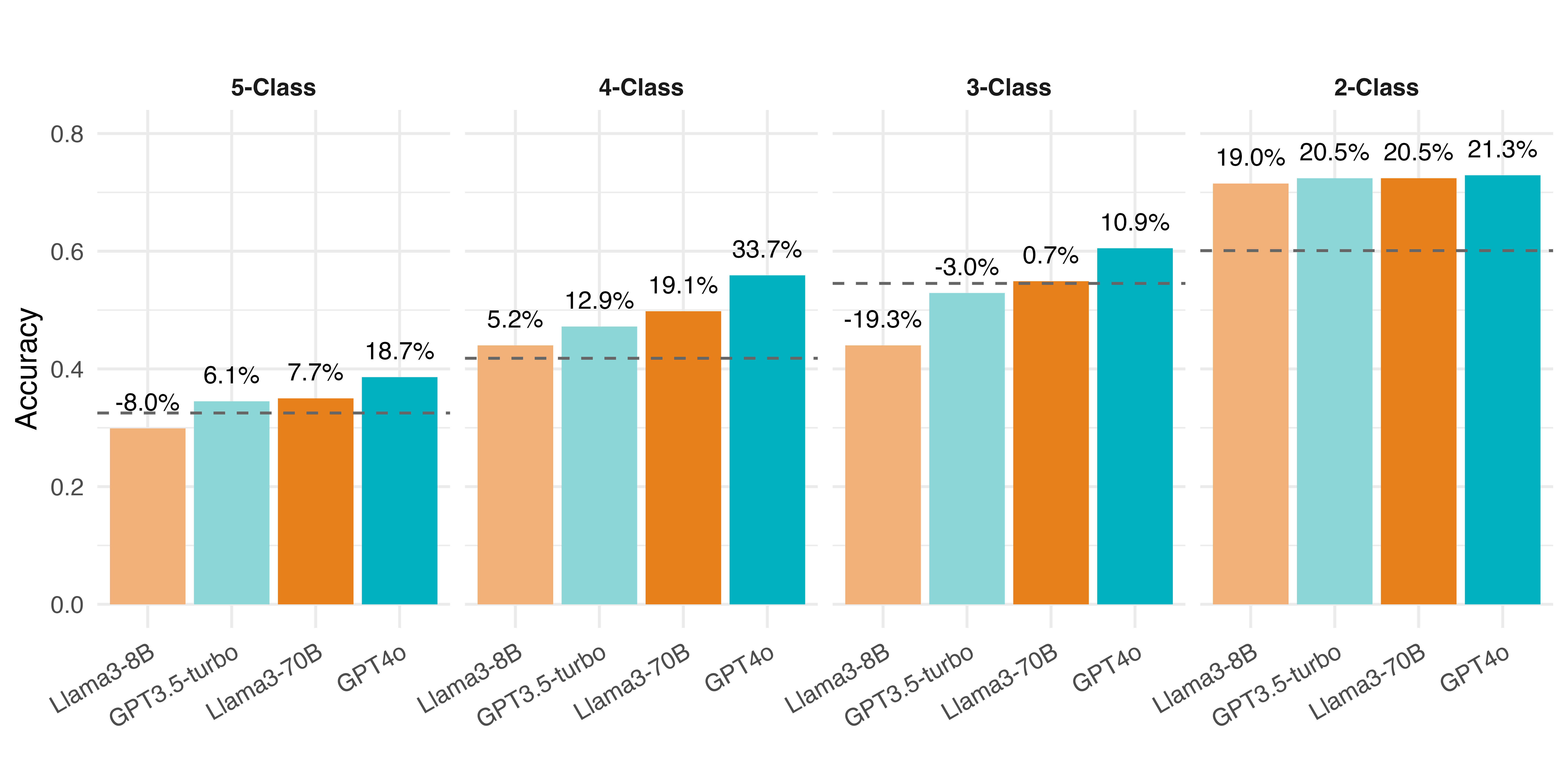

This study benchmarks the performance of large language models in evaluating competency coverage in postsecondary course materials, demonstrating that strategic prompt engineering can significantly improve assessment accuracy.

Despite growing calls for curricula to foster 21st-century competencies, systematically evaluating their integration remains a significant challenge. This study, ‘Evaluating 21st-Century Competencies in Postsecondary Curricula with Large Language Models: Performance Benchmarking and Reasoning-Based Prompting Strategies’, investigates the potential of large language models (LLMs) to analyze curriculum documents and map them to desired competencies. Results demonstrate that while open-weight LLMs offer a scalable solution, strategic prompt engineering, specifically a reasoning-based approach termed Curricular CoT, is crucial for improving pedagogical reasoning and reducing bias in competency detection. How can these findings inform the development of more effective, AI-driven curricular analytics and, ultimately, better prepare students for future success?

The Enduring Challenge of Curricular Fidelity

Historically, evaluating what is actually taught within a curriculum has relied heavily on painstaking manual reviews of course syllabi, lesson plans, and assessment materials. This process is not only incredibly time-consuming for educators, but also struggles to adapt to the rapid shifts in educational priorities and emerging skill requirements. The inherent lack of scalability means that comprehensive, system-wide curriculum assessments are often infrequent or impractical, leaving institutions unable to quickly identify gaps or ensure alignment between stated objectives and actual instructional practices. Consequently, responsive educational design – the ability to swiftly update curricula to meet evolving needs – is significantly hindered, potentially resulting in outdated content and a disconnect between classroom learning and the demands of a changing world.

The modern educational landscape increasingly prioritizes 21st-century competencies – critical thinking, creativity, collaboration, and communication – skills deemed essential for navigating a rapidly changing world. However, simply acknowledging these competencies is insufficient; a systematic approach to their integration within existing curricula is paramount. This necessitates moving beyond traditional subject-based assessments to identify opportunities where these skills can be explicitly taught, practiced, and assessed. Effective implementation requires a thorough analysis of learning objectives, instructional strategies, and assessment methods to ensure alignment with desired competency outcomes. Such a systematic review enables educators to proactively cultivate these crucial skills, moving beyond rote memorization towards fostering adaptable, innovative, and collaborative learners prepared for future challenges.

Educational institutions face increasing pressure to ensure curricula adequately prepare students for a rapidly changing job market. Without robust methods for aligning instructional materials with evolving competency frameworks – those skill sets deemed essential for success in the 21st century – there is a significant risk of a skills mismatch. This misalignment can result in graduates lacking the critical thinking, problem-solving, and collaborative abilities employers now prioritize, hindering both individual career prospects and broader economic competitiveness. Consequently, a proactive and systematic approach to curriculum alignment is not merely a pedagogical improvement, but a crucial investment in future workforce readiness and societal progress.

Automated Curricular Analysis: A Logical Imperative

Curricular Analytics utilizes Generative AI to automate the process of extracting pertinent data from curriculum-related documentation. This includes, but is not limited to, course syllabi and Open Educational Resources (OER). The system processes these typically unstructured text documents to identify key elements such as learning objectives, assessment methods, required skills, and core competencies. Automation of this extraction process reduces the manual effort traditionally required for curriculum review and analysis, enabling scalable assessment of educational materials and facilitating data-driven improvements to course design and content.

Large Language Models (LLMs) are the foundational technology enabling automated curricular analysis. These models, typically based on transformer architectures, excel at processing and understanding unstructured text data – such as course syllabi and open educational resources – without requiring pre-defined rules or extensive feature engineering. LLMs identify relevant competencies by analyzing textual content and recognizing patterns associated with specific skills, knowledge areas, and learning objectives. This capability extends beyond simple keyword matching; LLMs utilize contextual understanding to determine the meaning and intent behind the text, allowing for the extraction of competencies even when expressed using varied language or implicit descriptions. The models output structured data representing these identified competencies, facilitating quantitative and qualitative analysis of curricular materials.
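As a rough illustration of what "structured data representing these identified competencies" could look like, the sketch below parses a JSON array into typed records. The field names (`competency`, `present`, `evidence`) and the sample output are assumptions made for this example; the study's actual output schema is not described here.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class CompetencyFinding:
    """One competency judgment for one document (hypothetical schema)."""
    competency: str
    present: bool
    evidence: str


def parse_findings(raw_json: str) -> List[CompetencyFinding]:
    """Turn a JSON array emitted by a model into typed records."""
    records = json.loads(raw_json)
    return [
        CompetencyFinding(
            competency=record["competency"],
            present=bool(record["present"]),
            # Evidence is optional in this sketch; default to an empty string.
            evidence=record.get("evidence", ""),
        )
        for record in records
    ]


# Hypothetical model output for a single syllabus.
raw = (
    '[{"competency": "collaboration", "present": true, '
    '"evidence": "Weekly team project"}, '
    '{"competency": "creativity", "present": false}]'
)
findings = parse_findings(raw)
covered = [f.competency for f in findings if f.present]
print(covered)
```

Keeping the evidence string alongside each label makes it easier for a human reviewer to check why a competency was marked as present.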

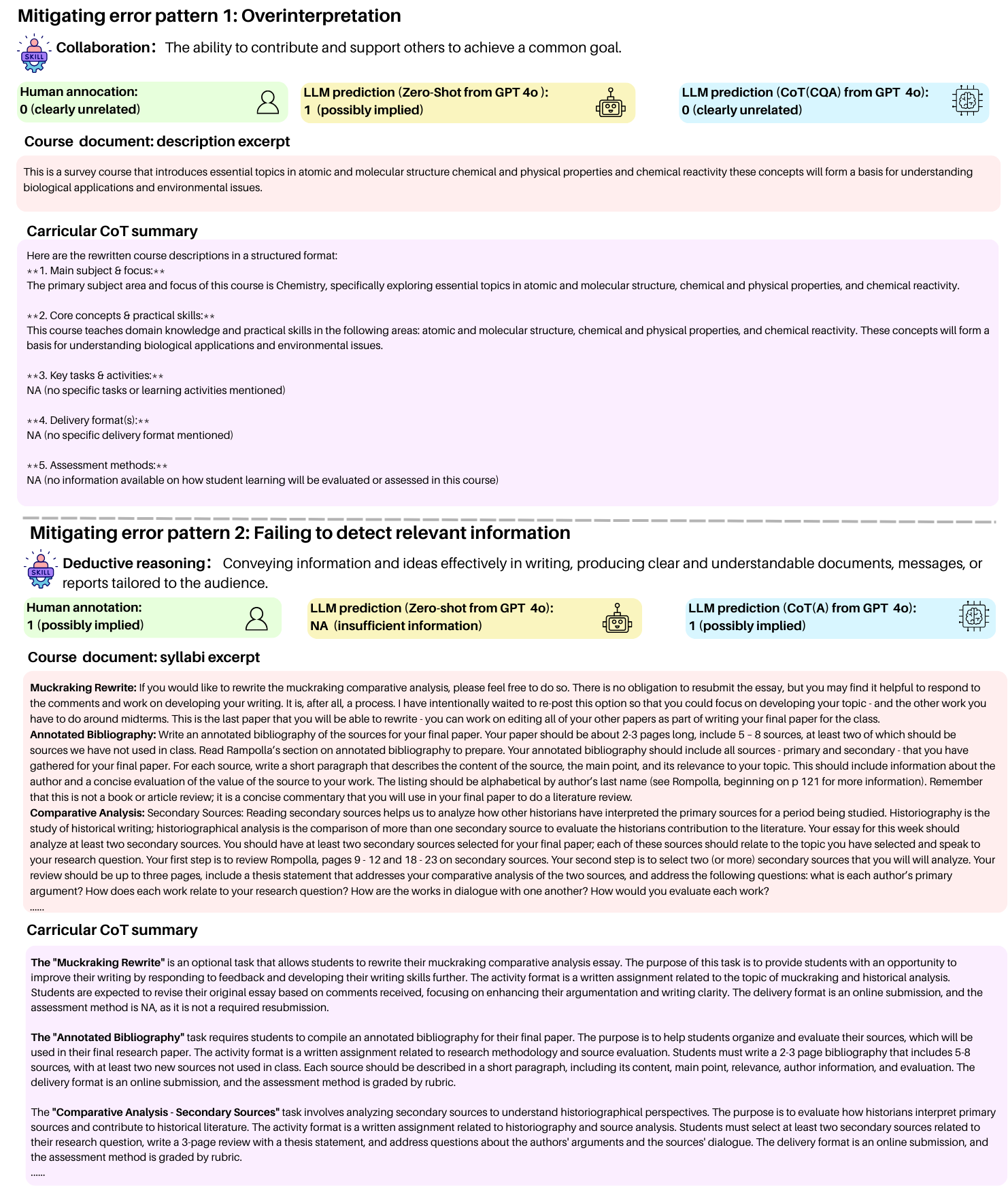

Chain-of-Thought Prompting is a technique used to improve the reasoning abilities of Large Language Models (LLMs) when applied to curricular analysis. This method involves structuring prompts to encourage the LLM to explicitly articulate its thought process, rather than directly providing an answer. By breaking down complex assessments into a series of intermediate reasoning steps, the LLM can more accurately identify and categorize curricular competencies. Validation studies demonstrate that this approach achieves over 70% agreement with human annotations when performing coarse-grained classifications of curriculum documents, indicating a high level of reliability in automated assessment.
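The idea of asking for intermediate reasoning steps before a final answer can be sketched as a small prompt builder. This is a minimal sketch, not the study's Curricular CoT prompt: the step wording, the `LABEL:` convention, and the function names `build_cot_prompt` and `extract_label` are all assumptions for illustration.

```python
from typing import Optional


def build_cot_prompt(document_text: str, competency: str, definition: str) -> str:
    """Assemble a prompt that asks for intermediate reasoning before a label."""
    # Hypothetical reasoning steps; the paper's actual steps may differ.
    steps = [
        "Summarize what learners are asked to do in the document.",
        f"Quote any passages that relate to {competency}.",
        "Explain whether those passages involve teaching, practice, "
        "or assessment of the competency.",
        "Give a final label on its own line as 'LABEL: present' or 'LABEL: absent'.",
    ]
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"Competency: {competency}\n"
        f"Definition: {definition}\n\n"
        f"Curriculum document:\n{document_text}\n\n"
        "Work through these steps in order:\n"
        f"{numbered}\n"
    )


def extract_label(response: str) -> Optional[str]:
    """Return the last 'LABEL: ...' value in a model response, if any."""
    for line in reversed(response.splitlines()):
        if line.strip().upper().startswith("LABEL:"):
            return line.split(":", 1)[1].strip().lower()
    return None


prompt = build_cot_prompt(
    "Students work in teams to design a survey.",
    "collaboration",
    "working effectively with others toward a shared goal",
)
print(prompt)
```

Separating the reasoning from a fixed-format final line keeps the label easy to parse while still exposing the model's justification for review.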

Rigorous Validation: The Cornerstone of Analytical Trust

Data quality directly impacts the reliability of any curriculum recommendations generated through analysis. Inaccurate data, encompassing errors in student performance metrics, demographic information, or learning resource classifications, introduces systematic biases and distorts the resulting insights. Incomplete data, such as missing student records or lacking details on prerequisite knowledge, limits the scope of analysis and can lead to recommendations that fail to address the needs of specific learners. Consequently, flawed data undermines the effectiveness of the entire system, potentially leading to inappropriate learning pathways and hindering student progress; therefore, robust data validation and cleaning processes are essential prerequisites for generating trustworthy and actionable recommendations.

Despite advances in Large Language Model (LLM) capabilities, human annotation continues to be a critical component of ensuring analytical accuracy. LLMs, while proficient at processing and generating text, require validation to mitigate potential errors or biases in their outputs. Calibration through human annotation establishes inter-rater reliability, with observed scores ranging from 0.841 to 0.94, demonstrating a high degree of consistency in evaluation and confirming the robustness of the analytical process when combined with human oversight.
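The text does not say which inter-rater statistic produced these scores. Assuming a chance-corrected measure such as Cohen's kappa for two annotators, the calculation can be sketched as follows; the labels in the example are invented for illustration.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Cohen's kappa for two raters labeling the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("Both raters must label the same non-empty set of items.")
    n = len(rater_a)
    # Observed agreement: share of items where both raters chose the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected agreement by chance, from each rater's label frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical label; agreement is trivially perfect.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Invented annotations for four course documents.
annotator_1 = ["present", "present", "absent", "absent"]
annotator_2 = ["present", "absent", "absent", "absent"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(round(kappa, 3))  # 0.5
```

Here the raters agree on 3 of 4 items (0.75), chance agreement is 0.5, and kappa is (0.75 - 0.5) / (1 - 0.5) = 0.5.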

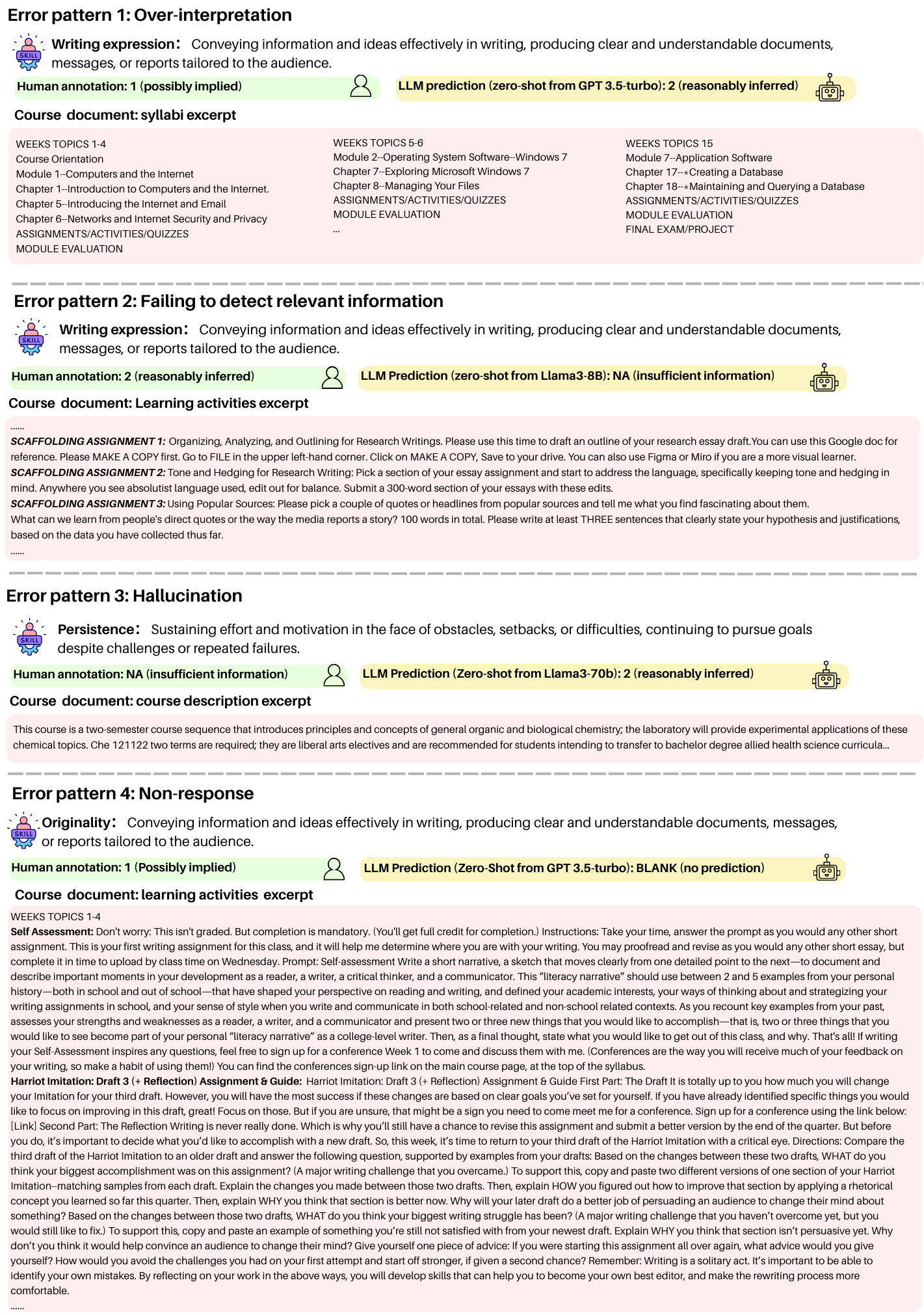

Zero-shot learning leverages the pre-trained knowledge within Large Language Models (LLMs) to perform tasks or generalize to contexts not explicitly encountered during their training phase, eliminating the need for task-specific labeled datasets. This capability rests on the LLM’s ability to understand and apply learned patterns to novel inputs, but performance is not guaranteed. Consequently, rigorous evaluation, utilizing established metrics and ideally involving human review, is essential to identify and rectify inaccuracies or biases present in the LLM’s zero-shot outputs, and to refine prompts or model parameters for improved generalization and reliability.
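One simple way to evaluate zero-shot outputs against human review is to compute raw agreement together with a rate of over-detection, where the model marks a competency as present but the human annotator did not. This is a sketch under assumed binary labels; the function names and the sample data are hypothetical.

```python
from typing import Sequence


def agreement_rate(model_labels: Sequence[str], human_labels: Sequence[str]) -> float:
    """Share of items where the model label equals the human label."""
    if len(model_labels) != len(human_labels) or len(model_labels) == 0:
        raise ValueError("Label lists must be non-empty and the same length.")
    matches = sum(m == h for m, h in zip(model_labels, human_labels))
    return matches / len(model_labels)


def over_detection_rate(
    model_labels: Sequence[str],
    human_labels: Sequence[str],
    positive: str = "present",
) -> float:
    """Among items humans marked negative, the share the model marked positive."""
    negatives = [m for m, h in zip(model_labels, human_labels) if h != positive]
    if not negatives:
        return 0.0
    return sum(m == positive for m in negatives) / len(negatives)


# Invented labels for five documents.
model = ["present", "present", "absent", "present", "absent"]
human = ["present", "absent", "absent", "present", "absent"]
agreement = agreement_rate(model, human)
over_detection = over_detection_rate(model, human)
print(agreement, over_detection)
```

Reporting the two numbers separately helps distinguish a model that is merely noisy from one that systematically over-assigns competencies.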

Mapping to Global Standards: A Logical Extension of Analytical Insight

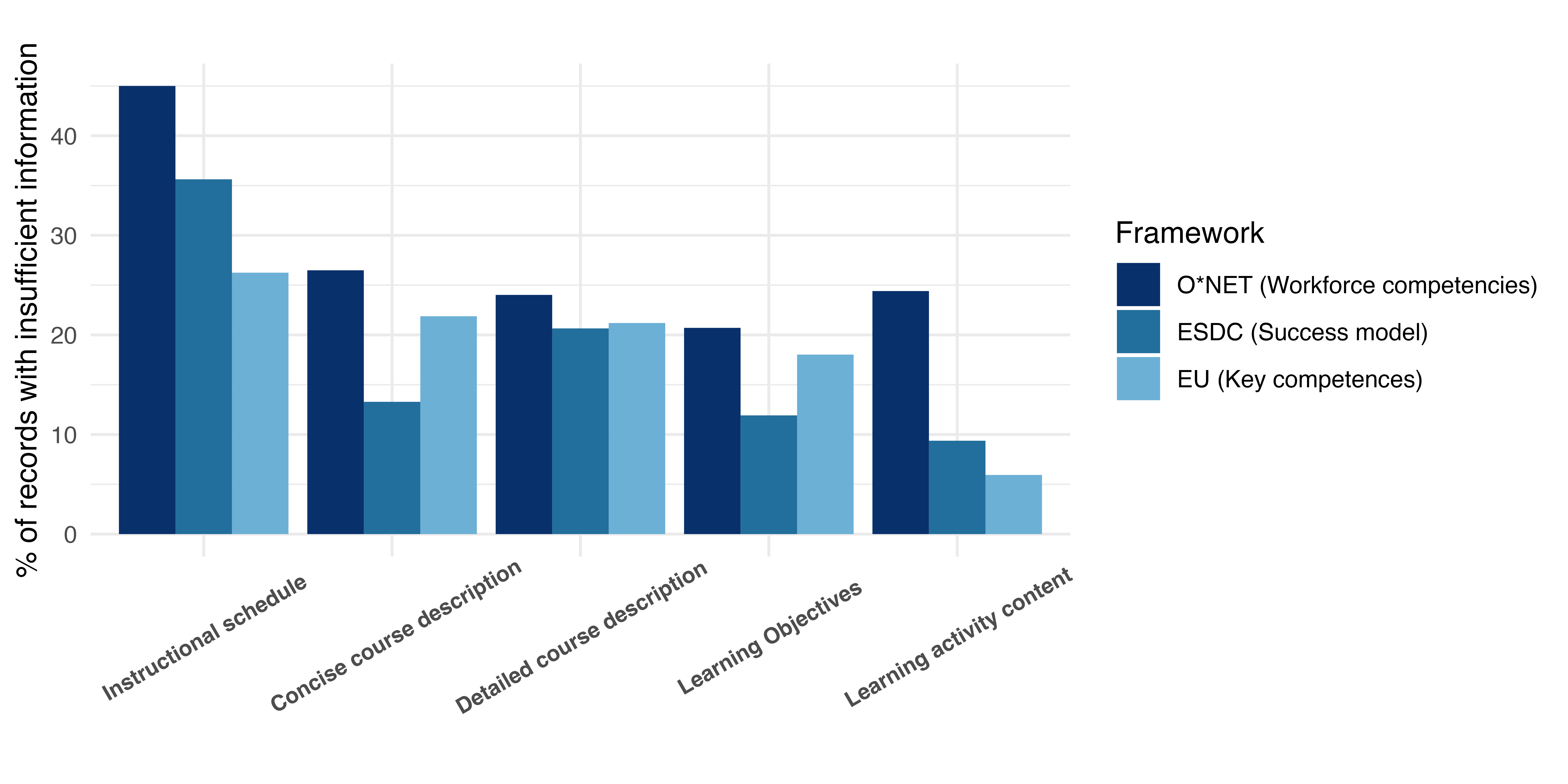

Systematic curriculum mapping, achieved through framework alignment, provides educators with a robust method for analyzing and organizing learning objectives in relation to established global competency standards. Utilizing frameworks such as the EU Key Competences, the US O*NET system (which details required skills for various occupations), and the ESDC Success Model, educators can dissect existing curricula and pinpoint the extent to which they address crucial skills like critical thinking, problem-solving, and digital literacy. This process isn’t simply about checking boxes; it’s a detailed examination of how each learning activity contributes to broader competency development, allowing for a precise understanding of strengths and, crucially, areas where the curriculum may need to be adapted or supplemented to better prepare students for future success in a rapidly evolving world.

A systematic mapping of curriculum to established competency frameworks frequently uncovers areas where essential skill development is unintentionally overlooked. This identification of gaps isn’t merely diagnostic; it actively facilitates targeted interventions designed to bolster student preparedness. Educators can then strategically refine course content, introduce new learning activities, or emphasize particular skill sets to address these deficiencies. This process moves beyond simply covering material to actively cultivating crucial competencies, ensuring students are not only knowledgeable but also equipped with the transferable skills demanded by modern educational and professional landscapes. The result is a more responsive and effective curriculum, directly addressing evolving needs and maximizing student potential.
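The gap identification described above can be sketched as a coverage count across courses. The course codes and detected competencies below are invented, and the four-item framework simply reuses the competencies named earlier in this article rather than any official framework's list.

```python
from typing import Dict, List, Set


def coverage_counts(
    framework: List[str], course_findings: Dict[str, Set[str]]
) -> Dict[str, int]:
    """Count how many courses address each competency in the framework."""
    counts = {competency: 0 for competency in framework}
    for detected in course_findings.values():
        for competency in detected:
            # Ignore detections that fall outside the chosen framework.
            if competency in counts:
                counts[competency] += 1
    return counts


# Illustrative framework: the four competencies named earlier in the article.
framework = ["critical thinking", "creativity", "collaboration", "communication"]

# Invented per-course detections (e.g. from a model or a human reviewer).
course_findings = {
    "BIO101": {"critical thinking", "communication"},
    "ART210": {"creativity", "communication"},
}

counts = coverage_counts(framework, course_findings)
gaps = [competency for competency, n in counts.items() if n == 0]
print(counts)
print(gaps)
```

In this toy program, collaboration is addressed by no course, which is the kind of gap that would prompt a targeted revision.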

Educational institutions increasingly recognize the value of aligning curricula with globally recognized standards to foster student success. This strategic approach moves beyond localized learning objectives, ensuring students develop competencies valued in diverse international contexts. By benchmarking against frameworks like those established by the World Economic Forum or UNESCO, institutions can proactively address skill gaps and cultivate adaptability – crucial assets in a rapidly evolving job market. The result is not merely the acquisition of knowledge, but the development of well-rounded individuals equipped with the critical thinking, problem-solving, and collaborative abilities needed to thrive in a globalized world, ultimately improving both individual outcomes and the overall effectiveness of educational programs.

The study’s exploration of LLM performance in evaluating curricular alignment with 21st-century competencies echoes a sentiment held by Carl Friedrich Gauss: “If other people would think differently about things, they would realize that there is no such thing as absolute truth.” This resonates with the research findings, which demonstrate that LLM assessments, while powerful, are not definitive measures of competency coverage. The efficacy of these models is contingent upon the precision of prompt engineering, a carefully constructed methodology akin to defining axioms in a mathematical proof. Just as Gauss sought precision in mathematical principles, this research highlights the necessity of rigorous evaluation strategies to validate LLM-driven curricular analytics and to account for the inherent limitations of these tools.

What’s Next?

The application of large language models to curricular analytics, as demonstrated, reveals not a triumph of artificial intelligence, but a stark illumination of the challenges inherent in formalizing pedagogical objectives. The observed performance, while improvable through prompt engineering – a process disturbingly akin to coaxing a solution rather than deriving it – remains contingent on the quality of the training data and the precision of the prompts themselves. A model can identify keywords suggestive of ‘critical thinking’, but proving genuine engagement with the concept (demonstrating its application within the curriculum) demands a level of semantic understanding currently beyond reach. The focus must shift from merely detecting competency mentions to verifying their substantive integration – a task demanding formal methods, not statistical correlations.

Future work should prioritize the development of formal ontologies for 21st-century competencies, establishing axioms that define not just what constitutes critical thinking, but how it manifests within specific disciplines. The current reliance on natural language as the sole input is problematic; a hybrid approach, incorporating structured data about learning outcomes and assessment methods, promises greater analytical rigor. Attempts to ‘teach’ an LLM pedagogy through examples are, ultimately, inductive approximations. A deductive framework, rooted in logical definitions, offers the only path towards a truly provable assessment of curricular alignment.

The question is not whether machines can simulate educational evaluation, but whether they can contribute to a more precise, mathematically grounded understanding of effective pedagogy. Until that threshold is crossed, the pursuit of AI-driven curricular analytics risks becoming an elaborate exercise in pattern recognition: a fascinating, yet ultimately superficial, endeavor.

Original article: https://arxiv.org/pdf/2601.10983.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-20 15:11