Author: Denis Avetisyan

Researchers introduce a new dataset and framework for improving autonomous vehicle prediction and planning in densely populated city environments.

The DeepUrban dataset provides realistic aerial imagery and scenarios for benchmarking trajectory prediction and collision avoidance systems.

Robust autonomous driving necessitates accurate trajectory prediction and planning, yet current benchmarks lack sufficient complexity in dense, interactive urban scenarios. To address this limitation, we introduce DeepUrban, a novel drone-captured dataset detailed in ‘DeepUrban: Interaction-Aware Trajectory Prediction and Planning for Automated Driving by Aerial Imagery’, which provides high-resolution 3D traffic data extracted from urban intersections. Our experiments demonstrate that incorporating DeepUrban alongside existing datasets like nuScenes significantly enhances prediction and planning accuracy, yielding improvements of up to 44.1% in Average Displacement Error (ADE) and 44.3% in Final Displacement Error (FDE). Will this richer data environment accelerate the development of truly robust and socially-aware autonomous navigation systems?

The Intricate Dance of Urban Prediction

The implementation of self-driving vehicles within bustling cityscapes introduces a formidable set of predictive difficulties stemming from the sheer density and intricacy of interactions. Unlike highway driving, urban environments are characterized by unpredictable pedestrian behavior, cyclists weaving through traffic, and frequent starts and stops, all compounded by occlusions from buildings and other vehicles. These conditions demand that autonomous systems not only perceive the immediate surroundings, but also anticipate the future actions of numerous agents simultaneously. Successfully navigating this complexity requires algorithms capable of modeling nuanced social behaviors, understanding implicit communication cues, and accounting for the high degree of uncertainty inherent in real-world urban settings, pushing the boundaries of current trajectory prediction capabilities.

Current trajectory prediction methods often falter when attempting to anticipate the movements of agents – pedestrians, cyclists, and other vehicles – over extended periods in urban settings. These systems typically rely on short-term observations and struggle to extrapolate future behavior accurately, particularly when faced with the nuanced and often unpredictable actions characteristic of city life. A core limitation lies in deciphering intent; simply tracking observed motion isn’t enough when an agent might change course based on unobservable factors like anticipating a traffic light or reacting to another pedestrian. This inability to reliably forecast long-term trajectories and accurately model underlying intentions poses a significant hurdle for autonomous vehicles, demanding more sophisticated predictive algorithms capable of navigating the complexities of urban autonomy and ensuring safe, efficient operation.

The successful integration of autonomous vehicles into cityscapes hinges on the capacity to accurately anticipate the movements of all actors – pedestrians, cyclists, and other vehicles – within a dynamic environment. Path planning algorithms, crucial for navigating these complex scenarios, are fundamentally reliant on predictive modeling; even minor inaccuracies in forecasting can lead to inefficient maneuvers or, critically, safety hazards. Unpredictable urban settings, characterized by occlusions, erratic human behavior, and constantly changing traffic patterns, demand a robust prediction capability that extends beyond short-term horizons. Consequently, advancements in trajectory forecasting aren’t merely about improving algorithmic performance, but rather about establishing a foundational element for truly safe and efficient autonomous navigation in the real world, allowing vehicles to proactively adapt to evolving situations and avoid potential collisions.

Decomposing Complexity: Scene-Aware Prediction

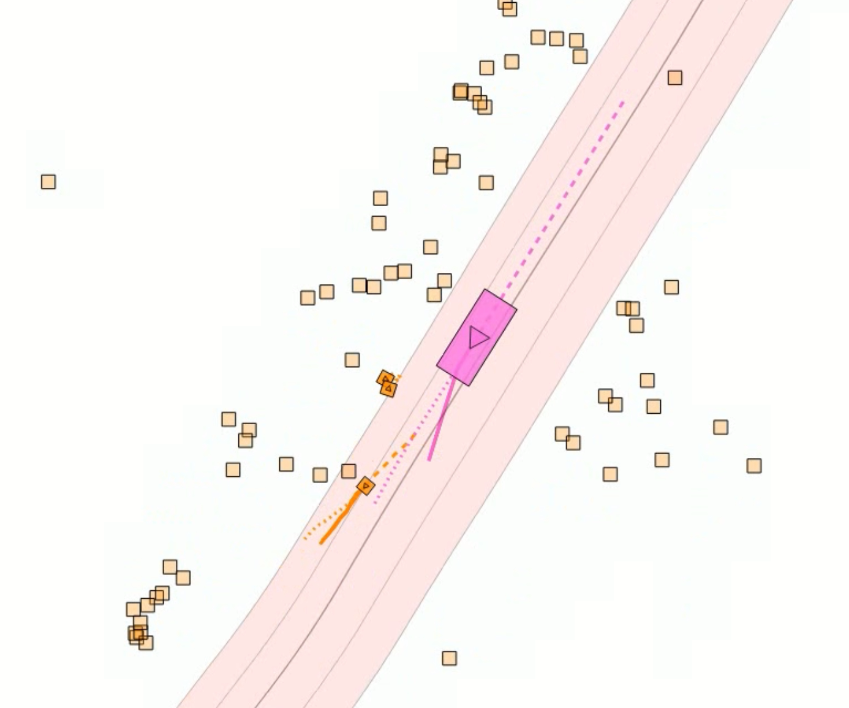

ScePT employs a scene prediction transformer to address the challenges of trajectory forecasting in complex urban environments by decomposing the scene into discrete, interacting agent groups. This decomposition is achieved through the transformer’s attention mechanism, which identifies relationships between agents and clusters them based on predicted interactions. Rather than treating all agents as independent entities, ScePT models the collective behavior of these groups, allowing for a more accurate prediction of individual trajectories based on the anticipated actions of nearby agents within the same group. This grouping strategy reduces computational complexity and enables the model to focus on the most relevant interactions for forecasting, particularly in dense and dynamic scenarios.

ScePT enhances trajectory prediction by explicitly modeling agent interactions within groups, moving beyond individual-centric forecasting. This approach recognizes that the movement of one agent is highly correlated with the movements of others in its immediate vicinity. By representing scenes as collections of these interactive groups, ScePT captures complex dependencies and reduces uncertainty in predictions. Quantitative results demonstrate that this group-focused methodology leads to improved accuracy, particularly for longer forecasting horizons, as the system effectively extrapolates coordinated behaviors rather than relying solely on individual agent histories. This is especially crucial in dense urban environments where collective maneuvers – such as pedestrian groups crossing intersections or vehicles merging into traffic – dominate scene dynamics.
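The grouping idea can be illustrated with a small sketch. Note the substitution: the text above describes groups identified from predicted interactions, whereas this example uses a plain distance threshold as a stand-in for "interacting". The function name `group_agents` and the `radius` parameter are hypothetical and do not come from ScePT.

```python
from math import hypot

def group_agents(positions, radius):
    """Partition agents into groups (connected components).

    Two agents land in the same group when a chain of pairwise
    distances <= radius links them. The distance test is only a
    proxy for interaction, assumed here for illustration.
    """
    parent = list(range(len(positions)))

    def find(i):
        # Walk up to the root, shortening the path along the way.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            (xi, yi), (xj, yj) = positions[i], positions[j]
            if hypot(xi - xj, yi - yj) <= radius:
                parent[find(i)] = find(j)  # merge the two groups

    groups = {}
    for i in range(len(positions)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())

# Two nearby pairs and one isolated agent.
positions = [(0.0, 0.0), (1.0, 0.0), (10.0, 10.0), (10.5, 10.0), (30.0, 0.0)]
groups = group_agents(positions, radius=2.0)
print(groups)
```

Once agents are partitioned this way, a predictor can reason about each group jointly and ignore pairs that are too far apart to matter, which is the source of the computational saving described above.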

ScePT employs a Conditional Variational Autoencoder (CVAE) as its primary trajectory generation module. The CVAE architecture allows ScePT to model the inherent uncertainty in future agent movement by learning a latent distribution over possible trajectories, conditioned on observed scene context and agent states. Specifically, an encoder network maps the input observation, including agent history and surrounding environment, to a probabilistic latent space. A decoder network then samples from this latent space and generates multiple plausible future trajectories. This probabilistic approach facilitates the creation of diverse predictions, enabling ScePT to move beyond single, deterministic forecasts and better represent the range of likely outcomes for each agent.
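The sample-then-decode step can be sketched in a few lines. This is a toy under loud assumptions: in a real CVAE the latent mean and spread come from a learned network conditioned on the scene, and the decoder is also learned. Here `mu` and `sigma` are passed in by hand, the latent is a single Gaussian scalar, and the "decoder" is a hand-written rule in which the latent shifts the agent's per-step sideways motion. The name `sample_futures` is hypothetical.

```python
import random

def sample_futures(last_pos, last_vel, mu, sigma, num_samples, horizon, seed=0):
    """Draw z ~ N(mu, sigma^2) and decode each draw into one trajectory.

    Toy decoder (an assumption, not a learned network): the agent keeps
    its last velocity, and z is added to the y-displacement each step.
    """
    rng = random.Random(seed)
    futures = []
    for _ in range(num_samples):
        z = rng.gauss(mu, sigma)      # one latent sample per future
        x, y = last_pos
        vx, vy = last_vel
        trajectory = []
        for _ in range(horizon):
            x += vx
            y += vy + z               # latent controls sideways drift
            trajectory.append((x, y))
        futures.append(trajectory)
    return futures

# Five plausible futures, four steps each, for an agent moving along +x.
futures = sample_futures((0.0, 0.0), (1.0, 0.0), mu=0.0, sigma=0.3,
                         num_samples=5, horizon=4)
for trajectory in futures:
    print(trajectory[-1])
```

Each latent draw yields a different but internally consistent trajectory, which is how a CVAE-style generator produces a set of diverse forecasts instead of a single deterministic one.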

DeepUrban: A Benchmark for Realistic Autonomy

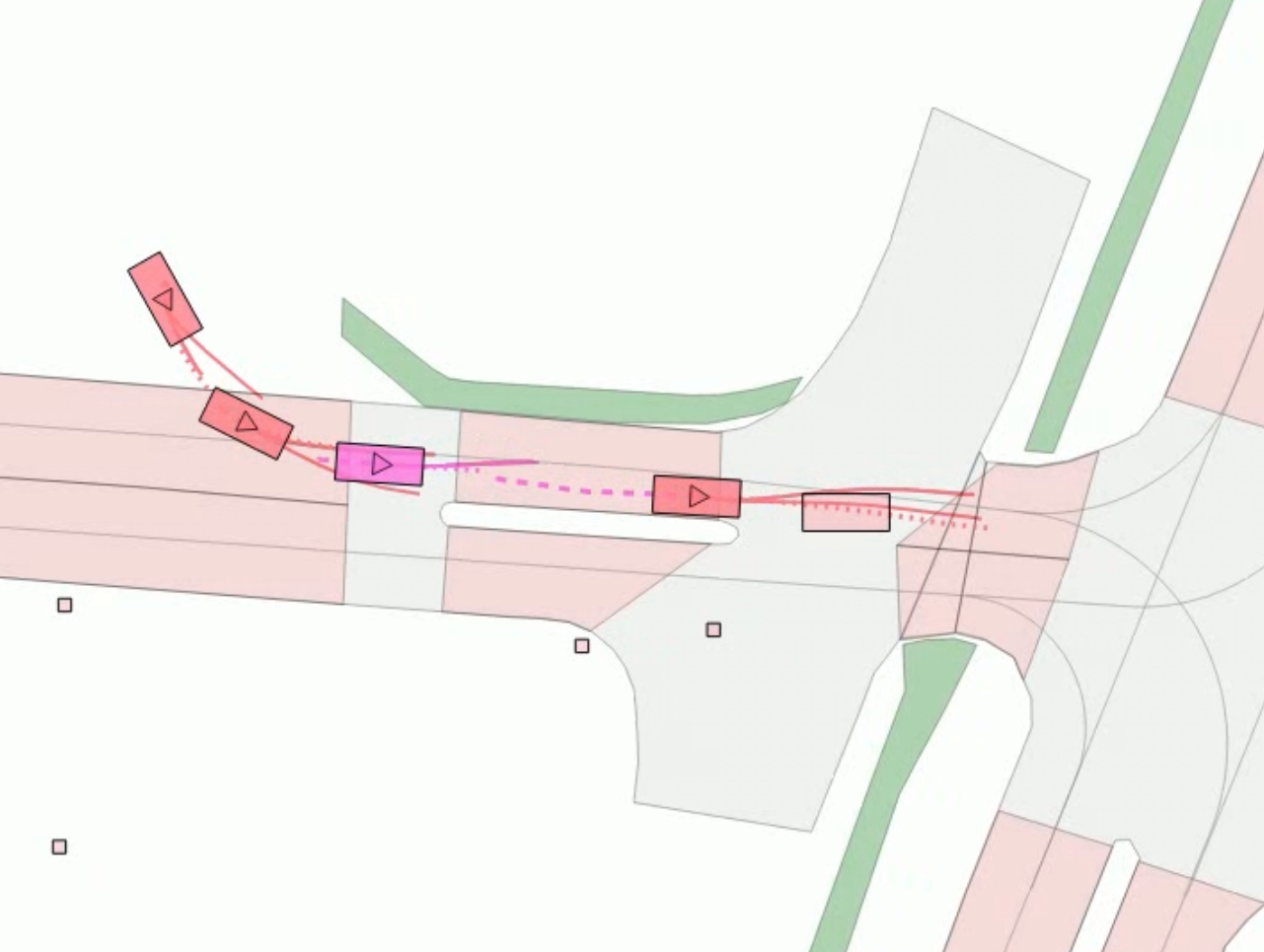

The DeepUrban dataset is designed to facilitate the development and evaluation of autonomous vehicle algorithms operating in complex urban environments. It comprises a large-scale collection of real-world driving scenarios captured through aerial drone surveys and integrated with high-definition OpenDRIVE maps. This combination yields a detailed and accurate representation of road layouts, traffic participants, and surrounding infrastructure. The dataset’s scale, significantly larger than existing benchmarks, and fidelity to real-world conditions enable robust testing of trajectory prediction and planning systems under challenging conditions, addressing limitations in prior datasets which often lack the complexity and scale needed for comprehensive algorithm validation.

The DeepUrban dataset’s environmental representation was constructed through a multi-stage data collection process. Aerial drone surveys captured high-resolution imagery and LiDAR data of urban scenes, providing a detailed point cloud of static and dynamic elements. This raw data was then meticulously integrated with precise OpenDRIVE map data, which provides semantic information about road layouts, lane markings, traffic signals, and other critical infrastructure. The combination of these data sources resulted in a comprehensive and geometrically accurate 3D reconstruction of the urban environment, enabling realistic simulation and evaluation of autonomous vehicle algorithms. This integration ensured accurate positioning of agents within the simulated world and provided a ground truth for assessing prediction and planning performance.

Evaluations using the DeepUrban dataset demonstrate significant improvements in trajectory prediction accuracy when compared to existing benchmarks. Specifically, expanding upon the nuScenes dataset with DeepUrban data yielded a 44.1% reduction in Average Displacement Error (ADE), a metric representing the average distance between predicted and ground truth trajectories. Final Displacement Error (FDE), measuring the distance to the final position, improved by 44.3%. Furthermore, the dataset’s realism contributed to a 49.6% improvement in the collision score, indicating a substantial increase in the ability of algorithms to accurately predict potential collisions involving vehicles.
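For readers unfamiliar with the two displacement metrics, the following sketch computes them for a single predicted trajectory. It assumes 2D points sampled at matching time steps; the function names and the sample values are illustrative and are not taken from the paper.

```python
from math import hypot

def displacement_errors(predicted, ground_truth):
    """Return (ADE, FDE) for one trajectory.

    ADE is the mean Euclidean distance over all time steps;
    FDE is the distance at the final time step only.
    """
    if not predicted or len(predicted) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and equally long")
    distances = [
        hypot(px - gx, py - gy)
        for (px, py), (gx, gy) in zip(predicted, ground_truth)
    ]
    return sum(distances) / len(distances), distances[-1]

def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return 100.0 * (baseline - improved) / baseline

# Made-up trajectories: per-step errors are 0, 3 and 4 metres.
predicted = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
ground_truth = [(1.0, 0.0), (2.0, 3.0), (3.0, 4.0)]
ade, fde = displacement_errors(predicted, ground_truth)
print(ade, fde)
```

A reported figure such as a 44.1% ADE reduction corresponds to `relative_reduction(baseline_ade, new_ade)`, where both values are averaged over the whole evaluation set.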

Harmonizing Foresight with Action: Integrated Planning

Recent advancements in autonomous navigation increasingly focus on unifying trajectory prediction with motion planning, moving beyond sequential approaches where one system operates independently of the other. Methods such as the Planning-Driving Model (PDM), Hoplan, and DiffStack exemplify this integration, directly incorporating predicted future states of dynamic agents into the planning process. These systems don’t simply react to current observations; instead, they proactively anticipate potential interactions and adjust planned trajectories to avoid collisions or optimize maneuvers. PDM, for instance, learns a joint distribution over future trajectories and driving behaviors, while Hoplan utilizes a differentiable planning framework to directly optimize for collision avoidance based on predicted trajectories. DiffStack further refines this process with a differentiable stack architecture, enabling efficient and accurate prediction-aware planning – all representing a shift towards more robust and intelligent autonomous systems capable of navigating complex environments.

Contemporary robotic systems increasingly prioritize safety through the proactive anticipation of potential collisions. Rather than reacting to immediate threats, advanced planning algorithms now incorporate trajectory prediction to forecast the future movements of dynamic obstacles – pedestrians, vehicles, or other robots. This foresight allows the system to adapt its planned path before a conflict arises, effectively circumventing dangerous situations. By continuously assessing predicted trajectories and recalculating optimal routes, these systems demonstrate a significant improvement in collision avoidance compared to traditional reactive approaches. The result is a more fluid and secure navigation experience, particularly crucial in complex and unpredictable environments where instantaneous responses may prove insufficient.
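A minimal sketch of prediction-aware plan selection follows. It assumes that the ego plan and each agent's predicted trajectory are lists of 2D points on the same time grid, and that safety is judged by a single clearance radius. The names `min_clearance`, `choose_plan`, and `safety_radius` are hypothetical and do not represent the API of PDM, Hoplan, or DiffStack.

```python
from math import hypot

def min_clearance(plan, predicted_agents):
    """Smallest ego-to-agent distance over matching time steps."""
    clearance = float("inf")
    for agent in predicted_agents:
        for (ex, ey), (ax, ay) in zip(plan, agent):
            clearance = min(clearance, hypot(ex - ax, ey - ay))
    return clearance

def choose_plan(candidates, predicted_agents, safety_radius):
    """Pick the candidate plan with the largest clearance.

    Plans whose clearance falls below safety_radius are rejected.
    Returns None when no candidate is safe.
    """
    scored = [(min_clearance(p, predicted_agents), p) for p in candidates]
    safe = [(c, p) for c, p in scored if c >= safety_radius]
    if not safe:
        return None
    return max(safe, key=lambda item: item[0])[1]

# Ego can go straight or swerve; a pedestrian is predicted to step
# into the straight path at the third time step.
straight = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
swerve = [(1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]
pedestrian = [(2.0, -2.0), (2.0, -1.0), (3.0, 0.0)]

chosen = choose_plan([straight, swerve], [pedestrian], safety_radius=1.5)
print(chosen)
```

The straight plan looks fine at the current instant but meets the pedestrian's predicted position later, so only a planner that consults the forecast rejects it ahead of time.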

The development and rigorous testing of algorithms that seamlessly blend trajectory prediction with motion planning rely heavily on high-fidelity simulation environments. Platforms like the CARLA simulator provide a vital virtual proving ground, allowing researchers to evaluate the performance of these integrated systems in a controlled and repeatable manner. These simulations generate realistic scenarios, including diverse road layouts, pedestrian behaviors, and weather conditions, that challenge the predictive and planning capabilities of autonomous agents. By subjecting algorithms to millions of simulated miles, developers can identify potential failure points, refine predictive models, and ultimately enhance the safety and reliability of autonomous vehicles before deployment in real-world conditions. This virtual validation process significantly accelerates development cycles and reduces the risks associated with on-road testing.

The pursuit of robust autonomous navigation, as detailed in this work concerning the DeepUrban dataset, echoes a fundamental design principle: beauty scales – clutter doesn’t. The dataset’s contribution isn’t merely an increase in quantity, but a refinement of quality, focusing on the intricacies of urban environments often overlooked. This focused approach, augmenting existing resources like nuScenes rather than reinventing the wheel, exemplifies elegant engineering. Geoffrey Hinton aptly stated, “The more data you give a neural network, the more it will generalize.” DeepUrban’s contribution lies in providing precisely the nuanced data needed to enhance generalization in complex urban scenarios, moving beyond simple obstacle avoidance towards truly predictive and safe autonomous driving. It’s editing, not rebuilding, that yields the most impactful results.

Beyond the Concrete: Charting a Course for Realistic Autonomy

The introduction of DeepUrban, and its conscientious augmentation of existing datasets, feels less like a destination and more like a necessary calibration. The field has, for some time, pursued increasing complexity in models while often operating on data divorced from the messy reality of truly dense urban life. The gains demonstrated through dataset fusion suggest a principle often forgotten: consistency is empathy. A system that ‘understands’ the nuances of interaction – the unspoken negotiations between pedestrians, cyclists, and vehicles – requires exposure to those very nuances, not merely a scaling up of geometric detail.

However, the problem isn’t simply one of data volume. Current benchmarks, even when enriched, largely assess reactive collision avoidance. True autonomy demands proactive anticipation: modeling not just what might happen, but why. This requires a shift towards generative models capable of simulating plausible future scenarios, informed by a deeper understanding of human intent and social context. The elegance of a solution will not be measured by its computational efficiency, but by its ability to fade into the background, a silent partner in the complex dance of city life.

Future work should prioritize the development of metrics that move beyond simple trajectory error, towards quantifying the ‘social acceptability’ of predicted and planned behaviors. Beauty does not distract, it guides attention. A truly intelligent system will not simply avoid collisions; it will navigate urban spaces with a grace that inspires trust and minimizes disruption, a subtle quality rarely captured by a loss function.

Original article: https://arxiv.org/pdf/2601.10554.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-17 21:27