Author: Denis Avetisyan

New research demonstrates a deep learning approach to enhance the visibility of analog gauges in challenging conditions like smoke and haze, paving the way for reliable automated inspection.

A synthetic data and deep learning methodology improves image quality and enables accurate gauge reading in degraded visual environments.

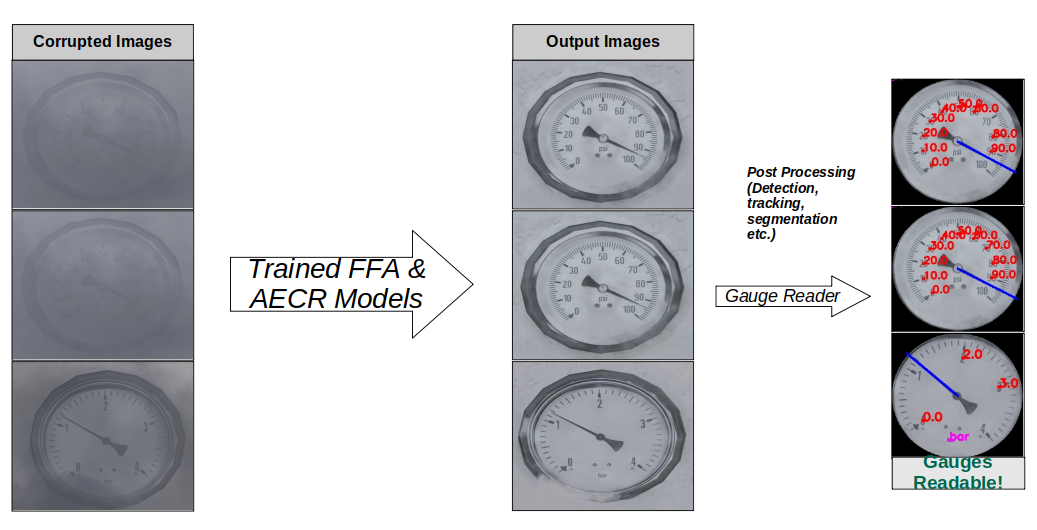

Maintaining clear visibility in obscured environments remains a persistent challenge for critical infrastructure monitoring and emergency response. The paper ‘Enhancing the quality of gauge images captured in smoke and haze scenes through deep learning’ addresses this by investigating deep learning architectures that automatically improve the readability of analog gauges under smoky and hazy conditions. Using a novel synthetic dataset generated with Unreal Engine, the study shows significant image-quality improvements with the FFA-Net and AECR-Net models, a step toward autonomous gauge reading. Could this approach lead to more robust and reliable remote monitoring systems in challenging visual environments?

The Inevitable Haze: Why Gauges Still Need Humans

Despite the increasing prevalence of digital instrumentation, analog gauges continue to serve as indispensable components within numerous industrial environments. These devices offer a direct, visually readable indication of critical parameters (pressure, temperature, flow rate, and more) without reliance on complex digital systems or power sources. Their robustness and simplicity make them particularly well suited to harsh conditions where digital sensors might fail, and their immediate feedback is crucial for real-time process control and safety monitoring. Even when automated vision systems are added for data logging, the gauges themselves remain the primary source of information, ensuring operational oversight in the event of technological disruption. This continued reliance underscores the practical advantages of analog technology in applications demanding unwavering reliability and immediate data accessibility.

The effectiveness of automated industrial inspection systems is heavily reliant on clear visual data, but particulate matter – encompassing haze, smoke, and dust – poses a significant challenge. These atmospheric conditions scatter and absorb light, reducing image contrast and obscuring critical details within the visual field. Consequently, algorithms designed for precise gauge reading or defect detection experience diminished accuracy, as the degraded imagery introduces noise and makes it difficult to reliably identify key features. This phenomenon isn’t merely a matter of reduced resolution; it fundamentally alters the information available to the system, often requiring substantial pre-processing or, in severe cases, rendering automated readings impossible and necessitating manual intervention.
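
The contrast loss described above is commonly modeled with the atmospheric scattering (Koschmieder) model, I = J·t + A·(1 − t), where J is the clear intensity, A is the airlight, and t = exp(−β·d) is the transmission for scattering coefficient β at distance d. The source does not say this model was used (its data were rendered in Unreal Engine); the sketch below, with hypothetical dial, needle, and airlight values, only illustrates why a dark needle on a bright dial fades as β grows.

```python
import math


def hazy_pixel(clear: float, airlight: float, beta: float, depth: float) -> float:
    """Apply the atmospheric scattering model to one intensity in [0, 1].

    I = J * t + A * (1 - t), with transmission t = exp(-beta * depth).
    """
    t = math.exp(-beta * depth)
    return clear * t + airlight * (1.0 - t)


def michelson_contrast(bright: float, dark: float) -> float:
    """Contrast between a bright region (dial face) and a dark one (needle)."""
    return (bright - dark) / (bright + dark)


# Hypothetical gauge: white dial face, black needle, grey airlight, 3 m away.
DIAL, NEEDLE, AIRLIGHT, DEPTH = 0.9, 0.1, 0.8, 3.0

if __name__ == "__main__":
    for beta in (0.0, 0.2, 0.5, 1.0):  # increasing obscurant density (1/m)
        face = hazy_pixel(DIAL, AIRLIGHT, beta, DEPTH)
        needle = hazy_pixel(NEEDLE, AIRLIGHT, beta, DEPTH)
        print(f"beta={beta:.1f}  contrast={michelson_contrast(face, needle):.3f}")
```

Both intensities drift toward the airlight as t shrinks, so the needle-to-dial contrast falls monotonically, which is exactly the cue that edge detectors and needle-angle estimators depend on.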

Automated gauge reading systems, vital for monitoring industrial processes, frequently encounter difficulties when faced with substantial visual interference. Conventional image processing techniques, such as edge detection and optical character recognition, rely on clear visual distinctions; dense haze or smoke, however, severely compromises image contrast and introduces noise, rendering these algorithms ineffective. This obscuration leads to inaccurate readings, false positives, and ultimately unreliable data, compromising the systems’ ability to provide timely alerts or support informed decision-making. Researchers are actively investigating robust algorithms and advanced imaging techniques to mitigate these effects, but current solutions often require significant computational resources or handle extremely poor visibility poorly, a critical obstacle to the widespread adoption of automated monitoring in demanding industrial environments.

The Synthetic Fix: When Reality Isn’t Cooperative

The performance of deep learning models depends heavily on the quantity and quality of their training data; however, acquiring sufficiently large and representative real-world datasets can be difficult because of cost, privacy concerns, or the rarity of specific events. When real-world data is limited or biased, synthetic data generation provides a viable alternative. By programmatically creating datasets with controlled variations and balanced representation, developers can overcome these limitations and train models that generalize more effectively. This approach allows datasets to be tailored to specific scenarios, enabling robust models to be trained even without comprehensive real-world examples. Furthermore, labels for synthetic data come directly from the generating process, eliminating costly and time-consuming manual annotation.

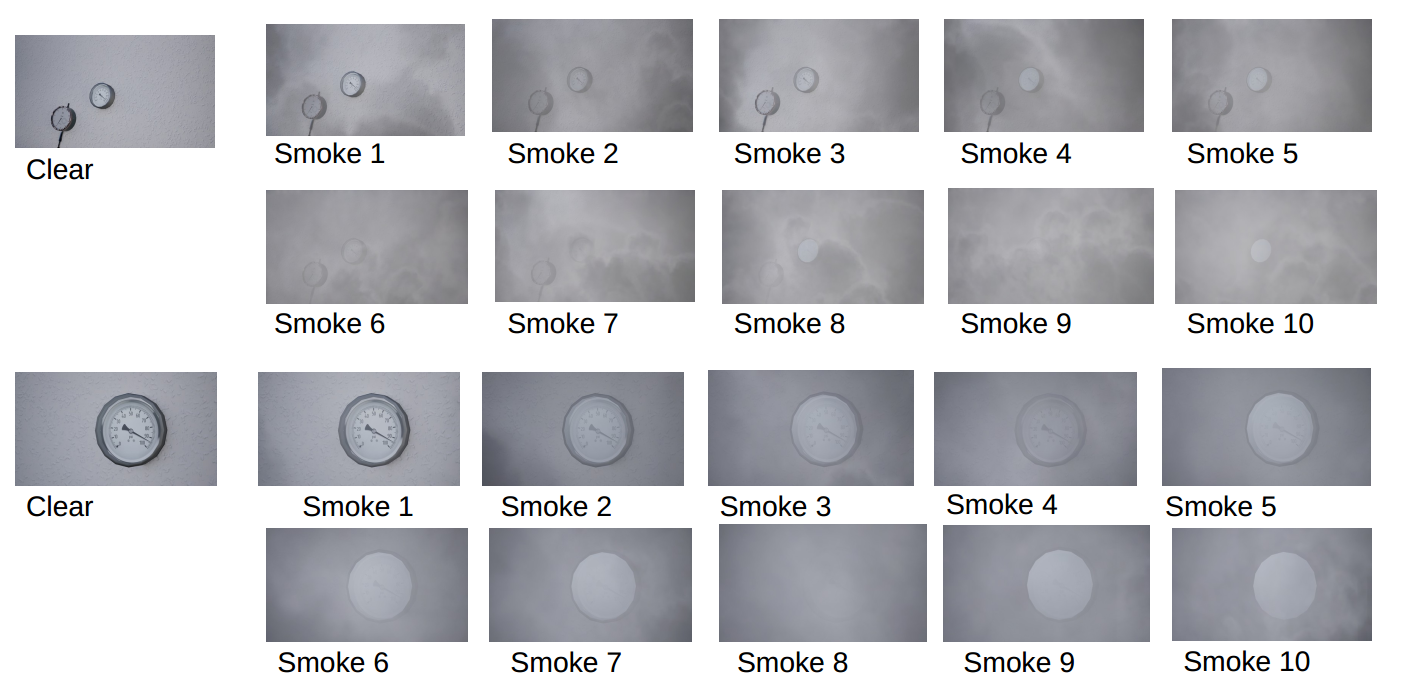

Game engines, specifically Unreal Engine, provide a platform for generating photorealistic synthetic data by simulating controlled environments. These engines allow precise manipulation of environmental variables, including the density and distribution of atmospheric effects like haze and smoke. This capability enables the creation of datasets with granular control over obscuration levels, offering a repeatable and scalable method for producing diverse training data that would be difficult or costly to acquire from real-world sources. Parameters such as particle density, color, and light scattering can be adjusted to simulate varying visibility conditions, facilitating the training of algorithms designed to operate effectively in degraded visual environments.
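
A controlled sweep of this kind can be described as a grid of per-image render settings, each paired with an identical scene that has the obscurant switched off to serve as the ground-truth target. The sketch below is a minimal illustration under stated assumptions: `RenderConfig`, its field names, and the value grids are hypothetical and are not Unreal Engine properties or the paper's actual settings.

```python
from dataclasses import dataclass, replace
from itertools import product


@dataclass(frozen=True)
class RenderConfig:
    """Hypothetical settings for one synthetic gauge image (illustrative names)."""
    obscurant: str                # "haze" or "smoke"
    particle_density: float       # relative density; 0.0 means clear air
    tint: tuple[int, int, int]    # RGB color of the particles, 0-255
    camera_angle_deg: float       # camera yaw relative to the gauge face


# Hypothetical value grids; a real sweep would be tuned to the target site.
OBSCURANTS = ("haze", "smoke")
DENSITIES = (0.25, 0.5, 0.75, 1.0)
TINTS = ((200, 200, 200), (90, 90, 90))
ANGLES = (-20.0, 0.0, 20.0)


def build_sweep() -> list[RenderConfig]:
    """Every combination of obscurant, density, tint, and viewing angle."""
    return [RenderConfig(o, d, t, a) for o, d, t, a in product(OBSCURANTS, DENSITIES, TINTS, ANGLES)]


def clear_reference(cfg: RenderConfig) -> RenderConfig:
    """The same scene with the obscurant removed: the label for supervised training."""
    return replace(cfg, particle_density=0.0)


if __name__ == "__main__":
    pairs = [(cfg, clear_reference(cfg)) for cfg in build_sweep()]
    print(len(pairs), "obscured/clear render pairs")
```

Because the clear target is just the same configuration with density set to zero, each obscured image gets a pixel-aligned label without manual annotation.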

The creation of large synthetic datasets of analog gauges with varying degrees of obscuration from haze and smoke directly addresses the need for model robustness in computer vision applications. These datasets are generated by systematically varying visual parameters, specifically the level and type of obscuration, across numerous simulated gauge images. The result is a highly diverse training set that exposes the model to a wide range of conditions that might otherwise be underrepresented or absent in a naturally collected dataset. The resulting model demonstrates improved performance and reliability on images of analog gauges in partially obstructed environments, reducing the risk of inaccurate readings or outright failures.

FFA-Net and AECR-Net: Algorithms Battling the Blur

FFA-Net utilizes a Convolutional Neural Network (CNN) architecture enhanced with feature fusion attention mechanisms. These mechanisms operate by selectively weighting and combining feature maps generated by the CNN, allowing the network to prioritize and amplify image features most relevant for dehazing. This process involves learning attention weights that indicate the importance of each feature, effectively suppressing noise and irrelevant details while emphasizing features indicative of scene depth and atmospheric light transmission. By adaptively focusing on the most informative features, FFA-Net improves the network’s ability to reconstruct clear images from hazy inputs.
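
FFA-Net's attention is usually described as two modules: channel attention, which rescales each feature channel by a weight derived from its global average, and pixel attention, which rescales each spatial position by a weight computed across channels so that unevenly hazy regions can be treated differently. The pure-Python sketch below illustrates those two ideas only; it assumes hand-set weights in place of learned ones and replaces the network's pairs of convolutions with single linear maps, so it is not the published implementation.

```python
import math

FeatureMap = list[list[list[float]]]  # C x H x W


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(fmap: FeatureMap, weights: list[list[float]], bias: list[float]) -> FeatureMap:
    """Scale each channel by sigmoid(W @ pooled + b), pooled = per-channel mean.

    One C x C linear map stands in for the two 1x1 convolutions of the real module.
    """
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]
    scores = [
        sigmoid(sum(w * p for w, p in zip(w_row, pooled)) + b)
        for w_row, b in zip(weights, bias)
    ]
    return [[[v * s for v in row] for row in ch] for ch, s in zip(fmap, scores)]


def pixel_attention(fmap: FeatureMap, weights: list[float], bias: float) -> FeatureMap:
    """Scale every (i, j) position by a mask computed from all channels there.

    `weights` acts as a 1x1 convolution from C channels down to one mask channel.
    """
    C, H, W = len(fmap), len(fmap[0]), len(fmap[0][0])
    mask = [
        [sigmoid(sum(weights[c] * fmap[c][i][j] for c in range(C)) + bias) for j in range(W)]
        for i in range(H)
    ]
    return [[[fmap[c][i][j] * mask[i][j] for j in range(W)] for i in range(H)] for c in range(C)]


if __name__ == "__main__":
    features = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 8.0]]]
    out = pixel_attention(channel_attention(features, [[0.5, 0.0], [0.0, 0.5]], [0.0, 0.0]), [0.0, 1.0], 0.0)
    print(out)
```

Applying channel attention before pixel attention mirrors the order usually described for FFA-Net's attention blocks; in training, both weight sets would be learned so that haze-relevant channels and positions receive larger scales.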

AECR-Net combines a compact autoencoder-like dehazing network with contrastive regularization to learn robust representations for reconstructing images degraded by haze or smoke. The autoencoder encodes the obscured input into a compressed representation that captures essential image features and decodes it into a restored image. The contrastive term operates in a feature space: it pulls the restored image toward its clear counterpart (the positive) and pushes it away from the obscured input (the negative). Together, these components let AECR-Net produce clearer reconstructions that keep the salient image features while suppressing the distortion introduced by the obscurant.
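
A contrastive regularizer of this kind can be written as a ratio: the distance from the restored output to the clear image (positive) divided by its distance to the hazy input (negative), summed over feature layers and added to an ordinary reconstruction loss. The sketch below assumes that ratio form with mean absolute (L1) distances; the feature vectors, layer weights, and `beta = 0.1` are hypothetical stand-ins for features that would come from a fixed pretrained network.

```python
def mean_abs_diff(a: list[float], b: list[float]) -> float:
    """Mean absolute difference (L1) between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def contrastive_regularization(restored, clear, hazy, layer_weights, eps=1e-7):
    """Sum over layers of w * d(clear, restored) / d(hazy, restored).

    Each of restored/clear/hazy is a list of per-layer feature vectors.
    Small values mean "close to the positive, far from the negative".
    """
    return sum(
        w * mean_abs_diff(c, r) / (mean_abs_diff(h, r) + eps)
        for w, r, c, h in zip(layer_weights, restored, clear, hazy)
    )


def total_loss(restored_px, clear_px, feats_r, feats_c, feats_h, layer_weights, beta=0.1):
    """Pixel-level L1 reconstruction loss plus beta-weighted contrastive term."""
    reconstruction = mean_abs_diff(restored_px, clear_px)
    return reconstruction + beta * contrastive_regularization(feats_r, feats_c, feats_h, layer_weights)


if __name__ == "__main__":
    clear, hazy = [[1.0, 1.0]], [[0.0, 0.0]]  # one hypothetical feature layer
    for name, restored in (("good", [[0.9, 0.9]]), ("poor", [[0.2, 0.2]])):
        print(name, contrastive_regularization(restored, clear, hazy, [1.0]))
```

The ratio rewards outputs that both approach the clean target and leave the hazy input behind, which a plain reconstruction loss alone does not explicitly encourage.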

Evaluations on the Haze and Smoke datasets demonstrate the efficacy of both FFA-Net and AECR-Net for image restoration. AECR-Net, for example, achieves a Peak Signal-to-Noise Ratio (PSNR) of 44 dB on the Haze dataset and 37 dB on the Smoke dataset, compared with only 12 dB (Haze) and 9 dB (Smoke) for the baseline under identical testing conditions. Because PSNR is logarithmic in the pixel error, gaps of this size indicate a large reduction in distortion and much higher fidelity to the original, unobscured image.
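
PSNR is defined as 10·log10(MAX² / MSE), so every 10 dB corresponds to a tenfold change in mean squared error. The sketch below computes PSNR from two intensity sequences and inverts the formula to translate the reported scores into error terms; the choice of MAX (1.0 for normalized images, 255 for 8-bit) is an assumption, since the source does not state the data range used.

```python
import math


def psnr(reference: list[float], test: list[float], max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two equal-length intensity sequences."""
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def mse_from_psnr(db: float, max_value: float = 1.0) -> float:
    """Invert PSNR: the mean squared error implied by a given score."""
    return max_value ** 2 / 10 ** (db / 10.0)


if __name__ == "__main__":
    for label, restored, baseline in (("Haze", 44, 12), ("Smoke", 37, 9)):
        ratio = mse_from_psnr(baseline) / mse_from_psnr(restored)
        rmse_8bit = math.sqrt(mse_from_psnr(restored, 255.0))
        print(f"{label}: MSE reduced {ratio:.0f}x, RMS error {rmse_8bit:.1f} gray levels (8-bit)")
```

Worked through, the 32 dB gap on Haze is a factor of 10^3.2 (about 1,585) in MSE, and the 28 dB gap on Smoke is a factor of about 631. On an 8-bit scale, 44 dB corresponds to an RMS error of roughly 1.6 gray levels versus roughly 64 at 12 dB.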

AECR-Net demonstrates significant improvement in structural similarity, as measured by the Structural Similarity Index (SSIM). On the Haze dataset, AECR-Net achieved an SSIM score of 0.98, compared to a baseline score of 0.55. Similarly, performance on the Smoke dataset yielded an SSIM of 0.96 for AECR-Net, a substantial increase from the baseline value of 0.55. These results indicate that AECR-Net effectively preserves the structural integrity of images even when dealing with significant obscuration, surpassing the performance of the compared baseline methods in maintaining perceived visual quality.
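
SSIM compares two images through a luminance term (means), a contrast term (variances), and a structure term (covariance). Reference implementations compute it over local windows (commonly an 11×11 Gaussian window) and average the results; the sketch below is a simplification that treats the whole image as one window, with the usual constants C1 = (0.01·L)² and C2 = (0.03·L)². The source does not say which SSIM implementation the authors used.

```python
def global_ssim(x: list[float], y: list[float], data_range: float = 1.0) -> float:
    """Single-window SSIM over two equal-length intensity sequences."""
    n = len(x)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n          # population variance of x
    vy = sum((b - my) ** 2 for b in y) / n          # population variance of y
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    luminance = (2 * mx * my + c1) / (mx ** 2 + my ** 2 + c1)
    contrast_structure = (2 * cov + c2) / (vx + vy + c2)
    return luminance * contrast_structure


if __name__ == "__main__":
    dial = [0.1, 0.9, 0.1, 0.9]            # hypothetical needle/face pattern
    hazy = [0.5 * v + 0.4 for v in dial]   # same pattern, contrast halved by haze
    print(global_ssim(dial, dial), global_ssim(dial, hazy))
```

Haze compresses the variance of the obscured image, so even when the pattern is perfectly correlated with the original, the contrast-structure term drops below 1; restoring that variance is what moves the score toward the reported 0.96 to 0.98.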

The Long View: Reliable Readings and the Inevitable Human Factor

Recent innovations in image processing and machine learning are driving the creation of increasingly dependable and precise gauge-reading systems. These systems perform better in demanding industrial environments characterized by poor lighting, glare, obstructions, and varying viewing angles. Techniques such as adaptive noise reduction and robust feature extraction mitigate the challenges that previously hindered automated readings. As a result, industries can rely on automated gauge readings with greater confidence across a wider range of environmental conditions, paving the way for real-time process control and predictive maintenance.

Automated gauge reading can reduce human error, a frequent source of inaccuracies in manual data collection. Fewer erroneous readings mean fewer that require verification or correction, which improves efficiency across industrial processes. Businesses can in turn save costs through reduced labor requirements, less material waste from faulty measurements, and higher overall productivity. Continuous, real-time monitoring of critical parameters also enables proactive adjustments that prevent costly downtime, fostering a more streamlined and reliable operation.

Ongoing research aims to make these image restoration techniques more robust and precise and to adapt them to a wider range of industrial applications. Investigations are underway into how well these methods handle image degradation from other sources, such as varying lighting conditions, complex backgrounds, and differences between sensor technologies. The ultimate goal is a versatile framework for automated visual inspection across many industrial sectors, extending beyond gauge reading to defect detection, component identification, and general quality control, and ultimately fostering more resilient and efficient automated systems.

The pursuit of pristine synthetic data, as detailed in this work regarding gauge image enhancement, feels predictably optimistic. The methodology aims to circumvent the limitations of real-world conditions – smoke and haze – by fabricating ideal scenarios for training deep learning models. It’s a commendable effort, but one steeped in the illusion of control. As Fei-Fei Li once stated, “AI is not about replacing humans; it’s about augmenting our capabilities.” This paper augments the capability of a machine to see through conditions that degrade visual data, yet it also reveals a core truth: any elegantly constructed synthetic environment will inevitably diverge from the messy reality of production. The system might perform flawlessly on simulated haze, but the moment it encounters an unexpected particulate distribution, the cracks will begin to show. It’s not a failure of the technology, simply a reminder that even the most sophisticated models are built on assumptions, and assumptions are, by their very nature, fragile.

The Road Ahead

The demonstrated capacity to remediate gauge imagery with models trained on synthetic data, while promising, simply shifts the problem. Production environments rarely cooperate with neatly labeled datasets. The real challenge won’t be achieving a high peak signal-to-noise ratio on a benchmark; it will be maintaining acceptable performance as sensor calibration drifts, lighting conditions become truly adversarial, and the inevitable edge cases (reflections, partial obstructions, gauges manufactured with slight variations) accumulate. This work represents a sophisticated pre-processing step, not a solution.

Future iterations will undoubtedly focus on model robustness and generalization: transfer learning from broader image datasets, perhaps, or adversarial training techniques. However, every architectural ‘innovation’ is just another layer of complexity to debug when the system inevitably fails in unexpected ways. A perfectly accurate gauge reader in a controlled laboratory is a comforting illusion.

Ultimately, the economic justification for this level of image enhancement must be considered. Is the marginal gain in automation accuracy worth the computational cost and ongoing maintenance? The history of applied machine learning suggests that ‘good enough’ often prevails, and that the pursuit of perfection is an expensive way to complicate everything. If the code looks perfect, no one has deployed it yet.

Original article: https://arxiv.org/pdf/2601.10537.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-17 16:23