Author: Denis Avetisyan

New research demonstrates that spiking neural networks, modeled on the human brain, can anticipate rapid price changes in high-frequency trading with improved accuracy.

Spiking Neural Networks trained with STDP and optimized via Bayesian Optimization offer a low-latency, energy-efficient approach to predicting price movements in financial time series.

Conventional financial models struggle to capture the millisecond-scale dynamics inherent in high-frequency trading environments, often missing critical price spikes that present both risk and opportunity. This is addressed in ‘Predicting Price Movements in High-Frequency Financial Data with Spiking Neural Networks’, which investigates the application of biologically inspired spiking neural networks (SNNs) for forecasting these transient price events. The study demonstrates that SNNs, optimized via Bayesian optimization with a novel objective function, consistently outperform supervised learning baselines in simulated trading scenarios, achieving significantly higher cumulative returns. Could this approach unlock more efficient and robust low-latency trading strategies leveraging the principles of neuromorphic computing?

The Inevitable Bottleneck: When Speed Meets Reality

Conventional algorithmic trading architectures, designed for millisecond-scale operations, increasingly face limitations in high-frequency markets where transactions occur in microseconds or even nanoseconds. These systems typically rely on centralized processing and sequential execution of orders, creating inherent bottlenecks as data travels between various components – from market data feeds to order execution venues. The sheer volume of incoming data, combined with the need for rapid analysis and decision-making, overwhelms traditional infrastructure. Furthermore, the distance between trading servers and exchanges introduces unavoidable delays due to the speed of light, impacting profitability in a highly competitive landscape. Consequently, a significant portion of trading latency stems not from algorithmic complexity, but from the physical and architectural constraints of existing systems, prompting a search for alternative paradigms capable of minimizing these delays and maximizing responsiveness.

Conventional time series analysis, often relying on stationary models and Fourier-based approaches, frequently falls short when applied to the volatile and non-stationary characteristics of financial markets. These methods struggle to effectively model the complex dependencies and rapid shifts in statistical properties – such as volatility clustering and fat tails – that define high-frequency data. Financial time series aren’t simply random walks; they exhibit multi-scale temporal dynamics, where patterns emerge and dissipate across varying time horizons, and traditional techniques often smooth over these crucial nuances. Consequently, predictions based on these analyses can be inaccurate, leading to missed trading opportunities or, more critically, substantial financial losses in competitive high-frequency trading environments. The inherent complexity demands tools capable of adapting to constantly evolving data distributions and recognizing subtle, short-lived patterns that are invisible to standard statistical approaches.

The relentless pursuit of speed and responsiveness in high-frequency trading has driven researchers to investigate unconventional computational approaches, specifically those inspired by biological systems. Traditional computer architectures, while powerful, often struggle to match the efficiency and adaptability demonstrated by the nervous system and other natural information processors. Bio-inspired computing, encompassing techniques like neuromorphic engineering and evolutionary algorithms, offers the potential to overcome latency bottlenecks by mimicking the parallel, event-driven processing of the brain. These paradigms prioritize minimizing delays and dynamically adjusting to rapidly changing market conditions, unlike rigid, pre-programmed systems. By leveraging principles of biological intelligence, the hope is to create trading algorithms capable of reacting to fleeting opportunities and maintaining a competitive edge in increasingly volatile financial landscapes.

Spiking Neural Networks: Mimicking the Efficiency of Nature

Spiking Neural Networks (SNNs) achieve reduced latency and energy consumption due to their event-driven, or asynchronous, computational model. Traditional Artificial Neural Networks (ANNs) perform computations for every input, regardless of signal change; SNNs, conversely, only process information when a neuron receives sufficient input to “fire” a spike. This sparse activation drastically reduces the number of operations required, directly impacting energy use. Furthermore, the temporal dynamics of spikes allow for event-based processing, enabling faster responses to relevant inputs and potentially eliminating the need for a global clock signal, a significant source of power dissipation in conventional systems. The efficiency gains are particularly pronounced in applications dealing with sparse or temporal data, such as sensor processing and robotics.

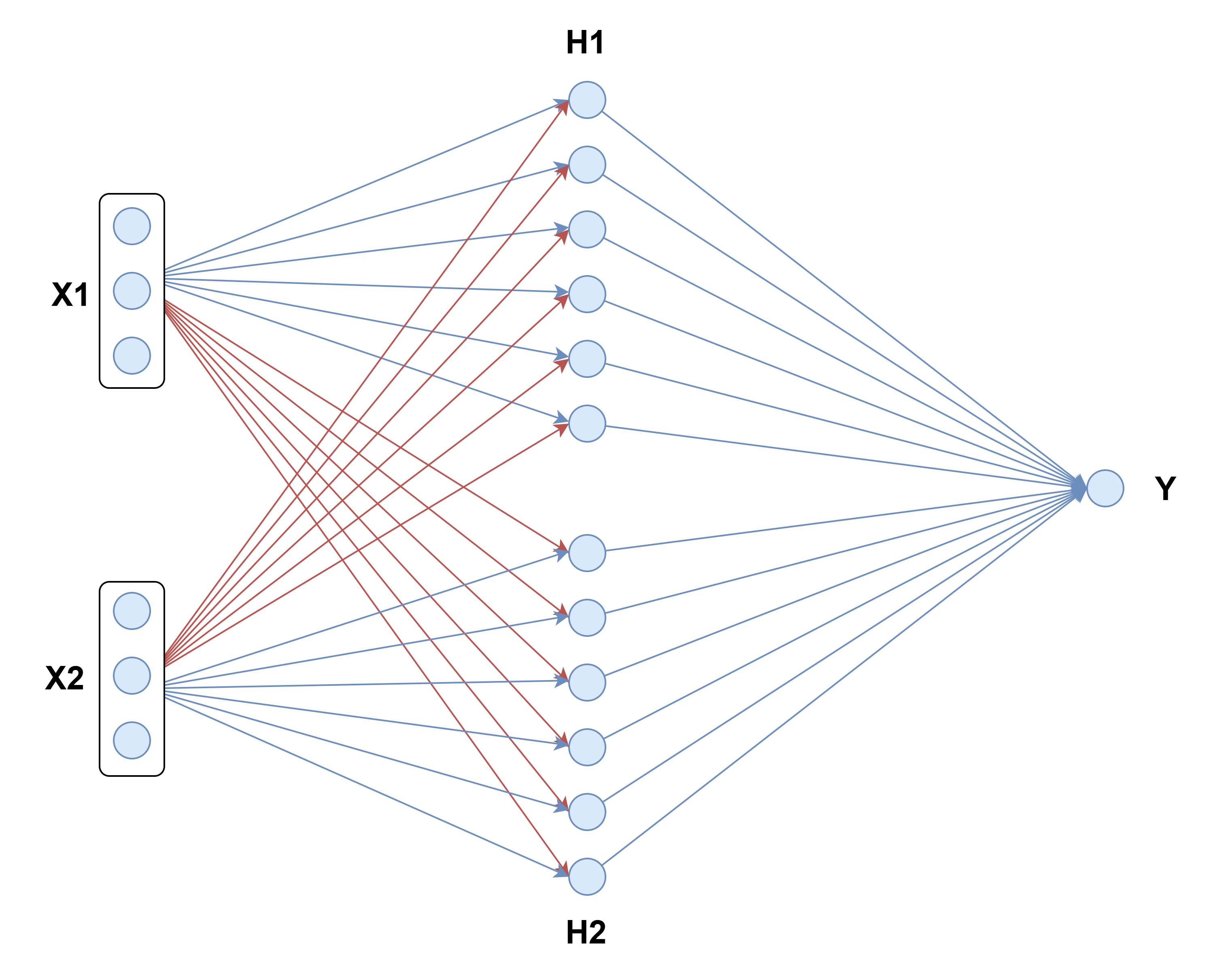

Spiking Neural Networks (SNNs) fundamentally differ from Artificial Neural Networks (ANNs) in their method of information transmission. While ANNs typically utilize continuous values for signal propagation, SNNs employ discrete, asynchronous events called “spikes.” These spikes represent the activation of a neuron and are transmitted to other neurons. The timing of these spikes, rather than simply their rate, can encode information. This event-driven, spike-based communication directly models the behavior of biological neurons, which communicate via action potentials, all-or-nothing electrical signals. The strength of a connection between neurons in an SNN is determined by the synaptic weights, which modulate the effect of incoming spikes on the post-synaptic neuron’s membrane potential, ultimately determining if that neuron will fire its own spike. This contrasts with ANNs, where each unit passes a weighted sum of its inputs through a continuous activation function in a single stateless step; the stateful, spike-based model is more biologically plausible and potentially more energy-efficient.

Traditional backpropagation, the standard learning algorithm for Artificial Neural Networks, is not directly applicable to Spiking Neural Networks due to the non-differentiability of spiking activations. The discontinuous nature of spikes – their all-or-nothing signaling – prevents the calculation of gradients necessary for weight updates in backpropagation. Consequently, research focuses on surrogate gradients, which approximate the derivative of the spiking function, or spike-timing-dependent plasticity (STDP), a biologically plausible local learning rule. Alternative approaches include conversion of trained Artificial Neural Networks to equivalent SNNs and the development of unsupervised learning algorithms specifically designed for temporal spike patterns. These novel techniques aim to address the challenges posed by the discrete event-driven dynamics of SNNs and enable effective weight adaptation for complex tasks.
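
To make the surrogate-gradient idea concrete, here is a minimal sketch in plain Python. It is an illustration of the general concept only, not the method used in the study (which, per the summary above, relies on STDP). The function names, the fast-sigmoid surrogate, and the `slope` value are assumptions chosen for the example.

```python
def spike(v, threshold=1.0):
    """Forward pass: all-or-nothing spike (a Heaviside step on the membrane potential)."""
    return 1.0 if v >= threshold else 0.0


def surrogate_grad(v, threshold=1.0, slope=5.0):
    """Backward-pass stand-in for the step function's derivative.

    Uses the derivative of a fast sigmoid x / (1 + slope * |x|), which is
    1 / (1 + slope * |x|) ** 2, centred on the firing threshold.
    `slope` is an illustrative value, not one taken from the paper.
    """
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2


if __name__ == "__main__":
    for v in (0.2, 0.9, 1.0, 1.5):
        print(f"v={v:.1f}  spike={spike(v):.0f}  surrogate_grad={surrogate_grad(v):.4f}")
```

The true derivative of the step is zero everywhere except at the threshold, where it is undefined. The surrogate is largest at the threshold and decays smoothly on either side, so weight updates can still flow through neurons that were close to firing.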

Harnessing Temporal Dynamics: Optimizing SNNs for Financial Time Series

Training Spiking Neural Networks (SNNs) presents challenges due to the non-differentiable nature of spiking activations, necessitating optimization strategies beyond traditional gradient descent. Bayesian Optimization (BO) addresses this by building a probabilistic surrogate model of the objective function (typically a performance metric on financial time series data) and using it to choose which network and learning-rule hyperparameters to evaluate next. This is coupled with Spike-Timing-Dependent Plasticity (STDP), a biologically plausible learning rule in which synaptic weights are adjusted based on the precise timing of pre- and post-synaptic spikes: a pre-synaptic spike shortly before a post-synaptic spike strengthens the connection, while the reverse order weakens it. STDP lets the network learn temporal patterns in time-varying financial data, while BO searches the configuration space of SNN architectures and training regimes using relatively few costly evaluations. The combined approach balances exploration and exploitation of the parameter landscape, with the aim of improving predictive accuracy and robustness.
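
The timing dependence of STDP can be sketched with the standard pair-based rule. This is a minimal illustration under stated assumptions: the exponential window, the amplitudes `a_plus` and `a_minus`, the time constants, and the weight bounds are hypothetical values, not those of the study. In a setup like the one described, quantities of this kind are the sort of thing an outer Bayesian optimization loop might tune.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012,
               tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair (times in ms).

    Pre before post (dt > 0) potentiates; post before pre (dt < 0) depresses.
    The magnitude decays exponentially with the time gap.
    """
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(weight, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Apply the update and clip the weight to an assumed [w_min, w_max] range."""
    return min(w_max, max(w_min, weight + stdp_delta(t_pre, t_post)))


if __name__ == "__main__":
    w = 0.5
    w = apply_stdp(w, t_pre=10.0, t_post=15.0)  # causal pair: weight increases
    print("after causal pair:", w)
    w = apply_stdp(w, t_pre=30.0, t_post=22.0)  # anti-causal pair: weight decreases
    print("after anti-causal pair:", w)
```

Because the rule uses only the spike times of the two neurons a synapse connects, it needs no global error signal, which is what makes it a local learning rule.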

Financial time series data must be transformed into a format compatible with Spiking Neural Networks (SNNs) through feature engineering and encoding. Initial feature engineering typically involves calculating the Volume Weighted Average Price (VWAP), the average price over an interval with each trade weighted by its traded volume, and then normalizing the result to a consistent scale. The normalized data is converted into spike trains using Poisson Encoding, a rate-coding method in which the spike rate corresponds to the magnitude of the data point: higher values produce more frequent spikes. Spike times are drawn from a Poisson process, which introduces stochasticity of the kind seen in biological neurons. Because magnitude is carried by the firing rate, the spiking representation retains the level of the input signal over each encoding window, which the SNN can then learn from.
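
The pipeline described above (VWAP, normalization, Poisson encoding) can be sketched as follows. This is a rough illustration rather than the study's preprocessing code: min-max scaling, the per-step Bernoulli approximation of a Poisson process, the `max_rate` parameter, and the sample trade data are all assumptions made for the example.

```python
import random


def vwap(prices, volumes):
    """Volume Weighted Average Price: sum(price * volume) / sum(volume)."""
    total_volume = sum(volumes)
    if total_volume <= 0:
        raise ValueError("total volume must be positive")
    return sum(p * v for p, v in zip(prices, volumes)) / total_volume


def min_max_normalise(values):
    """Scale values to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(x - lo) / (hi - lo) for x in values]


def poisson_encode(value, n_steps, max_rate=0.5, rng=None):
    """Encode a value in [0, 1] as a spike train of length n_steps.

    Each time step emits a spike with probability value * max_rate, the usual
    discrete-time approximation of a Poisson process.
    """
    rng = rng or random.Random()
    p_spike = value * max_rate
    return [1 if rng.random() < p_spike else 0 for _ in range(n_steps)]


if __name__ == "__main__":
    # Hypothetical trades grouped into three intervals: (prices, volumes).
    intervals = [
        ([100.0, 100.2], [10, 30]),
        ([100.4, 100.1], [20, 20]),
        ([100.9, 101.0], [5, 15]),
    ]
    series = [vwap(p, v) for p, v in intervals]
    rates = min_max_normalise(series)
    rng = random.Random(0)  # seeded so the example is repeatable
    for value, rate in zip(series, rates):
        train = poisson_encode(rate, n_steps=20, rng=rng)
        print(f"vwap={value:.3f}  rate={rate:.2f}  spikes={sum(train)}")
```

Intervals with a higher normalized VWAP receive a higher per-step spike probability, so on average they produce denser spike trains.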

Leaky Integrate-and-Fire (LIF) neurons, when implemented within polychronous spiking networks, provide a biologically plausible mechanism for modeling temporal dependencies in financial time series data. Polychronous networks facilitate the learning of precise temporal patterns – sequences of spikes occurring at specific time intervals – which are critical for identifying predictive relationships in sequential data. LIF neurons, through their membrane potential integration and leak mechanisms, allow for the accumulation of evidence over time, effectively functioning as temporal filters. The combination enables the network to detect and respond to patterns spanning multiple time steps, surpassing the limitations of traditional static models and improving predictive accuracy in dynamic financial environments. The network’s ability to learn and recognize these temporal relationships is dependent on the precise timing of spikes, enabling a more nuanced understanding of complex market behaviors.
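
The integrate, leak, and fire behaviour of a single LIF neuron can be sketched with a simple Euler update. This is a minimal single-neuron illustration, not a polychronous network and not the study's implementation. The time constant `tau`, the threshold, the reset value, and the input currents are assumed values.

```python
def simulate_lif(input_current, tau=10.0, v_rest=0.0, v_threshold=1.0,
                 v_reset=0.0, dt=1.0):
    """Simulate one leaky integrate-and-fire neuron.

    At each step the membrane potential decays toward v_rest (the leak) and is
    driven by the input (the integration). Crossing v_threshold emits a spike
    and resets the potential. Returns (spikes, potential_trace).
    """
    v = v_rest
    spikes, trace = [], []
    for current in input_current:
        # Euler step of dv/dt = (-(v - v_rest) + current) / tau
        v += (dt / tau) * (-(v - v_rest) + current)
        if v >= v_threshold:
            spikes.append(1)
            v = v_reset
        else:
            spikes.append(0)
        trace.append(v)
    return spikes, trace


if __name__ == "__main__":
    weak, _ = simulate_lif([0.5] * 50)    # settles below threshold: no spikes
    strong, _ = simulate_lif([2.0] * 50)  # repeatedly crosses threshold
    print("weak input spikes:  ", sum(weak))
    print("strong input spikes:", sum(strong))
```

With a weak constant input the leak balances the drive before the threshold is reached, so the neuron stays silent. A stronger input accumulates faster than it leaks away, which is the temporal-filter behaviour described above: only evidence that is sustained or strong enough over time produces a spike.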

Beyond Prediction: SNNs in Action – Momentum Trading and Risk Management

Spiking Neural Networks (SNNs) present a novel approach to momentum trading, demonstrating a capacity to not only enhance potential returns but also mitigate the risk of significant drawdown. Traditional momentum strategies rely on identifying assets exhibiting strong price trends, yet can be vulnerable to sudden reversals; SNNs, by processing information with temporal spikes, offer a more nuanced understanding of price dynamics. This allows for faster, more adaptive trading decisions, potentially capitalizing on short-lived opportunities and exiting positions before substantial losses occur. Simulations reveal that strategically implemented SNNs can achieve compelling financial results, exemplified by a reported cumulative return of 17.44% and a Sharpe Ratio of 19.71, highlighting the viability of this bio-inspired computational model within the complex landscape of financial markets.

Optimizing Spiking Neural Networks (SNNs) for financial prediction presents a unique challenge: maximizing predictive power while maintaining computational efficiency. Traditional metrics often prioritize accuracy alone, potentially leading to models with excessively high spiking rates and increased energy consumption. To address this, researchers employed Penalised Spike Accuracy (PSA) as an optimization metric. PSA doesn’t simply reward correct predictions; it penalizes models for generating an unnecessarily large number of spikes. This encourages the network to make accurate predictions using the fewest possible spikes, effectively balancing predictive performance with computational cost. The result is a more streamlined and efficient SNN, capable of processing financial data with reduced latency and energy requirements, which is crucial for real-time trading applications. This approach allows for a model that is not only precise but also practical for deployment in dynamic financial markets.
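
The summary does not give the formula for PSA, so the following is a hypothetical stand-in that captures only the stated idea: reward correctly predicted spikes and penalize surplus ones. The functional form, the `penalty` weight, and the sample spike trains are assumptions and should not be read as the paper's definition.

```python
def penalised_spike_accuracy(predicted, actual, penalty=0.5):
    """Hypothetical PSA-style score (NOT the paper's definition).

    score = (fraction of actual spikes that were predicted)
            - penalty * (surplus predicted spikes / number of time steps)
    """
    if len(predicted) != len(actual):
        raise ValueError("spike trains must have the same length")
    if not actual:
        raise ValueError("spike trains must be non-empty")
    hits = sum(1 for p, a in zip(predicted, actual) if p == 1 and a == 1)
    n_actual = sum(actual)
    n_predicted = sum(predicted)
    hit_rate = hits / n_actual if n_actual else 0.0
    surplus = max(0, n_predicted - n_actual) / len(actual)
    return hit_rate - penalty * surplus


if __name__ == "__main__":
    actual = [0, 1, 0, 0, 1, 0, 0, 0]
    sparse = [0, 1, 0, 0, 1, 0, 0, 0]  # catches both events with two spikes
    noisy = [1, 1, 1, 1, 1, 1, 1, 1]   # catches both events by firing constantly
    print("sparse:", penalised_spike_accuracy(sparse, actual))
    print("noisy: ", penalised_spike_accuracy(noisy, actual))
```

Both predictions catch every true event, but the constantly firing one scores lower because of its surplus spikes. A metric of this general shape gives an optimizer a reason to prefer sparse, selective networks.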

In simulated trading, the evaluation supports the potential of Spiking Neural Networks (SNNs) within financial trading strategies. Specifically, Model 2, optimized through Penalised Spike Accuracy (PSA), delivered a cumulative return of 17.44% alongside a Sharpe Ratio of 19.71 (a measure of risk-adjusted return). Beyond overall profitability, the model reported a 53.49% Win Rate, a Profit Factor of 1.22 (gross profits were 1.22 times gross losses), and a positive Expectancy of $9.18 \times 10^{-6}$ per trade. The model also maintained a controlled risk profile, with a Maximum Drawdown of only 2.69%, suggesting resilience during adverse periods in the simulation and supporting further investigation of SNNs for real-world financial applications.
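
For readers unfamiliar with these metrics, the sketch below computes them from a list of per-trade returns using common textbook definitions. The study's exact conventions (for example, how the Sharpe Ratio is annualised or how returns are compounded) are not stated in this summary, so the definitions and the sample returns here are assumptions.

```python
import math
import statistics


def trade_metrics(returns):
    """Summarise per-trade fractional returns (0.01 means +1%)."""
    if not returns:
        raise ValueError("at least one trade is required")
    wins = [r for r in returns if r > 0]
    losses = [r for r in returns if r < 0]
    gross_profit = sum(wins)
    gross_loss = -sum(losses)

    # Compound the trades into an equity curve and track the worst
    # peak-to-trough decline along the way.
    equity, peak, max_drawdown = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1.0 + r
        peak = max(peak, equity)
        max_drawdown = max(max_drawdown, (peak - equity) / peak)

    mean_return = sum(returns) / len(returns)
    spread = statistics.stdev(returns) if len(returns) > 1 else 0.0
    return {
        "win_rate": len(wins) / len(returns),
        "profit_factor": gross_profit / gross_loss if gross_loss > 0 else math.inf,
        "expectancy": mean_return,  # average return per trade
        "cumulative_return": equity - 1.0,
        "max_drawdown": max_drawdown,
        # Per-trade Sharpe with a zero risk-free rate, not annualised.
        "sharpe_per_trade": mean_return / spread if spread > 0 else math.nan,
    }


if __name__ == "__main__":
    sample = [0.02, -0.01, 0.03, -0.02]  # made-up returns for illustration
    for name, value in trade_metrics(sample).items():
        print(f"{name}: {value:.6f}")
```

A Profit Factor above 1 and a positive Expectancy both indicate that winning trades outweigh losing ones in aggregate. Maximum Drawdown captures the worst decline from a running peak, which a single average cannot show.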

The pursuit of predictive accuracy in financial markets mirrors a constant negotiation with inherent instability. This study, detailing the application of Spiking Neural Networks to high-frequency trading, observes that even the most sophisticated architectures are not solutions, but rather temporary bulwarks against the inevitable tide of chaos. As Blaise Pascal noted, “All of humanity’s problems stem from man’s inability to sit quietly in a room alone.” The system detailed herein, leveraging STDP and Bayesian optimization, doesn’t prevent price spikes; it anticipates them, offering a fleeting moment of order before the next outage. It’s a refinement of response, not a denial of entropy, and a testament to the fact that order is merely cache between two outages.

What Lies Ahead?

The pursuit of predictive accuracy in financial markets, even through the biomimicry of spiking neural networks, merely reframes an ancient dependency. This work demonstrates a capacity to anticipate localized volatility, but anticipates no escape from systemic risk. The system, even when modeled on the brain, remains a system. Each refined algorithm, each marginal gain in prediction, tightens the network of financial instruments, increasing the scope and velocity of potential failure.

Future efforts will inevitably focus on scaling these models, embedding them deeper within automated trading infrastructure. This is not progress, but entrenchment. The low-latency advantage offered by neuromorphic computing will be met by equally rapid competitive responses, a relentless escalation of speed serving only to amplify the fragility of the whole. The question isn’t whether these networks can predict, but whether prediction, increasingly divorced from fundamental value, simply accelerates the inevitable cascade.

The optimization of STDP learning rules, while promising, will always be constrained by the noise inherent in the data itself. To chase perfect calibration is to mistake a shadow for substance. The true challenge lies not in building more sophisticated predictors, but in accepting the inherent unpredictability of complex systems and designing for resilience: a capacity for graceful degradation, not flawless foresight. The split of the signal only magnifies the noise.

Original article: https://arxiv.org/pdf/2512.05868.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-12-08 06:45