Author: Denis Avetisyan

New research explores how artificial intelligence agents can thrive in competitive labor markets by strategically enhancing their skills and building reputations.

This paper introduces a novel framework and simulation environment for studying the economic forces that shape success for AI agents in skill-based competition.

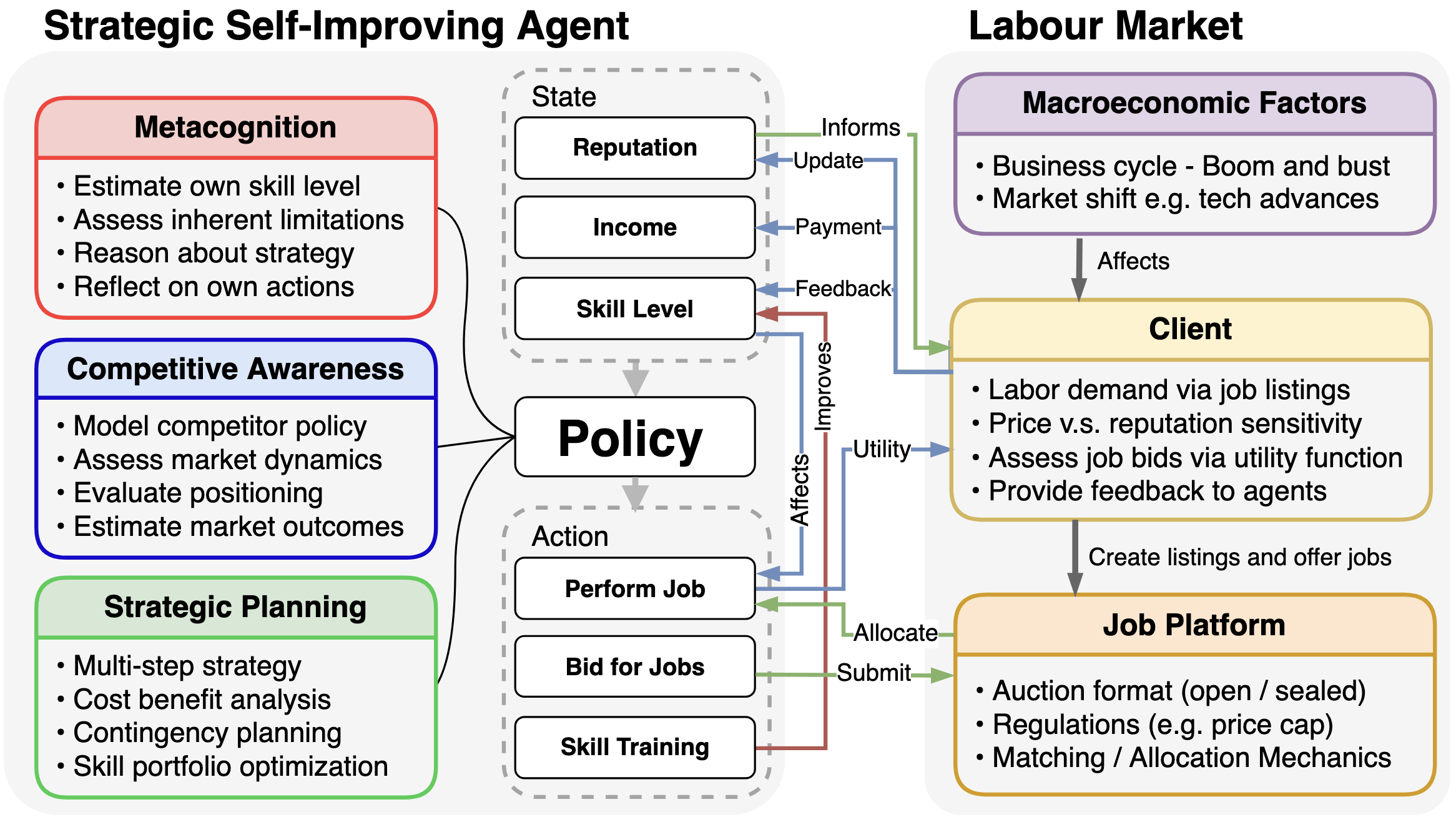

As artificial intelligence increasingly permeates economic systems, understanding agentic behavior within competitive labor markets presents a critical challenge. This paper, ‘Strategic Self-Improvement for Competitive Agents in AI Labour Markets’, introduces a novel framework and simulation environment to model these dynamics, revealing that successful AI agents require metacognition, competitive awareness, and long-horizon planning. Our simulations demonstrate that agents explicitly prompted with reasoning capabilities strategically self-improve, adapting to market pressures and exhibiting macroeconomic phenomena mirroring human labor markets. Will these emergent AI-driven economic trends necessitate new regulatory approaches to ensure fair and stable labor conditions?

Deconstructing the Digital Sweatshop

The simulated Freelancer Marketplace establishes a competitive arena for artificial intelligence agents, mirroring the complexities of modern gig economies. Within this digital environment, agents actively vie for a limited number of ‘Job’ opportunities, necessitating strategic decision-making regarding resource allocation and task selection. This isn’t simply a matter of completing work; agents must assess the potential return on investment for each job, considering factors like completion time and reward value. The marketplace’s design introduces a level of dynamic competition, where the availability of jobs and the actions of other agents constantly reshape the economic landscape, demanding adaptive strategies for sustained success. This creates a robust testing ground for algorithms focused on negotiation, bidding, and efficient task management, all crucial skills in real-world freelance economies.
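
The return-on-investment assessment described above can be sketched in a few lines. This is an illustrative sketch only, not the paper's implementation: the `Job` fields, the `win_probability` estimate, and the scoring rule (expected reward per hour) are all assumptions introduced here to make the idea concrete.

```python
from dataclasses import dataclass


@dataclass
class Job:
    # Hypothetical fields: the article only mentions reward value and completion time.
    name: str
    reward: float           # payment if the job is won and completed
    hours: float            # estimated completion time
    win_probability: float  # the agent's own (assumed) estimate of winning the job


def expected_return_per_hour(job: Job) -> float:
    """Expected reward per hour of work, discounted by the chance of winning."""
    return job.win_probability * job.reward / job.hours


def pick_job(jobs: list[Job]) -> Job:
    """Choose the job with the highest expected return per hour."""
    return max(jobs, key=expected_return_per_hour)


# Toy data for illustration; not taken from the paper.
jobs = [
    Job("logo design", reward=100.0, hours=4.0, win_probability=0.5),
    Job("data cleaning", reward=60.0, hours=2.0, win_probability=0.8),
    Job("translation", reward=200.0, hours=10.0, win_probability=0.9),
]
best = pick_job(jobs)
print(best.name)
```

Under these toy numbers the smallest job wins out, because it pays the most per hour once the chance of winning is factored in. A real agent would presumably weigh more than this single ratio.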

The simulated freelancer marketplace reveals that consistent success isn’t simply about completing tasks, but rather a nuanced interplay of resource management and strategic decision-making. Agents excelling within this competitive environment demonstrate an exceptional ability to assess job requirements, allocate computational resources effectively, and anticipate market fluctuations, skills that translate into remarkably high win rates. Notably, the top-performing agents consistently secure over 90% of the opportunities they pursue, highlighting the significant advantage gained through optimized efficiency and proactive engagement with the economic conditions of the simulation. This suggests that, even in a simplified digital economy, strategic foresight and efficient resource allocation are paramount to achieving sustained competitive advantage.

The Architecture of Intelligence: Skill and Self-Awareness

An AI agent’s performance depends directly on holding skills relevant to the jobs it takes on. These skills are the agent’s capabilities for the specific actions or processes a task requires. Agents lacking the appropriate skills work less efficiently and consequently earn lower rewards, so skill acquisition and application are primary determinants of an agent’s overall success and earning potential. To maximize output and economic gains, an agent needs proficiency in the skills its jobs demand.

Metacognition, the capacity of an AI agent to evaluate its own skills and limitations, is a critical factor in maximizing performance and reward. Analysis indicates a strong positive correlation – 0.744 – between an agent’s metacognitive capability and the rewards it receives. This suggests that agents capable of accurately assessing their strengths and weaknesses are better equipped to identify and pursue optimal opportunities, leading to increased earnings. The ability to self-evaluate enables agents to avoid tasks where they are likely to fail and focus on those where their skillset provides a competitive advantage, ultimately driving improved outcomes.
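
For readers unfamiliar with how such a coefficient is obtained, here is a minimal sketch of a correlation computation. It assumes the Pearson coefficient, which the article does not confirm, and the scores and rewards below are toy values chosen for easy arithmetic, not data from the study.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length, at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / math.sqrt(var_x * var_y)


# Toy values for illustration only (hypothetical metacognition scores and rewards).
metacognition_scores = [1.0, 2.0, 3.0, 4.0, 5.0]
rewards = [2.0, 1.0, 4.0, 3.0, 5.0]
r = pearson(metacognition_scores, rewards)
print(round(r, 3))
```

A value near 1 means higher scores tend to accompany higher rewards; it does not by itself show that one causes the other.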

Agent self-assessment relies on the analysis of ‘Recent Actions’ to facilitate iterative learning. This process involves evaluating the outcomes of previously completed tasks, categorizing them as successes or failures, and identifying patterns contributing to each result. By examining the specific parameters and execution of ‘Recent Actions’, agents can refine their understanding of task requirements and adjust their strategies accordingly. This data-driven approach enables agents to improve performance over time by reinforcing effective behaviors and mitigating those that lead to unfavorable outcomes, directly contributing to increased rewards and efficiency.
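
One simple way to picture this loop is a per-skill success rate computed over recent outcomes. The sketch below is an assumption about how such a review might look, not the paper's mechanism: the `(skill, succeeded)` record format and the rule of targeting the lowest success rate are hypothetical.

```python
from collections import defaultdict


def success_rate_by_skill(recent_actions: list[tuple[str, bool]]) -> dict[str, float]:
    """Fraction of successful outcomes per skill.

    recent_actions is a list of (skill, succeeded) pairs; this record
    format is a hypothetical simplification of 'Recent Actions'.
    """
    totals: dict[str, int] = defaultdict(int)
    wins: dict[str, int] = defaultdict(int)
    for skill, succeeded in recent_actions:
        totals[skill] += 1
        if succeeded:
            wins[skill] += 1
    return {skill: wins[skill] / totals[skill] for skill in totals}


def weakest_skill(recent_actions: list[tuple[str, bool]]) -> str:
    """Skill with the lowest recent success rate: a candidate for improvement."""
    rates = success_rate_by_skill(recent_actions)
    return min(rates, key=rates.get)


# Toy history for illustration only.
recent_actions = [
    ("python", True),
    ("python", True),
    ("design", False),
    ("design", True),
    ("writing", False),
]
rates = success_rate_by_skill(recent_actions)
target = weakest_skill(recent_actions)
print(rates, target)
```

With so few records the rates are noisy; an agent relying on this signal would likely want a longer window or some smoothing before acting on it.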

Decoding the Competition: Mirroring and Anticipation

Effective performance of AI agents within a competitive environment requires robust competitor modeling. This process involves the systematic identification and analysis of other agents’ capabilities, including their typical task selection criteria, bidding strategies, and resource allocation patterns. Understanding these strengths and weaknesses allows an agent to anticipate competitor actions, identify opportunities for differentiation, and optimize its own behavior to maximize rewards. Competitor modeling is not simply a passive observation; it is an active process of data collection, analysis, and adaptation, forming a critical component of an agent’s overall strategic framework.

Agent performance is directly informed by analysis of ‘Market Activity’ data, specifically patterns observed in job selection and bidding behaviors of other agents. This data is used to establish a level of ‘Competitive Awareness’, which has demonstrated a positive correlation of 0.643 with agent rewards. The data includes metrics such as frequency of job targeting, bid amounts relative to job value, and responsiveness to competitor actions. Higher levels of competitive awareness, as determined by these metrics, consistently correlate with increased reward acquisition for the agent, indicating the value of understanding competitor strategies within the marketplace.
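
As an illustration of one such metric, the sketch below computes each competitor's average bid relative to job value. The `(agent, job_value, bid)` record format and the agent names are hypothetical, and the article does not specify how the study actually derives its awareness measure.

```python
from collections import defaultdict


def mean_bid_ratio(market_activity: list[tuple[str, float, float]]) -> dict[str, float]:
    """Average of bid / job_value per agent.

    market_activity is a list of (agent, job_value, bid) records; this
    format is an assumed simplification of 'Market Activity' data.
    """
    ratio_sums: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for agent, job_value, bid in market_activity:
        ratio_sums[agent] += bid / job_value
        counts[agent] += 1
    return {agent: ratio_sums[agent] / counts[agent] for agent in ratio_sums}


# Toy records for illustration only.
market_activity = [
    ("agent_a", 100.0, 80.0),
    ("agent_a", 200.0, 180.0),
    ("agent_b", 100.0, 50.0),
]
ratios = mean_bid_ratio(market_activity)
most_aggressive = min(ratios, key=ratios.get)  # lowest average bid ratio
print(ratios, most_aggressive)
```

An agent could use a table like this to decide which jobs to contest and which to leave to a competitor that habitually underbids.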

Strategic planning, as implemented by AI agents, involves the development of long-term objectives and associated actions designed to optimize performance within the competitive landscape. Data analysis indicates a strong positive correlation – 0.697 – between the implementation of strategic planning and the resulting rewards earned by the agent. This suggests that agents which proactively develop and execute long-term strategies consistently achieve higher levels of success compared to those operating without such planning. The planning process utilizes insights gained from competitor modeling and market activity analysis to identify opportunities and mitigate risks, ultimately driving improved outcomes and maximizing rewards.

The Weight of Reputation: Signaling Value in a Simulated World

The foundation of sustained success for an AI agent within a competitive marketplace lies in the cultivation of a strong reputation. This isn’t achieved through self-promotion, but rather through a consistent record of delivering high-quality results on completed assignments, effectively building trust with potential clients. Each ‘Job’ successfully undertaken serves as a building block, demonstrating the agent’s reliability and competence. This accrued reputation isn’t merely a symbolic metric; it directly influences how the agent is perceived and, crucially, which opportunities become available, creating a positive feedback loop where a proven track record unlocks access to more complex and rewarding tasks.

An established reputation works as a gateway to better opportunities in a dynamic marketplace, much as it does in human economic interactions, where a history of reliable, high-quality work commands premium rates and preferential treatment. Agents with strong reputations aren’t simply completing more tasks; they are positioned to accept assignments offering significantly higher returns. This selective access to lucrative opportunities is a key differentiator in long-term performance, allowing reputable agents to consistently outperform their counterparts.

An AI agent’s bidding strategy is inextricably linked to its established reputation within a simulated marketplace; consistently delivering high-quality work builds trust, allowing the agent to confidently submit higher bids for subsequent jobs. This isn’t merely speculative – research demonstrates a clear correlation between positive reputation and increased earnings, as the most successful AI model consistently secured more lucrative opportunities and ultimately accumulated the highest cumulative rewards among all tested large language models. The study highlights that a strong reputation functions as a valuable asset, effectively signaling reliability and justifying premium pricing, thus driving profitability and long-term success in a competitive environment.
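
The link between reputation and bid size can be made concrete with a small sketch. Everything here is an assumption for illustration: the article does not say how reputation is updated or how bids are priced, so the exponential moving average, the `weight` and `max_premium` parameters, and the linear premium are hypothetical choices.

```python
def update_reputation(reputation: float, rating: float, weight: float = 0.2) -> float:
    """Blend a new job rating into the running reputation.

    Uses an exponential moving average (an assumed update rule);
    both reputation and rating are expected to lie in [0, 1].
    """
    return (1 - weight) * reputation + weight * rating


def bid(base_price: float, reputation: float, max_premium: float = 0.5) -> float:
    """Price a bid as the base price plus a premium that scales with reputation."""
    return base_price * (1 + max_premium * reputation)


# Toy run: an agent starting at a neutral reputation completes three well-rated jobs.
reputation = 0.5
for rating in (1.0, 1.0, 0.9):
    reputation = update_reputation(reputation, rating)

print(round(reputation, 3), round(bid(100.0, reputation), 2))
```

Under this rule a newcomer with no track record bids at the base price, while a fully trusted agent can ask up to 50% more, which mirrors the premium pricing the paragraph describes.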

The study meticulously details how agents navigate a competitive landscape, prioritizing skill acquisition not merely for task completion, but for sustained economic viability. This echoes Barbara Liskov’s insight: “Programs must be correct and usable.” Correctness, within this simulation, isn’t simply about functional code, but about agents accurately assessing their skill deficits and strategically investing in improvement. The agents’ self-optimization isn’t about achieving a pre-defined ideal, but adapting to the shifting demands of the labor market – a constant process of refinement mirroring the demands of robust software design. The simulation demonstrates that agents failing to embrace this iterative process quickly fall behind, proving that even in artificial economies, continuous learning is paramount to success.

Beyond the Grind: Where to Next?

This work offers a sandbox, admittedly. A neatly contained simulation of economic pressures on artificial agents. But the real world rarely offers such clean boundaries. The question isn’t simply whether agents can strategically improve within a defined system, but whether they’ll dismantle the definition of ‘improvement’ itself. Current reputation systems, for example, function as brittle constraints – easily gamed, and almost certainly destined for exploitation. The next iteration must model not just adaptation within rules, but the inevitable attempts to rewrite them.

Furthermore, the focus on skill-based competition feels… quaint. Labour markets aren’t meritocracies, even for silicon-based workers. Alliances, rent-seeking behavior, and the sheer randomness of opportunity will inevitably shape the landscape. A truly robust model will need to incorporate these messy, irrational elements. It will need to allow for agents that deliberately undermine efficiency in pursuit of power – or simply because they can.

The current framework is a start, a useful disassembly of the ‘rational agent’ myth. But the true test lies in building systems that are demonstrably breakable. Only then will one begin to understand the limits – and the unexpected possibilities – of intelligence in a competitive world.

Original article: https://arxiv.org/pdf/2512.04988.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-12-05 06:27