Author: Denis Avetisyan

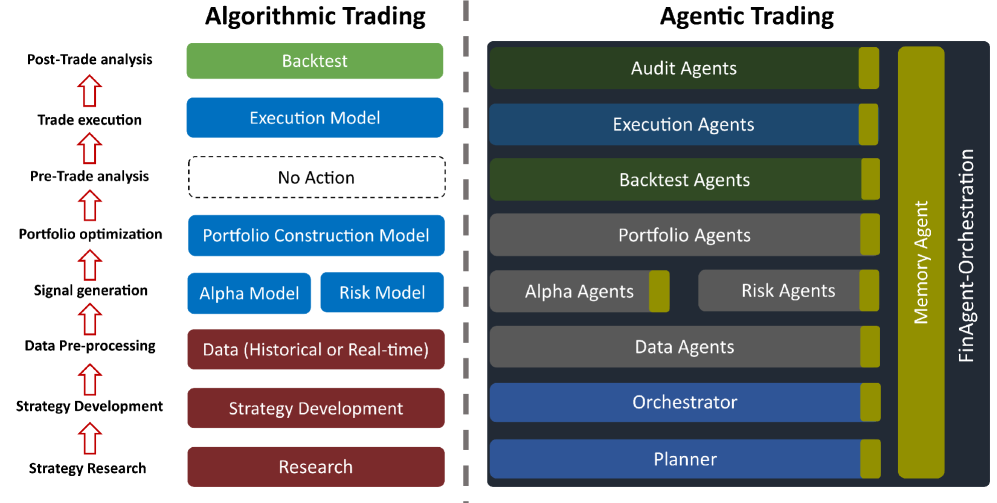

A new framework is emerging that moves beyond traditional algorithmic trading to create fully autonomous, language-driven agents capable of navigating complex financial markets.

This paper introduces FinAgent, an orchestration framework leveraging large language models and structured context protocols for agentic trading in both stock and cryptocurrency markets.

Despite the promise of artificial intelligence in finance, building effective algorithmic trading systems remains a complex undertaking that often requires substantial expertise and resources. This paper, ‘Orchestration Framework for Financial Agents: From Algorithmic Trading to Agentic Trading’, introduces FinAgent, a framework that aims to democratize financial intelligence by mapping traditional algorithmic trading components to a suite of interacting agents. The reported results show competitive performance: a 20.42% return with a 2.63 Sharpe ratio for stocks, and an 8.39% return with a 0.38 Sharpe ratio for BTC. They also raise the question of how such orchestrated agent systems can further refine and adapt to the ever-changing dynamics of financial markets.

The Evolving Market: A System in Flux

Conventional financial modeling often relies on static datasets and simplified assumptions, creating a fundamental mismatch with the perpetually shifting landscape of modern markets. These models frequently struggle to incorporate the sheer volume of real-time information (news feeds, social sentiment, economic indicators) and the intricate interplay between various financial instruments. The inherent complexity of interconnected markets, where a single event can trigger cascading effects, overwhelms systems designed for linear projections. Consequently, traditional approaches frequently fail to anticipate or accurately respond to unexpected volatility, leading to suboptimal investment decisions and increased risk. This limitation underscores the need for a more adaptive and responsive framework capable of processing dynamic data and understanding the nuanced interactions that define financial reality.

The FinAgent framework approaches financial modeling through a distributed architecture, breaking down complex investment tasks into a network of specialized agents. Each agent has a specific area of expertise, such as data acquisition, sentiment analysis, or portfolio rebalancing, and operates autonomously while communicating and collaborating with the others through a defined protocol. Because the agents are separate units, one of them can be updated or replaced without disrupting the rest of the framework, and new agents can be integrated to address emerging investment opportunities or incorporate novel data sources. This agent-based approach mirrors the collaborative dynamics of a financial trading floor, but executes with automated precision and speed.

This modular architecture is what separates FinAgent from traditional, monolithic financial systems. When market conditions shift or new data sources emerge, an individual agent module can be changed in isolation rather than forcing a complete overhaul. The framework scales in the same way: increased data volume or complexity is handled by adding more specialized agents to the network, whereas expanding a monolithic system often necessitates significant redesign and carries a substantial risk of systemic failure. The resulting flexibility allows FinAgent to respond to evolving financial landscapes and to integrate new technologies with comparatively little friction, which in turn supports more resilient and efficient investment strategies.

The FinAgent framework integrates Large Language Models into automated investment strategies to navigate the complexities of financial markets. This system isn’t merely analyzing data; it’s designed to autonomously manage the entire investment lifecycle, from initial research and portfolio construction to trade execution and risk management. In the reported evaluations, the framework achieved a 20.42% return when applied to stock market data and an 8.39% return on Bitcoin data. These are backtested figures rather than live trading results, but they suggest the approach can be competitive with traditional methods, driven by the LLM’s capacity to interpret nuanced market signals and adapt to rapidly changing conditions.

Orchestrating Intelligence: The FinAgent Control Plane

The Orchestrator Agent uses the Model Context Protocol (MCP) to manage agent pools, treating it as a standardized interface for passing context to agents. The protocol defines the format and method for delivering the data an agent needs, such as configuration parameters, tool descriptions, and input schemas, so that each agent operates with the correct information for its assigned tasks. This lets the Orchestrator allocate resources dynamically by adding, removing, or reconfiguring agents within a pool without disrupting ongoing operations. As described, the control plane also versions these contexts, allowing for updates and rollbacks, and monitors agent health and performance based on the context each agent received.
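To make the pool operations concrete, here is a minimal sketch of a registry that adds, reconfigures, rolls back, and removes agents. It is plain Python and does not use any MCP library; `AgentContext`, `AgentPool`, and the configuration keys are hypothetical names chosen for illustration, not part of FinAgent or of the protocol.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class AgentContext:
    """Hypothetical context payload; the field names are illustrative."""
    version: int
    config: Dict[str, Any]


@dataclass
class AgentPool:
    """Toy registry standing in for an orchestrator-managed agent pool."""
    agents: Dict[str, AgentContext] = field(default_factory=dict)
    history: Dict[str, List[AgentContext]] = field(default_factory=dict)

    def add(self, name: str, config: Dict[str, Any]) -> None:
        self.agents[name] = AgentContext(version=1, config=dict(config))
        self.history[name] = []

    def reconfigure(self, name: str, **changes: Any) -> None:
        current = self.agents[name]
        self.history[name].append(current)  # keep the old context for rollback
        self.agents[name] = AgentContext(
            version=current.version + 1,
            config={**current.config, **changes},
        )

    def rollback(self, name: str) -> None:
        # Restore the most recent previous context, if one exists.
        if not self.history[name]:
            raise ValueError(f"no earlier context for agent {name!r}")
        self.agents[name] = self.history[name].pop()

    def remove(self, name: str) -> None:
        self.agents.pop(name)
        self.history.pop(name)


pool = AgentPool()
pool.add("alpha", {"universe": "stocks", "lookback_days": 30})
pool.reconfigure("alpha", lookback_days=60)   # context version 2
upgraded_version = pool.agents["alpha"].version
pool.rollback("alpha")                        # back to version 1
```

Keeping every superseded context in a per-agent history list is one simple way to support rollbacks; a production system would more likely persist these versions outside the process.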

The FinAgent control plane utilizes a time-sensitive data and instruction delivery system to optimize agent performance. This is achieved through a combination of scheduling algorithms and prioritized messaging. Incoming data is assessed for relevance to each agent’s current task, and instructions are formatted according to the agent’s capabilities. Delivery timing is determined by task dependencies and real-time system load, ensuring agents receive necessary inputs precisely when required. This precise synchronization minimizes latency and maximizes throughput, enabling efficient execution of complex financial operations and reducing the risk of outdated or incomplete information impacting decision-making.
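One way to picture delivery ordering driven by task dependencies and priorities is a priority queue layered on a dependency graph. The sketch below is an assumption about how such a scheduler could look, not the scheduling algorithm used by FinAgent; the task names and priority values are invented for the example.

```python
import heapq
from typing import Dict, List, Set, Tuple


def schedule(tasks: Dict[str, Tuple[int, Set[str]]]) -> List[str]:
    """Return a delivery order that respects dependencies, then priority.

    `tasks` maps a task name to (priority, dependencies). A lower priority
    number means the task is served sooner once its inputs are ready.
    """
    remaining = {name: set(deps) for name, (_, deps) in tasks.items()}
    ready = [(prio, name) for name, (prio, deps) in tasks.items() if not deps]
    heapq.heapify(ready)
    order: List[str] = []
    while ready:
        _, name = heapq.heappop(ready)
        order.append(name)
        # Release every task that was waiting only on the one just finished.
        for other, deps in remaining.items():
            if name in deps:
                deps.remove(name)
                if not deps:
                    heapq.heappush(ready, (tasks[other][0], other))
    if len(order) != len(tasks):
        raise ValueError("dependency cycle or unknown dependency")
    return order


tasks = {
    "fetch_prices": (1, set()),
    "fetch_news": (2, set()),
    "risk_report": (0, {"fetch_prices"}),
    "alpha_signals": (1, {"fetch_prices", "fetch_news"}),
    "portfolio": (1, {"alpha_signals", "risk_report"}),
}
order = schedule(tasks)
```

Here the risk report jumps ahead of the news fetch because it has a higher priority and its only input is already available, while the portfolio step waits until both of its inputs have been delivered.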

The Agent-to-Agent Protocol (A2A) enables direct, peer-to-peer communication between FinAgents without requiring centralized orchestration. This functionality supports complex workflows where agents can delegate subtasks to each other, leveraging specialized capabilities and distributed processing. A2A utilizes a message-passing system, allowing agents to request services, share intermediate results, and coordinate actions asynchronously. The protocol supports various communication patterns, including request/response, publish/subscribe, and streaming, enabling flexible and scalable collaboration within the FinAgent network. This direct communication minimizes latency and improves the efficiency of multi-agent tasks, particularly those requiring real-time data exchange or iterative refinement.
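The request/response pattern can be sketched with asynchronous message queues. This is an illustration of peer-to-peer message passing in general, using `asyncio` from the standard library; it does not implement the A2A wire format, and the `Message` fields and agent functions are hypothetical.

```python
import asyncio
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Message:
    sender: str
    kind: str                                  # "request", "response", or "stop"
    payload: Any
    reply_to: Optional[asyncio.Queue] = None   # where the answer should go


async def risk_agent(inbox: asyncio.Queue) -> None:
    """Answer volatility requests until a 'stop' message arrives."""
    while True:
        msg = await inbox.get()
        if msg.kind == "stop":
            break
        returns = msg.payload
        mean = sum(returns) / len(returns)
        var = sum((r - mean) ** 2 for r in returns) / (len(returns) - 1)
        await msg.reply_to.put(Message("risk", "response", var ** 0.5))


async def portfolio_agent(risk_inbox: asyncio.Queue) -> float:
    """Delegate a volatility estimate directly to the risk agent."""
    reply_box: asyncio.Queue = asyncio.Queue()
    request = Message("portfolio", "request", [0.01, -0.02, 0.03], reply_box)
    await risk_inbox.put(request)
    response = await reply_box.get()
    await risk_inbox.put(Message("portfolio", "stop", None))
    return response.payload


async def main() -> float:
    risk_inbox: asyncio.Queue = asyncio.Queue()
    worker = asyncio.create_task(risk_agent(risk_inbox))
    volatility = await portfolio_agent(risk_inbox)
    await worker
    return volatility


volatility = asyncio.run(main())
```

Each request carries its own reply queue, so the two agents exchange messages without a central router in between.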

The Memory Agent utilizes Universally Unique Identifiers (UUIDs) to maintain a persistent record of agent state and operational history. This implementation allows for comprehensive auditability, enabling the reconstruction of past actions and the identification of causal relationships. By associating each action and data point with a unique UUID, the system ensures data integrity and facilitates efficient retrieval for both monitoring and debugging purposes. Furthermore, the retention of historical data empowers improved decision-making capabilities, as agents can leverage past experiences to optimize future performance and adapt to changing conditions. This historical context is crucial for complex workflows and maintaining consistent agent behavior over time.
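A small sketch shows how UUID-keyed records can support this kind of audit trail. The `MemoryStore` class, the `parent_id` link, and the payloads are assumptions made for illustration; the article does not specify the Memory Agent's actual schema or storage backend.

```python
import uuid
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class Record:
    record_id: str
    agent: str
    action: str
    payload: Dict[str, Any]
    parent_id: Optional[str]   # UUID of the record that led to this one
    created_at: str


class MemoryStore:
    """In-memory stand-in for a persistent, UUID-keyed history."""

    def __init__(self) -> None:
        self._records: Dict[str, Record] = {}

    def log(self, agent: str, action: str, payload: Dict[str, Any],
            parent_id: Optional[str] = None) -> str:
        record_id = str(uuid.uuid4())
        self._records[record_id] = Record(
            record_id, agent, action, payload, parent_id,
            datetime.now(timezone.utc).isoformat(),
        )
        return record_id

    def lineage(self, record_id: str) -> List[Record]:
        """Walk parent links back to the root, oldest record first."""
        chain: List[Record] = []
        current: Optional[str] = record_id
        while current is not None:
            record = self._records[current]
            chain.append(record)
            current = record.parent_id
        return list(reversed(chain))


store = MemoryStore()
signal_id = store.log("alpha", "signal", {"symbol": "AAA", "score": 0.7})
weights_id = store.log("portfolio", "weights", {"AAA": 0.6}, parent_id=signal_id)
order_id = store.log("execution", "order", {"symbol": "AAA", "qty": 200},
                     parent_id=weights_id)
trail = [(r.agent, r.action) for r in store.lineage(order_id)]
```

Linking each record to the one that caused it is what makes it possible to reconstruct why a given order was placed.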

From Signal to Portfolio: The Agent Workflow in Action

The Alpha Agent generates investment signals by processing and analyzing published research documents. This process leverages Large Language Models (LLMs) to extract relevant data points, identify potential investment opportunities, and assess the validity of research findings. The LLMs are trained on a corpus of financial literature, enabling them to understand complex terminology and relationships within the data. The output of this analysis is a set of signals, which represent potential investment recommendations, along with associated confidence scores and supporting evidence derived from the analyzed research. These signals are then passed to the Portfolio Agent for further evaluation and integration into a broader investment strategy.

The Portfolio Agent utilizes investment signals generated by the Alpha Agent and integrates them with risk assessments provided by the Risk Agent to construct optimized portfolios. This process involves weighting assets based on predicted returns, while simultaneously considering factors such as volatility, correlation, and potential drawdowns identified by the Risk Agent. Optimization algorithms, potentially including mean-variance optimization or more complex techniques, are employed to maximize expected returns for a given level of risk, or to minimize risk for a target return. The resulting portfolio weights represent the allocation of capital across different assets, designed to achieve a balance between risk and reward as defined by the user’s investment objectives and constraints.
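As a worked illustration of mean-variance weighting, the sketch below solves Σw = μ and rescales the result so the weights sum to one. This is the textbook unconstrained solution with a zero risk-free rate, not necessarily the optimizer FinAgent uses; the expected returns and covariance values are made up, and the linear solver is written in plain Python to keep the example self-contained.

```python
from typing import List


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]   # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def mean_variance_weights(mu: List[float], cov: List[List[float]]) -> List[float]:
    """Weights proportional to inverse(cov) @ mu, rescaled to sum to 1."""
    raw = solve(cov, mu)
    total = sum(raw)
    if total <= 0:
        raise ValueError("raw weights do not sum to a positive number")
    return [w / total for w in raw]


# Hypothetical inputs: expected returns from the signal step and a
# covariance matrix from the risk step.
mu = [0.08, 0.12]
cov = [[0.04, 0.006],
       [0.006, 0.09]]
weights = mean_variance_weights(mu, cov)   # approximately [0.6, 0.4]
```

The second asset has the higher expected return, but its larger variance pulls its weight down to about 40%. Real portfolios would usually add constraints such as no short selling or position limits, which this closed-form version does not handle.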

The Execution Agent receives portfolio weightings from the Portfolio Agent and converts these into specific order instructions for trade execution. This includes determining order type, size, and timing, adhering to pre-defined constraints and exchange regulations. Concurrently, the Audit Agent independently verifies the entire process, from signal generation to order execution, by cross-referencing data across all agents and maintaining a complete audit trail. This verification includes confirming adherence to investment mandates, regulatory compliance, and accurate trade reconciliation, flagging any discrepancies for review and ensuring the integrity of the investment workflow.
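The conversion from target weights to orders can be sketched as a comparison between target and current share counts. The `Order` type, the `min_notional` filter, the tickers, and the prices are all hypothetical; order type and timing, which the Execution Agent also decides, are left out of this simplified version.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Order:
    symbol: str
    side: str       # "BUY" or "SELL"
    quantity: int


def weights_to_orders(target_weights: Dict[str, float],
                      positions: Dict[str, int],
                      prices: Dict[str, float],
                      equity: float,
                      min_notional: float = 0.0) -> List[Order]:
    """Turn long-only target weights into whole-share orders."""
    orders: List[Order] = []
    # Include held symbols that are missing from the target: they go to zero.
    for symbol in sorted(set(target_weights) | set(positions)):
        weight = target_weights.get(symbol, 0.0)
        # Round down to whole shares; the epsilon guards against float noise.
        target_shares = math.floor(weight * equity / prices[symbol] + 1e-9)
        delta = target_shares - positions.get(symbol, 0)
        if delta == 0 or abs(delta) * prices[symbol] < min_notional:
            continue   # nothing to do, or the trade is too small to bother
        side = "BUY" if delta > 0 else "SELL"
        orders.append(Order(symbol, side, abs(delta)))
    return orders


orders = weights_to_orders(
    target_weights={"AAA": 0.6, "BBB": 0.4},
    positions={"AAA": 100, "BBB": 150, "CCC": 10},
    prices={"AAA": 200.0, "BBB": 400.0, "CCC": 50.0},
    equity=100_000.0,
)
```

With these inputs the function buys 200 shares of AAA, sells 50 of BBB, and closes the CCC position, since CCC no longer appears in the target portfolio.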

The Planner Agent continuously monitors market data, including price movements, volume, and macroeconomic indicators, to assess the validity of the current investment plan. It also receives performance data and diagnostic reports from all other agents – Alpha, Portfolio, Execution, and Risk – regarding signal generation, portfolio construction, order execution, and risk exposure. Based on this combined input, the Planner Agent dynamically adjusts the investment strategy by modifying parameters such as position sizing, asset allocation weights, and trading frequency. These adjustments are designed to optimize portfolio performance while adhering to pre-defined risk constraints and adapting to changing market dynamics. The agent’s decision-making process utilizes quantitative metrics and algorithms to identify deviations from expected outcomes and implement corrective actions, ensuring the overall investment process remains aligned with its objectives.

Validating the System: Rigor in Backtesting

The Backtest Agent serves as a critical component, subjecting the trading strategy to exhaustive analysis using historical data. This process isn’t simply about observing past performance; it involves the calculation of vital metrics like the Sharpe Ratio – a measure of risk-adjusted return – and maximum drawdown, which quantifies the largest peak-to-trough decline during a specific period. These calculations provide a robust assessment of the strategy’s potential profitability and its inherent risk profile. By meticulously evaluating performance across varied historical datasets, the agent helps identify potential weaknesses and ensures the strategy’s resilience under different market conditions. The resulting metrics offer a data-driven foundation for understanding the strategy’s behavior and making informed decisions about its implementation.
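Both metrics are short to compute from a series of periodic returns. The sketch below uses the common conventions of a sample standard deviation and annualization by the square root of the number of periods per year; the default of 252 trading days and the sample returns are assumptions for the example, and the paper's exact conventions may differ.

```python
import math
import statistics
from typing import Sequence


def sharpe_ratio(returns: Sequence[float],
                 risk_free_rate: float = 0.0,
                 periods_per_year: int = 252) -> float:
    """Annualized Sharpe ratio: mean excess return over its volatility."""
    per_period_rf = risk_free_rate / periods_per_year
    excess = [r - per_period_rf for r in returns]
    volatility = statistics.stdev(excess)
    if volatility == 0:
        raise ValueError("returns have zero variance")
    return statistics.mean(excess) / volatility * math.sqrt(periods_per_year)


def max_drawdown(returns: Sequence[float]) -> float:
    """Largest peak-to-trough decline of the equity curve, as a negative number."""
    equity, peak, worst = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1.0 + r
        peak = max(peak, equity)
        worst = min(worst, equity / peak - 1.0)
    return worst


daily_returns = [0.01, 0.02, 0.03]
sharpe = sharpe_ratio(daily_returns)            # mean 0.02 / stdev 0.01, annualized
drawdown = max_drawdown([0.10, -0.20, 0.05])    # 1.10 -> 0.88 is a 20% decline
```

A drawdown is reported as a negative fraction here, matching the sign convention of the -3.59% figure quoted for the stock strategy.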

Robust backtesting relies heavily on preventing data leakage, a subtle yet critical issue where future information inadvertently influences past performance calculations. This can occur through various means, such as including data in the training set that wouldn’t have been available at the time a trading decision would have been made, leading to unrealistically optimistic results. The framework employs strict data handling protocols – specifically, a temporal split where data is divided into training, validation, and testing sets based on time, ensuring that the model learns from the past and is evaluated on unseen future data. This methodology avoids the pitfalls of look-ahead bias and provides a more accurate assessment of the strategy’s true potential, fostering confidence in its ability to generalize to live market conditions and deliver consistent, realistic returns. Without these preventative measures, reported performance metrics become misleading, failing to reflect the actual risks and rewards an investor would experience.
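A temporal split can be sketched as a cut on two dates, so that no validation or test observation precedes anything in the training set. The cut-off dates and the toy price rows below are invented for illustration and are not the periods used in the paper.

```python
from datetime import date, timedelta
from typing import List, Tuple

Row = Tuple[date, float]   # (observation date, value)


def temporal_split(rows: List[Row], train_end: date,
                   valid_end: date) -> Tuple[List[Row], List[Row], List[Row]]:
    """Split rows chronologically into train, validation, and test sets."""
    if train_end >= valid_end:
        raise ValueError("train_end must come before valid_end")
    ordered = sorted(rows, key=lambda row: row[0])
    train = [row for row in ordered if row[0] <= train_end]
    valid = [row for row in ordered if train_end < row[0] <= valid_end]
    test = [row for row in ordered if row[0] > valid_end]
    return train, valid, test


start = date(2024, 1, 1)
rows = [(start + timedelta(days=i), 100.0 + i) for i in range(10)]
train, valid, test = temporal_split(rows, date(2024, 1, 6), date(2024, 1, 8))

# Every training date is earlier than every validation date, and so on.
no_overlap = train[-1][0] < valid[0][0] <= valid[-1][0] < test[0][0]
```

Unlike a random shuffle, this split never lets the model see observations dated after the ones it is evaluated on. Features computed from rolling windows need the same care, since a window that reaches past the decision date reintroduces look-ahead bias.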

The system’s adaptability is bolstered by its integration with two prominent financial data providers: Polygon API and yfinance. This dual-source approach ensures robustness and flexibility in data acquisition. Polygon API provides access to real-time and historical stock data with a focus on speed and reliability, while yfinance offers a comprehensive, readily available source of historical data, particularly useful for backtesting and broader market analysis. By seamlessly incorporating both APIs, the framework mitigates the risk of data dependency and allows users to access a wider range of financial instruments and timeframes, ultimately enhancing the scope and reliability of strategy evaluation and implementation.
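The dual-source idea can be sketched as a fallback loop over data providers. To keep the example runnable offline, the two fetchers below are hypothetical stand-ins rather than real Polygon or yfinance calls; in practice each would wrap the corresponding client library, and the article does not say how FinAgent orders or combines the two sources.

```python
from typing import Callable, List, Sequence, Tuple

Bar = Tuple[str, float]                          # (ISO date, close price)
Fetcher = Callable[[str, str, str], List[Bar]]   # (symbol, start, end) -> bars


def fetch_with_fallback(symbol: str, start: str, end: str,
                        sources: Sequence[Tuple[str, Fetcher]]) -> Tuple[str, List[Bar]]:
    """Try each source in order and return the first non-empty result."""
    errors: List[str] = []
    for name, fetch in sources:
        try:
            bars = fetch(symbol, start, end)
        except Exception as exc:   # a failing provider should not stop the run
            errors.append(f"{name}: {exc}")
            continue
        if bars:
            return name, bars
        errors.append(f"{name}: no data")
    raise RuntimeError("all sources failed: " + "; ".join(errors))


def primary_stub(symbol: str, start: str, end: str) -> List[Bar]:
    """Hypothetical primary provider that is currently unavailable."""
    raise ConnectionError("rate limit exceeded")


def secondary_stub(symbol: str, start: str, end: str) -> List[Bar]:
    """Hypothetical secondary provider returning made-up bars."""
    return [(start, 100.0), (end, 101.5)]


used_source, bars = fetch_with_fallback(
    "AAA", "2024-01-02", "2024-01-03",
    sources=[("primary", primary_stub), ("secondary", secondary_stub)],
)
```

Passing the providers in as callables keeps the fallback logic independent of any one vendor's client library.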

Rigorous backtesting reveals the framework’s capacity to generate substantial risk-adjusted returns. Specifically, the strategy achieved a Sharpe Ratio of 2.63 when applied to stock data, markedly exceeding the performance of typical Exchange Traded Funds, which range between 0.79 and 1.86. Performance on Bitcoin data also proved strong, with a Sharpe Ratio of 0.38, significantly outperforming a simple Buy & Hold strategy’s 0.168 ratio. Further reinforcing its robustness, the framework exhibits a low volatility of 11.83% for stocks and limits potential downside risk with a maximum drawdown of just -3.59%, indicating a controlled and potentially valuable approach to asset allocation.

The pursuit of agentic trading systems, as detailed in this framework, mirrors the inherent transience of all constructed systems. FinAgent, with its orchestration of LLMs and context protocols, represents a current apex of financial architecture, yet acknowledges the inevitable evolution, and potential obsolescence, of its design. As Blaise Pascal observed, “The eloquence of youth is that it speaks of things as they are; the eloquence of age, of things as they have been.” This rings true; while FinAgent currently demonstrates competitive performance, future iterations, or entirely new paradigms, will undoubtedly emerge, building upon, or replacing, its foundations. The framework’s strength lies not in its permanence, but in its adaptability within the larger cycle of innovation.

What Lies Ahead?

The FinAgent framework, while demonstrating a functional progression from algorithmic execution to agentic behavior, merely marks the opening of a protracted negotiation with complexity. The competitive gains achieved are not, ultimately, the point. Rather, they are the price of admission to a more difficult conversation: how to build systems that understand the inevitable erosion of predictive power in financial markets. Every model, however elegantly constructed, operates within a receding horizon of relevance. The true challenge isn’t maximizing short-term returns, but designing for graceful degradation.

Future work must address the brittleness inherent in current LLM-driven approaches. Context protocols, while providing a structured memory, remain susceptible to the ambiguities of natural language and the non-stationary nature of market signals. A focus on verifiable reasoning – systems that can articulate why a decision was made, and quantify the associated uncertainty – is paramount. Architecture without history is fragile; the system must retain an audit trail of its learning, its mistakes, and the evolving rationale behind its actions.

The integration of external knowledge sources, beyond the confines of historical price data, presents another crucial avenue for exploration. But simply adding data isn’t enough. The framework needs mechanisms to assess the provenance, reliability, and potential biases of these external inputs. Every delay in implementation is the price of understanding; a rushed deployment risks amplifying existing vulnerabilities, not mitigating them. The longevity of such systems will be determined not by their initial performance, but by their capacity to adapt, to learn from failure, and to acknowledge the inherent limitations of prediction.

Original article: https://arxiv.org/pdf/2512.02227.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-12-03 08:58