Author: Denis Avetisyan

New research reveals that active gradient inversion attacks, designed to steal data in collaborative learning, aren’t as hidden as attackers believe.

Clients in federated learning systems can reliably detect active gradient inversion attacks through analysis of model updates and statistical anomalies, opening avenues for robust defenses.

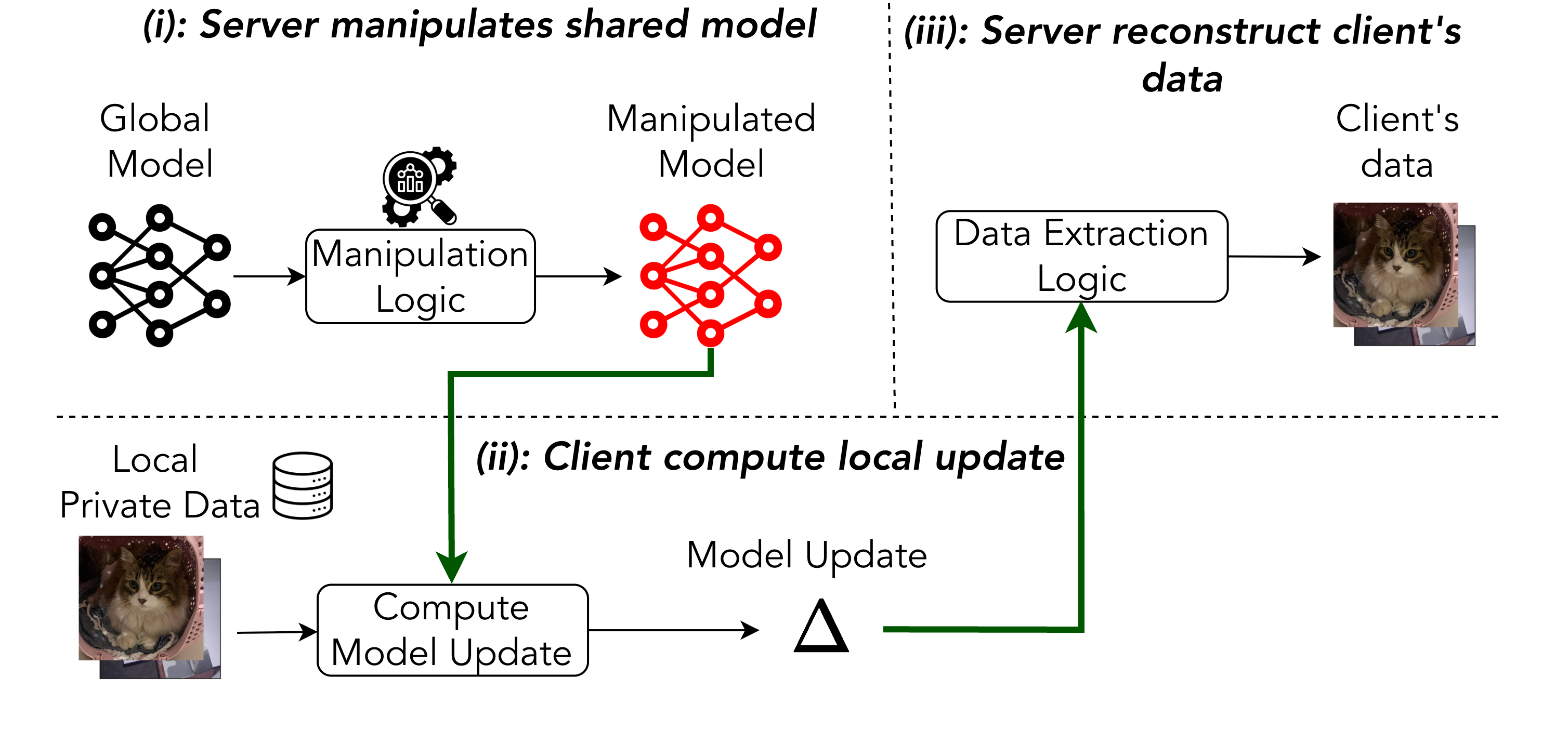

While Federated Learning promises privacy by keeping data decentralized, the model updates that clients exchange remain vulnerable to reconstruction attacks. This vulnerability is particularly acute with active Gradient Inversion Attacks (GIAs), where a malicious server manipulates the learning process to infer client data; recent claims suggest these attacks can evade detection. In ‘On the Detectability of Active Gradient Inversion Attacks in Federated Learning’, the authors demonstrate that these purportedly stealthy GIAs can be detected through lightweight client-side analysis of model weights and training dynamics. Can these findings pave the way for robust, practical defenses against increasingly sophisticated privacy threats in collaborative machine learning?

The Fragility of Shared Knowledge

Federated Learning (FL) enables collaborative machine learning while preserving data privacy: models are trained across decentralized devices, and clients share model updates instead of their raw data. However, this distributed paradigm introduces new security vulnerabilities. Gradient Inversion Attacks (GIAs) exploit the shared model updates (gradients) to reconstruct sensitive training data, potentially compromising user privacy. Existing privacy mechanisms often prove insufficient against sophisticated GIAs.
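To see why shared gradients leak data, consider the simplest possible case. The sketch below assumes a single fully connected layer, a squared-error loss, and a batch of one sample (illustrative choices, not the paper's setup). It shows that a server receiving the FedSGD gradient can recover the client's input exactly by dividing one row of the weight gradient by the matching bias gradient.

```python
import numpy as np

rng = np.random.default_rng(0)
d_in, d_out = 6, 3
W = rng.normal(size=(d_out, d_in))   # layer weights held by the server
b = rng.normal(size=d_out)           # layer biases
x = rng.random(d_in)                 # the client's private input
target = rng.normal(size=d_out)      # the client's private label/target

# Client side: forward pass with loss L = 0.5 * ||W x + b - target||^2
y = W @ x + b
dL_dy = y - target

# Gradients the client shares in FedSGD
grad_W = np.outer(dL_dy, x)          # dL/dW = (dL/dy) x^T: every row is a scaled copy of x
grad_b = dL_dy                       # dL/db = dL/dy: the scale of each row

# Server side: any row with a nonzero bias gradient reveals x exactly
i = int(np.argmax(np.abs(grad_b)))
x_rec = grad_W[i] / grad_b[i]
```

With a batch of several samples, these per-sample copies are summed together, which is why active attacks try to manipulate the model so that individual samples can be isolated again.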

Consequently, the development of robust defenses against GIAs is crucial for the practical deployment of FL. Current research focuses on techniques like gradient masking, secure aggregation, and the introduction of carefully calibrated noise to obfuscate sensitive information within the shared updates. However, a constant arms race exists between attack and defense strategies, demanding continuous innovation in privacy-preserving machine learning.
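Noise injection is commonly implemented by clipping each update to a bounded norm and then adding Gaussian noise. A minimal sketch, assuming NumPy and hypothetical parameter names `clip_norm` and `noise_multiplier`; turning these values into a formal privacy guarantee requires a separate privacy accountant, which is not shown.

```python
import numpy as np

def clip_and_noise(update, clip_norm, noise_multiplier, rng):
    """Clip an update to L2 norm <= clip_norm, then add Gaussian noise.

    The noise standard deviation is noise_multiplier * clip_norm, following
    the Gaussian-mechanism pattern used in DP-SGD.
    """
    norm = np.linalg.norm(update)
    scale = min(1.0, clip_norm / (norm + 1e-12))   # shrink only if norm exceeds clip_norm
    clipped = update * scale
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=update.shape)
    return clipped + noise

rng = np.random.default_rng(0)
update = np.array([3.0, 4.0])                      # L2 norm 5
clipped_only = clip_and_noise(update, 1.0, 0.0, rng)
noised = clip_and_noise(update, 1.0, 1.1, rng)
```

Clipping bounds how much any single client can contribute, so the noise scale can be set relative to `clip_norm` rather than to the unknown size of raw updates.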

The pursuit of perfect privacy in a collaborative system is akin to chasing a ghost – the more we attempt to capture it, the more elusive it becomes, and the value lies not in absolute concealment, but in the thoughtful reduction of what is exposed.

Attacks: Passive Observation vs. Active Manipulation

Gradient Inversion Attacks (GIAs) pose a significant threat to federated learning. Passive GIAs only observe the gradients that clients share, and they serve as a baseline for assessing vulnerability. Active GIAs go further: a malicious server manipulates the global model to make data reconstruction easier. Techniques such as the Binning Property Attack and the Paired Weight Attack show that even subtle model alterations can severely compromise data privacy.
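The binning idea behind such active attacks can be sketched in a few lines. This is a hypothetical illustration, not the exact attack evaluated in the paper. It assumes a malicious first layer whose rows all share one weight vector `direction`, biases set from increasing `thresholds`, and a simplified loss (the sum of the layer's ReLU outputs) so that every active neuron receives a gradient of 1. Differencing the summed gradients of neighbouring neurons then isolates any bin that contains exactly one sample.

```python
import numpy as np

def binning_layer(thresholds, direction):
    """Hypothetical malicious layer: every neuron shares one weight vector,
    and neuron k fires only when direction @ x > thresholds[k]."""
    W = np.tile(direction, (len(thresholds), 1))
    b = -np.asarray(thresholds, dtype=float)
    return W, b

def batch_gradients(X, W, b):
    """Gradients of L = sum of ReLU(W x + b) over the batch and all neurons.
    With this simplified loss, dL/dh = 1 for every active neuron."""
    active = (X @ W.T + b) > 0                 # (B, K): which neurons fire per sample
    grad_W = active.T.astype(float) @ X        # (K, d): sum of x_i over samples firing neuron k
    grad_b = active.sum(axis=0).astype(float)  # (K,): number of samples firing neuron k
    return grad_W, grad_b

def recover(grad_W, grad_b):
    """Difference neighbouring neurons (thresholds must be increasing) to get
    per-bin sums; a bin holding exactly one sample yields that sample."""
    recovered = []
    K = len(grad_b)
    for k in range(K):
        if k + 1 < K:
            gw, gb = grad_W[k] - grad_W[k + 1], grad_b[k] - grad_b[k + 1]
        else:
            gw, gb = grad_W[k], grad_b[k]
        if np.isclose(gb, 1.0):                # exactly one sample in this bin
            recovered.append(gw / gb)
    return recovered

# Toy batch of four samples whose means fall into different bins.
rng = np.random.default_rng(0)
X = rng.random((4, 8)) * 0.05 + np.array([[0.12], [0.32], [0.52], [0.72]])
W, b = binning_layer(np.linspace(0.0, 1.0, 11), np.ones(8) / 8)
grad_W, grad_b = batch_gradients(X, W, b)
rec = recover(grad_W, grad_b)
```

Every row of `W` is identical here: the trap works precisely by making neurons redundant, which leaves a visible signature in the model weights.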

Successful active attacks don’t merely extract information; they fundamentally alter the model's internal representations. Specifically, an active GIA forces neurons to converge toward similar weights, eliminating the diversity inherent in a well-trained neural network. This homogenization diminishes the model’s capacity for complex pattern recognition.
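One way a client might quantify this homogenization is to count how many pairs of neurons in a layer point in nearly the same direction. The statistic and the 0.99 cosine cut-off below are illustrative assumptions, not the detector from the paper.

```python
import numpy as np

def duplicate_neuron_fraction(W, cos_threshold=0.99):
    """Fraction of neuron pairs (rows of W) with cosine similarity above cos_threshold."""
    norms = np.linalg.norm(W, axis=1, keepdims=True)
    unit = W / np.clip(norms, 1e-12, None)
    cos = unit @ unit.T
    upper = np.triu_indices(W.shape[0], k=1)   # each unordered pair counted once
    return float(np.mean(cos[upper] > cos_threshold))

rng = np.random.default_rng(0)
benign = rng.normal(size=(64, 128))            # diverse, independently drawn neurons
shared = rng.normal(size=128)
homogenized = np.tile(shared, (64, 1)) + 0.01 * rng.normal(size=(64, 128))

print(duplicate_neuron_fraction(benign))       # close to 0
print(duplicate_neuron_fraction(homogenized))  # close to 1
```

A client can compute such a statistic on the received global model before training, at the cost of one matrix product per inspected layer.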

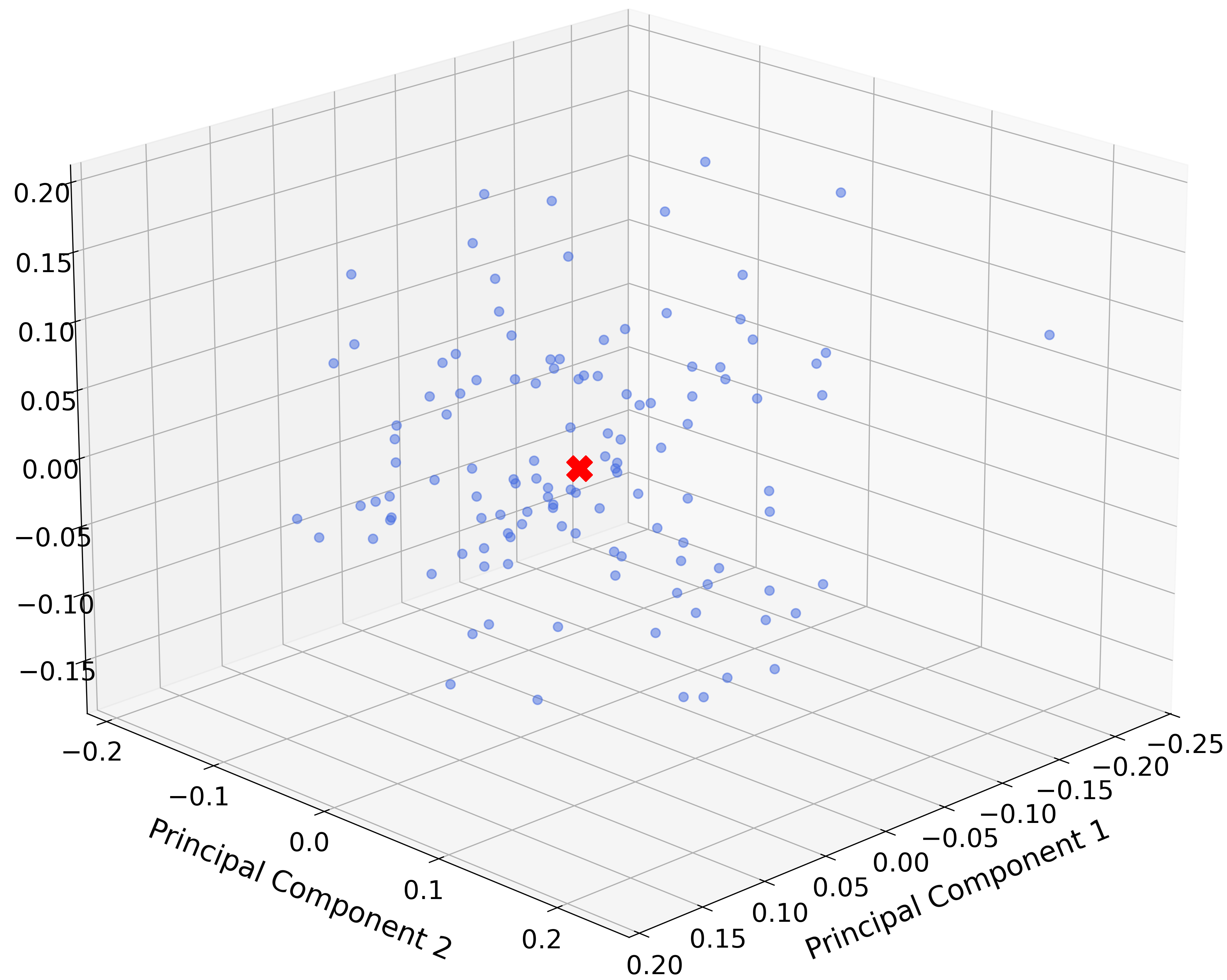

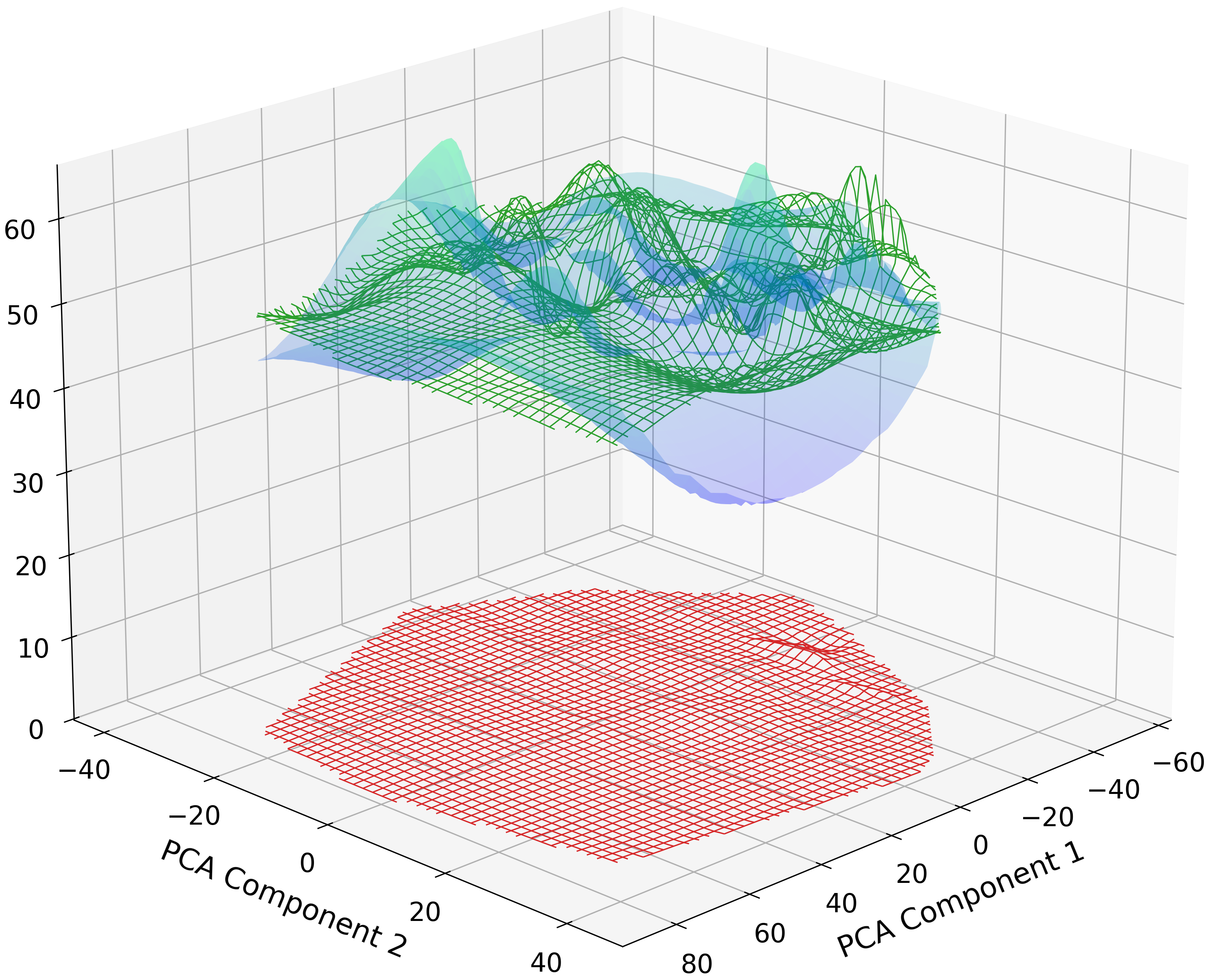

Detecting the Shadow of Manipulation

Gradient-based anomaly detection offers a promising way to identify GIAs during training. Loss Function Analysis and Gradient Characteristic Analysis look for anomalous behavior in the loss and in the gradients that a received model produces. Model Divergence Analysis complements these methods by comparing successive model updates and flagging abrupt shifts in parameter space.
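Model Divergence Analysis can be sketched as a running comparison of successive global models. The sketch below assumes the client keeps a short history of per-round relative update norms and flags a round whose divergence is a statistical outlier; the z-score rule and the `z_threshold` and `min_history` values are hypothetical choices, not the paper's detector.

```python
import numpy as np

def relative_divergence(prev_params, new_params):
    """||w_t - w_{t-1}|| / ||w_{t-1}|| over all flattened parameter arrays."""
    prev = np.concatenate([p.ravel() for p in prev_params])
    new = np.concatenate([p.ravel() for p in new_params])
    return float(np.linalg.norm(new - prev) / (np.linalg.norm(prev) + 1e-12))

def is_anomalous(history, value, z_threshold=4.0, min_history=5):
    """Flag value if it exceeds the mean of history by more than z_threshold std devs."""
    if len(history) < min_history:
        return False
    mu, sigma = float(np.mean(history)), float(np.std(history))
    return (value - mu) / (sigma + 1e-12) > z_threshold

# Simulated rounds: small benign drifts of the global model.
rng = np.random.default_rng(0)
params = [rng.normal(size=(16, 8)), rng.normal(size=16)]
history = []
for _ in range(10):
    new = [p + 0.01 * rng.normal(size=p.shape) for p in params]
    history.append(relative_divergence(params, new))
    params = new

# Manipulated round: every row of the first layer is overwritten with one vector.
manipulated = [np.tile(rng.normal(size=8), (16, 1)), params[1]]
score = relative_divergence(params, manipulated)
flagged = is_anomalous(history, score)
```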

Together, monitoring the loss, gradient characteristics, and model divergence gives clients the basis for a robust defense against GIAs.

The Evolving Threat: Beyond Simple Inversion

Advanced active GIAs such as SEER and Geminio represent a significant escalation in federated learning scenarios. They pursue targeted data reconstruction with strategies that go well beyond basic gradient-matching optimization, relying on secret disaggregation (SEER) and natural language queries (Geminio) to refine the reconstruction process while bypassing traditional defenses.

The evaluation also reveals limitations in commonly used stealthiness metrics. D-SNR, in particular, does not reliably indicate whether these attacks can be detected, which calls for improved evaluation criteria and detection mechanisms.

Towards Resilience: Adapting to the Unseen

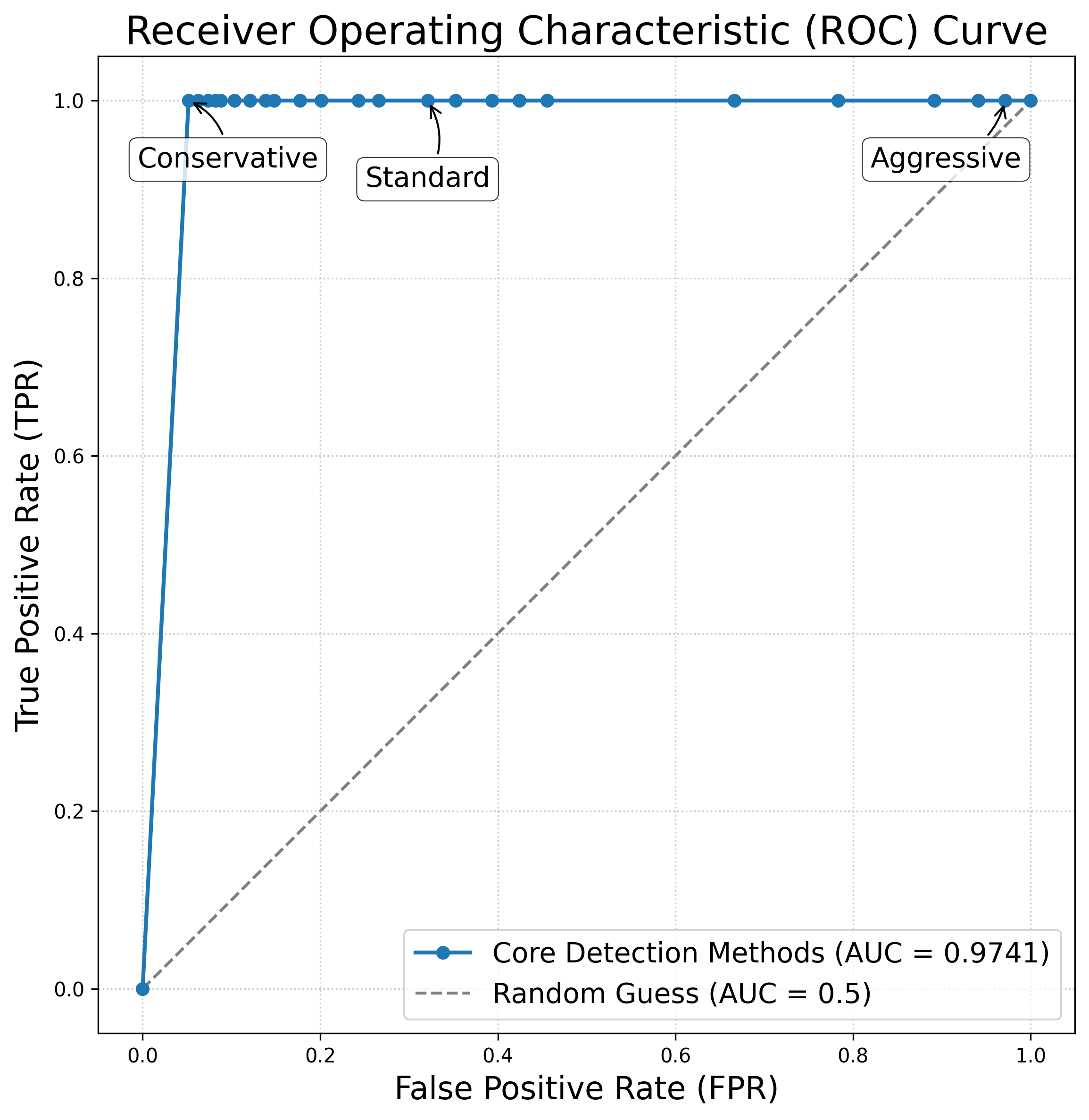

The paper's client-side detection method achieves an Area Under the Curve (AUC) of 0.9741 against these advanced attacks by analyzing local model updates for anomalies. It balances detection accuracy against false alarms, with a False Positive Rate (FPR) of 0.037 and a True Positive Rate (TPR) approaching 1.0.
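For readers less familiar with these metrics, the sketch below shows how AUC, TPR, and FPR are computed from per-round anomaly scores. The scores are made-up toy values, not results from the paper; only the metric definitions are standard.

```python
import numpy as np

def auc(benign_scores, attack_scores):
    """Probability that a random attack round scores higher than a random benign
    round (ties count half); this equals the area under the ROC curve."""
    a = np.asarray(attack_scores, dtype=float)[:, None]
    b = np.asarray(benign_scores, dtype=float)[None, :]
    return float(np.mean((a > b) + 0.5 * (a == b)))

def tpr_fpr(benign_scores, attack_scores, threshold):
    """Rates obtained when every score >= threshold is flagged as an attack."""
    tpr = float(np.mean(np.asarray(attack_scores) >= threshold))
    fpr = float(np.mean(np.asarray(benign_scores) >= threshold))
    return tpr, fpr

# Toy anomaly scores for three benign and three attacked rounds.
benign = [0.10, 0.20, 0.30]
attack = [0.80, 0.90, 0.25]
print(auc(benign, attack))           # 8 of 9 attack/benign pairs ranked correctly
print(tpr_fpr(benign, attack, 0.5))  # (TPR, FPR) at threshold 0.5
```

AUC summarizes ranking quality across all thresholds, while a reported TPR/FPR pair describes a single operating point on the ROC curve.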

Mitigating these vulnerabilities requires a multi-faceted approach. FedSGD and FedAvg are standard aggregation protocols rather than robust aggregators, so understanding how each affects attack success and detectability is a natural starting point, alongside robust aggregation algorithms that lessen the influence of malicious updates. Integrating differential privacy and secure multi-party computation offers stronger data privacy. Developing adaptive defense mechanisms remains crucial for long-term security. The architecture of trust is built not of impenetrable walls, but of continuous, subtle adjustments.
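Secure aggregation, one application of secure multi-party computation, lets the server learn only the sum of client updates. Its core idea can be sketched with pairwise masks that cancel in the sum. This is a toy under strong assumptions: a single shared random generator stands in for the per-pair key agreement of a real protocol, and client dropouts are ignored.

```python
import numpy as np

def pairwise_masks(n_clients, dim, seed=0):
    """For each pair i < j, client i adds a random mask and client j subtracts it,
    so all masks cancel when the server sums the masked updates."""
    rng = np.random.default_rng(seed)  # stand-in for per-pair shared secrets
    masks = np.zeros((n_clients, dim))
    for i in range(n_clients):
        for j in range(i + 1, n_clients):
            m = rng.normal(size=dim)
            masks[i] += m
            masks[j] -= m
    return masks

rng = np.random.default_rng(1)
updates = rng.normal(size=(4, 5))           # one private update per client
masked = updates + pairwise_masks(4, 5)     # what the server actually receives
aggregate = masked.sum(axis=0)              # masks cancel, leaving the true sum
```

Each masked update looks like noise on its own, yet the aggregate is exact.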

The pursuit of defensive strategies within Federated Learning often introduces unnecessary complexity. This work, focused on detecting active Gradient Inversion Attacks, underscores a fundamental principle: effective security doesn’t demand elaborate constructions; it benefits instead from observing inherent system behavior. As Brian Kernighan observed, “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.” By the same logic, meeting sophisticated attacks with equally intricate defenses yields fragile systems. The demonstrated client-side detection relies on identifying statistical anomalies, a straightforward approach that offers practical resilience even against stealthy attacks and a testament to the power of simplicity.

The Horizon Beckons

The finding that active Gradient Inversion Attacks, despite their algorithmic sophistication, are susceptible to client-side detection is not the final word but a necessary correction. The field has labored under the assumption that stealth equates to invulnerability; this work suggests that complexity in the attacker’s design generates detectable signatures. Further inquiry must address the limits of this detectability: how small can a malicious perturbation be before its anomalies are lost in the inherent noise of federated training? The pursuit of perfect stealth is, predictably, a wasteful expenditure of effort, with diminishing returns on ever-increasing computational cost.

A critical next step involves formalizing the trade-off between attack efficacy and detectability. Current metrics prioritize reconstruction accuracy without adequately quantifying the risk of exposure. A more nuanced evaluation framework is required, one that incorporates the probability of detection alongside the quality of the inferred data. The field should also prioritize defenses that are both effective and computationally efficient, rather than chasing increasingly elaborate, and ultimately brittle, countermeasures.
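As a hypothetical illustration of such a framework, an attack's expected payoff can discount reconstruction quality by the probability of being detected. Both the scoring rule (detection in any round voids the attack) and the numbers below are assumptions for illustration, not metrics from the paper.

```python
def detection_aware_score(reconstruction_quality, p_detect, rounds=1):
    """Expected quality the attacker keeps if detection in any of `rounds`
    independent rounds voids the attack (a simplifying assumption)."""
    p_undetected = (1.0 - p_detect) ** rounds
    return reconstruction_quality * p_undetected

# A high-fidelity but conspicuous attack versus a weaker, quieter one.
loud = detection_aware_score(0.9, p_detect=0.95)
quiet = detection_aware_score(0.6, p_detect=0.10)
print(loud, quiet)  # the quieter attack scores higher under this rule
```

Under a rule like this, a detector that is cheap to run in every round compounds quickly: the attacker's chance of staying hidden shrinks geometrically with the number of rounds.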

Finally, the scope of this analysis should expand beyond the specific attacks examined. While Gradient Inversion Attacks are demonstrably detectable, the underlying principle (model manipulation generates detectable statistical anomalies) likely applies to other adversarial strategies. The goal is not merely to defend against one threat, but to establish a general principle: any attempt to subvert a federated learning system will leave a trace, however faint. Simplicity in defense is not a weakness, but a strength.

Original article: https://arxiv.org/pdf/2511.10502.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-14 17:41